Deep Blind Hyperspectral Image Fusion

Wu Wang¹, Weihong Zeng¹, Yue Huang¹, Xinghao Ding¹∗, John Paisley²

¹ Fujian Key Laboratory of Sensing and Computing for Smart City, School of Information Science and Engineering, Xiamen University, China

² Department of Electrical Engineering, Columbia University, New York, NY, USA

wangwu@stu.xmu.edu.cn, zengwh@stu.xmu.edu.cn

huangyue05@gmail.com, dxh@xmu.edu.cn, jpaisley@columbia.edu

Abstract

Hyperspectral image fusion (HIF) reconstructs high spatial resolution hyperspectral images from low spatial resolution hyperspectral images and high spatial resolution multispectral images. Previous works usually assume that the linear mapping between the point spread functions of the hyperspectral camera and the spectral response functions of the conventional camera is known. This is unrealistic in many scenarios. We propose a method for the blind HIF problem based on deep learning, where the estimation of the observation model and the fusion process are optimized iteratively and alternately during the super-resolution reconstruction. In addition, the proposed framework enforces simultaneous spatial and spectral accuracy. Experimental results on three public datasets demonstrate that the proposed algorithm outperforms existing blind and non-blind methods.

1. Introduction

Hyperspectral image (HSI) analysis has a wide range of applications for object classification and recognition [13, 9, 33, 17], segmentation [22], tracking [23, 24] and environmental monitoring [18] in both computer vision and remote sensing. While HSI facilitates these tasks through information across a large number of spectra, these many additional dimensions of information mean that the potential spatial resolution of HSI systems is severely limited compared with RGB cameras. HIF addresses this challenge by using the jointly measured high resolution multispectral image (HR-MSI), often simply RGB, to improve the low resolution HSI (LR-HSI) by approximating its high resolution version (HR-HSI).

Generally, most state-of-the art methods formulate the

∗

Corresponding author

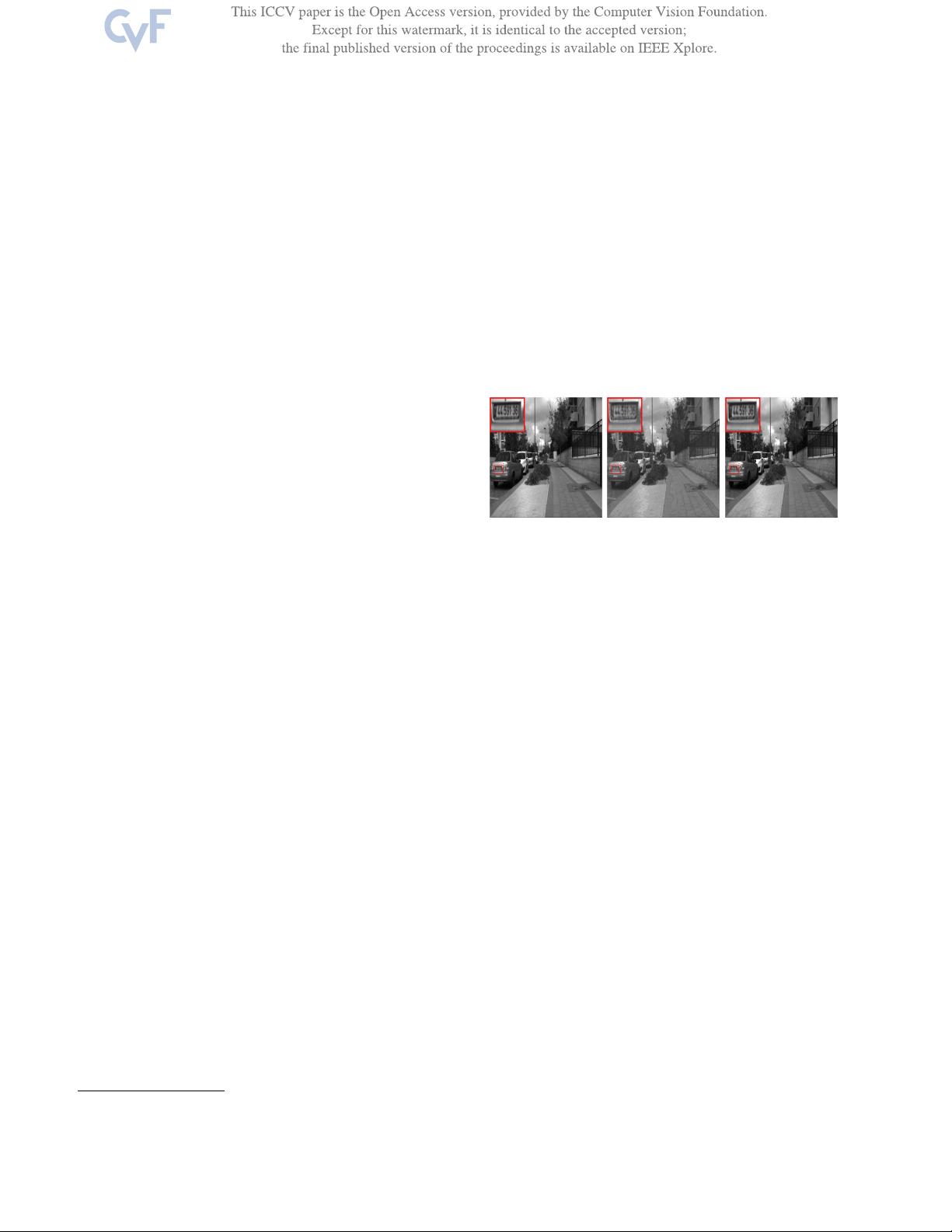

(a) gt (b) HySure (c) Ours

Figure 1: The 31st band of a reconstructed high resolu-

tion hyperspectral image (HR-HSI) with unknown spectral

response function. (a) ground-truth HR-HSI, (b) result of

HySure [20], (c) our result.

observation model through the linear functions [28, 7, 20]

$$Y = XBS, \tag{1}$$

$$Z = RX, \tag{2}$$

where X is the HR-HSI, Y is the LR-HSI and Z is the HR-MSI. The linear operators B and S perform the appropriate transformations to map X to the measured values; B represents a convolution between the point spread function of the sensor and the HR-HSI bands, S is a downsampling operation, and R is the spectral response function of the multispectral imaging sensor. The spectral response functions and point spread functions are often assumed to be at least partly known. A common way to learn X is through optimizing an objective function of the form

$$\min_{X} \; \|Y - XBS\|_F^2 + \lambda_1 \|Z - RX\|_F^2 + \lambda_2 \phi(X), \tag{3}$$

where the first and second terms enforce agreement with the data and the third term is a regularizer [12, 15, 6, 7].
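To make the notation concrete, the following is a minimal NumPy sketch of the observation model and of the objective above on a toy problem. It is an illustration under stated assumptions, not the method proposed in this paper: X is stored as a bands-by-pixels matrix, B is a hypothetical separable circular blur, S keeps every s-th pixel in each direction, R is a random row-normalized response, and the regularizer `phi` is a placeholder squared Frobenius norm. All helper names (`blur_matrix`, `downsample_matrix`, `observe`, `objective`) are illustrative.

```python
import numpy as np


def blur_matrix(h, w):
    """Hypothetical N x N blur operator B (N = h*w): separable circular [1/4, 1/2, 1/4] kernel."""
    def circ(n):
        eye = np.eye(n)
        return 0.5 * eye + 0.25 * np.roll(eye, 1, axis=1) + 0.25 * np.roll(eye, -1, axis=1)
    return np.kron(circ(h), circ(w))


def downsample_matrix(h, w, s):
    """N x n selection operator S that keeps every s-th pixel along each spatial axis."""
    idx = np.arange(h * w).reshape(h, w)[::s, ::s].ravel()
    return np.eye(h * w)[:, idx]


def observe(X, B, S, R):
    """Forward model: Y = X B S (LR-HSI) and Z = R X (HR-MSI)."""
    return X @ B @ S, R @ X


def objective(X, Y, Z, B, S, R, lam1, lam2, phi):
    """Two data-fidelity terms plus a weighted regularizer phi(X)."""
    data_hsi = np.linalg.norm(Y - X @ B @ S, "fro") ** 2
    data_msi = np.linalg.norm(Z - R @ X, "fro") ** 2
    return data_hsi + lam1 * data_msi + lam2 * phi(X)


# Toy sizes (assumed): 4 x 4 image, downsampling factor 2, 5 HSI bands, 3 MSI bands.
rng = np.random.default_rng(0)
h, w, s, n_hsi, n_msi = 4, 4, 2, 5, 3

X_true = rng.random((n_hsi, h * w))      # HR-HSI, one flattened band per row
B = blur_matrix(h, w)
S = downsample_matrix(h, w, s)
R = rng.random((n_msi, n_hsi))
R /= R.sum(axis=1, keepdims=True)        # each response row sums to one

Y, Z = observe(X_true, B, S, R)


def phi(X):
    """Placeholder regularizer (assumption): squared Frobenius norm."""
    return np.sum(X ** 2)


print(Y.shape, Z.shape)
print(objective(X_true, Y, Z, B, S, R, lam1=1.0, lam2=0.0, phi=phi))
```

With `lam2=0`, the objective vanishes at the true X because both data terms are satisfied exactly. In the non-blind setting B, S and R are given, as in this sketch; in the blind setting they are not available and must be estimated.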

However, this assumed relationship between X, Y and Z does not always hold, and because the information available about the sensor is incomplete, the true relationship is unknowable [26]. In other words, this non-blind fusion is often only an approximation, and
