[Figure 5 plot: FID-50K (y-axis, 20 to 100) versus training steps (x-axis, 100K to 400K). Curves: XL/2 In-Context, XL/2 Cross-Attention, XL/2 adaLN, XL/2 adaLN-Zero.]

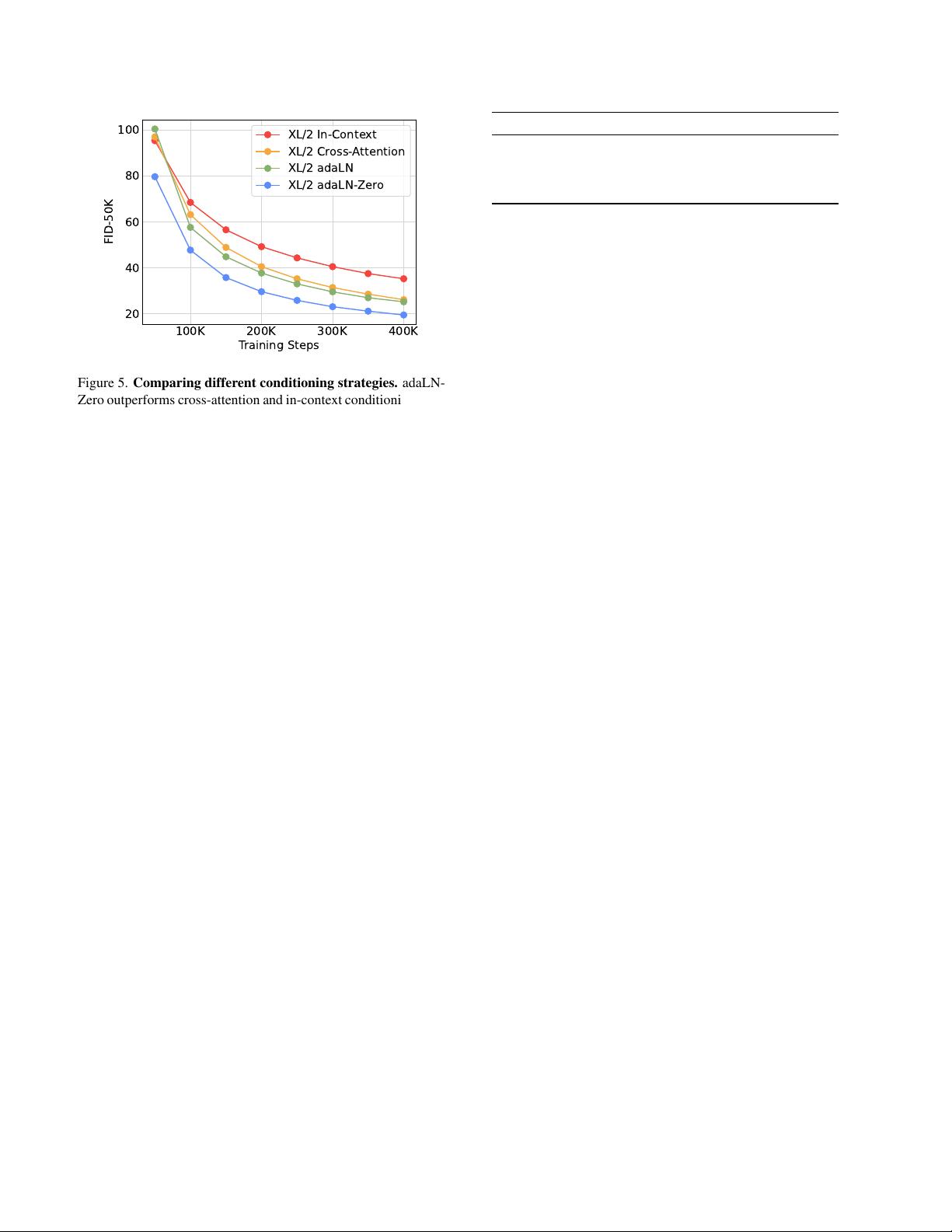

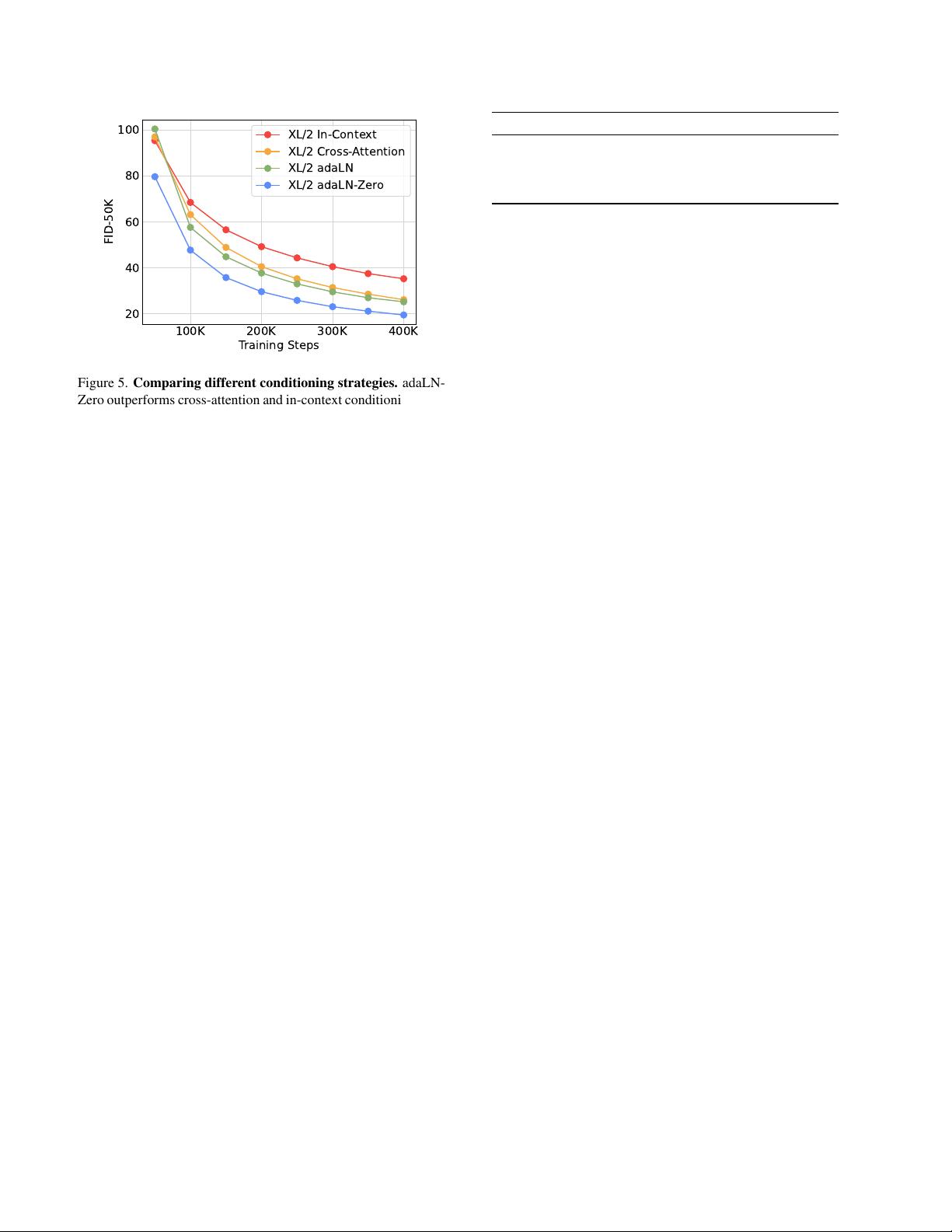

Figure 5. Comparing different conditioning strategies. adaLN-Zero outperforms cross-attention and in-context conditioning at all stages of training.

– Cross-attention block. We concatenate the embeddings of t and c into a length-two sequence, separate from the image token sequence. The transformer block is modified to include an additional multi-head cross-attention layer following the multi-head self-attention block, similar to the original design from Vaswani et al. [60], and also similar to the one used by LDM for conditioning on class labels. Cross-attention adds the most Gflops to the model, roughly a 15% overhead.

– Adaptive layer norm (adaLN) block. Following the widespread usage of adaptive normalization layers [40] in GANs [2, 28] and diffusion models with U-Net backbones [9], we explore replacing standard layer norm layers in transformer blocks with adaptive layer norm (adaLN). Rather than directly learn dimension-wise scale and shift parameters γ and β, we regress them from the sum of the embedding vectors of t and c. Of the three block designs we explore, adaLN adds the least Gflops and is thus the most compute-efficient. It is also the only conditioning mechanism that is restricted to apply the same function to all tokens.

– adaLN-Zero block. Prior work on ResNets has found that initializing each residual block as the identity function is beneficial. For example, Goyal et al. found that zero-initializing the final batch norm scale factor γ in each block accelerates large-scale training in the supervised learning setting [13]. Diffusion U-Net models use a similar initialization strategy, zero-initializing the final convolutional layer in each block prior to any residual connections. We explore a modification of the adaLN DiT block which does the same. In addition to regressing γ and β, we also regress dimension-wise scaling parameters α that are applied immediately prior to any residual connections within the DiT block. We initialize the MLP to output the zero-vector for all α; this initializes the full DiT block as the identity function. As with the vanilla adaLN block, adaLN-Zero adds negligible Gflops to the model.

| Model | Layers N | Hidden size d | Heads | Gflops (I=32, p=4) |
|--------|----|------|----|------|
| DiT-S  | 12 | 384  | 6  | 1.4  |
| DiT-B  | 12 | 768  | 12 | 5.6  |
| DiT-L  | 24 | 1024 | 16 | 19.7 |
| DiT-XL | 28 | 1152 | 16 | 29.1 |

Table 1. Details of DiT models. We follow ViT [10] model configurations for the Small (S), Base (B) and Large (L) variants; we also introduce an XLarge (XL) config as our largest model.
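The cross-attention block described above can be sketched in a few lines of NumPy. This is only an illustrative sketch under simplifying assumptions: it uses a single attention head instead of multi-head attention, omits layer norm, and the names `cross_attend`, `w_q`, `w_k` and `w_v` are hypothetical. The point it shows is that the image tokens supply the queries, while the keys and values come from the length-two sequence built from the embeddings of t and c.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attend(tokens, t_emb, c_emb, w_q, w_k, w_v):
    """Single-head cross-attention (a simplification of the multi-head layer).

    tokens: (T, d) image tokens; t_emb, c_emb: (d,) conditioning embeddings.
    Returns the updated tokens (T, d) and the attention weights (T, 2).
    """
    cond = np.stack([t_emb, c_emb])                  # (2, d) length-two sequence
    q = tokens @ w_q                                 # queries from image tokens
    k = cond @ w_k                                   # keys from [t, c]
    v = cond @ w_v                                   # values from [t, c]
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))   # (T, 2)
    return tokens + attn @ v, attn                   # residual connection

rng = np.random.default_rng(0)
T, d = 16, 8                                         # toy sizes, not from the paper
tokens = rng.normal(size=(T, d))
t_emb, c_emb = rng.normal(size=d), rng.normal(size=d)
w_q, w_k, w_v = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))
out, attn = cross_attend(tokens, t_emb, c_emb, w_q, w_k, w_v)
print(out.shape, attn.shape)
```

Each image token attends over only two conditioning entries, so the attention matrix has shape (T, 2) regardless of how many image tokens there are.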

We include the in-context, cross-attention, adaptive layer norm and adaLN-Zero blocks in the DiT design space.
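To make the adaLN and adaLN-Zero descriptions concrete, here is a minimal NumPy sketch of one residual sub-layer. Several details are assumptions of the sketch rather than statements from the text: the regression is a single linear layer (`w`, `b`) instead of an MLP, the scale is applied as `1 + gamma`, and the wrapped sub-layer `f` is a toy stand-in for attention or the feedforward network. All function and variable names are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    """Layer norm over the feature axis, without learned scale or shift."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def adaln_zero_sublayer(x, cond, w, b, f):
    """One adaLN-Zero residual sub-layer.

    x: (T, d) tokens; cond: (d,) sum of the t and c embeddings.
    w: (d, 3d), b: (3d,) regress gamma, beta and alpha from cond.
    f: the wrapped sub-layer, any map from (T, d) to (T, d).
    """
    gamma, beta, alpha = np.split(cond @ w + b, 3)
    # adaLN: the same scale and shift are broadcast to every token.
    h = layer_norm(x) * (1.0 + gamma) + beta   # "1 + gamma" is an assumption
    # adaLN-Zero: alpha gates the sub-layer output just before the residual add.
    return x + alpha * f(h)

rng = np.random.default_rng(0)
T, d = 16, 8                                   # toy sizes, not from the paper
x = rng.normal(size=(T, d))
cond = rng.normal(size=d)
w = 0.02 * rng.normal(size=(d, 3 * d))
b = np.zeros(3 * d)
w[:, 2 * d:] = 0.0                             # zero-init the alpha outputs
w_f = rng.normal(size=(d, d))
f = lambda h: np.tanh(h @ w_f)                 # toy stand-in for attention/MLP

y_init = adaln_zero_sublayer(x, cond, w, b, f)
print(np.array_equal(y_init, x))               # the sub-layer starts as the identity
```

Because α is exactly zero at initialization, the sub-layer output is multiplied by zero and the residual path passes `x` through unchanged, which is the identity initialization the text describes.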

Model size. We apply a sequence of N DiT blocks, each operating at the hidden dimension size d. Following ViT, we use standard transformer configs that jointly scale N, d and attention heads [10, 63]. Specifically, we use four configs: DiT-S, DiT-B, DiT-L and DiT-XL. They cover a wide range of model sizes and flop allocations, from 0.3 to 118.6 Gflops, allowing us to gauge scaling performance. Table 1 gives details of the configs.

We add B, S, L and XL configs to the DiT design space.
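The four configs in Table 1 can be written down as plain data. The sketch below only restates the table values; the `DiTConfig` class and the derived `head_dim` property are hypothetical names introduced for illustration, and the per-head width is simple arithmetic (d divided by the number of heads), not a value reported in the table.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DiTConfig:
    layers: int        # N in Table 1
    hidden_size: int   # d in Table 1
    heads: int

    @property
    def head_dim(self) -> int:
        """Width of each attention head, derived as d / heads."""
        assert self.hidden_size % self.heads == 0
        return self.hidden_size // self.heads

# Values copied from Table 1.
DIT_CONFIGS = {
    "DiT-S": DiTConfig(layers=12, hidden_size=384, heads=6),
    "DiT-B": DiTConfig(layers=12, hidden_size=768, heads=12),
    "DiT-L": DiTConfig(layers=24, hidden_size=1024, heads=16),
    "DiT-XL": DiTConfig(layers=28, hidden_size=1152, heads=16),
}

for name, cfg in DIT_CONFIGS.items():
    print(name, cfg.layers, cfg.hidden_size, cfg.heads, cfg.head_dim)
```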

Transformer decoder. After the final DiT block, we need to decode our sequence of image tokens into an output noise prediction and an output diagonal covariance prediction. Both of these outputs have shape equal to the original spatial input. We use a standard linear decoder to do this; we apply the final layer norm (adaptive if using adaLN) and linearly decode each token into a p×p×2C tensor, where C is the number of channels in the spatial input to DiT. Finally, we rearrange the decoded tokens into their original spatial layout to get the predicted noise and covariance.
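The decoding step can be sketched as a layer norm, one matrix multiply, and a reshape. This is a hedged illustration, not the reference implementation: the layer norm here is the plain (non-adaptive) variant, the within-token layout (patch row, patch column, channel) and the order of the noise and covariance channels are assumptions, and the names `unpatchify` and `decode` are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    """Plain layer norm over the feature axis (non-adaptive variant)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def unpatchify(decoded, p, C, grid):
    """Rearrange (T, p*p*2C) decoded tokens into (2C, I, I), with I = grid * p."""
    assert decoded.shape == (grid * grid, p * p * 2 * C)
    x = decoded.reshape(grid, grid, p, p, 2 * C)  # (row, col, patch_row, patch_col, ch)
    x = x.transpose(4, 0, 2, 1, 3)                # (ch, row, patch_row, col, patch_col)
    return x.reshape(2 * C, grid * p, grid * p)

def decode(tokens, w, b, p, C, grid):
    """Final layer norm, linear decode, then spatial rearrangement."""
    decoded = layer_norm(tokens) @ w + b          # (T, p*p*2C)
    full = unpatchify(decoded, p, C, grid)
    return full[:C], full[C:]                     # assumed order: noise, covariance

rng = np.random.default_rng(0)
p, C, grid, d = 2, 4, 16, 32                      # toy sizes chosen for the demo
tokens = rng.normal(size=(grid * grid, d))
w = 0.02 * rng.normal(size=(d, p * p * 2 * C))
b = np.zeros(p * p * 2 * C)
noise, cov = decode(tokens, w, b, p, C, grid)
print(noise.shape, cov.shape)
```

Both outputs have C channels and the same spatial size as the input (grid · p on each side), matching the statement that each output has the shape of the original spatial input.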

The complete DiT design space we explore is patch size, transformer block architecture and model size.

4. Experimental Setup

We explore the DiT design space and study the scaling properties of our model class. Our models are named according to their configs and latent patch sizes p; for example, DiT-XL/2 refers to the XLarge config and p = 2.
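As a small illustration of the naming scheme, the sketch below splits a model name into its config and patch size, and computes how many tokens an I × I latent yields when cut into p × p patches, namely (I/p)². The helper names `parse_dit_name` and `num_tokens` are hypothetical, and I = 32 is the latent size quoted in the Table 1 header.

```python
def parse_dit_name(name):
    """Split a name such as 'DiT-XL/2' into ('DiT-XL', 2)."""
    config, _, patch = name.partition("/")
    if not config.startswith("DiT-") or not patch.isdigit():
        raise ValueError(f"not a DiT model name: {name!r}")
    return config, int(patch)

def num_tokens(latent_size, patch_size):
    """Number of tokens when an I x I latent is cut into p x p patches."""
    if latent_size % patch_size != 0:
        raise ValueError("patch size must divide the latent size")
    return (latent_size // patch_size) ** 2

config, p = parse_dit_name("DiT-XL/2")
print(config, p, num_tokens(32, p))
```

Halving the patch size quadruples the token count, which is why the patch size is part of the model name alongside the config.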

Training. We train class-conditional latent DiT models at 256 × 256 and 512 × 512 image resolution on the ImageNet dataset [31], a highly competitive generative modeling benchmark. We initialize the final linear layer with zeros and otherwise use standard weight initialization techniques from ViT. We train all models with AdamW [29, 33].
