Chapter 1. Introduction to Deep Learning

"By far the greatest danger of Artificial Intelligence is that people conclude too early that they understand it."

--Eliezer Yudkowsky

Have you ever wondered why it is so difficult to beat a computer at chess, even for the best players of the game? How is Facebook able to recognize your face among hundreds of millions of photos? How can your mobile phone recognize your voice and direct the call to the correct person out of hundreds of listed contacts?

The primary goal of this book is to address many of these questions and to provide detailed answers. The book can be used for a wide range of purposes by a variety of readers; however, we wrote it with two main target audiences in mind. The first is undergraduate or graduate university students learning about deep learning and Artificial Intelligence. The second is software engineers who already have some knowledge of big data, deep learning, and statistical modeling, but want to rapidly learn how deep learning can be used for big data and vice versa.

This chapter lays a foundation by introducing the basic concepts, terminology, characteristics, and major challenges of deep learning. It also presents a classification of the deep network algorithms that have been widely used by researchers over the last decade. The following are the main topics that this chapter will cover:

Getting started with deep learning

Deep learning terminologies

Deep learning: A revolution in Artificial Intelligence

Classification of deep learning networks

Ever since the dawn of civilization, people have dreamt of building artificial machines or robots that behave and work exactly like human beings. From Greek mythology to the ancient Hindu epics, there are numerous examples that clearly show people's interest in creating artificial life.

In the early generations of computers, people wondered whether a computer could ever become as intelligent as a human being. Since then, automated machines have become indispensable, even in medical science. Driven by this need and by constant research, Artificial Intelligence (AI) has become a flourishing technology with applications in several domains, such as image processing, video processing, and diagnostic tools in medical science.

Although AI systems solve many problems on a daily basis, nobody knows the specific rules by which an AI system should be programmed to solve them. A few of these intuitive problems are as follows:

Google search, which does a really good job of understanding what you type or speak

As mentioned earlier, Facebook is also somewhat good at recognizing your face, and hence, understanding your interests

Moreover, with the integration of various other fields, for example, probability, linear algebra, statistics, machine learning, deep learning, and so

on, AI has already gained a huge amount of popularity in the research field over the course of time.

One of the key reasons for the early success of AI could be that it dealt with fundamental problems for which the computer did not require a vast amount of knowledge. For example, in 1997, IBM's Deep Blue chess-playing system defeated the world champion Garry Kasparov [1]. Although this achievement was significant at the time, it was not a burdensome task to train the computer with only the limited number of rules involved in chess. Training a system with a fixed and limited number of rules is termed hard-coded knowledge. Many Artificial Intelligence projects attempted to hard-code knowledge about various aspects of the world in traditional languages. Over time, this hard-coded knowledge proved unworkable for systems dealing with huge amounts of data. Moreover, the rules that the data followed kept changing frequently. Therefore, most of the projects that followed this approach failed to live up to expectations.

The setbacks faced by hard-coded knowledge implied that artificial intelligence systems needed some way of generalizing patterns and rules from the supplied raw data, without external spoon-feeding. The ability of a system to do so is termed machine learning. There are various successful machine learning implementations that we use in our daily lives. A few of the most common and important ones are as follows:

Spam detection: Given an e-mail, the model decides whether it belongs in the spam folder or in the inbox. A common naive Bayes model can distinguish between such e-mails.

Credit card fraud detection: A model that detects whether a number of transactions performed within a specific time interval were carried out by the original customer or not.

Caesarean delivery recommendation: One of the most popular machine learning models, given by Mor-Yosef et al. in 1990, used logistic regression to recommend whether a caesarean delivery was needed for the patient.

There are many such models which have been implemented with the help of machine learning techniques.
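To make the spam detection example above more concrete, the following is a minimal sketch of a naive Bayes classifier written with only the Python standard library. The function names (`train_naive_bayes`, `classify`), the `spam`/`ham` labels, and the four training messages are hypothetical and invented purely for illustration; a real filter would be trained on thousands of e-mails and would typically rely on an established library.

```python
import math
from collections import Counter


def train_naive_bayes(messages):
    """Count words per label. `messages` is a list of (text, label) pairs."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocabulary


def classify(text, model):
    """Return the label with the highest log-probability score."""
    word_counts, label_counts, vocabulary = model
    total_messages = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        # Start from the log prior: how common this label is overall.
        score = math.log(label_counts[label] / total_messages)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen during training
            # Laplace (add-one) smoothing avoids zero probabilities.
            likelihood = (word_counts[label][word] + 1) / (
                total_words + len(vocabulary)
            )
            score += math.log(likelihood)
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical toy data, made up for this illustration only.
training_data = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch with the project team", "ham"),
]
model = train_naive_bayes(training_data)
print(classify("claim your free prize", model))
print(classify("agenda for the team meeting", model))
```

Note that no rule such as "messages containing the word free are spam" is written anywhere in the code; the decision is derived entirely from word counts in the training messages, which is the contrast with hard-coded knowledge drawn earlier.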

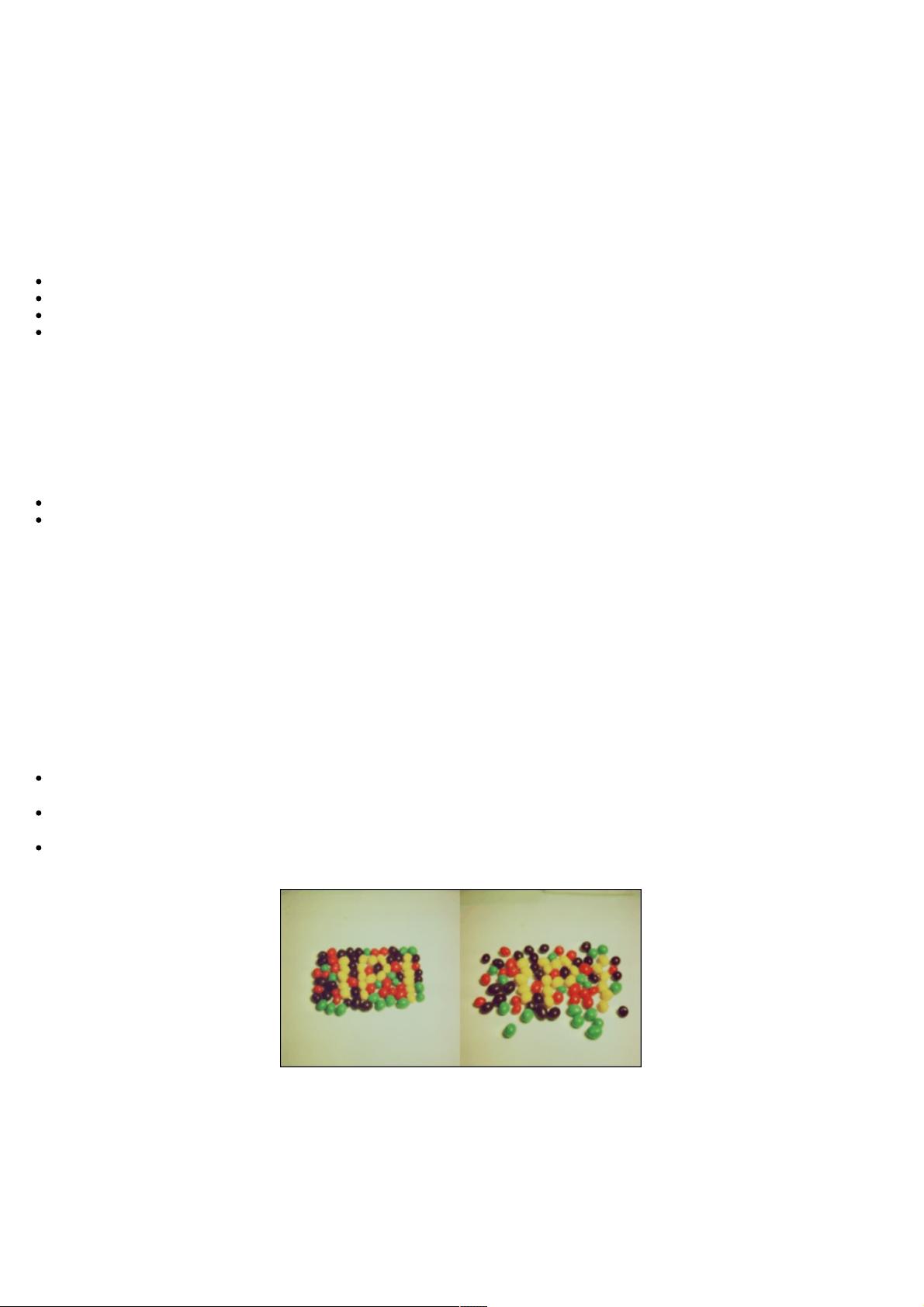

Figure 1.1: An example of different types of representation. Let's say we want to train the machine to detect the empty spaces in between the jelly beans. In the image on the right side, the jelly beans are sparse, so it is easier for the AI system to determine the empty parts. However, in the image on the left side, the jelly beans are extremely compact, and hence, it is an extremely difficult task for the machine to find the empty spaces. Images sourced from the USC-SIPI image database.

A large part of the performance of a machine learning system depends on the data fed to it. The form in which this data is presented is called the representation of the data. Each piece of information included in the representation is called a feature of the data. For example, if logistic regression is used to detect a brain tumor in a patient, the AI system does not examine the patient directly. Rather, the doctor provides the necessary inputs to the system according to the patient's symptoms. The AI system then matches those inputs against the past inputs that were used to train it.
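The idea that the system only ever sees the features it is handed can be sketched in a few lines of Python. The example below is a rough illustration, not a clinical model: the three binary symptom features, the eight training records, the function names, and the training settings (`learning_rate`, `epochs`) are all assumptions made up for this sketch.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_probability(x, weights, bias):
    """Probability of the positive class for one feature vector."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_logistic_regression(features, labels, learning_rate=0.5, epochs=500):
    """Fit weights with plain stochastic gradient descent on the log loss."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            error = predict_probability(x, weights, bias) - y
            weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias


# Hypothetical records: each row is [symptom_a, symptom_b, symptom_c],
# entered by a doctor as 1 (present) or 0 (absent). Labels are invented:
# 1 if at least two symptoms are present, otherwise 0.
past_inputs = [
    [1, 1, 1], [1, 1, 0], [1, 0, 1], [0, 1, 1],
    [1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 0, 0],
]
past_decisions = [1, 1, 1, 1, 0, 0, 0, 0]

weights, bias = train_logistic_regression(past_inputs, past_decisions)
print(predict_probability([1, 1, 1], weights, bias))  # all symptoms present
print(predict_probability([0, 0, 0], weights, bias))  # no symptoms present
```

The model receives nothing but the three numbers per patient. If a relevant symptom is missing from the feature list, or the columns describe a different condition altogether, no amount of training can compensate, because the model has no way of changing what the features mean.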

Based on its predictive analysis, the system provides its decision regarding the disease. Although logistic regression can learn and decide based on the features given, it cannot influence or modify the way the features are defined. Logistic regression is a type of regression model in which, unlike linear regression, the dependent variable takes one of a limited number of possible values, predicted from the independent variables. So, for example, if that model was provided with a caesarean patient's report instead of the brain tumor patient's report, it would surely fail to predict the