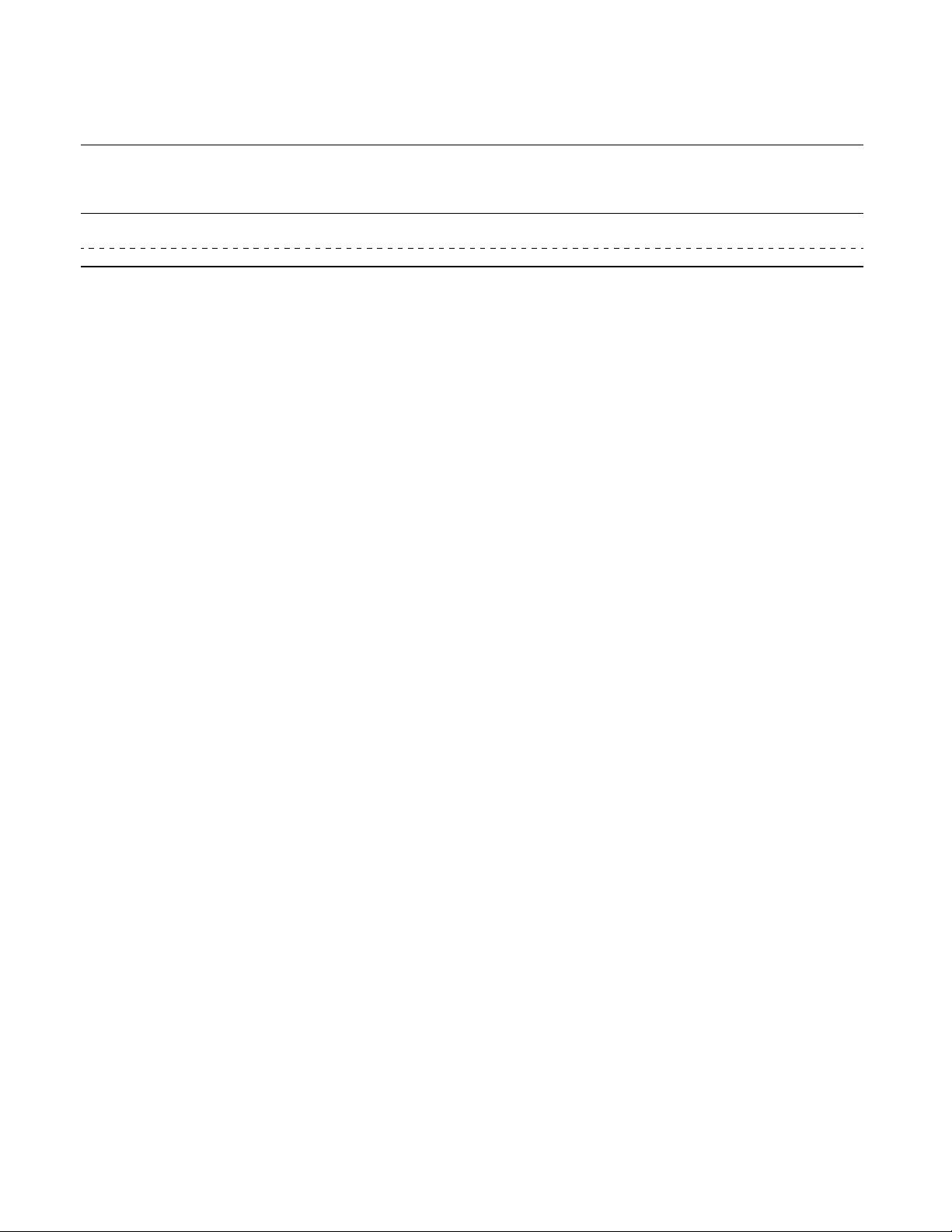

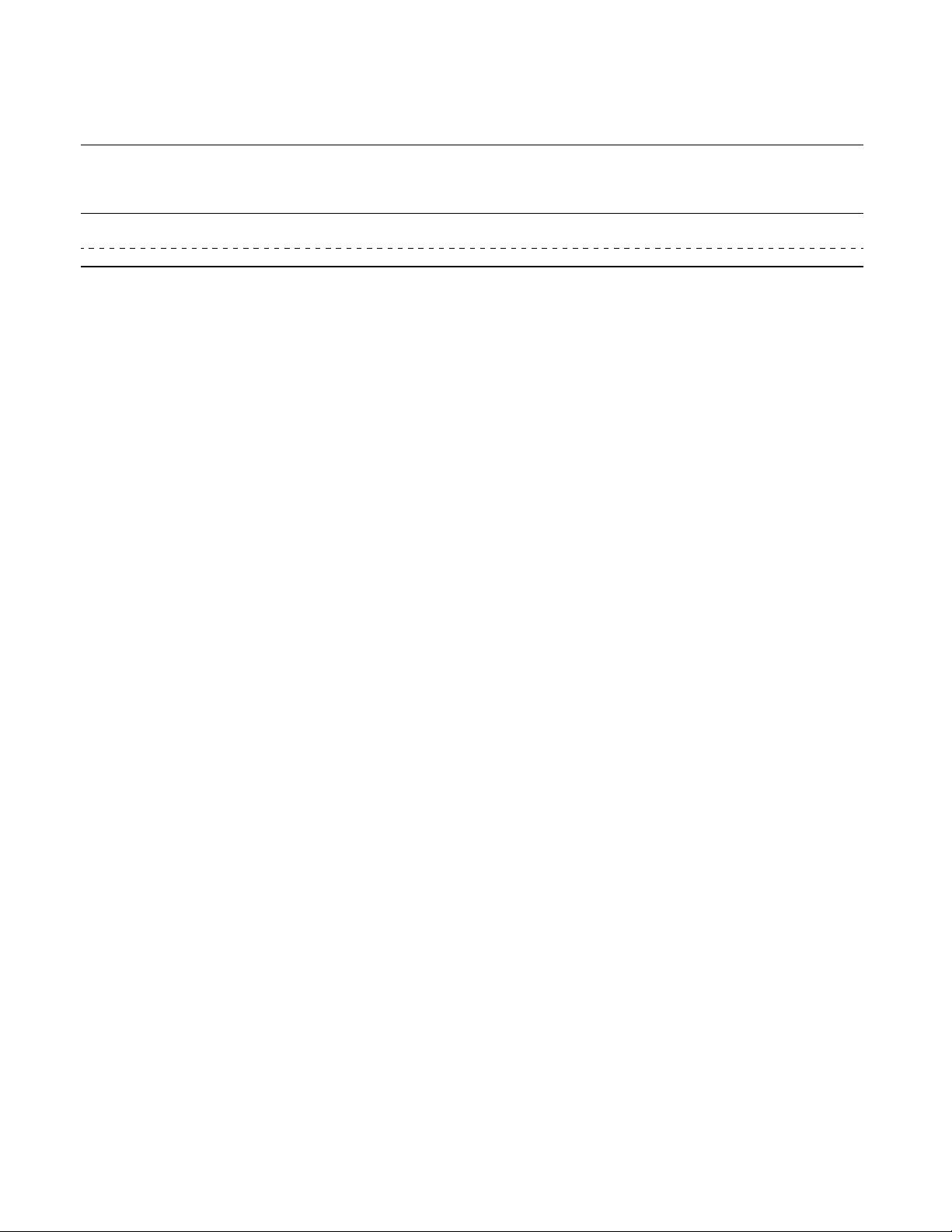

| Dataset | # Input Imgs/Patches | Input Type | Num Distort. | Distort. Types | # Levels | # Distort. Imgs/Patches | # Judgments | Judgment Type |
|---|---|---|---|---|---|---|---|---|
| LIVE [51] | 29 | images | 5 | traditional | continuous | 0.8k | 25k | MOS |
| CSIQ [29] | 30 | images | 6 | traditional | 5 | 0.8k | 25k | MOS |
| TID2008 [46] | 25 | images | 17 | traditional | 4 | 2.7k | 250k | MOS |
| TID2013 [45] | 25 | images | 24 | traditional | 5 | 3.0k | 500k | MOS |
| BAPPS (2AFC–Distort) | 160.8k | 64 × 64 patch | 425 | trad. + CNN | continuous | 321.6k | 349.8k | 2AFC |
| BAPPS (2AFC–Real alg) | 26.9k | 64 × 64 patch | – | alg outputs | – | 53.8k | 134.5k | 2AFC |
| BAPPS (JND–Distort) | 9.6k | 64 × 64 patch | 425 | trad. + CNN | continuous | 9.6k | 28.8k | Same/Not same |

Table 1: Dataset comparison. A primary differentiator between our proposed Berkeley-Adobe Perceptual Patch Similarity (BAPPS) dataset and previous work is the scale of distortion types. We provide human perceptual judgments on a distortion set using uncompressed images from [7, 10]. Previous datasets have used a small number of distortions at discrete levels. We use a large number of distortions (created by sequentially composing atomic distortions together) and sample continuously. For each input patch, we corrupt it using two distortions and ask for a few human judgments (2 for the train set, 5 for the test set) per pair. This enables us to obtain judgments on a large number of patches. Previous databases summarize their judgments into a mean opinion score (MOS); we simply report pairwise judgments (two alternative forced choice). In addition, we provide judgments on outputs from real algorithms, as well as a same/not same Just Noticeable Difference (JND) perceptual test.
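The counts in the BAPPS rows of Table 1 can be cross-checked with a little arithmetic. The sketch below assumes that every training patch received exactly 2 judgments and every test patch exactly 5, as the caption states, and solves for the train/test split this would imply for the 2AFC–Distort subset. The split is inferred here under that assumption; it is not quoted from the table, and the function name is illustrative.

```python
# Sanity check of the BAPPS (2AFC-Distort) row of Table 1.
# Assumption: exactly 2 judgments per training patch and 5 per test patch.
N_PATCHES = 160_800      # reference patches
N_DISTORTED = 321_600    # distorted patches
N_JUDGMENTS = 349_800    # human judgments

# Each reference patch is corrupted by two distortions.
assert 2 * N_PATCHES == N_DISTORTED


def implied_split(n_patches, n_judgments, per_train=2, per_test=5):
    """Solve train + test = n_patches and
    per_train * train + per_test * test = n_judgments for (train, test)."""
    extra = n_judgments - per_train * n_patches
    test, rem = divmod(extra, per_test - per_train)
    if rem or test < 0 or test > n_patches:
        raise ValueError("counts are inconsistent with the assumed judgments per patch")
    return n_patches - test, test


train, test = implied_split(N_PATCHES, N_JUDGMENTS)
print(train, test)  # 151400 9400
```

The same kind of check on the other rows gives 26.9k × 2 = 53.8k distorted patches and 26.9k × 5 = 134.5k judgments for 2AFC–Real alg, and 9.6k × 3 = 28.8k judgments for JND–Distort, which would correspond to 5 and 3 judgments per patch respectively if the counts are uniform.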

model low-level perceptual similarity surprisingly well, outperforming previous, widely-used metrics.

• We demonstrate that network architecture alone does not account for the performance: untrained nets achieve much lower performance.

• With our data, we can improve performance by “calibrating” feature responses from a pre-trained network.
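As a rough illustration of what “calibrating” feature responses could mean, the sketch below scales squared differences between unit-normalized feature vectors by a non-negative per-channel weight. This is an assumption made for illustration: the function names, the toy features, and the weights are all hypothetical. In practice the features would come from a pre-trained network and the weights would be fit to human judgments.

```python
import math


def unit_normalize(vec, eps=1e-10):
    """Scale a feature vector to unit L2 norm (eps avoids division by zero)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / (norm + eps) for v in vec]


def calibrated_distance(feats_a, feats_b, weights):
    """Weighted squared distance between two feature maps from one layer.

    feats_a, feats_b: lists of per-location channel vectors
                      (hypothetical stand-ins for network activations).
    weights:          one non-negative scale per channel (the "calibration").
    """
    total = 0.0
    for fa, fb in zip(feats_a, feats_b):
        na, nb = unit_normalize(fa), unit_normalize(fb)
        total += sum(w * (x - y) ** 2 for w, x, y in zip(weights, na, nb))
    return total / len(feats_a)  # average over spatial locations


# Toy example: 2 spatial locations, 3 channels.
ref = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
dist = [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
uniform = calibrated_distance(ref, dist, [1.0, 1.0, 1.0])
reweighted = calibrated_distance(ref, dist, [0.5, 0.5, 1.0])
print(uniform, reweighted)
```

With uniform weights every channel contributes equally; lowering the weights on the first two channels shrinks the distance for this pair, which is the kind of adjustment a calibration step could learn from data.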

Prior work on datasets. A number of datasets have been proposed to evaluate existing similarity measures. Some of the most popular are the LIVE [51], TID2008 [46], CSIQ [29], and TID2013 [45] datasets. These are referred to as Full-Reference Image Quality Assessment (FR-IQA) datasets and have served as the de facto baselines for the development and evaluation of similarity metrics. A related line of work is on No-Reference Image Quality Assessment (NR-IQA), such as AVA [38] and LIVE In the Wild [18]. These datasets investigate the “quality” of individual images by themselves, without a reference image. We collect a new dataset that is complementary to these: it contains a substantially larger number of distortions, including some from newer, deep-network-based outputs, as well as geometric distortions. Our dataset is focused on perceptual similarity, rather than quality assessment. Additionally, it is collected on patches as opposed to full images, in the wild, with a different experimental design (more details in Sec. 2).

Prior work on deep networks and human judgments. Recently, advances in DNNs have motivated investigation of applications in the context of visual similarity and image quality assessment. Kim and Lee [25] use a CNN to predict visual similarity by training on low-level differences. Concurrent work by Talebi and Milanfar [54, 55] trains a deep network in the context of NR-IQA for image aesthetics. Gao et al. [16] and Amirshahi et al. [3] propose techniques that leverage internal activations of deep networks (VGG and AlexNet, respectively) along with additional multiscale post-processing. In this work, we conduct a more in-depth study across different architectures and training signals, on a new, large-scale, highly varied dataset.

Recently, Berardino et al. [6] train networks on perceptual similarity and, importantly, assess the ability of deep networks to make predictions on a separate task: predicting the most and least perceptually noticeable directions of distortion. Similarly, we not only assess image patch similarity on parameterized distortions, but also test generalization to real algorithms, as well as generalization to a separate perceptual task, just noticeable differences.

2. Berkeley-Adobe Perceptual Patch Similarity (BAPPS) Dataset

To evaluate the performance of different perceptual metrics, we collect a large-scale, highly diverse dataset of perceptual judgments using two approaches. Our main data collection employs a two alternative forced choice (2AFC) test, which asks which of two distortions is more similar to a reference. This is validated by a second experiment in which we perform a just noticeable difference (JND) test, which asks whether two patches, one reference and one distorted, are the same or different. These judgments are collected over a wide space of distortions and real algorithm outputs.
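To make the two tests concrete, here is a minimal sketch of how a single 2AFC trial and a single JND decision might be represented and compared against a candidate metric. The mean squared error metric, the additive-noise distortion, the field names, the example votes, and the JND threshold are placeholders chosen for illustration; they are not the distortions, metrics, or scoring rule studied in this paper.

```python
import random
from dataclasses import dataclass


def add_noise(patch, severity, rng):
    """Hypothetical distortion: additive Gaussian noise with std = severity."""
    return [p + rng.gauss(0.0, severity) for p in patch]


def mse(a, b):
    """Placeholder metric: mean squared error between two flattened patches."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


@dataclass
class TwoAFCTrial:
    ref: list    # reference patch (flattened pixel values)
    p0: list     # first distorted version
    p1: list     # second distorted version
    votes: list  # human votes: 0 if p0 was judged closer to ref, 1 if p1


def metric_choice(trial, metric=mse):
    """Return 0 or 1: which distorted patch the metric places closer to ref."""
    return 0 if metric(trial.ref, trial.p0) <= metric(trial.ref, trial.p1) else 1


def agreement(trial, metric=mse):
    """Fraction of human votes that match the metric's choice."""
    choice = metric_choice(trial, metric)
    return sum(v == choice for v in trial.votes) / len(trial.votes)


def jnd_says_different(ref, distorted, threshold, metric=mse):
    """JND-style decision: call the pair 'different' if the distance exceeds a threshold."""
    return metric(ref, distorted) > threshold


rng = random.Random(0)
ref = [rng.random() for _ in range(64 * 64)]  # one 64 x 64 patch
# Severity is sampled continuously rather than from a few discrete levels.
p0 = add_noise(ref, rng.uniform(0.0, 0.05), rng)
p1 = add_noise(ref, rng.uniform(0.2, 0.3), rng)
trial = TwoAFCTrial(ref, p0, p1, votes=[0, 0])  # made-up votes for illustration
print(metric_choice(trial), agreement(trial))
```

Keeping the raw votes per trial, rather than collapsing them into a single score, mirrors the choice to report pairwise judgments directly instead of a mean opinion score.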

2.1. Distortions

Traditional distortions. We create a set of “traditional” distortions consisting of common operations performed on the input patches, listed in Table 2 (left). In general, we use photometric distortions, random noise, blurring, spatial shifts and corruptions, and compression artifacts. We show qualitative examples of our traditional distortions in Figure 2. The severity of each perturbation is parameterized -
