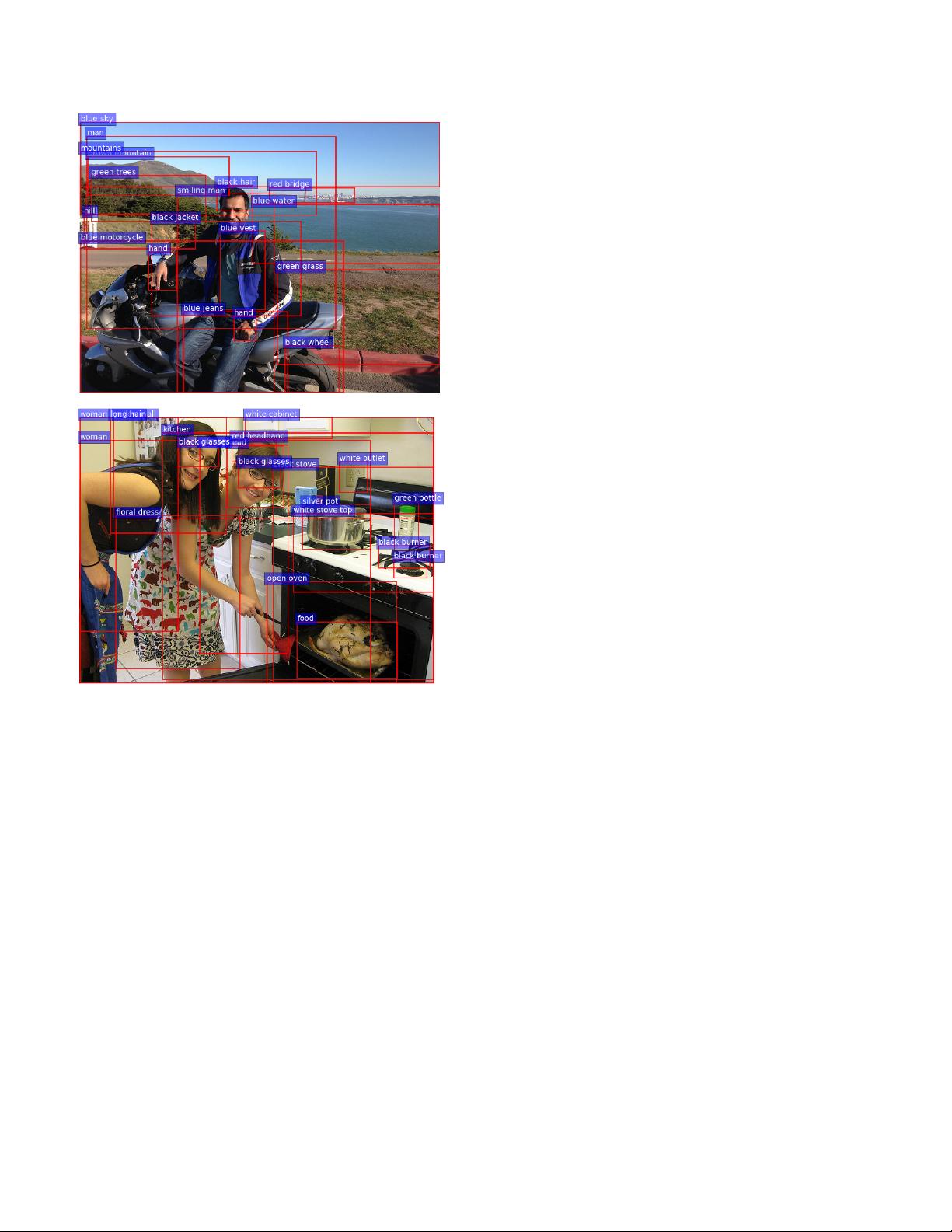

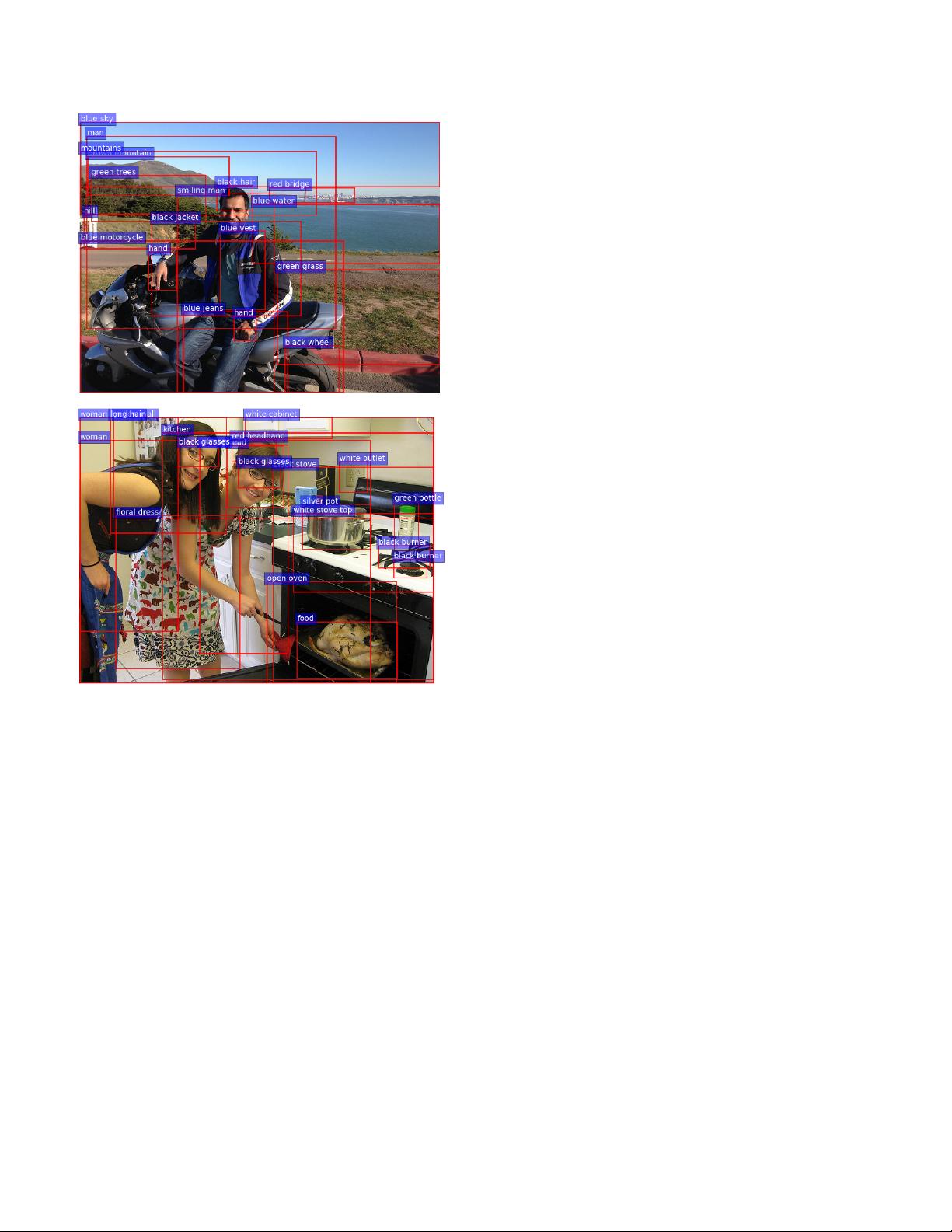

Figure 2. Example output from our Faster R-CNN bottom-up attention model. Each bounding box is labeled with an attribute class followed by an object class. Note, however, that in captioning and VQA we utilize only the feature vectors, not the predicted labels.

suppression with an intersection-over-union (IoU) threshold, the top box proposals are selected as input to the second stage. In the second stage, region of interest (RoI) pooling is used to extract a small feature map (e.g., 14 × 14) for each box proposal. These feature maps are then batched together as input to the final layers of the CNN. The final output of the model consists of a softmax distribution over class labels and class-specific bounding box refinements for each box proposal.
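The proposal-selection step above relies on non-maximum suppression with an IoU threshold. The following is a minimal pure-Python sketch of greedy NMS for illustration only, not the Faster R-CNN implementation used in this work. The box format `(x1, y1, x2, y2)`, the function names `iou` and `nms`, and the `top_n` cap on kept proposals are our own assumptions.

```python
from typing import List, Tuple

# Assumed box format: (x1, y1, x2, y2) with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float],
        iou_threshold: float, top_n: int) -> List[int]:
    """Greedy NMS: repeatedly keep the highest-scoring remaining box and
    discard every other box whose IoU with it exceeds iou_threshold.
    Returns the indices of at most top_n kept boxes, best first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order and len(keep) < top_n:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


boxes = [(0.0, 0.0, 10.0, 10.0), (1.0, 1.0, 11.0, 11.0), (20.0, 20.0, 30.0, 30.0)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores, iou_threshold=0.5, top_n=10)  # the overlapping box 1 is suppressed
```

The first two boxes overlap with IoU of roughly 0.68, so a threshold of 0.5 suppresses the lower-scoring one, while a looser threshold of 0.7 would keep both.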

In this work, we use Faster R-CNN in conjunction with the ResNet-101 [13] CNN. To generate an output set of image features $V$ for use in image captioning or VQA, we take the final output of the model and perform non-maximum suppression for each object class using an IoU threshold. We then select all regions where any class detection probability exceeds a confidence threshold. For each selected region $i$, $\boldsymbol{v}_i$ is defined as the mean-pooled convolutional feature from this region, such that the dimension $D$ of the image feature vectors is 2048. Used in this fashion, Faster R-CNN effectively functions as a ‘hard’ attention mechanism, as only a relatively small number of image bounding box features are selected from a large number of possible configurations.
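The feature-selection rule described above (keep a region if any class probability exceeds a confidence threshold, then mean-pool its convolutional feature map) can be sketched as follows. This is a hypothetical pure-Python illustration that assumes per-class NMS has already been applied, that `class_probs` excludes the background class, and that each region's feature map is stored as nested lists of shape `[height][width][channels]`; the function names are our own. In the model described here the channel dimension $D$ would be 2048; the toy usage uses $D = 2$.

```python
from typing import List

# Assumed layout: [height][width][channels] convolutional features for one region.
FeatureMap = List[List[List[float]]]


def mean_pool(region_map: FeatureMap) -> List[float]:
    """Average a region's feature map over all spatial positions,
    producing a single D-dimensional vector v_i."""
    cells = [cell for row in region_map for cell in row]
    dim = len(cells[0])
    return [sum(cell[d] for cell in cells) / len(cells) for d in range(dim)]


def select_image_features(region_maps: List[FeatureMap],
                          class_probs: List[List[float]],
                          conf_threshold: float) -> List[List[float]]:
    """Keep regions where any (non-background) class detection probability
    exceeds conf_threshold; return their mean-pooled features as V."""
    features = []
    for region_map, probs in zip(region_maps, class_probs):
        if max(probs) > conf_threshold:
            features.append(mean_pool(region_map))
    return features


# Two toy regions with D = 2: a 1x2 feature map and a 1x1 feature map.
region_maps = [[[[1.0, 2.0], [3.0, 4.0]]], [[[0.0, 0.0]]]]
class_probs = [[0.1, 0.6], [0.15, 0.1]]
V = select_image_features(region_maps, class_probs, conf_threshold=0.2)
```

Only the first region has a class probability above 0.2, so `V` contains one vector, the spatial mean of that region's features.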

To pretrain the bottom-up attention model, we first initialize Faster R-CNN with ResNet-101 pretrained for classification on ImageNet [35]. We then train on Visual Genome [21] data. To aid the learning of good feature representations, we add an additional training output for predicting attribute classes (in addition to object classes). To predict attributes for region $i$, we concatenate the mean-pooled convolutional feature $\boldsymbol{v}_i$ with a learned embedding of the ground-truth object class, and feed this into an additional output layer defining a softmax distribution over each attribute class plus a ‘no attributes’ class.
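The attribute head described above (concatenate $\boldsymbol{v}_i$ with an object-class embedding, then apply one output layer and a softmax) can be sketched in plain Python. This is a minimal illustration under our own assumptions: the embedding table, weight matrix, and bias are hypothetical inputs, the output layer is taken to be a single linear layer, and the last output entry is assumed to be the ‘no attributes’ class. During training the object class index would be the ground-truth label.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def softmax(logits: Vector) -> Vector:
    """Numerically stable softmax (subtracts the max logit first)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def attribute_distribution(v_i: Vector, object_class: int,
                           class_embeddings: Matrix,
                           weights: Matrix, bias: Vector) -> Vector:
    """Concatenate v_i with the embedding of the given object class, apply a
    linear layer (one row per attribute class plus 'no attributes'), and
    return the softmax distribution over those outputs."""
    x = v_i + class_embeddings[object_class]  # list concatenation, length D + E
    logits = [sum(w * xj for w, xj in zip(row, x)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


# Toy setup: D = 2, embedding size E = 1, two attributes plus 'no attributes'.
embeddings = [[0.0], [1.0]]
W = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
b = [0.0, 0.0, 0.0]
probs = attribute_distribution([1.0, 0.0], object_class=1,
                               class_embeddings=embeddings, weights=W, bias=b)
```

Conditioning on the object-class embedding lets the same visual feature map to different attribute distributions depending on which object it is believed to depict.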

The original Faster R-CNN multi-task loss function contains four components, defined over the classification and bounding box regression outputs for both the RPN and the final object class proposals, respectively. We retain these components and add an additional multi-class loss component to train the attribute predictor. In Figure 2 we provide some examples of model output.
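Written out in our own notation (not taken from the paper), the combined pretraining objective can be sketched as the four Faster R-CNN terms plus the attribute term. The weight $\lambda$ is a hypothetical balancing coefficient that this section does not specify ($\lambda = 1$ gives a plain sum), and the attribute term is shown as softmax cross-entropy against a target attribute label $a_i^*$ for region $i$ with ground-truth object class $c_i^*$:

$$
\mathcal{L} = \mathcal{L}^{\text{RPN}}_{\text{cls}} + \mathcal{L}^{\text{RPN}}_{\text{reg}} + \mathcal{L}^{\text{det}}_{\text{cls}} + \mathcal{L}^{\text{det}}_{\text{reg}} + \lambda \, \mathcal{L}_{\text{attr}},
\qquad
\mathcal{L}_{\text{attr}} = -\log p\left(a_i^* \mid \boldsymbol{v}_i, c_i^*\right)
$$

Any per-term normalization used in the original Faster R-CNN loss (e.g., averaging over sampled anchors or proposals) is omitted here for brevity.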

3.2. Captioning Model

Given a set of image features $V$, our proposed captioning model uses a ‘soft’ top-down attention mechanism to weight each feature during caption generation, using the existing partial output sequence as context. This approach is broadly similar to several previous works [34, 27, 46]. However, the particular design choices outlined below make for a relatively simple yet high-performing baseline model. Even without bottom-up attention, our captioning model achieves performance comparable to the state of the art on most evaluation metrics (refer to Table 1).

At a high level, the captioning model is composed of two LSTM [15] layers using a standard implementation [9]. In the sections that follow we will refer to the operation of the LSTM over a single time step using the following notation:

$$\boldsymbol{h}_t = \mathrm{LSTM}(\boldsymbol{x}_t, \boldsymbol{h}_{t-1}) \tag{1}$$

where $\boldsymbol{x}_t$ is the LSTM input vector and $\boldsymbol{h}_t$ is the LSTM output vector. Here we have neglected the propagation of memory cells for notational convenience. We now describe the formulation of the LSTM input vector $\boldsymbol{x}_t$ and the output vector $\boldsymbol{h}_t$ for each layer of the model. The overall captioning model is illustrated in Figure 3.
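To make the single-step notation $\mathrm{LSTM}(\boldsymbol{x}_t, \boldsymbol{h}_{t-1})$ concrete, the sketch below implements one step of a common LSTM formulation (input, forget, and output gates plus a tanh candidate, no peephole connections) and propagates the memory cell $\boldsymbol{c}_t$ explicitly, which the notation above omits. It is a hypothetical pure-Python illustration; the `params` dictionary layout and function names are our own, and the "standard implementation [9]" may differ in details such as bias handling.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
GateParams = Tuple[Matrix, Matrix, Vector]  # (W, U, b) for one gate


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, U: Matrix, b: Vector, x: Vector, h: Vector) -> Vector:
    """Compute W x + U h + b, one output unit per row."""
    return [sum(wj * xj for wj, xj in zip(W[k], x))
            + sum(uj * hj for uj, hj in zip(U[k], h)) + b[k]
            for k in range(len(b))]


def lstm_step(x_t: Vector, h_prev: Vector, c_prev: Vector,
              params: Dict[str, GateParams]) -> Tuple[Vector, Vector]:
    """One LSTM time step returning (h_t, c_t).
    params maps 'i', 'f', 'o', 'g' to the (W, U, b) of each gate."""
    i = [sigmoid(z) for z in affine(*params["i"], x_t, h_prev)]    # input gate
    f = [sigmoid(z) for z in affine(*params["f"], x_t, h_prev)]    # forget gate
    o = [sigmoid(z) for z in affine(*params["o"], x_t, h_prev)]    # output gate
    g = [math.tanh(z) for z in affine(*params["g"], x_t, h_prev)]  # candidate cell
    c_t = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h_t = [ok * math.tanh(ck) for ok, ck in zip(o, c_t)]
    return h_t, c_t


# Toy step: input size 2, hidden size 1, all weights and biases zero.
zero_params = {k: ([[0.0, 0.0]], [[0.0]], [0.0]) for k in "ifog"}
h_t, c_t = lstm_step([0.3, -0.2], h_prev=[0.0], c_prev=[1.0], params=zero_params)
```

With all-zero parameters every gate equals 0.5 and the candidate is 0, so the cell simply halves its previous value; in the captioning model each of the two LSTM layers would run such a step once per generated word.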

3.2.1 Top-Down Attention LSTM

Within the captioning model, we characterize the first LSTM layer as a top-down visual attention model, and the