
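Overview of Sebesta's Programming the World Wide Web, Sixth Edition

"Programming the World Wide Web Sixth Edition" is a textbook by Robert W. Sebesta on programming techniques for the Internet and the World Wide Web (WWW). It is published by Addison-Wesley and is tagged WWW, web, and internet.

In this sixth edition, Professor Sebesta explains in depth the key concepts and techniques for building Web applications and interacting with them. Readers can expect to learn the following key topics: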

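1. **Web fundamentals**: The book first introduces the basic concepts of the Internet and the World Wide Web, including how HTTP (the Hypertext Transfer Protocol) works and the role of URLs (Uniform Resource Locators).

2. **HTML and CSS**: HTML (Hypertext Markup Language) and CSS (Cascading Style Sheets) are the foundation of Web page content and style, and they are at the core of programming Web pages. The book itself uses XHTML, covered in Chapters 2 and 3. It describes the syntax and usage of both in detail and shows how to create structured documents and attractive layouts.

3. **JavaScript**: JavaScript is the key to dynamic behavior in Web pages. The book's coverage likely includes variables, functions, DOM (Document Object Model) manipulation, and event handling, which are the foundations of interactive Web applications.

4. **Web server and client interaction**: The book discusses how to set up and configure Web servers and how clients and servers communicate over HTTP.

5. **Web security and privacy**: Network security keeps growing in importance, and the book covers data encryption, authentication, authorization, and the prevention of attacks such as cross-site scripting (XSS) and SQL injection.

6. **Web services and APIs**: Web services (such as RESTful APIs) play an important role in modern Web development. Readers learn how to design and use these interfaces to exchange data.

7. **Mobile Web development**: Smartphones and tablets are now widespread, so the Web experience on mobile devices has become essential. The book may include material on responsive design, mobile optimization, and mobile application development.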

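8. **Web frameworks and libraries**: Professor Sebesta introduces Web development frameworks such as JSF (Chapter 11), ASP.NET, and Rails (Chapter 15), and shows how they simplify the development process.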

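9. **Web standards and best practices**: The book also stresses following the Web standards set by the W3C (World Wide Web Consortium) so that code is accessible, compatible, and durable.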

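10. **Emerging technologies**: Finally, the book covers technologies that were relatively new when this edition was written, such as Ajax (Chapter 10) and Ruby with Rails (Chapters 14 and 15), so that readers learn about the trends in Web development at that time.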

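By reading this textbook, students and professionals gain the knowledge and skills needed to build and maintain robust, efficient, and secure Web applications. It works as an introduction for beginners and also gives experienced developers a way to deepen and update their knowledge.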

CHAPTER 1

Fundamentals

1.1 A Brief Introduction to the Internet

1.2 The World Wide Web

1.3 Web Browsers

1.4 Web Servers

1.5 Uniform Resource Locators

1.6 Multipurpose Internet Mail Extensions

1.7 The Hypertext Transfer Protocol

1.8 Security

1.9 The Web Programmer’s Toolbox

Summary • Review Questions • Exercises

The lives of most inhabitants of industrialized countries, as well as some in unindustrialized countries, have been changed forever by the advent of the World Wide Web. This transformation has had some downsides, for example easier access to pornography and gambling, and the ease with which people with destructive ideas can propagate those ideas to others. On balance, however, the changes have been enormously positive. Many use the Internet and the World Wide Web daily, communicating with friends, relatives, and business associates through e-mail and social networking sites, shopping for virtually anything that can be purchased anywhere, and digging up a limitless variety and amount of information, from movie theater schedules, to hotel room prices in cities halfway around the world, to the history and characteristics of the culture of some small and obscure society. Constructing the software and data that provide all of this information requires knowledge of several different technologies, such as markup languages and meta-markup languages, as well as programming skills in a myriad of different programming languages, some specific to the World Wide Web and some designed for general-purpose computing. This book is meant to provide the required background and a basis for acquiring the knowledge and skills necessary to build the World Wide Web sites that provide both the information users want and the advertising that pays for its presentation.

This chapter lays the groundwork for the remainder of the book. It begins with introductions to, and some history of, the Internet and the World Wide Web. Then, it discusses the purposes and some of the characteristics of Web browsers and servers. Next, it describes uniform resource locators (URLs), which specify addresses of resources available on the Web. Following this, it introduces Multipurpose Internet Mail Extensions, which define types and file name extensions for files with different kinds of contents. Next, it discusses the Hypertext Transfer Protocol (HTTP), which provides the communication interface for connections between browsers and Web servers. Finally, the chapter gives brief overviews of some of the tools commonly used by Web programmers, including XHTML, XML, JavaScript, Flash, Servlets, JSP, JSF, ASP.NET, PHP, Ruby, Rails, and Ajax. All of these are discussed in far more detail in the remainder of the book (XHTML in Chapters 2 and 3; JavaScript in Chapters 4, 5, and 6; XML in Chapter 7; Flash in Chapter 8; PHP in Chapter 9; Ajax in Chapter 10; Servlets, JSP, and JSF in Chapter 11; Ruby in Chapters 14 and 15; and Rails in Chapter 15).

1.1 A Brief Introduction to the Internet

Virtually every topic discussed in this book is related to the Internet. Therefore, we begin with a quick introduction to the Internet itself.

1.1.1 Origins

In the 1960s, the U.S. Department of Defense (DoD) became interested in developing a new large-scale computer network. The purposes of this network were communications, program sharing, and remote computer access for researchers working on defense-related contracts. One fundamental requirement was that the network be sufficiently robust so that even if some network nodes were lost to sabotage, war, or some more benign cause, the network would continue to function. The DoD’s Advanced Research Projects Agency (ARPA) funded the construction of the first such network, which connected about a dozen ARPA-funded research laboratories and universities. The first node of this network was established at UCLA in 1969.

Because it was funded by ARPA, the network was named ARPAnet. Despite the initial intentions, the primary early use of ARPAnet was simple text-based communications through e-mail. Because ARPAnet was available only to laboratories and universities that conducted ARPA-funded research, the great majority of educational institutions were not connected. As a result, a number of other networks were developed during the late 1970s and early 1980s, with BITNET and CSNET among them. BITNET, which is an acronym for Because It’s Time Network, began at the City University of New York. It was built initially to provide electronic mail and file transfers. CSNET, which is an acronym for Computer Science Network, connected the University of Delaware, Purdue University, the University of Wisconsin, the RAND Corporation, and Bolt, Beranek, and Newman (a research company in Cambridge, Massachusetts). Its initial purpose was to provide electronic mail. For a variety of reasons, neither BITNET nor CSNET became a widely used national network.

A new national network, NSFnet, was created in 1986. It was sponsored, of course, by the National Science Foundation (NSF). NSFnet initially connected the NSF-funded supercomputer centers at five universities. Soon after being established, it became available to other academic institutions and research laboratories. By 1990, NSFnet had replaced ARPAnet for most nonmilitary uses, and a wide variety of organizations had established nodes on the new network; by 1992 NSFnet connected more than 1 million computers around the world. In 1995, a small part of NSFnet returned to being a research network. The rest became known as the Internet, although this term was used much earlier for both ARPAnet and NSFnet.

1.1.2 What Is the Internet?

The Internet is a huge collection of computers connected in a communications network. These computers are of every imaginable size, configuration, and manufacturer. In fact, some of the devices connected to the Internet (such as plotters and printers) are not computers at all. The innovation that allows all of these diverse devices to communicate with each other is a single, low-level protocol: the Transmission Control Protocol/Internet Protocol (TCP/IP). TCP/IP became the standard for computer network connections in 1982. It can be used directly to allow a program on one computer to communicate with a program on another computer via the Internet. In most cases, however, a higher-level protocol runs on top of TCP/IP. Nevertheless, it’s important to know that TCP/IP provides the low-level interface that allows most computers (and other devices) connected to the Internet to appear exactly the same.

Rather than connecting every computer on the Internet directly to every other computer on the Internet, normally the individual computers in an organization are connected to each other in a local network. One node on this local network is physically connected to the Internet. So, the Internet is actually a network of networks, rather than a network of computers.

Obviously, all devices connected to the Internet must be uniquely identifiable.

1.1.3 Internet Protocol Addresses

For people, Internet nodes are identified by names; for computers, they are identified by numeric addresses. This relationship exactly parallels the one between a variable name in a program, which is for people, and the variable’s numeric memory address, which is for the machine.

The Internet Protocol (IP) address of a machine connected to the Internet is a unique 32-bit number. IP addresses usually are written (and thought of) as four 8-bit numbers, separated by periods. The four parts are separately used by Internet-routing computers to decide where a message must go next to get to its destination.
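
The following minimal Python sketch makes the dotted-decimal notation concrete. It assumes IPv4 only, packs the four 8-bit parts into the single 32-bit number, and unpacks them again. The names `dotted_to_int` and `int_to_dotted` are hypothetical helpers for illustration. Python's standard `ipaddress` module performs the same conversion, and the sketch checks its result against that module.

```python
import ipaddress

def dotted_to_int(dotted: str) -> int:
    """Pack a dotted-decimal IPv4 address into one 32-bit integer."""
    parts = [int(p) for p in dotted.split(".")]
    if len(parts) != 4 or any(not 0 <= p <= 255 for p in parts):
        raise ValueError(f"not a dotted-decimal IPv4 address: {dotted!r}")
    value = 0
    for part in parts:
        # Shift the bits collected so far left by 8 and append the next 8-bit part.
        value = (value << 8) | part
    return value

def int_to_dotted(value: int) -> str:
    """Unpack a 32-bit integer into four 8-bit numbers separated by periods."""
    if not 0 <= value < 2**32:
        raise ValueError("IPv4 addresses are 32-bit numbers")
    return ".".join(str((value >> shift) & 0xFF) for shift in (24, 16, 8, 0))

if __name__ == "__main__":
    n = dotted_to_int("191.57.126.0")
    print(n, int_to_dotted(n))
    # The standard library agrees with the hand-written conversion.
    assert n == int(ipaddress.IPv4Address("191.57.126.0"))
```

The explicit shifts show where each 8-bit part sits inside the 32-bit number. In production code, `ipaddress.IPv4Address` is the simpler choice.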

Organizations are assigned blocks of IP addresses, which they in turn assign to their machines that need Internet access (which now include most computers). For example, a small organization may be assigned 256 IP addresses, such as 191.57.126.0 to 191.57.126.255. Very large organizations, such as the Department of Defense, may be assigned 16 million IP addresses, which include IP addresses with one particular first 8-bit number, such as 12.0.0.0 to 12.255.255.255.
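
Python's standard `ipaddress` module can check both example blocks. The sketch writes them in CIDR prefix notation, which the text does not use but which is the usual modern way to write such blocks: `/24` fixes the first 24 bits and `/8` fixes only the first 8. The machine address `191.57.126.42` is a made-up member of the small block.

```python
import ipaddress

# The two example blocks from the text, in CIDR prefix notation.
small_block = ipaddress.ip_network("191.57.126.0/24")  # first 24 bits fixed
large_block = ipaddress.ip_network("12.0.0.0/8")       # first 8 bits fixed

for block in (small_block, large_block):
    # num_addresses counts every address in the block; [0] and [-1] are its ends.
    print(f"{block}: {block.num_addresses:,} addresses, {block[0]} to {block[-1]}")

# An organization assigns addresses from its block to its own machines.
machine = ipaddress.ip_address("191.57.126.42")  # hypothetical machine address
print(machine in small_block, machine in large_block)
```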

Although people nearly always type domain names into their browsers, the IP address works just as well. For example, the IP address for United Airlines (www.ual.com) is 209.87.113.93. So, if a browser is pointed at http://209.87.113.93, it will be connected to the United Airlines Web site.

In late 1998, a new IP standard, IPv6, was approved, although it still is not widely used. The most significant change was to expand the address size from 32 bits to 128 bits. This is a change that will soon be essential because the number of remaining unused IP addresses is diminishing rapidly. The new standard can be found at ftp://ftp.isi.edu/in-notes/rfc2460.txt.
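
The short sketch below uses the same `ipaddress` module to show what the move from 32 to 128 bits means for the size of the address space. The IPv6 address `2001:db8::1` comes from the range reserved for documentation and serves only as an example.

```python
import ipaddress

v4 = ipaddress.ip_address("209.87.113.93")  # the IPv4 example from the text
v6 = ipaddress.ip_address("2001:db8::1")    # a documentation-range IPv6 address

# max_prefixlen is the total number of bits in an address of each version.
print(v4.version, v4.max_prefixlen)
print(v6.version, v6.max_prefixlen)

# An address space holds 2 ** (number of address bits) addresses.
ipv4_space = 2 ** v4.max_prefixlen
ipv6_space = 2 ** v6.max_prefixlen
print(f"IPv4: {ipv4_space:,} addresses")
print(f"IPv6: {ipv6_space:.2e} addresses")

# IPv6 addresses are eight 16-bit groups in hexadecimal; "::" abbreviates
# a run of all-zero groups, which .exploded writes out in full.
print(v6.exploded)
```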

1.1.4 Domain Names

Because people have difficulty dealing with and remembering numbers, machines on the Internet also have textual names. These names begin with the name of the host machine, followed by progressively larger enclosing collections of machines, called domains. There may be two, three, or more domain names. The first domain name, which appears immediately to the right of the host name, is the domain of which the host is a part. The second domain name gives the domain of which the first domain is a part. The last domain name identifies the type of organization in which the host resides, which is the largest domain in the site’s name. For organizations in the United States, edu is the extension for educational institutions, com specifies a company, gov is used for the U.S. government, and org is used for many other kinds of organizations. In other countries, the largest domain is often an abbreviation for the country; for example, se is used for Sweden, and kz is used for Kazakhstan.

Consider this sample address:

movies.comedy.marxbros.com

Here, movies is the hostname and comedy is movies’s local domain, which is a part of marxbros’s domain, which is a part of the com domain. The hostname and all of the domain names are together called a fully qualified domain name.
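
A small Python function can illustrate this naming structure. It splits a fully qualified domain name into its host name and its enclosing domains, listed from the smallest to the largest. The function name `split_fqdn` is hypothetical. It assumes, as in the example, that the leftmost label is the host, although real names do not always follow that pattern.

```python
def split_fqdn(fqdn: str) -> tuple[str, list[str]]:
    """Split a fully qualified domain name into (host name, enclosing domains),
    listing the domains from the smallest to the largest."""
    # A trailing "." (the DNS root) is optional; DNS names are case-insensitive.
    labels = fqdn.rstrip(".").lower().split(".")
    host = labels[0]
    # Each enclosing domain is everything to the right of one more label.
    domains = [".".join(labels[i:]) for i in range(1, len(labels))]
    return host, domains

host, domains = split_fqdn("movies.comedy.marxbros.com")
print("host:", host)
for domain in domains:
    print("domain:", domain)
```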

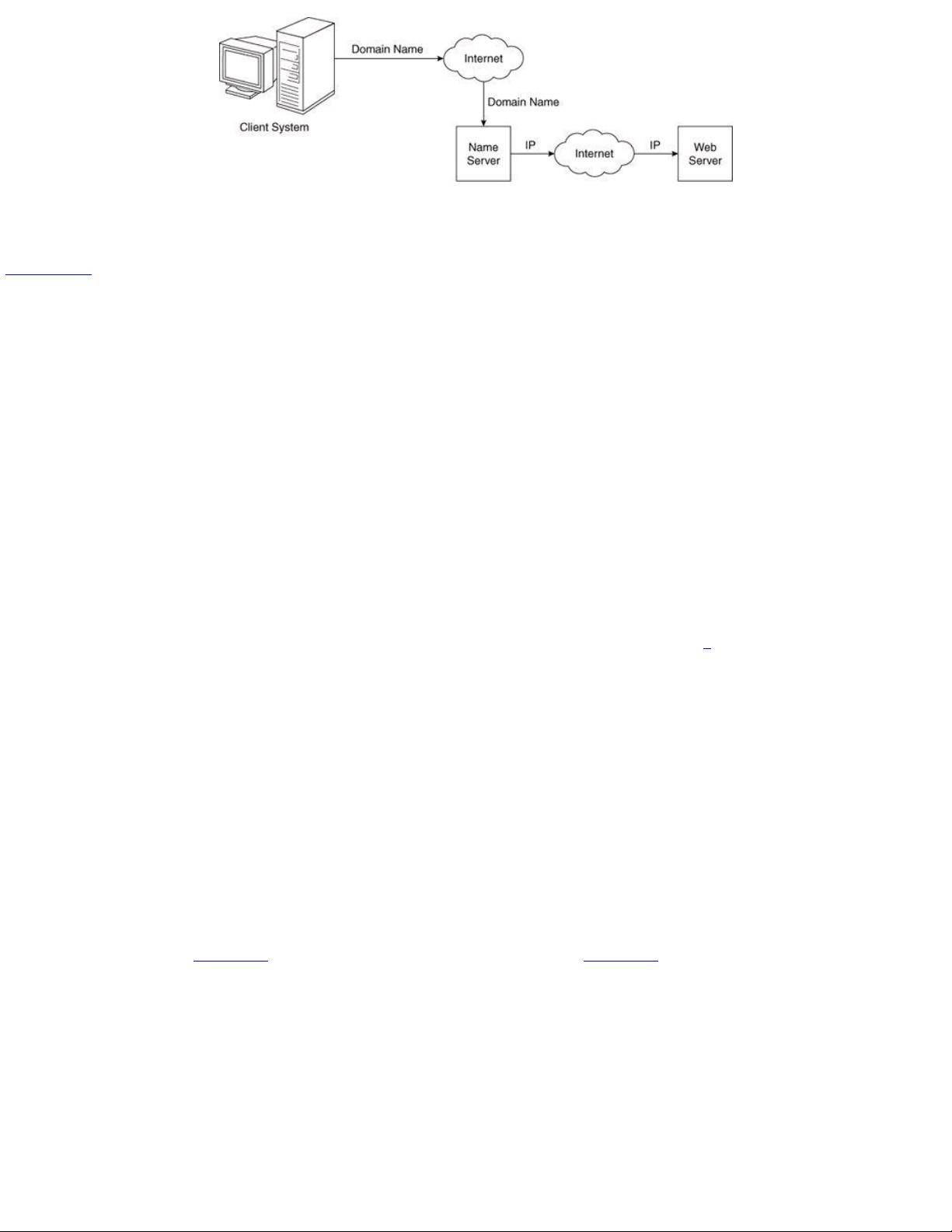

Because IP addresses are the addresses used internally by the Internet, the fully qualified domain name of the destination for a message, which is what is given by a browser user, must be converted to an IP address before the message can be transmitted over the Internet to the destination. These conversions are done by software systems called name servers, which implement the Domain Name System (DNS). Name servers serve a collection of machines on the Internet and are operated by organizations that are responsible for the part of the Internet to which those machines are connected. All document requests from browsers are routed to the nearest name server. If the name server can convert the fully qualified domain name to an IP address, it does so. If it cannot, the name server sends the fully qualified domain name to another name server for conversion. Like IP addresses, fully qualified domain names must be unique. Figure 1.1 shows how fully qualified domain names requested by a browser are translated into IPs before they are routed to the appropriate Web server.

Figure 1.1 Domain name conversion
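
A toy Python class can model how name servers forward requests. This is a deliberate simplification that follows the description above and is not an implementation of DNS. Real resolvers also query root and top-level-domain servers, cache answers, and use a binary protocol over UDP or TCP. The `NameServer` class, the server names, and the address `192.0.2.7` (a documentation address) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class NameServer:
    """A toy name server that converts the names it knows and forwards the rest."""
    name: str
    records: dict[str, str] = field(default_factory=dict)
    forward_to: Optional["NameServer"] = None

    def resolve(self, fqdn: str, trail: Optional[list[str]] = None) -> tuple[str, list[str]]:
        """Return (IP address, names of the servers consulted, in order)."""
        trail = [] if trail is None else trail
        trail.append(self.name)
        if fqdn in self.records:
            return self.records[fqdn], trail          # this server can convert the name
        if self.forward_to is None:
            raise LookupError(f"no name server could convert {fqdn}")
        return self.forward_to.resolve(fqdn, trail)   # pass the name along

# Hypothetical setup: the nearest server knows one local name and forwards
# everything else to a server that knows the United Airlines address from the text.
upstream = NameServer("upstream", {"www.ual.com": "209.87.113.93"})
nearest = NameServer("nearest", {"movies.comedy.marxbros.com": "192.0.2.7"}, upstream)

print(nearest.resolve("movies.comedy.marxbros.com"))
print(nearest.resolve("www.ual.com"))
```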

One way to determine the IP address of a Web site is by using telnet on the fully qualified domain name. This approach is illustrated in Section 1.7.1.

By the mid-1980s, a collection of different protocols that run on top of TCP/IP had been developed to support a variety of Internet uses. Among these protocols, the most common were telnet, which was developed to allow a user on one computer on the Internet to log onto and use another computer on the Internet; File Transfer Protocol (ftp), which was developed to transfer files among computers on the Internet; Usenet, which was developed to serve as an electronic bulletin board; and mailto, which was developed to allow messages to be sent from the user of one computer on the Internet to other users of other computers on the Internet.

This variety of protocols, each with its own user interface and useful only for the purpose for which it was designed, restricted the growth of the Internet. Users were required to learn all the different interfaces to gain all the advantages of the Internet. Before long, however, a better approach was developed: the World Wide Web.

1.2 The World Wide Web

This section provides a brief introduction to the evolution of the World Wide Web.

1.2.1 Origins

In 1989, a small group of people led by Tim Berners-Lee at CERN (Conseil Européen pour la Recherche Nucléaire, or European Organization for Particle Physics) proposed a new protocol for the Internet, as well as a system of document access to use it. The intent of this new system, which the group named the World Wide Web, was to allow scientists around the world to use the Internet to exchange documents describing their work. The proposed new system was designed to allow a user anywhere on the Internet to search for and retrieve documents from databases on any number of different document-serving computers connected to the Internet. By late 1990, the basic ideas for the new system had been fully developed and implemented on a NeXT computer at CERN. In 1991, the system was ported to other computer platforms and released to the rest of the world.

For the form of its documents, the system used hypertext, which is text with embedded links to text in other documents to allow nonsequential browsing of textual material. The idea of hypertext had been developed earlier and had appeared in Xerox’s NoteCards and Apple’s HyperCard in the mid-1980s.

From here on, we will refer to the World Wide Web simply as “the Web.” The units of information on the Web have been referred to by several different names; among them, the most common are pages, documents, and resources. Perhaps the best of these is documents, although that seems to imply only text. Pages is widely used, but it is misleading in that Web units of information often have more than one of the kind of pages that make up printed media. There is some merit to calling these units resources, because that covers the possibility of nontextual information. This book will use documents and pages more or less interchangeably, but we prefer documents in most situations.

Documents are sometimes just text, usually with embedded links to other documents, but they often also include images, sound recordings, or other kinds of media. When a document contains nontextual information, it is called hypermedia.

In an abstract sense, the Web is a vast collection of documents, some of which are connected by links. These documents are accessed by Web browsers, introduced in Section 1.3, and are provided by Web servers, introduced in Section 1.4.

1.2.2 Web or Internet?

It is important to understand that the Internet and the Web are not the same thing. The Internet is a collection of computers and other devices connected by equipment that allows them to communicate with each other. The Web is a collection of software and protocols that has been installed on most, if not all, of the computers on the Internet. Some of these computers run Web servers, which provide documents, but most run Web clients, or browsers, which request documents from servers and display them to users. The Internet was quite useful before the Web was developed, and it is still useful without it. However, most users of the Internet now use it through the Web.

1.3 Web Browsers

When two computers communicate over some network, in many cases one acts as a client and the other as a server. The client initiates the communication, which is often a request for information stored on the server, which then sends that information back to the client. The Web, as well as many other systems, operates in this client-server configuration.

Documents provided by servers on the Web are requested by browsers, which are programs running on client machines. They are called browsers because they allow the user to browse the resources available on servers. The first browsers were text based: they were not capable of displaying graphic information, nor did they have a graphical user interface. This limitation effectively constrained the growth of the Web. In early 1993, things changed with the release of Mosaic, the first browser with a graphical user interface. Mosaic was developed at the National Center for Supercomputing Applications (NCSA) at the University of Illinois. Mosaic’s interface provided convenient access to the Web for users who were neither scientists nor software developers. The first release of Mosaic ran on UNIX systems using the X Window system. By late 1993, versions of Mosaic for Apple Macintosh and Microsoft Windows systems had been released. Finally, users of the computers connected to the Internet around the world had a powerful way to access anything on the Web anywhere in the world. The result of this power and convenience was an explosive growth in Web usage.

A browser is a client on the Web because it initiates the communication with a server, which waits for a request from the client before doing anything. In the simplest case, a browser requests a static document from a server. The server locates the document among its servable documents and sends it to the browser, which displays it for the user. However, more complicated situations are common. For example, the server may provide a document that requests input from the user through the browser. After the user supplies the requested input, it is transmitted from the browser to the server, which may use the input to perform some computation and then return a new document to the browser to inform the user of the results of the computation. Sometimes a browser directly requests the execution of a program stored on the server. The output of the program is then returned to the browser.

Although the Web supports a variety of protocols, the most common one is the Hypertext Transfer Protocol (HTTP). HTTP provides a standard form of communication between browsers and Web servers. Section 1.7 presents an introduction to HTTP.
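
The following Python sketch previews the message format described in Section 1.7. It builds the text of a minimal HTTP/1.1 GET request for one of the hypothetical `www.tunias.com` documents used in this chapter, then splits the request line the way a server would. The helper names are hypothetical, and a real browser sends many more header lines than these.

```python
def build_get_request(host: str, path: str) -> bytes:
    """Build the text of a minimal HTTP/1.1 GET request."""
    lines = [
        f"GET {path} HTTP/1.1",   # request line: method, resource path, protocol version
        f"Host: {host}",          # HTTP/1.1 requires a Host header
        "Connection: close",      # ask the server to close the connection after replying
        "",                       # an empty line ends the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")

def parse_request_line(raw: bytes) -> tuple[str, str, str]:
    """Split the first line of a request into (method, path, version)."""
    first_line = raw.split(b"\r\n", 1)[0].decode("ascii")
    method, path, version = first_line.split(" ")
    return method, path, version

request = build_get_request("www.tunias.com", "/petunias.html")
print(request.decode("ascii"))
print(parse_request_line(request))
```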

The most commonly used browsers are Microsoft Internet Explorer (IE), which runs only on PCs that use one of the Microsoft Windows operating systems, and Firefox, which is available in versions for several different computing platforms, including Windows, Mac OS, and Linux. Several other browsers are available, such as the close relatives of Firefox and Netscape Navigator, as well as Opera and Apple’s Safari. However, because the great majority of browsers now in use are either IE or Firefox, in this book we focus on those two.

1.4 Web Servers

Web servers are programs that provide documents to requesting browsers. Servers are slave programs: They act only when requests are made to them by browsers running on other computers on the Internet.

The most commonly used Web servers are Apache, which has been implemented for a variety of computer platforms, and Microsoft’s Internet Information Server (IIS), which runs under Windows operating systems. As of June 2009, there were over 75 million active Web hosts in operation, about 47 percent of which were Apache, about 25 percent of which were IIS, and the remainder of which were spread thinly over a large number of others. (The third-place server was qq.com, a product of a Chinese company, with almost 13 percent.)

1.4.1 Web Server Operation

Although having clients and servers is a natural consequence of information distribution, this configuration offers some additional benefits for the Web. On the one hand, serving information does not take a great deal of time. On the other hand, displaying information on client screens is time consuming. Because Web servers need not be involved in this display process, they can handle many clients. So, it is both a natural and an efficient division of labor to have a small number of servers provide documents to a large number of clients.

Web browsers initiate network communications with servers by sending them URLs (discussed in Section 1.5). A URL can specify one of two different things: the address of a data file stored on the server that is to be sent to the client, or a program stored on the server that the client wants executed, with the output of the program returned to the client.

All the communications between a Web client and a Web server use the standard Web protocol, Hypertext Transfer Protocol (HTTP), which is discussed in Section 1.7.

When a Web server begins execution, it informs the operating system under which it is running that it is now ready to accept incoming network connections through a specific port on the machine. While in this running state, the server runs as a background process in the operating system environment. A Web client, or browser, opens a network connection to a Web server, sends information requests and possibly data to the server, receives information from the server, and closes the connection. Of course, other machines exist between browsers and servers on the network, specifically network routers and domain-name servers. This section, however, focuses on just one part of Web communication: the server.

Simply put, the primary task of a Web server is to monitor a communications port on its host machine, accept HTTP commands through that port, and perform the operations specified by the commands. All HTTP commands include a URL, which includes the specification of a host server machine. When the URL is received, it is translated into either a file name (in which case the file is returned to the requesting client) or a program name (in which case the program is run and its output is sent to the requesting client). This process sounds pretty simple, but, as is the case in many other simple-sounding processes, a large number of complicating details are involved.
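
The file-or-program decision can be sketched in Python without any networking. In this sketch, the document store and the program table are in-memory dictionaries, and the programs are ordinary Python functions. The `/cgi-bin/` path prefix is an assumed convention. A real server reads files from disk and runs separate executables.

```python
from typing import Callable

# Hypothetical servable content held in memory instead of on disk.
DOCUMENTS = {"/petunias.html": "<p>All about petunias</p>"}

# Hypothetical programs, keyed by the URL path that requests their execution.
PROGRAMS: dict[str, Callable[[], str]] = {
    "/cgi-bin/greeting": lambda: "<p>Hello from a program</p>",
}

def handle(path: str) -> tuple[int, str]:
    """Translate a requested path into a program or a file; return (status, body)."""
    if path.startswith("/cgi-bin/"):
        program = PROGRAMS.get(path)
        if program is not None:
            return 200, program()        # run the program and send its output
    elif path in DOCUMENTS:
        return 200, DOCUMENTS[path]      # send the stored file unchanged
    return 404, "<p>Not Found</p>"

for requested in ("/petunias.html", "/cgi-bin/greeting", "/missing.html"):
    print(requested, handle(requested))
```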

All current Web servers have a common ancestry: the first two servers, developed at CERN in Europe and NCSA at the University of Illinois. Currently, the most common server configuration is Apache running on some version of UNIX.

1.4.2 General Server Characteristics

Most of the available servers share common characteristics, regardless of their origin or the platform on which they run. This section provides brief descriptions of some of these characteristics.

The file structure of a Web server has two separate directories. The root of one of these is called the document root. The file hierarchy that grows from the document root stores the Web documents to which the server has direct access and normally serves to clients. The root of the other directory is called the server root. This directory, along with its descendant directories, stores the server and its support software.

The files stored directly in the document root are those available to clients through top-level URLs. Typically, clients do not access the document root directly in URLs; rather, the server maps requested URLs to the document root, whose location is not known to clients. For example, suppose that the site name is www.tunias.com (not a real site, at least not yet), which we will assume to be a UNIX-based system. Suppose further that the document root is named topdocs and is stored in the /admin/web directory, making its address /admin/web/topdocs. A request for a file from a client with the URL http://www.tunias.com/petunias.html will cause the server to search for the file with the file path /admin/web/topdocs/petunias.html. Likewise, the URL http://www.tunias.com/bulbs/tulips.html will cause the server to search for the file with the address /admin/web/topdocs/bulbs/tulips.html.
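
The URL-to-file mapping can be sketched in Python using the document root from the example. The function name `url_to_file_path` is hypothetical. The sketch also normalizes `..` segments so that a request cannot climb out of the document root. Real servers must perform this check, but the text does not discuss it. The sketch does not map a bare `/` to a default file such as `index.html`.

```python
import posixpath
from urllib.parse import unquote, urlsplit

DOCUMENT_ROOT = "/admin/web/topdocs"  # the example document root from the text

def url_to_file_path(url: str, document_root: str = DOCUMENT_ROOT) -> str:
    """Map a requested URL onto a file path under the document root."""
    path = unquote(urlsplit(url).path)      # keep only the path part, decode %XX escapes
    # Normalize "." and ".." against a leading "/", so ".." can never rise above it.
    normalized = posixpath.normpath("/" + path.lstrip("/"))
    return posixpath.join(document_root, normalized.lstrip("/"))

print(url_to_file_path("http://www.tunias.com/petunias.html"))
print(url_to_file_path("http://www.tunias.com/bulbs/tulips.html"))
print(url_to_file_path("http://www.tunias.com/../../etc/passwd"))
```

Decoding `%XX` escapes before normalizing matters. Otherwise an encoded `%2e%2e` would slip past the `..` check.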

Many servers allow part of the servable document collection to be stored outside the directory at the document root. The secondary areas from which documents can be served are called virtual document trees. For example, the original configuration of a server might have the server store all its servable documents from the primary system disk on the server machine. Later, the collection of servable documents might outgrow that disk, in which case part of the collection could be stored on a secondary disk. This secondary disk might reside on the server machine or on some other machine on a local area network. To support this arrangement, the server is configured to direct requests for URLs with a particular file path to a storage area separate from the document-root directory. Sometimes files with different types of content, such as images, are stored outside the document root.
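
In effect, virtual document trees are a prefix table that the server consults before the document root. The sketch below uses hypothetical URL prefixes and storage paths and picks the longest matching prefix. Apache provides this kind of mapping through its `Alias` directive. For brevity, the sketch omits the `..` normalization that a real server would also apply.

```python
import posixpath

DOCUMENT_ROOT = "/admin/web/topdocs"

# Hypothetical virtual document trees: URL prefix -> storage area outside the root.
VIRTUAL_TREES = {
    "/images/": "/disk2/images/",                 # e.g. a secondary disk on the server
    "/archive/": "/mnt/lan-share/archive/",       # e.g. a disk on another LAN machine
}

def resolve_path(url_path: str) -> str:
    """Find where a URL path is stored, trying the longest virtual-tree prefix first."""
    for prefix in sorted(VIRTUAL_TREES, key=len, reverse=True):
        if url_path.startswith(prefix):
            return VIRTUAL_TREES[prefix] + url_path[len(prefix):]
    # No virtual tree matched, so the file lives under the document root.
    return posixpath.join(DOCUMENT_ROOT, url_path.lstrip("/"))

for p in ("/bulbs/tulips.html", "/images/tulip.png", "/archive/1999/bulbs.html"):
    print(p, "->", resolve_path(p))
```

Trying the longest prefix first means a more specific tree, such as a hypothetical `/images/large/`, would win over `/images/` if both were configured.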

Early servers provided few services other than the basic process of returning requested files or the output of programs whose execution had been requested. The list of additional services has grown steadily over the years. Contemporary servers are large and complex systems that provide a wide variety of client services. Many servers can support more than one site on a computer, potentially reducing the cost of each site and making their maintenance more convenient. Such secondary hosts are called virtual hosts.
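
One common way for a single server to tell its virtual hosts apart is the `Host` header, which HTTP/1.1 browsers send with every request. The sketch below selects a document root from that header. The site names and directories are hypothetical.

```python
# Hypothetical virtual hosts served by one machine: site name -> document root.
VIRTUAL_HOSTS = {
    "www.tunias.com": "/admin/web/topdocs",
    "www.marxbros.com": "/admin/web/marxdocs",
}
DEFAULT_ROOT = "/admin/web/default"  # used when the Host header names no known site

def document_root_for(host_header: str) -> str:
    """Choose a document root from an HTTP Host header value."""
    # Drop an optional ":port" suffix; host names are case-insensitive.
    site = host_header.split(":", 1)[0].strip().lower()
    return VIRTUAL_HOSTS.get(site, DEFAULT_ROOT)

print(document_root_for("www.tunias.com"))
print(document_root_for("WWW.MarxBros.com:8080"))
```

The simple split on `:` does not handle bracketed IPv6 literals such as `[::1]:8080`. That is acceptable for a sketch keyed on site names.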

Some servers can serve documents that are in the document root of other machines on the Web; in this case, they are called proxy servers.

Although Web servers were originally designed to support only the HTTP protocol, many now support ftp, gopher, news, and mailto. In addition, nearly all Web servers can interact with database systems through Common Gateway Interface (CGI) programs and server-side scripts.

1.4.3 Apache

Apache began as the NCSA server, httpd, with some added features. The name Apache has nothing to do with the Native American tribe of the same name. Rather, it came from the nature of its first version, which was a patchy version of the httpd server. As seen in the usage statistics given at the beginning of this section, Apache is the most widely used Web server. The primary reasons are as follows: Apache is an excellent server because it is both fast and reliable. Furthermore, it is open-source software, which means that it is free and is managed by a large team of volunteers, a process that efficiently and effectively maintains the system. Finally, it is one of the best available servers for UNIX-based systems, which are the most popular for Web servers.

Apache is capable of providing a long list of services beyond the basic process of serving documents to clients. When Apache begins execution, it reads its configuration information from a file and sets its parameters to operate accordingly. A new copy of Apache includes default configuration information for a “typical” operation. The site manager modifies this configuration information to fit his or her particular needs and tastes.

For historical reasons, there are three configuration files in an Apache server: httpd.conf, srm.conf, and access.conf. Only one of these, httpd.conf, actually stores the directives that control an Apache server’s behavior. The other two point to httpd.conf, which is the file that contains the list of directives that specify the server’s operation. These directives are described at http://httpd.apache.org/docs/2.2/mod/quickreference.html.
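
The sketch below shows the step of reading a configuration file and applying it on top of defaults, for the simplest kind of directive: a name followed by a value on one line. `ServerRoot`, `DocumentRoot`, and `Listen` are real Apache directive names. The default values and the `read_directives` helper are assumptions for illustration. Actual `httpd.conf` files also contain section blocks such as `<Directory>`, which this sketch does not handle.

```python
# Hypothetical defaults shipped with a fresh installation.
DEFAULTS = {
    "ServerRoot": "/usr/local/apache2",
    "DocumentRoot": "/usr/local/apache2/htdocs",
    "Listen": "80",
}

def read_directives(text: str, defaults: dict[str, str]) -> dict[str, str]:
    """Apply one-line 'Name value' directives on top of a copy of the defaults."""
    settings = dict(defaults)
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue                               # skip blank lines and comments
        parts = line.split(None, 1)                # name, then the rest of the line
        value = parts[1] if len(parts) > 1 else ""
        settings[parts[0]] = value.strip().strip('"')  # drop surrounding quotes
    return settings

# A site manager's edits, written in httpd.conf style.
SITE_CONFIG = """
# Serve the tunias documents on port 8080
DocumentRoot "/admin/web/topdocs"
Listen 8080
"""

settings = read_directives(SITE_CONFIG, DEFAULTS)
print(settings)
```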

1.4.4 IIS

Although Apache has been ported to the Windows platforms, it is not the most popular server on those systems. Because the Microsoft IIS server is supplied as part of Windows, and because it is a reasonably good server, most Windows-based Web servers use IIS. Apache and IIS provide similar varieties of services.

From the point of view of the site manager, the most important difference between Apache and IIS is that Apache is controlled by a configuration file that is edited by the manager to change Apache’s behavior. With IIS, server behavior is modified by changes made through a window-based management program, named the IIS snap-in, which controls both IIS and ftp. This program allows the site manager to set parameters for the server.

Under Windows XP and Vista, the IIS snap-in is accessed by going to Control Panel, Administrative Tools, and IIS Admin. Clicking on this last selection takes you to a window that allows starting, stopping, or pausing IIS. This same window allows IIS parameters to be changed when the server has been stopped.

1.5 Uniform Resource Locators

Uniform (or universal) resource locators (URLs) are used to identify documents (resources) on the Internet. There are many different kinds of resources, identified by different forms of URLs.
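
The following Python sketch shows how the parts of a URL identify a resource. It applies the standard `urllib.parse.urlsplit` function to URLs that appear earlier in this chapter. The helper name `describe_url` is hypothetical.

```python
from urllib.parse import urlsplit

def describe_url(url: str) -> tuple[str, str, str]:
    """Return (scheme, host, path): which protocol, which machine, which resource."""
    parts = urlsplit(url)
    return parts.scheme, parts.hostname or "", parts.path or "/"

# URLs that appear earlier in this chapter.
for url in (
    "http://www.tunias.com/bulbs/tulips.html",
    "ftp://ftp.isi.edu/in-notes/rfc2460.txt",
    "http://209.87.113.93",
):
    print(describe_url(url))
```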
