422 A. Ponsich et al. / Chemical Engineering and Processing 47 (2008) 420–434

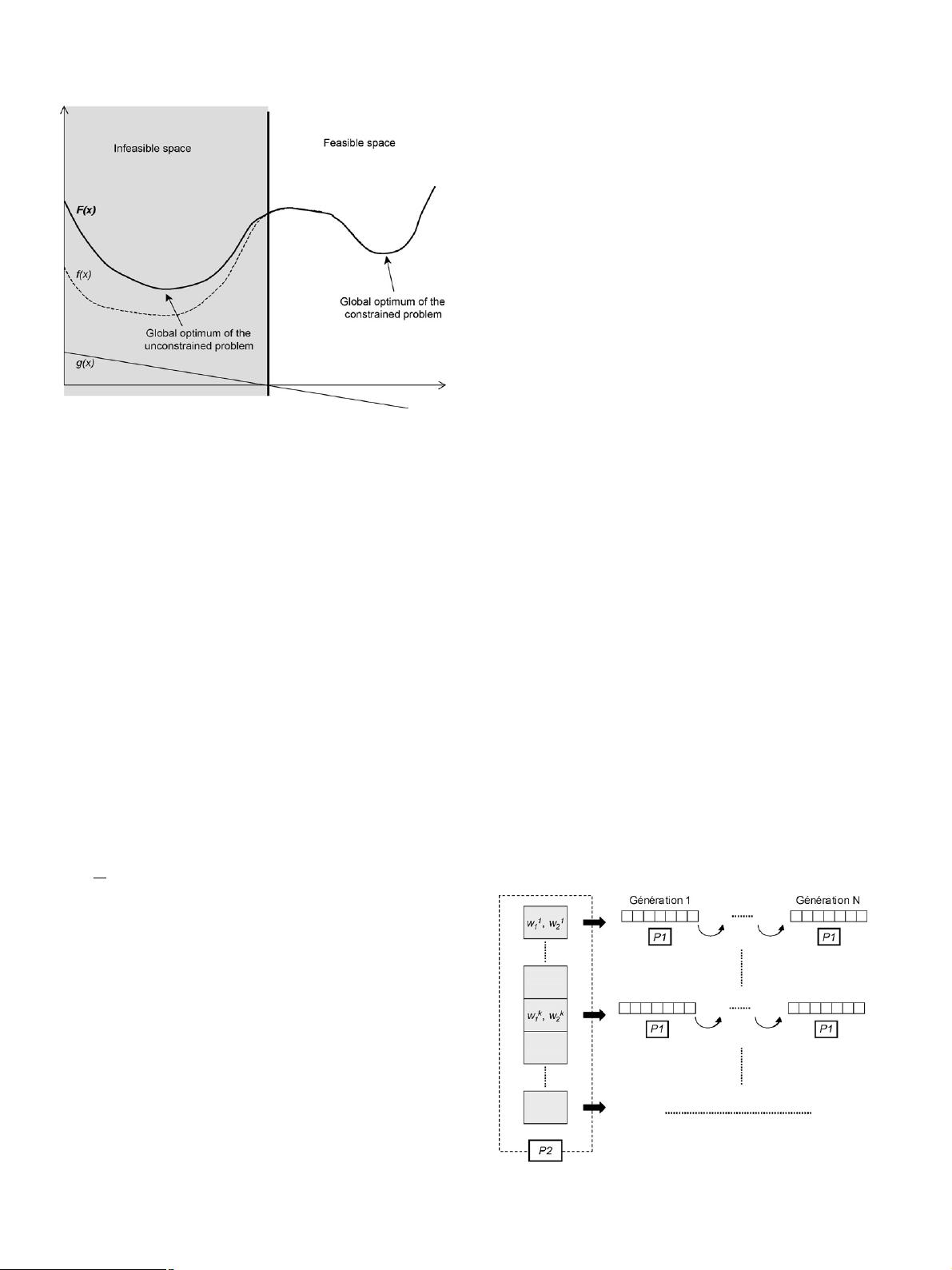

Fig. 1. Too weak penalty factor.

According to these principles, a great variety of penalisa-

tion methods were implemented, some of them are recalled in

[14]. The simplest is the static penalty: a numerical value, that

will not vary during the whole search, is allocated to each fac-

tor R

j

. Obviously, the drawback is that as many parameters as

existing constraints have to be tuned without any known method-

ology. Normalizing the constraints enables however to reduce

the number of parameters to be chosen from m to 1.

A modified static penalty technique is proposed in [15],in

which violation levels are set for each constraint. So considering

l levels in a problem with m constraints, it was shown that the

method needs the tuning of m(2l + 1) parameters (see [14] for

details).

Another proposal is a dynamic penalty strategy, for which R

j

is written as (C × t)

a

where t is the generation number. Here,

two parameters must be tuned, i.e. C and a. Common values are

0.5 and 2, respectively. Thus, this method enables to increase

the pressure on infeasible solutions along the search. A similar

effect can be obtained with a method presenting an analogy with

Simulated Annealing:

R

j

=

1

2t

(3)

where τ is a decreasing temperature. It is necessary to determine

initial and final temperatures, τ

i

and τ

f

, as well as a cooling

scheme for τ. This technique has two special features. First, it

involves a difference between linear and non-linear constraints.

Feasibility as regard with the former is maintained by specific

operators, so that only the latter has to be included in the anneal-

ing penalty term. In addition, the initial population is composed

of clones of a same feasible individual that respects linear con-

straints [14].

Different approaches, called adaptive penalties, are based on

learning from the population behaviour in the previous gener-

ations. In [16], the penalty factor decreases (resp. increases) if

the best individual was always feasible (resp. infeasible) during

the k last generations. For undeterminated cases, the factor value

is kept unchanged. This methodology imposes the tuning of the

initial value for the penalty factor and of the number of learning

generations k.

New techniques now rest on self-adaptive penalty

approaches, which also learn from the current run, without any

parameter tuning. In [13], the constraints and the objective func-

tion are first normalized. Then, the method consists in computing

the penalty factor for constraint j at generation q as the product

of the factor at generation q − 1 with a coefficient depending on

the ratio of individuals violating constraint j at generation q.If

this ratio is fewer to 50%, then the coefficient is inferior to 1

in order to favour individuals located in the infeasible side of

the boundary. On the contrary, if the feasible individuals num-

ber is weak, the value increases up to 1 to have the population

heading for the inside part of the feasible region. This oper-

ating mode enables to concentrate the search on the boundary

built by each constraint, i.e. where the global optimum is likely

to be located. The initial value is the ratio of the interquartile

range of the objective function by the interquartile range of the

considered constraint at first generation, which implicitly car-

ries out normalization. No parameter is thus necessary in this

method.

Another kind of self-adaptive penalty is proposed by Coello

Colleo [17], but this one is based on the principle of co-evolution.

In addition to the classical population P1 coding the tackled

problem, the method considers a population P2 representing two

penalty coefficients that enable to evaluate population P1 (w

1

for

the amount of violation of all constraints and w

2

for the number

of violated constraints). Thus, each individual of P1 is evaluated

as many times as there are individuals in P2. Then, P1 evolves

during a fixed number of generations and each individual of P2,

i.e. each set of two penalty factors, is evaluated. This mechanism

is depicted in Fig. 2. Basically, the evaluation is calculated as the

average of all objective functions of P1 evaluated by each indi-

vidual of P2. Then P2 evolves like in any GA process, given that

one generation for P2 is equivalent to a complete evolution of P1.

The evident drawback is the huge number of objective function

evaluations, making this method computationally expensive. In

addition, co-evolution involves the introduction and tuning of a

new GAs parameters set: population size, maximum generation

number, etc.

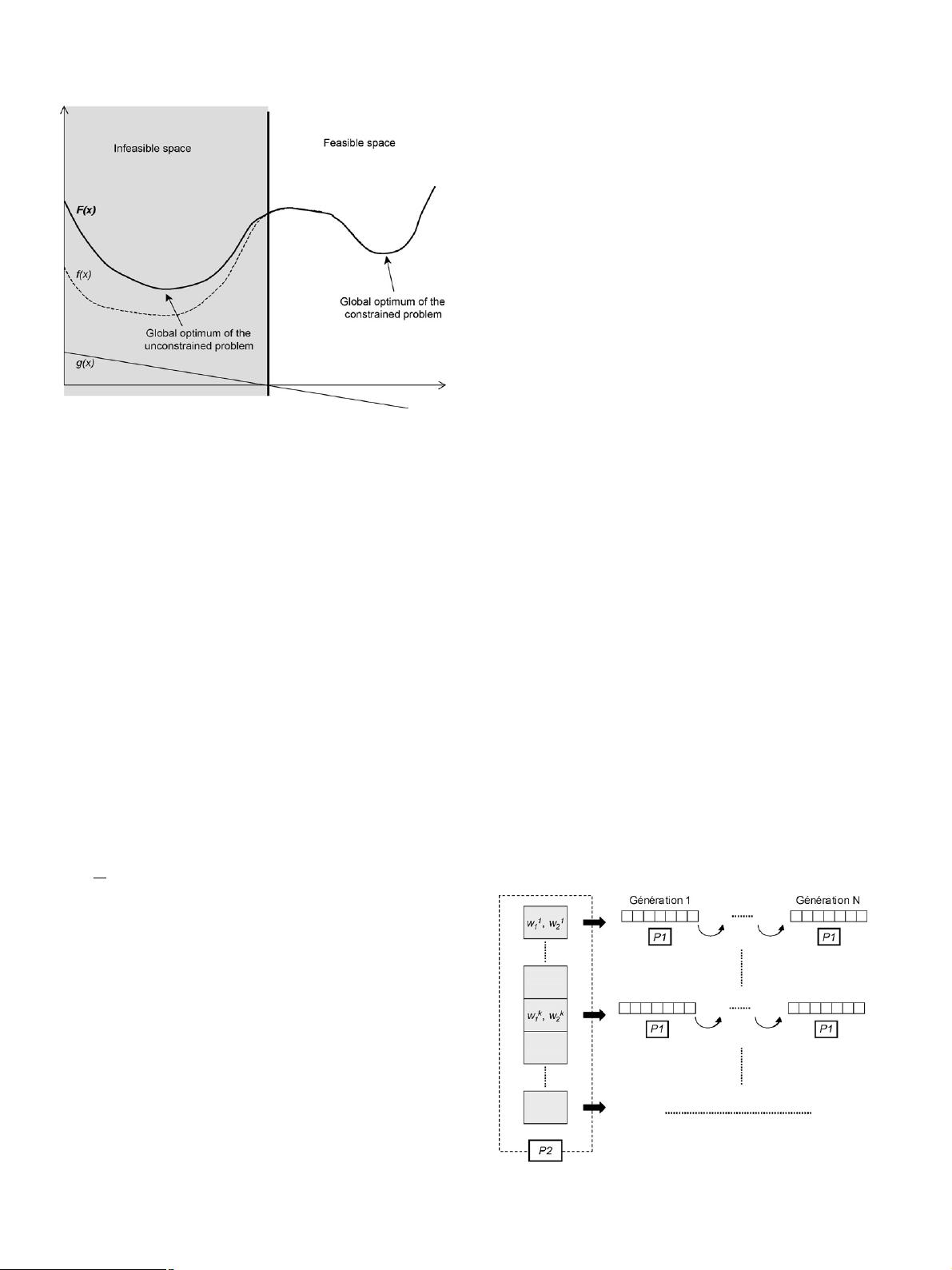

Fig. 2. Self-adaptive penalty by co-evolution [17].