Published as a conference paper at ICLR 2020

CIFAR-10: It is a standard image classification dataset consisting of 60K 32×32 colour images in 10 classes. The original training set contains 50K images, with 5K images per class, and the original test set contains 10K images, with 1K images per class. Because we need a validation set, we split the 50K original training images into two groups of 25K images each, with both groups covering all 10 classes. We use the first group as the new training set and the second group as the validation set.
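The CIFAR-10 split described above can be sketched as follows. The text does not say how images are assigned to the two groups, so this minimal sketch assumes a class-balanced random split (2.5K images per class in each group) drawn with a fixed seed; the helper name `split_cifar10_train` is hypothetical.

```python
import numpy as np


def split_cifar10_train(labels, num_classes=10, seed=0):
    """Split training indices into two equal-size, class-balanced groups.

    Assumption (not stated in the paper): each class is halved, so both
    groups receive the same number of images per class.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train_idx, valid_idx = [], []
    for c in range(num_classes):
        idx = np.flatnonzero(labels == c)  # indices of all images of class c
        rng.shuffle(idx)                   # random order within the class
        half = len(idx) // 2
        train_idx.append(idx[:half])       # first group -> new training set
        valid_idx.append(idx[half:])       # second group -> validation set
    return np.sort(np.concatenate(train_idx)), np.sort(np.concatenate(valid_idx))


# Stand-in labels with the CIFAR-10 layout: 5K training images for each of 10 classes.
labels = np.repeat(np.arange(10), 5000)
train_idx, valid_idx = split_cifar10_train(labels)
print(len(train_idx), len(valid_idx))  # 25000 25000
```

With a fixed seed the split is reproducible, which matters if other users are to evaluate on exactly the same validation images.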

CIFAR-100: This dataset is similar to CIFAR-10. It has the same format as CIFAR-10 (60K 32×32 colour images), but each image is labeled with one of 100 fine-grained classes. The original training set of CIFAR-100 has 50K images, and the original test set has 10K images. We randomly split the original test set into two groups of equal size (5K images per group). One group is used as the validation set, and the other as the new test set.
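A minimal sketch of this random test-set split, assuming a seeded random permutation of the 10K test indices and that the first half becomes the validation set (the paper specifies neither the seed nor which half is which); `split_cifar100_test` is a hypothetical helper.

```python
import numpy as np


def split_cifar100_test(num_test=10_000, seed=0):
    """Randomly split test indices into two disjoint groups of equal size."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(num_test)   # random order of all test indices
    half = num_test // 2
    valid_idx = np.sort(perm[:half])   # one group -> validation set
    test_idx = np.sort(perm[half:])    # the other group -> new test set
    return valid_idx, test_idx


valid_idx, test_idx = split_cifar100_test()
print(len(valid_idx), len(test_idx))  # 5000 5000
```

Note that a plain permutation, matching the "randomly split" wording, does not guarantee an equal number of images per class in each half.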

ImageNet-16-120: We build ImageNet-16-120 from the down-sampled variant of ImageNet (ImageNet16×16). As shown by Chrabaszcz et al. (2017), down-sampling ImageNet images can substantially reduce the computational cost of finding optimal hyper-parameters for some classical models while yielding similar search results. Chrabaszcz et al. (2017) down-sampled the original ImageNet to 16×16 pixels to form ImageNet16×16, from which we select all images with labels ∈ [1, 120] to construct ImageNet-16-120. In sum, ImageNet-16-120 contains 151.7K training images, 3K validation images, and 3K test images in 120 classes.
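The class selection can be sketched as a simple label filter. This assumes labels are stored as 1-based integers (as the interval [1, 120] suggests) and that the kept labels are shifted to 0-based class indices 0 to 119 for training; both the remapping and the helper name `select_imagenet16_120` are assumptions for illustration.

```python
import numpy as np


def select_imagenet16_120(images, labels, max_label=120):
    """Keep only samples whose 1-based label lies in [1, max_label].

    Assumption: labels are 1-based integers; after filtering they are
    shifted to 0-based class indices (0 .. max_label - 1).
    """
    labels = np.asarray(labels)
    mask = (labels >= 1) & (labels <= max_label)
    return images[mask], labels[mask] - 1


# Toy stand-in: 8 random 16x16 RGB images with 1-based labels.
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(8, 16, 16, 3), dtype=np.uint8)
labels = np.array([1, 5, 120, 121, 300, 1000, 60, 2])
sub_images, sub_labels = select_imagenet16_120(images, labels)
print(sub_images.shape, sub_labels.tolist())  # (5, 16, 16, 3) [0, 4, 119, 59, 1]
```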

By default, in this paper, “the training set”, “the validation set”, and “the test set” refer to the new training, validation, and test sets, respectively.

2.3 ARCHITECTURE PERFORMANCE

Training Architectures. In order to unify the performance of every architecture, we give the per-

formance of every architecture in our search space. In our NAS-Bench-201, we follow previous

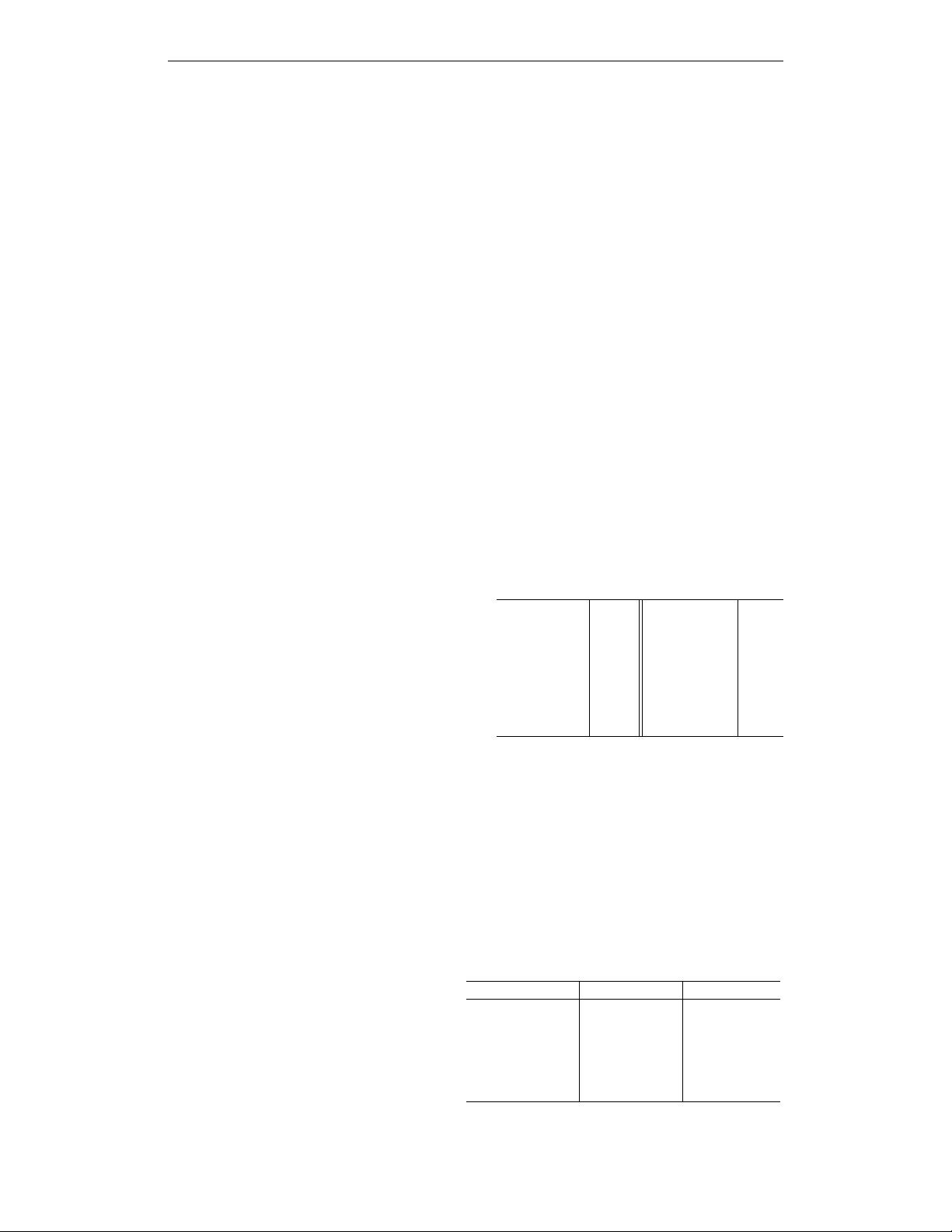

Table 1: The training hyper-parameter set H

†

.

optimizer SGD initial LR 0.1

Nesterov X ending LR 0

momentum 0.9 LR schedule cosine

weight decay 0.0005 epoch 200

batch size 256 initial channel 16

V 4 N 5

random flip p=0.5 random crop X

normalization X

literature to set up the hyper-parameters and train-

ing strategies (Zoph et al., 2018; Loshchilov &

Hutter, 2017; He et al., 2016). We train each ar-

chitecture with the same strategy, which is shown

in Table 1. For simplification, we denote all hyper-

parameters for training a model as a set H, and we

use H

†

to denote the values of hyper-parameter that

we use. Specifically, we train each architecture via

Nesterov momentum SGD, using the cross-entropy

loss for 200 epochs in total. We set the weight de-

cay as 0.0005 and decay the learning rate from 0.1 to 0 with a cosine annealing (Loshchilov &

Hutter, 2017). We use the same H

†

on different datasets, except for the data augmentation which is

slightly different due to the image resolution. On CIFAR, we use the random flip with probability

of 0.5, the random crop 32×32 patch with 4 pixels padding on each border, and the normalization

over RGB channels. On ImageNet-16-120, we use a similar strategy but random crop 16×16 patch

with 2 pixels padding on each border. Apart from using H

†

for all datasets, we also use a different

hyper-parameter set H

‡

for CIFAR-10. It is similar to H

†

but its total number of training epochs

is 12. In this way, we could provide bandit-based algorithms (Falkner et al., 2018; Li et al., 2018)

more options for the usage of short training budget (see more details in appendix).
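As a rough sketch of the training recipe above, H† and H‡ can be written as plain configuration dictionaries, together with the cosine learning-rate schedule and the flip-and-crop augmentation. The dictionary keys, the per-epoch (rather than per-iteration) schedule granularity, the zero-valued padding, and the helper names are assumptions for illustration, not the benchmark's actual code; the RGB normalization step is omitted because its statistics are not given here.

```python
import math

import numpy as np

# H-dagger: training hyper-parameters from Table 1 (key names are illustrative).
H_DAGGER = {
    "optimizer": "SGD", "nesterov": True, "momentum": 0.9,
    "weight_decay": 5e-4, "batch_size": 256,
    "initial_lr": 0.1, "ending_lr": 0.0, "lr_schedule": "cosine",
    "epochs": 200, "initial_channel": 16,
}
# H-double-dagger: identical except for the shorter 12-epoch budget
# (assumption: the cosine schedule then spans those 12 epochs).
H_DDAGGER = {**H_DAGGER, "epochs": 12}


def cosine_lr(epoch, hp):
    """Cosine annealing from initial_lr (epoch 0) to ending_lr (last epoch)."""
    t = epoch / hp["epochs"]
    lo, hi = hp["ending_lr"], hp["initial_lr"]
    return lo + 0.5 * (hi - lo) * (1 + math.cos(math.pi * t))


def augment(image, pad, rng):
    """Random horizontal flip (p=0.5), then a random crop after padding.

    image: H x W x C array. pad=4 for 32x32 CIFAR, pad=2 for 16x16
    ImageNet-16-120. Assumption: borders are padded with zeros.
    """
    h, w = image.shape[:2]
    if rng.random() < 0.5:
        image = image[:, ::-1]  # flip along the width axis
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)))
    top = rng.integers(0, 2 * pad + 1)   # random crop offset, rows
    left = rng.integers(0, 2 * pad + 1)  # random crop offset, columns
    return padded[top:top + h, left:left + w]


print([round(cosine_lr(e, H_DAGGER), 4) for e in (0, 100, 200)])  # [0.1, 0.05, 0.0]
rng = np.random.default_rng(0)
print(augment(np.zeros((32, 32, 3)), pad=4, rng=rng).shape)  # (32, 32, 3)
```

Deriving H‡ from H† with a single override keeps the two settings from drifting apart, which mirrors the statement that they differ only in the number of epochs.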

Metrics. We train each architecture with different random seeds on different datasets. We evaluate

each architecture A after every training epoch. NAS-Bench-201 provides the training, validation,

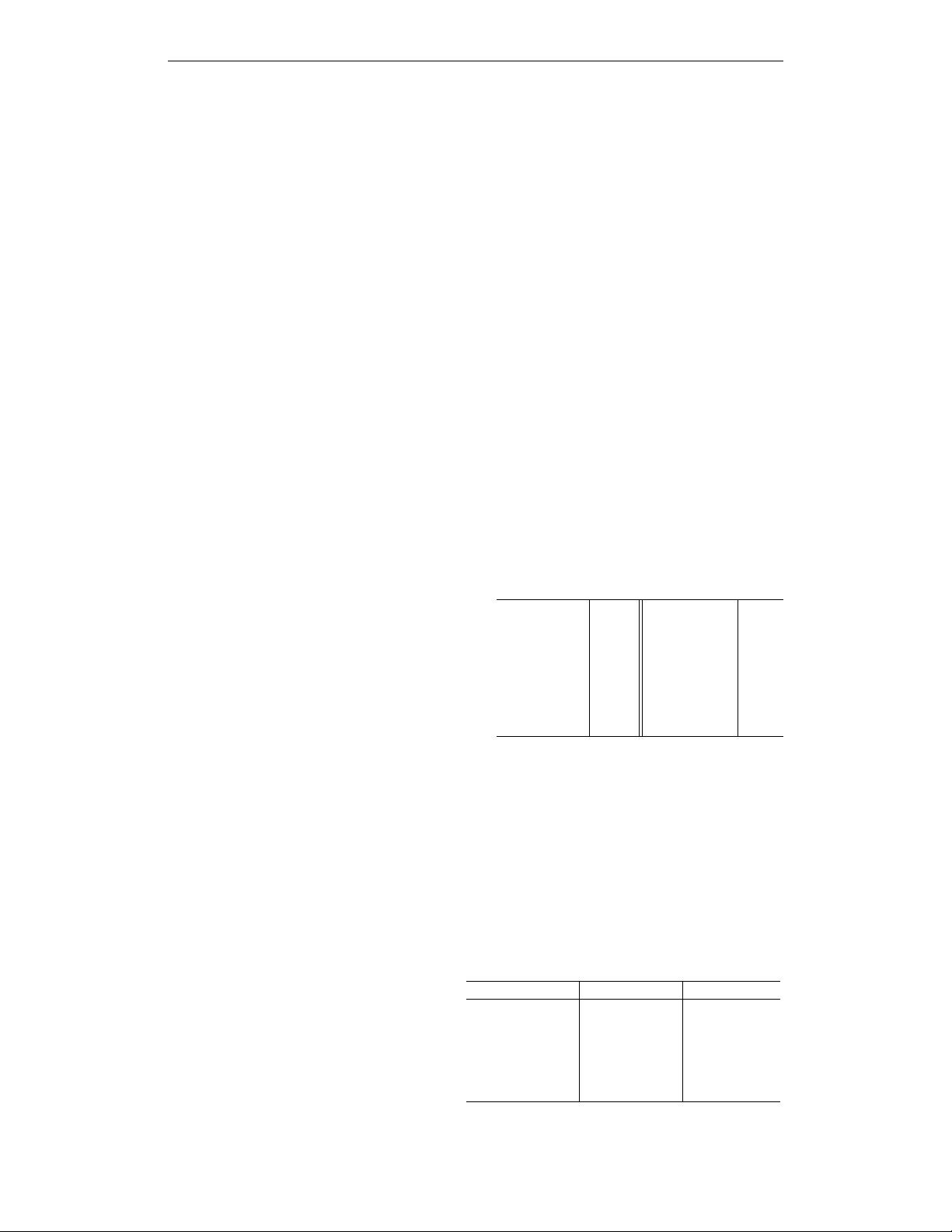

Table 2: NAS-Bench-201 provides the following

metrics with H

†

. ‘Acc.’ means accuracy.

Dataset Train Loss/Acc. Eval Loss/Acc.

CIFAR-10 train set valid set

CIFAR-10 train+valid set test set

CIFAR-100 train set valid set

CIFAR-100 train set test set

ImageNet-16-120 train set valid set

ImageNet-16-120 train set test set

and test loss as well as accuracy. We show the

supported metrics on different datasets in Ta-

ble 2. Users can easily use our API to query

the results of each trial of A, which has neg-

ligible computational costs. In this way, re-

searchers could significantly speed up their

searching algorithm on these datasets and fo-

cus solely on the essence of NAS.
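To illustrate why such a query costs next to nothing, the following sketch stores precomputed per-epoch metrics in an in-memory dictionary and encodes the train/eval split pairs of Table 2. It is not the NAS-Bench-201 API: the class `ToyBenchmark`, its methods, the record layout, the architecture name `"arch-0"`, and the metric values are all hypothetical placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Training split used for each (dataset, evaluation split) pair, following Table 2.
TRAIN_SPLIT: Dict[Tuple[str, str], str] = {
    ("CIFAR-10", "valid"): "train",
    ("CIFAR-10", "test"): "train+valid",
    ("CIFAR-100", "valid"): "train",
    ("CIFAR-100", "test"): "train",
    ("ImageNet-16-120", "valid"): "train",
    ("ImageNet-16-120", "test"): "train",
}

Key = Tuple[str, str, str, int, int]  # (arch, dataset, eval split, seed, epoch)


@dataclass
class ToyBenchmark:
    """Hypothetical in-memory store of precomputed per-epoch metrics."""
    records: Dict[Key, Dict[str, float]] = field(default_factory=dict)

    def add(self, arch, dataset, split, seed, epoch, metrics):
        if (dataset, split) not in TRAIN_SPLIT:
            raise KeyError(f"{dataset} has no {split!r} metrics in Table 2")
        self.records[(arch, dataset, split, seed, epoch)] = metrics

    def query(self, arch, dataset, split, seed, epoch):
        # A dictionary lookup: no training or evaluation runs at query time.
        return self.records[(arch, dataset, split, seed, epoch)]


bench = ToyBenchmark()
# Dummy placeholder numbers, not real NAS-Bench-201 results.
bench.add("arch-0", "CIFAR-100", "valid", seed=0, epoch=200,
          metrics={"loss": 1.0, "acc": 50.0})
print(bench.query("arch-0", "CIFAR-100", "valid", 0, 200)["acc"])  # 50.0
print(TRAIN_SPLIT[("CIFAR-10", "test")])  # train+valid
```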

We list the training and evaluation losses and accuracies over different splits on four datasets in Table 2. On CIFAR-10, we train the model on the training set and evaluate it on the validation set. We also train the model on the training and validation sets and
