A Discriminatively Trained, Multiscale, Deformable Part Model

Pedro Felzenszwalb

University of Chicago

pff@cs.uchicago.edu

David McAllester

Toyota Technological Institute at Chicago

mcallester@tti-c.org

Deva Ramanan

TTI-C and UC Irvine

dramanan@ics.uci.edu

Abstract

This paper describes a discriminatively trained, multi-

scale, deformable part model for object detection. Our sys-

tem achieves a two-fold improvement in average precision

over the best performance in the 2006 PASCAL person de-

tection challenge. It also outperforms the best results in the

2007 challenge in ten out of twenty categories. The system

relies heavily on deformable parts. While deformable part

models have become quite popular, their value had not been

demonstrated on difficult benchmarks such as the PASCAL

challenge. Our system also relies heavily on new methods

for discriminative training. We combine a margin-sensitive

approach for data mining hard negative examples with a

formalism we call latent SVM. A latent SVM, like a hid-

den CRF, leads to a non-convex training problem. How-

ever, a latent SVM is semi-convex and the training prob-

lem becomes convex once latent information is specified for

the positive examples. We believe that our training meth-

ods will eventually make possible the effective use of more

latent information such as hierarchical (grammar) models

and models involving latent three dimensional pose.

1. Introduction

We consider the problem of detecting and localizing ob-

jects of a generic category, such as people or cars, in static

images. We have developed a new multiscale deformable

part model for solving this problem. The models are trained

using a discriminative procedure that only requires bound-

ing box labels for the positive examples. Using these mod-

els we implemented a detection system that is both highly

efficient and accurate, processing an image in about 2 sec-

onds and achieving recognition rates that are significantly

better than previous systems.

Our system achieves a two-fold improvement in average

precision over the winning system [5] in the 2006 PASCAL

person detection challenge. The system also outperforms

the best results in the 2007 challenge in ten out of twenty

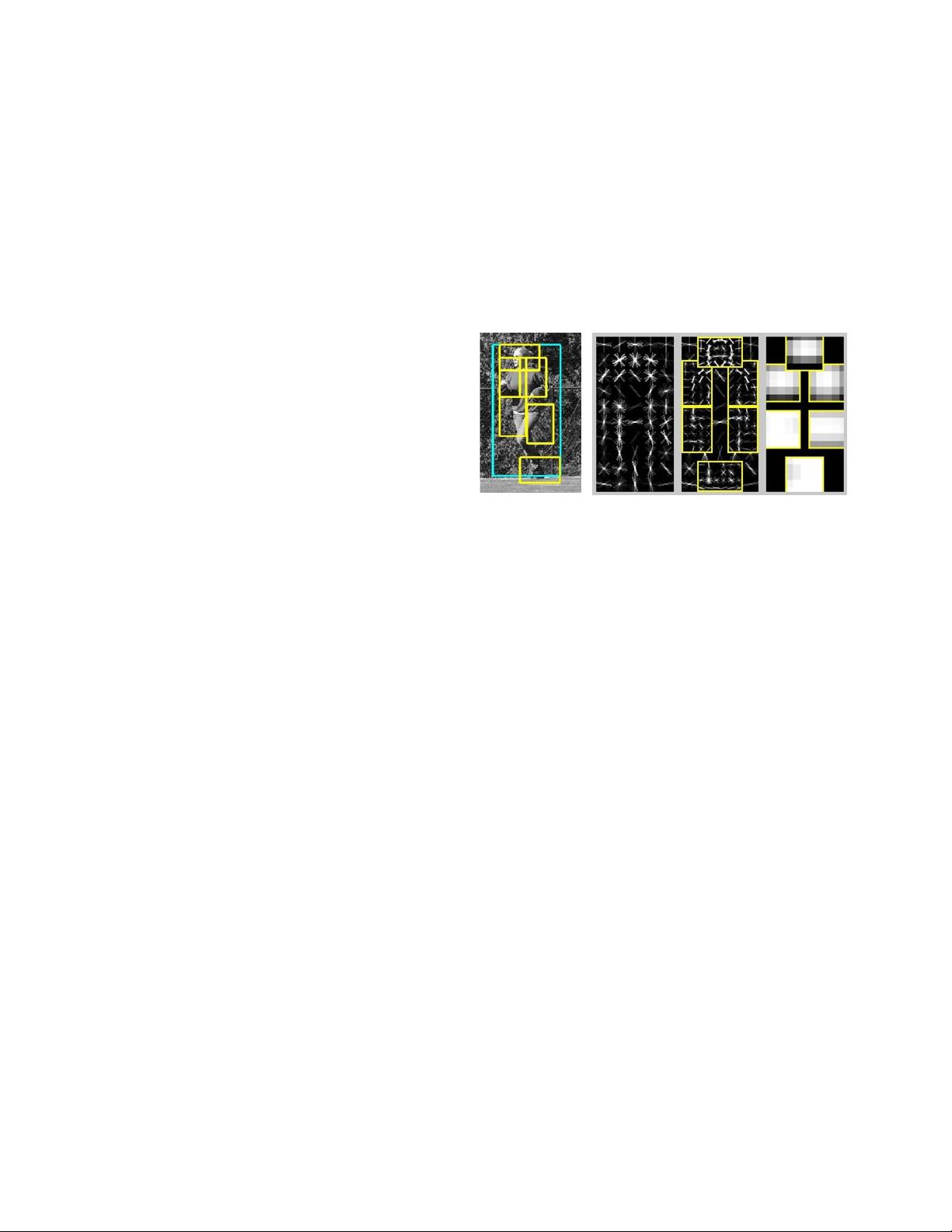

object categories. Figure 1 shows an example detection ob-

tained with our person model.

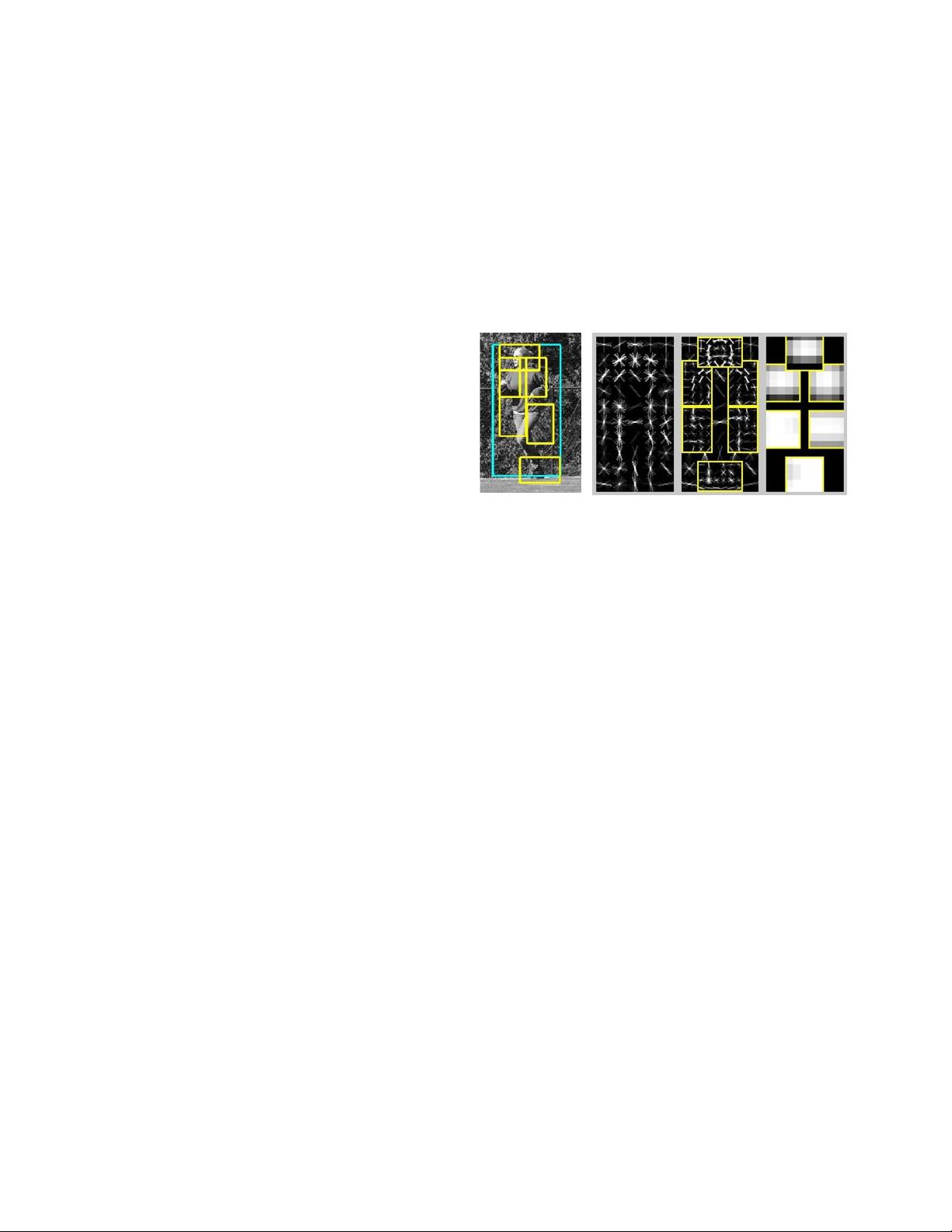

Figure 1. Example detection obtained with the person model. The

model is defined by a coarse template, several higher resolution

part templates and a spatial model for the location of each part.

The notion that objects can be modeled by parts in a de-

formable configuration provides an elegant framework for

representing object categories [1–3, 6, 10, 12, 13,15,16, 22].

While these models are appealing from a conceptual point

of view, it has been difficult to establish their value in prac-

tice. On difficult datasets, deformable models are often out-

performed by “conceptually weaker” models such as rigid

templates [5] or bag-of-features [23]. One of our main goals

is to address this performance gap.

Our models include both a coarse global template cov-

ering an entire object and higher resolution part templates.

The templates represent histogram of gradient features [5].

As in [14, 19, 21], we train models discriminatively. How-

ever, our system is semi-supervised, trained with a max-

margin framework, and does not rely on feature detection.

We also describe a simple and effective strategy for learn-

ing parts from weakly-labeled data. In contrast to computa-

tionally demanding approaches such as [4], we can learn a

model in 3 hours on a single CPU.

Another contribution of our work is a new methodology

for discriminative training. We generalize SVMs for han-

dling latent variables such as part positions, and introduce a

new method for data mining “hard negative” examples dur-

ing training. We believe that handling partially labeled data

is a significant issue in machine learning for computer vi-

sion. For example, the PASCAL dataset only specifies a

bounding box for each positive example of an object. We

treat the position of each object part as a latent variable. We

1