S. Bartelmaos, K. Abed-Meraim / Digital Signal Processing 21 (2011) 667–678 669

dV(t)

dt

=−2 tr

I −W

T

(t)W(t)

dW

T

(t)W(t)

dt

.

(9)

By definition [15], the ordinary differential equation associated

with the updating rule:

W(t +1) =W(t) − β f

W(t), x(t)

(10)

in the case where x(t) is a stationary process, is

dW(t)

dt

=E

f

W(t), x(t)

. (11)

Thus, for MCA-OJA, the ODE associated with (5) is

dW(t)

dt

=−CW(t)L +W(t)LW

T

(t)CW(t), (12)

which leads to

dV(t)

dt

=2 tr

I −W

T

(t)W(t)

2

LW

T

(t)CW(t)

+

W

T

(t)CW(t)L

.

(13)

This derivative may be positive-valued and hence lim

t→∞

V (t) = 0.

This can be seen easily in the MSA case where L

=I or for example

if we consider a weight matrix that span the minor subspace with-

out being strictly orthonormal (i.e. W

T

(t)W(t) = I + D(t) where

D

(t) is a

p

× p

diagonal matrix). We get in that case

dV(t)

dt

=4 tr

D

2

(t)LW

T

(t)CW(t)

0. (14)

A similar analysis can be done for MCA-FRANS. This analysis justi-

fies the proposal for using the orthonormalization approach intro-

duced next.

3. Orthogonal MCA algorithms

3.1. MCA orthogonal OJA (MCA-OOJA)

We propose here to stabilize the previous gradient-like algo-

rithms using orthogonalization of the weight matrix at each step.

Note that, besides guaranteeing the algorithm’s convergence, or-

thogonality is an important property that is desired in many sub-

space based estimation methods [19]. To this end, we set (using

informal notation):

W(i) := W(i)

W

H

(i)W(i)

−1/2

. (15)

The fast computation of (15) is obtained thanks to the following

result [4] (see also [2]):

Lemma 2.

1. Let R be a rank d hermitian matrix whose range is spanned by

the columns of matrix P

[p

1

,...,p

d

].LetR = EDE

H

(D =

diag(α

1

···α

d

), E unitary) be an eigendecomposition of R and de-

fine M

(P

H

P)

−1

P

H

RP. Then E = PT,whereT is obtained from an

eigendecomposition of M as M

=TDT

−1

.

2. Let N

= I + EDE

H

where E is orthonormal. Then, an i nverse square

root of N is given by

N

−

1

2

=I + ED

E

H

,

where D

=diag(

1

√

1+α

1

−1 ...

1

√

1+α

d

−1).

By applying Lemma 2toW

H

(i)W(i) (see computational details

in Appendix A.3), we get the orthogonal version of MCA-OJA sum-

marized in Table 1 (the notation

(.)

∗

in Table 1, stands for the

complex conjugation).

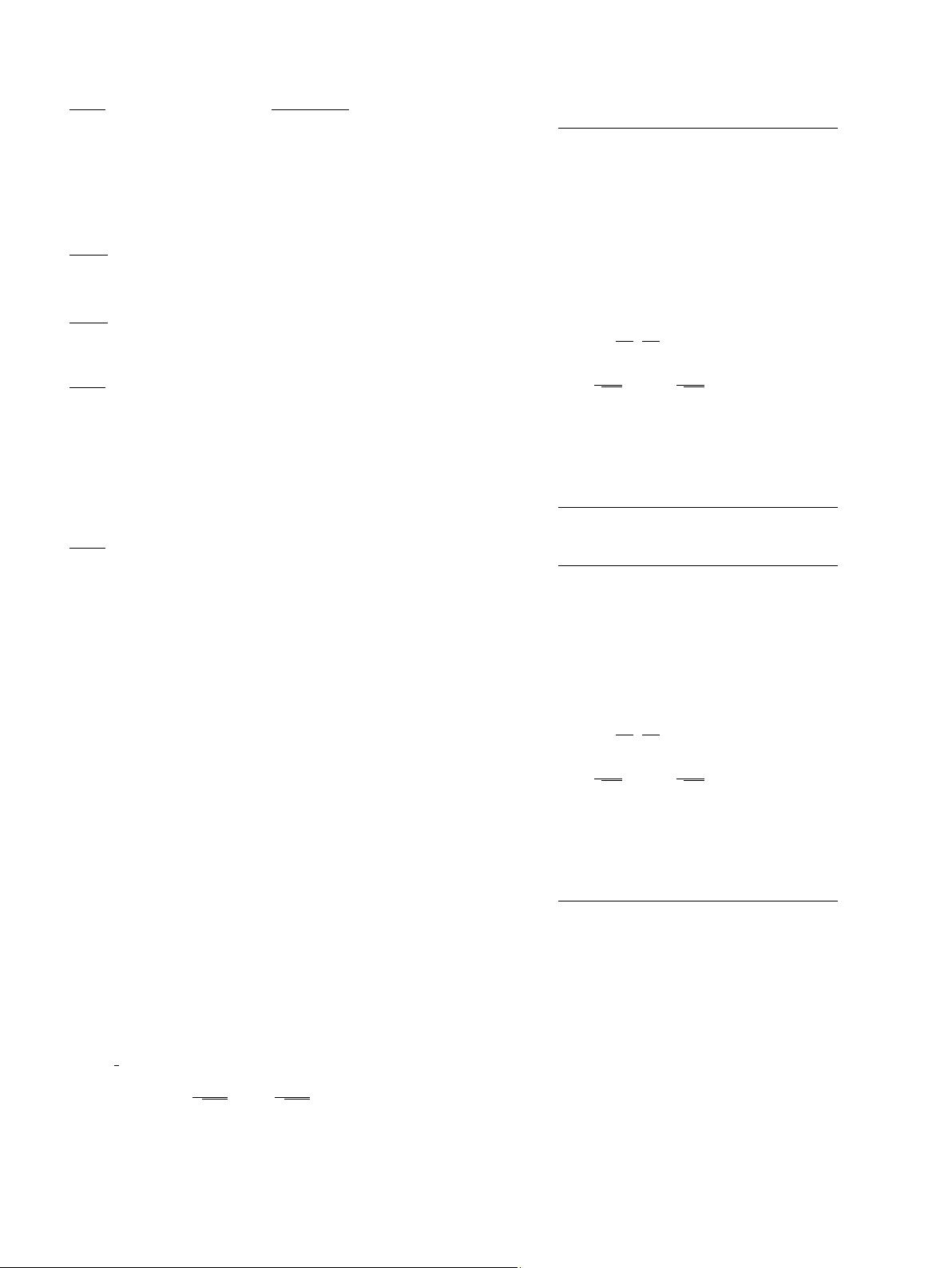

Table 1

MCA-OOJA.

y(i) = W

H

(i − 1)x(i) (with W(0) =[I

p

0]

T

)

z(i) = Ly(i)

z

p

(i) = W(i − 1)y(i)

R =β

2

(x(i)

2

z(i)z

H

(i) +z

p

(i)

2

y(i)y

H

(i)

−

x

H

(i)z

p

(i)z(i)y

H

(i) −z

H

p

(i)x(i)y(i)z

H

(i))

P =[y(i) z(i)]

M =(P

H

P)

−1

P

H

RP (2 ×2 matrix)

eig(M) = T diag(α

1

, α

2

)T

−1

(eigendecomposition of M)

E

=[e

1

e

2

]=PT

= diag(

1

e

1

,

1

e

2

)

E

=E [e

1

e

2

]; T

=T

τ

1

=

1

√

1+α

1

−1; τ

2

=

1

√

1+α

2

−1

T

−1

=

t

11

t

12

t

21

t

22

E

p

=W(i − 1)E

[e

p

1

e

p

2

]

p =τ

1

e

p

1

+β(1 +τ

1

)(t

∗

11

z

p

(i) −t

∗

12

x(i))

q =τ

2

e

p

2

+β(1 +τ

2

)(t

∗

21

z

p

(i) −t

∗

22

x(i))

W(i) = W(i − 1) +pe

H

1

+qe

H

2

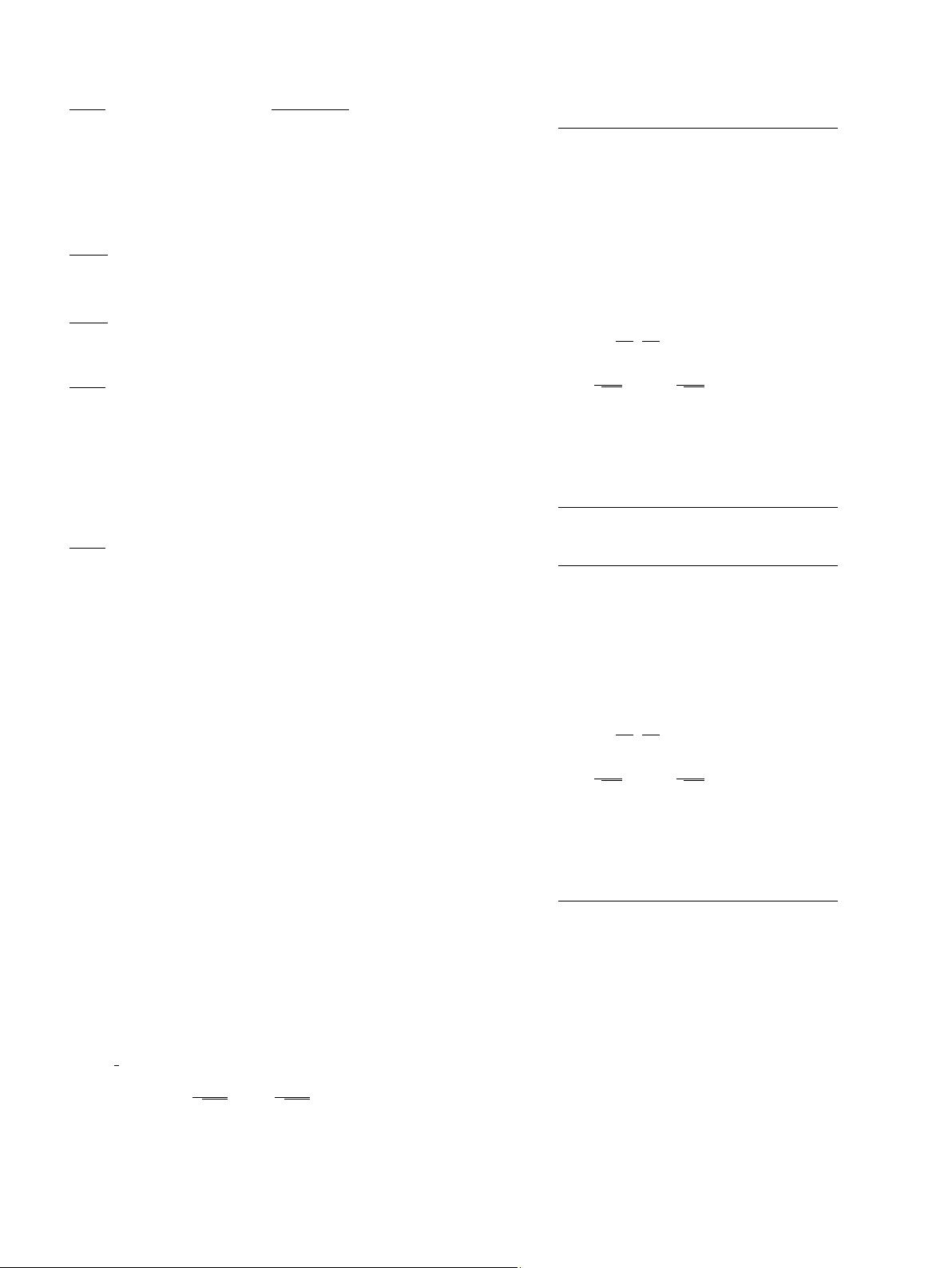

Table 2

MCA-OFRANS.

y(i) = W

H

(i − 1)x(i) (with W(0) =[I

p

0]

T

)

z

(i) = Ly(i)

R =−β(y(i)z

H

(i) +z(i)y

H

(i)) +β

2

x(i)

2

z(i)z

H

(i)

P =[z(i) y(i)]

M =(P

H

P)

−1

P

H

RP (2 ×2 matrix)

eig

(M) =T diag(α

1

, α

2

)T

−1

(eigendecomposition of M)

E

=[e

1

e

2

]=PT

= diag(

1

e

1

,

1

e

2

)

E

=E [e

1

e

2

]; T

=T

τ

1

=

1

√

1+α

1

−1; τ

2

=

1

√

1+α

2

−1

T

−1

=

t

11

t

12

t

21

t

22

E

p

=W(i − 1)E

[e

p

1

e

p

2

]

p =τ

1

e

p

1

−βt

∗

12

(1 +τ

1

)x(i)

q =τ

2

e

p

2

−βt

∗

22

(1 +τ

2

)x(i)

W(i) = W(i − 1) +pe

H

1

+qe

H

2

3.2. MCA orthogonal FRANS (MCA-OFRANS)

Similarly to MCA-OJA, we apply here the previous fast orthogo-

nalization procedure to MCA-FRANS,

W(i) := W(i)

W

H

(i)W(i)

−1/2

, (16)

where (W

H

(i)W(i))

−1/2

is computed in O (np) flops using Lemma 2

results. The resulting algorithm, is summarized in Table 2. Note

that the derivation of the algorithm in Table 2 is similar to that of

the MCA-OOJA and hence the details are omitted to avoid redun-

dancy.

3.3. Numerical instability of MCA-OOJA and MC A-OFRANS

In terms of orthogonality errors, the MCA-OOJA algorithm guar-

antees the orthogonality of the minor subspace at each iteration.