Ding et al. BMC Bioinformatics

(2016) 17:398

Page 3 of 13

dataset, our method achieves 82, 82, 62 and 61 % AUROC on four different test classes (the typical cross-validated (CV) test class and the distinct test classes C1, C2 and C3). On the human dataset, our method achieves 82, 82, 60 and 57 % AUROC on the same four test classes. Finally, we test our method on three important PPI networks: the one-core network (CD9) [21], the multiple-core network (Ras-Raf-Mek-Erk-Elk-Srf pathway) [22], and the crossover network (Wnt-related Network) [23]. Compared with the Conjoint Triad (CT) method [13], our method increases accuracy by 6.25, 2.06 and 18.75 %, respectively.

Methods

To predict protein-protein interactions from protein sequence information, our method first extracts features from the protein sequences. Each feature vector represents the characteristics of one pair of proteins. We use a k-gram feature representation calculated as Multivariate Mutual Information (MMI), and we extract additional features using the normalized Moreau-Broto Autocorrelation (NMBAC) of the protein sequences. These two approaches transform each protein sequence into a feature vector. We then feed the feature vectors into a classifier that distinguishes interacting pairs from non-interacting pairs.

Multivariate mutual information

Inspired by previous work on extracting features from protein sequences [13, 24, 25], we propose a novel method to fully describe the key information of protein-protein interactions. Many methods use the k-gram feature representation, a common choice for protein sequence classification [26, 27]. Here k is the number of conjoint amino acids; for example, CT [13] uses the 3-gram feature representation. Shen et al. [13] indicated that methods which do not consider the local environment are usually neither reliable nor robust, so they proposed the conjoint triad method, which considers the properties of each amino acid together with those of its neighbouring amino acids.
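To make the role of k concrete, the sketch below counts ordered k-grams, i.e. every window of k conjoint residues, on a raw amino acid string. It is a minimal illustration under stated assumptions: the function name `kgram_counts` and the test peptide are hypothetical, and it works on raw residues, whereas CT and the method described here first map residues to a reduced set of classes.

```python
from collections import Counter


def kgram_counts(sequence: str, k: int) -> Counter:
    """Count each ordered window of k conjoint residues in `sequence`.

    Hypothetical helper for illustration; operates on raw residues.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    # A window of length k slides one residue at a time, giving
    # len(sequence) - k + 1 overlapping k-grams (none if the sequence is shorter).
    return Counter(sequence[i:i + k] for i in range(len(sequence) - k + 1))


# Usage: the 3-grams of a short made-up peptide.
print(kgram_counts("MKVLAAM", 3))
```

With k = 3 this corresponds to the window size used by CT; larger k captures longer local context at the cost of many more possible k-gram types.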

To build on the k-gram feature representation and to improve classification accuracy, we use MMI [28] to extract the conjoint information of amino acids in protein sequences in greater depth.

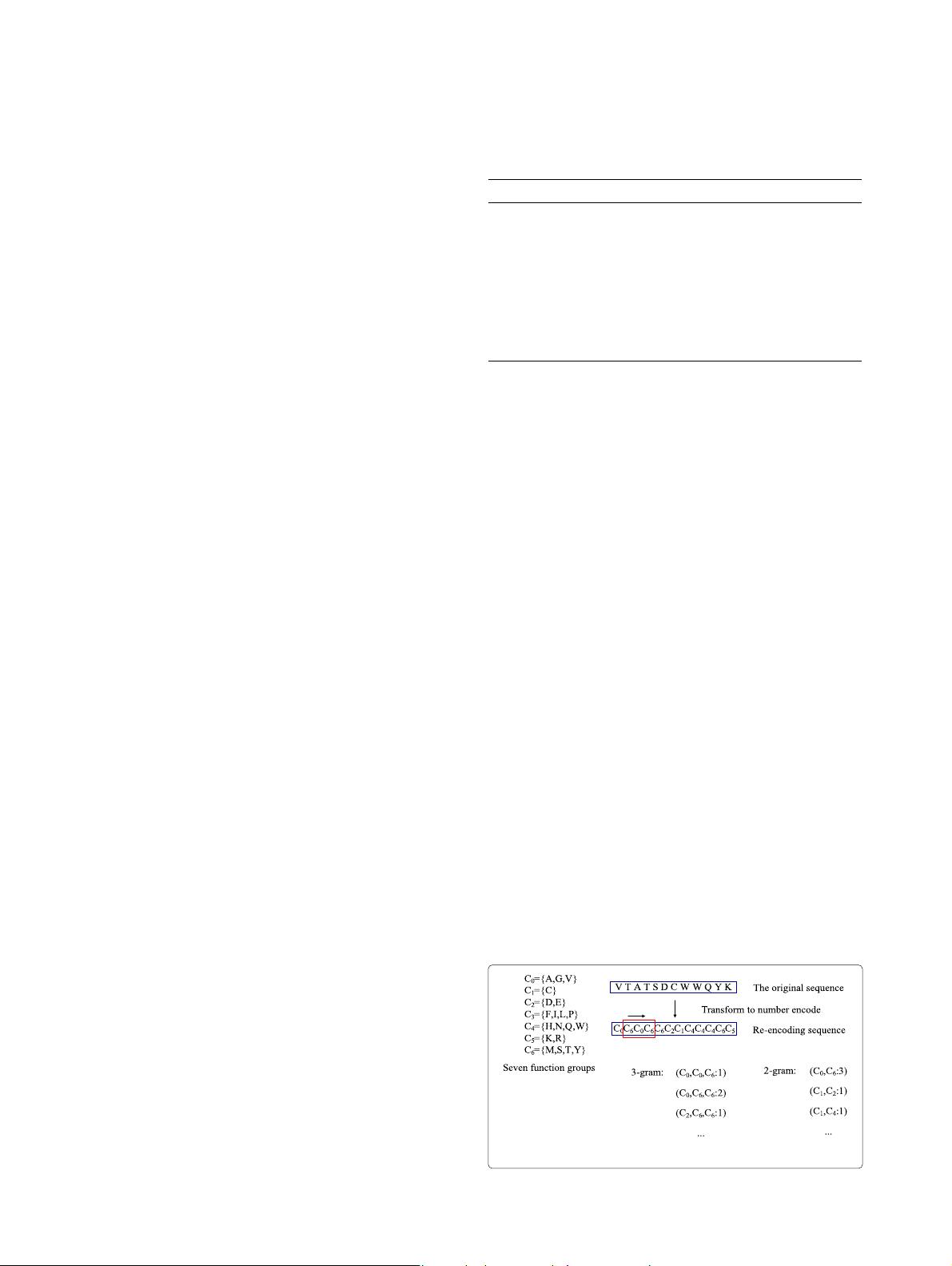

Classifying amino acids

Protein-protein interactions can be dominated by the dipoles and side-chain volumes of the different amino acids, which reflect their electrostatic and hydrophobic properties. The 20 standard amino acid types are assigned to seven functional groups [13], as shown in Table 1. For each pair of proteins, we extract conjoint information based on these amino acid categories.

Table 1 Division of 20 amino acid types, based on dipoles and volumes of side chains

| No. | Group | Dipole scale | Volume scale |
|---|---|---|---|
| $C_0$ | A, G, V | Dipole < 1.0 | Volume < 50 |
| $C_1$ | C | 1.0 < Dipole < 2.0 (form disulphide bonds) | Volume > 50 |
| $C_2$ | D, E | Dipole > 3.0 (opposite orientation) | Volume > 50 |
| $C_3$ | F, I, L, P | Dipole < 1.0 | Volume > 50 |
| $C_4$ | H, N, Q, W | 2.0 < Dipole < 3.0 | Volume > 50 |
| $C_5$ | K, R | Dipole > 3.0 | Volume > 50 |
| $C_6$ | M, S, T, Y | 1.0 < Dipole < 2.0 | Volume > 50 |
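The grouping in Table 1 is a simple lookup from residue to class index. The sketch below transcribes the table into a dictionary and encodes a sequence as class indices 0 to 6. The names `AA_CLASSES`, `AA_TO_CLASS` and `encode` are hypothetical, and rejecting non-standard residues is an assumed policy, not one stated in the text.

```python
# Seven amino acid classes C0-C6, transcribed from Table 1.
AA_CLASSES = {
    0: "AGV",
    1: "C",
    2: "DE",
    3: "FILP",
    4: "HNQW",
    5: "KR",
    6: "MSTY",
}

# Invert the table into a residue -> class-index lookup.
AA_TO_CLASS = {aa: cls for cls, members in AA_CLASSES.items() for aa in members}


def encode(sequence: str) -> list[int]:
    """Map a protein sequence to its list of class indices (0-6).

    Raises ValueError on residues outside the 20 standard types (assumed policy).
    """
    unknown = set(sequence) - set(AA_TO_CLASS)
    if unknown:
        raise ValueError(f"non-standard residues: {sorted(unknown)}")
    return [AA_TO_CLASS[aa] for aa in sequence]


# Usage on a short made-up peptide.
print(encode("MKVLAAM"))
```

Because every class contains at least one residue and the groups are disjoint, the lookup covers exactly the 20 standard amino acids.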

Calculating multivariate mutual information

Considering the neighbours of each amino acid, we regard any three contiguous amino acids as a unit. We parse the protein sequence with a sliding window three amino acids long, and for each window the categories of the three amino acids label the type of the unit. Rather than the order of the three amino acids, we consider only the composition of the unit. We thus define different types of 3-gram feature representation, such as $(C_0, C_0, C_0)$, $(C_0, C_0, C_1)$, ..., $(C_6, C_6, C_6)$. Similarly, we define different types of 2-gram feature representation, such as $(C_0, C_0)$, $(C_0, C_1)$, ..., $(C_6, C_6)$. We count each type of 3-gram and 2-gram feature on one protein sequence with a sliding window, as shown in Fig. 1.
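The counting step can be sketched as follows, assuming that "composition of the unit" means the multiset of classes in the window, so that sorting a window's class indices gives an order-free key. Under that reading, seven classes yield 84 possible 3-gram types and 28 possible 2-gram types. The function names are hypothetical, and the fixed ordering of the count vector is an illustrative choice.

```python
from collections import Counter
from itertools import combinations_with_replacement

# Table 1 classes C0-C6 (repeated here so the block is self-contained).
AA_TO_CLASS = {aa: cls
               for cls, members in enumerate(
                   ["AGV", "C", "DE", "FILP", "HNQW", "KR", "MSTY"])
               for aa in members}


def unordered_gram_counts(sequence: str, n: int) -> Counter:
    """Slide a window of length n over the class-encoded sequence and count
    each window by its class composition, ignoring order."""
    classes = [AA_TO_CLASS[aa] for aa in sequence]
    # Sorting the window makes (C1, C0, C0) and (C0, C0, C1) the same key.
    return Counter(tuple(sorted(classes[i:i + n]))
                   for i in range(len(classes) - n + 1))


def feature_vector(sequence: str, n: int) -> list[int]:
    """Fixed-length count vector over every unordered n-gram type."""
    counts = unordered_gram_counts(sequence, n)
    # combinations_with_replacement yields sorted tuples, matching the keys above.
    types = combinations_with_replacement(range(7), n)
    return [counts[t] for t in types]


print(len(feature_vector("MKVLAAM", 3)))  # 84 unordered 3-gram types
print(len(feature_vector("MKVLAAM", 2)))  # 28 unordered 2-gram types
```

Ignoring order shrinks the 3-gram space from 7³ = 343 ordered types to 84, which keeps the feature vector compact for short sequences.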

Throughout the following discussion of mutual information, logarithms are taken to base e. In contrast to the standard mutual information approach, our mutual information and entropy refer to a single event on one protein sequence, whereas standard mutual information is averaged over all possible events. We calculate the multivariate mutual information for each type of 3-gram feature, defined as follows:

$$I(a, b, c) = I(a, b) - I(a, b \mid c) \tag{1}$$

where $a$, $b$ and $c$ are the categories of the three conjoint amino acids in one unit.
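Equation (1) can be evaluated once $I(a, b)$ and $I(a, b \mid c)$ are fixed, but this section does not define them. The sketch below therefore assumes standard single-event (pointwise) forms with natural logarithms, $I(a,b) = \ln\frac{f(a,b)}{f(a)f(b)}$ and $I(a,b \mid c) = \ln\frac{p(a,b \mid c)}{p(a \mid c)\,p(b \mid c)}$ with $p(x \mid c) = f(x,c)/f(c)$, and estimates each $f$ as the relative frequency of the corresponding unordered class composition among sliding windows. These forms, the zero-frequency convention and all function names are assumptions for illustration, not the paper's definitions.

```python
import math
from collections import Counter


def pointwise_mmi(f_a, f_b, f_c, f_ab, f_ac, f_bc, f_abc):
    """Eq. (1), I(a,b,c) = I(a,b) - I(a,b|c), with ASSUMED pointwise forms:
        I(a,b)   = ln( f(a,b) / (f(a) f(b)) )
        I(a,b|c) = ln( p(a,b|c) / (p(a|c) p(b|c)) ), where p(x|c) = f(x,c) / f(c)
    Natural logarithms follow the text. Returns 0.0 when any frequency is
    zero, which is an assumed convention rather than the paper's."""
    if min(f_a, f_b, f_c, f_ab, f_ac, f_bc, f_abc) <= 0:
        return 0.0
    i_ab = math.log(f_ab / (f_a * f_b))
    i_ab_given_c = math.log((f_abc / f_c) / ((f_ac / f_c) * (f_bc / f_c)))
    return i_ab - i_ab_given_c


def relative_frequencies(classes, n):
    """Hypothetical plug-in estimate: share of length-n sliding windows whose
    unordered class composition equals each key (order is ignored)."""
    windows = [tuple(sorted(classes[i:i + n]))
               for i in range(len(classes) - n + 1)]
    total = len(windows)
    return {key: count / total for key, count in Counter(windows).items()}


def mmi_for_unit(classes, a, b, c):
    """MMI of the 3-gram type (a, b, c) on one class-encoded sequence."""
    f1, f2, f3 = (relative_frequencies(classes, n) for n in (1, 2, 3))

    def freq(table, *key):
        # Look up an unordered composition; unseen compositions get 0.0.
        return table.get(tuple(sorted(key)), 0.0)

    return pointwise_mmi(
        freq(f1, a), freq(f1, b), freq(f1, c),
        freq(f2, a, b), freq(f2, a, c), freq(f2, b, c),
        freq(f3, a, b, c),
    )


# Class indices of the made-up peptide MKVLAAM under Table 1.
print(mmi_for_unit([6, 5, 0, 3, 0, 0, 6], 0, 0, 3))
```

Under these assumed forms, Eq. (1) collapses to $\ln\frac{f(a,b)\,f(a,c)\,f(b,c)}{f(a)\,f(b)\,f(c)\,f(a,b,c)}$, which is zero when the three classes occur independently.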

Fig. 1 3-gram or 2-gram feature representation