1

4

16

64

256

FB FR HW KG0 KG1 KG2 LJ OR PK RD RM TW WK

Frontier sharing percentage (log scale)

Top-down Bottom-up

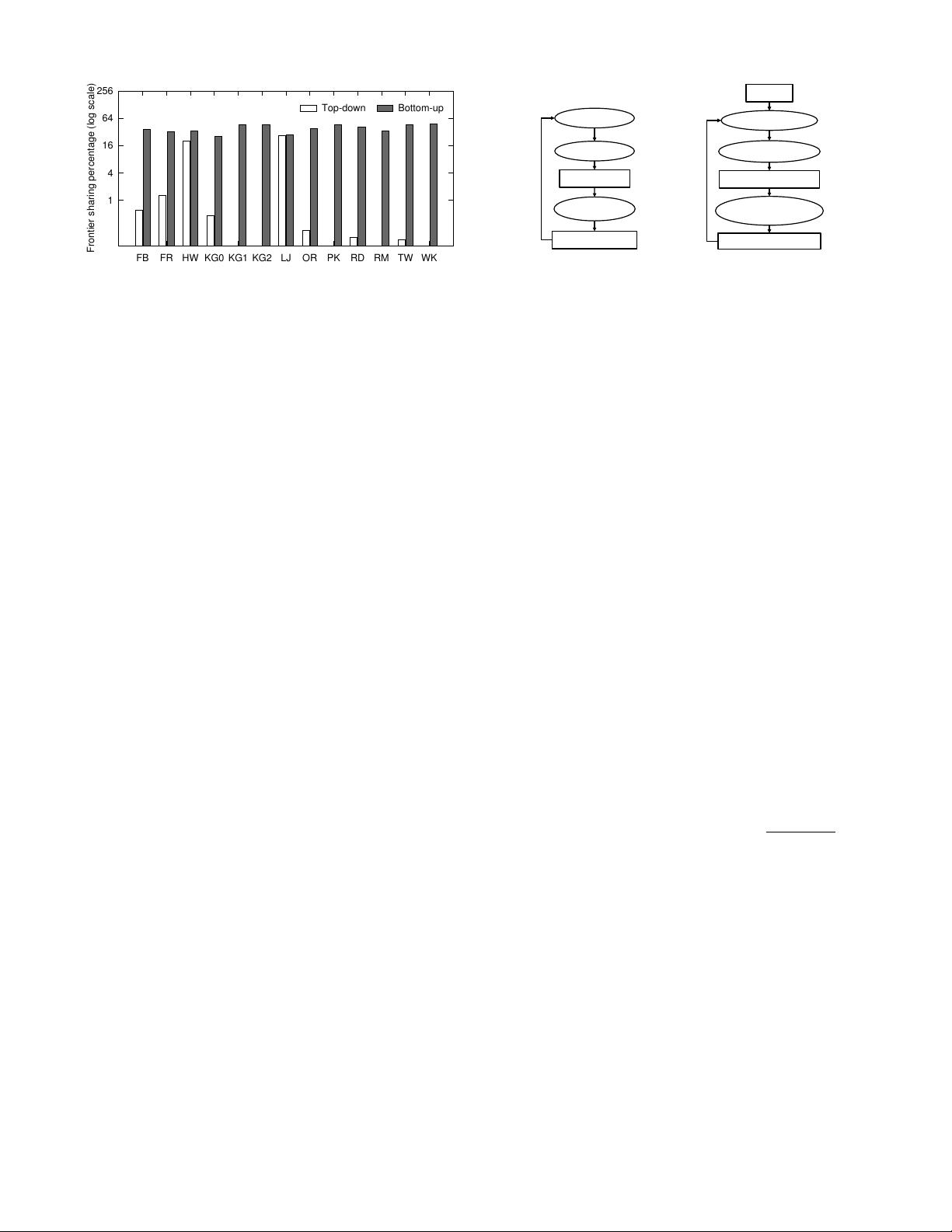

Figure 2: Average frontier sharing percentage between two differ-

ent BFS instances.

Among three tasks at each level, inspecting adjacent ver-

tices of the frontiers involves a lot of random memory ac-

cesses (pointer-chasing), accounting for most of the runtime.

This can be observed on four BFS traversals for both top-

down and bottom-up in Figure 1.

Concurrent BFS executes multiple BFS instances from

different source vertices. Using the example in Figure 1, four

BFS instances start from vertex 0, 3, 6, and 8, respectively.

A naive implementation of concurrent BFS will run all BFS

instances separately and keep its own private frontier queue

and status array. On a GPU device, each individual subrou-

tine is defined as a Kernel. Therefore, in the aforementioned

example, four kernels will run four BFS instances in parallel

from four source vertices. NVIDIA Kepler provides Hyper-Q

to support concurrent execution of multiple kernels, which

dramatically increases the GPU utilization especially when

a single kernel cannot fully utilize the GPU [34].

Unfortunately, this naive implementation of concurrent

BFS takes approximately the same amount of time as run-

ning these BFS instances sequentially, as we will show later

in Section 8. For example, for all the graphs evaluated

in this paper, sequential and naive implementation of con-

current BFS take average 52 ms and 48 ms, respectively,

with a difference in traversal rate of 500 million TEPS. The

main reason for such a small benefit is because simply run-

ning multiple BFS instances in parallel would overwhelm the

GPU, especially at the direction-switching level when a BFS

goes from top-down to bottom-up. At that moment each

individual BFS would require a large number of threads for

their workloads. As a result, such a naive implementation

may even underperform a sequential execution of all BFS

instances.

Opportunity of Frontier Sharing: iBFS aims to address

this problem by leveraging the existence of frontiers shared

among different BFS instances. Figure 2 presents the av-

erage percentage of shared frontiers per level between two

instances. The graphs used in this paper are presented in

Section 8. Top-down levels have smaller number of shared

frontiers (close to 4% on average) whereas bottom-up levels

have much more as high as 48.6%. This is because bottom-

up traversals often start from a large number of unvisited

vertices (frontiers in this case) and search for their parents.

The proposed GroupBy technique can improve the sharing

for both directions to 10× and 1.7×, respectively.

Potentially, the shared frontiers can yield three benefits in

concurrent BFS: (1). These frontiers need to be enqueued

only once into the frontier queue. (2). The neighbors of

shared frontiers need to be loaded in-core only once during

Status&Array&

Fron-er&Queue&

Expansion&

Inspec-on&

FQ&Genera-on&

Bitwise'Status'Array''

Joint'Fron2er'Queue'

Bitwise'Fron2er''

Iden2fica2on''

Bitwise'Inspec2on''

Joint'Expansion'

GroupBy'

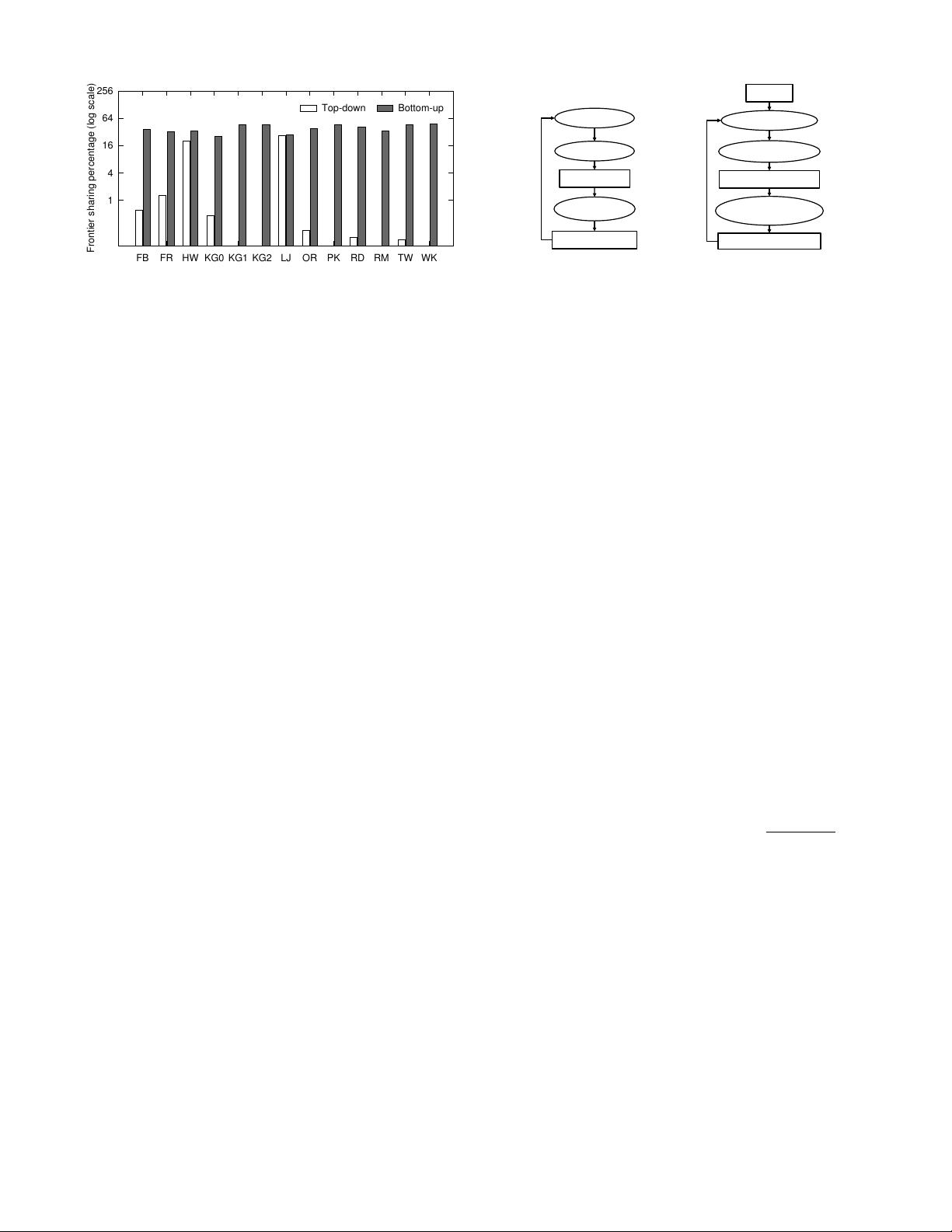

(a) A Single BFS (b) iBFS

Figure 3: The flow charts of (a) BFS, (b) iBFS.

expansion. (3). Memory accesses to the statuses of those

neighbors for different BFSes can be coalesced. It is impor-

tant to note that each BFS still has to inspect the statuses

independently, because not all BFSes will have the same sta-

tuses for their neighbors. In other words, shared frontiers

do not reduce the overall workload. Nevertheless, this work

proposes that shared frontiers can be utilized to offer faster

data access and saving in memory usage, both of which are

critical on GPUs. This is achieved through a combination

of bitwise, joint traversals and GroupBy rules that guide the

selection of groups of BFSes for parallel execution.

3. iBFS OVERVIEW

In a nutshell, iBFS as shown in Figure 3, consists of three

unique techniques, namely, joint traversal, GroupBy, and

bitwise optimization, which will be discussed in Section 4,

5, and 6, respectively.

Ideally the best performance for iBFS would be achieved

by running all i BFS instances together without GroupBy.

Unfortunately, ever-growing graph sizes, combined with lim-

ited GPU hardware resources, puts a cap on the number of

concurrent BFS instances. In particular, we have found that

GPU global memory is the dominant factor, e.g., 12GB on

K40 GPUs compared to many TB-scale graphs.

Let M be GPU memory size and N the maximum number

of concurrent BFS instances in one group (i.e., the group

size). If the whole graph requires S storage, a single BFS

instance needs |SA| to store its data structures (e.g., the

status array for all the vertices), and for a joint traversal

each group requires at least |JF Q| for joint data structures

(e.g., the joint frontier queue), then N 6

M−S−|JF Q|

|SA|

. In

most cases N satisfies 1 < N i 6 |V |. In this paper, we

use a value of 128 for N by default.

Unfortunately, randomly grouping N different BFS in-

stances is unlikely to produce the optimal performance. Care

has to be taken to ensure a good grouping strategy. To il-

lustrate this problem, for a group A with two BFS instances

BFS-s and BFS-t, let JF Q

A

(k) be the joint frontier queue of

group A at level k, F Q

s

(k) the individual frontier queue for

BFS-s, and F Q

t

for BFS-t. Thus, |JF Q

A

(k)| = |F Q

s

(k)| ∪

|F Q

t

(k)| − |F Q

s

(k)| ∩ |F Q

t

(k)|, where |F Q

s

(k)| ∩ |F Q

t

(k)|

represents the shared frontiers between two BFS instances.

Clearly, the more shared frontiers each group has, the higher

performance iBFS will be able to achieve. Before we describe

the GroupBy technique in Section 5 that aims to maximize

such sharing within each group, we will first introduce how

iBFS achieves joint traversal in the next section which makes

parallel execution possible.