From Word Embeddings To Document Distances

Matt J. Kusner MKUSNER@WUSTL.EDU

Yu Sun YUSUN@WUSTL.EDU

Nicholas I. Kolkin N.KOLKIN@WUSTL.EDU

Kilian Q. Weinberger KILIAN@WUSTL.EDU

Washington University in St. Louis, 1 Brookings Dr., St. Louis, MO 63130

Abstract

We present the Word Mover’s Distance (WMD), a novel distance function between text documents. Our work is based on recent results in word embeddings that learn semantically meaningful representations for words from local co-occurrences in sentences. The WMD measures the dissimilarity between two text documents as the minimum amount of distance that the embedded words of one document need to “travel” to reach the embedded words of another document. We show that this distance metric can be cast as an instance of the Earth Mover’s Distance, a well-studied transportation problem for which several highly efficient solvers have been developed. Our metric has no hyperparameters and is straightforward to implement. Further, we demonstrate on eight real-world document classification data sets, in comparison with seven state-of-the-art baselines, that the WMD metric leads to unprecedentedly low k-nearest neighbor document classification error rates.

1. Introduction

Accurately representing the distance between two documents has far-reaching applications in document retrieval (Salton & Buckley, 1988), news categorization and clustering (Ontrup & Ritter, 2001; Greene & Cunningham, 2006), song identification (Brochu & Freitas, 2002), and multilingual document matching (Quadrianto et al., 2009).

The two most common ways to represent documents are as a bag of words (BOW) or by their term frequency-inverse document frequency (TF-IDF). However, these features are often not suitable for document distances due to

Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 2015. JMLR: W&CP volume 37. Copyright 2015 by the author(s).

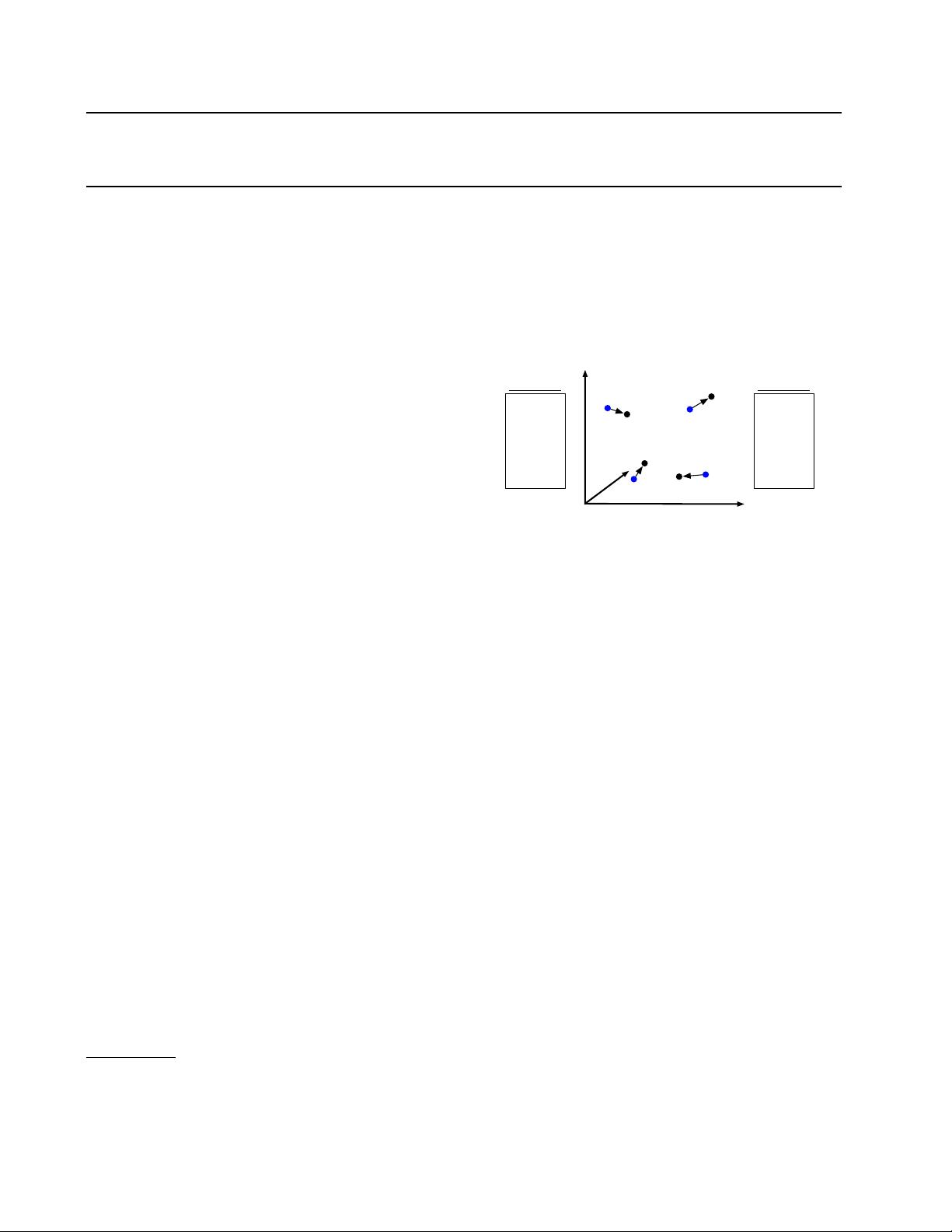

[Figure 1 labels: “word2vec embedding”, “document 1”, “document 2”. Sentences shown: “Obama speaks to the media in Illinois” and “The President greets the press in Chicago”. Embedded words: ‘Obama’, ‘President’, ‘speaks’, ‘greets’, ‘media’, ‘press’, ‘Illinois’, ‘Chicago’.]

Figure 1. An illustration of the word mover’s distance. All non-stop words (bold) of both documents are embedded into a word2vec space. The distance between the two documents is the minimum cumulative distance that all words in document 1 need to travel to exactly match document 2. (Best viewed in color.)

their frequent near-orthogonality (Schölkopf et al., 2002; Greene & Cunningham, 2006). Another significant drawback of these representations is that they do not capture the distance between individual words. Take, for example, two sentences in different documents: “Obama speaks to the media in Illinois” and “The President greets the press in Chicago.” While these sentences have no words in common, they convey nearly the same information, a fact that cannot be represented by the BOW model. In this case, the closeness of the word pairs (Obama, President), (speaks, greets), (media, press), and (Illinois, Chicago) is not factored into the BOW-based distance.
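The orthogonality problem described above can be checked directly. The following is a minimal sketch, not code from the paper: the stop-word list, the whitespace tokenizer, the helper names (`bow`, `cosine_similarity`, `euclidean_distance`), and the third, unrelated sentence are all assumptions introduced for illustration.

```python
import math
from collections import Counter

# Assumed stop-word list for this sketch only (not the list used in the paper).
STOP_WORDS = {"to", "the", "in", "a"}


def bow(sentence):
    """Return a sparse bag-of-words count vector with stop words removed."""
    tokens = sentence.lower().split()
    return Counter(t for t in tokens if t not in STOP_WORDS)


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Euclidean distance between two sparse count vectors."""
    vocab = a.keys() | b.keys()
    return math.sqrt(sum((a[w] - b[w]) ** 2 for w in vocab))


doc1 = bow("Obama speaks to the media in Illinois")
doc2 = bow("The President greets the press in Chicago")
# Hypothetical unrelated sentence, added only for comparison.
doc3 = bow("The band played a concert in Japan")

# No shared non-stop words, so the BOW vectors are orthogonal.
print(cosine_similarity(doc1, doc2))
# BOW cannot tell the related pair from the unrelated one.
print(euclidean_distance(doc1, doc2), euclidean_distance(doc1, doc3))
```

Under these assumptions, the related pair and the unrelated pair are equally far apart in BOW space, because the representation only registers exact word overlap.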

There have been numerous methods that attempt to circumvent this problem by learning a latent low-dimensional representation of documents. Latent Semantic Indexing (LSI) (Deerwester et al., 1990) eigendecomposes the BOW feature space, and Latent Dirichlet Allocation (LDA) (Blei et al., 2003) probabilistically groups similar words into topics and represents documents as distributions over these topics. At the same time, there are many competing variants of BOW/TF-IDF (Salton & Buckley, 1988; Robertson & Walker, 1994). While these approaches produce a more coherent document representation than BOW, they often do not improve the empirical performance of BOW on distance-based tasks (e.g., nearest-neighbor classifiers) (Petterson et al., 2010; Mikolov et al., 2013c).