utilizes the computing power of the hardware, resulting in a significant decrease in inference latency while also enhancing the representation ability.

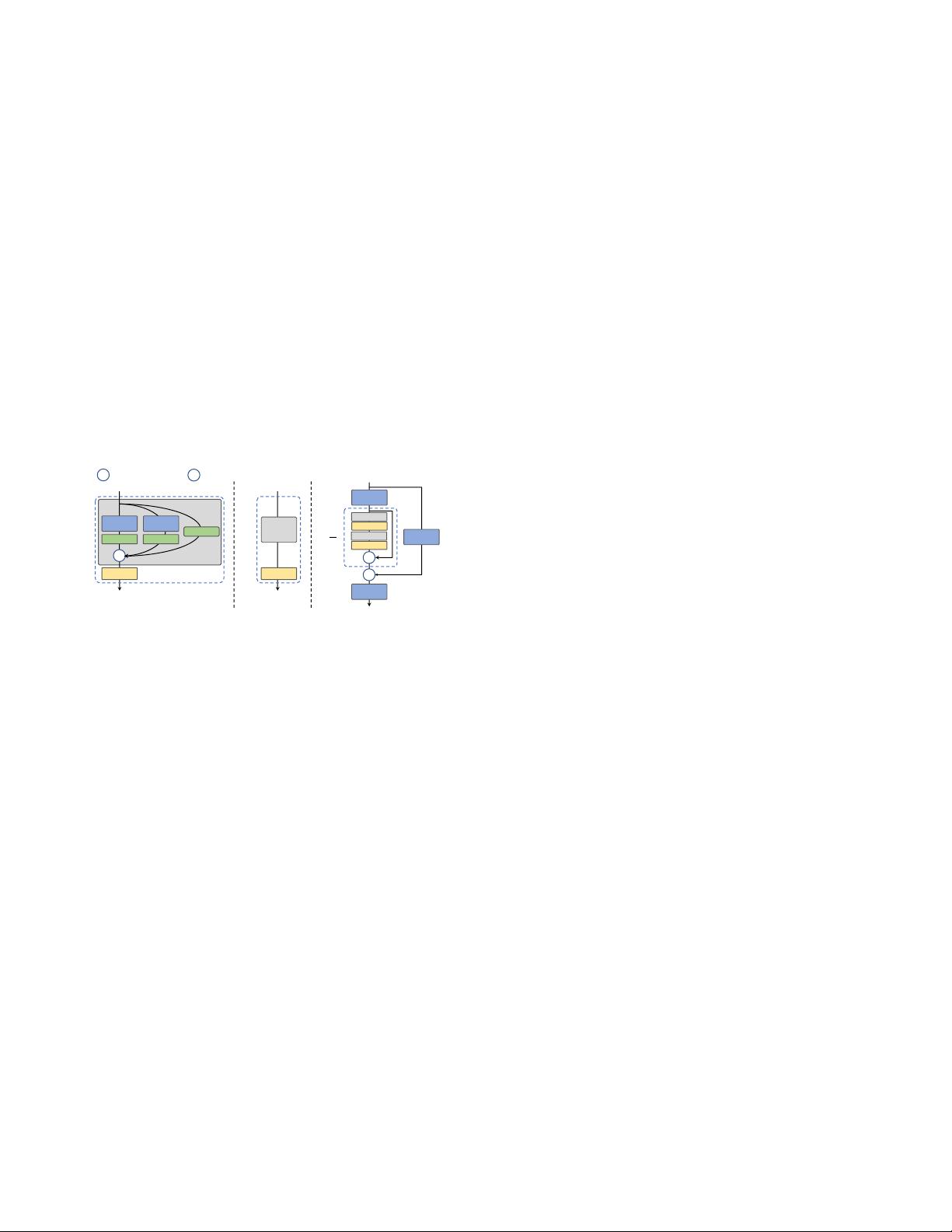

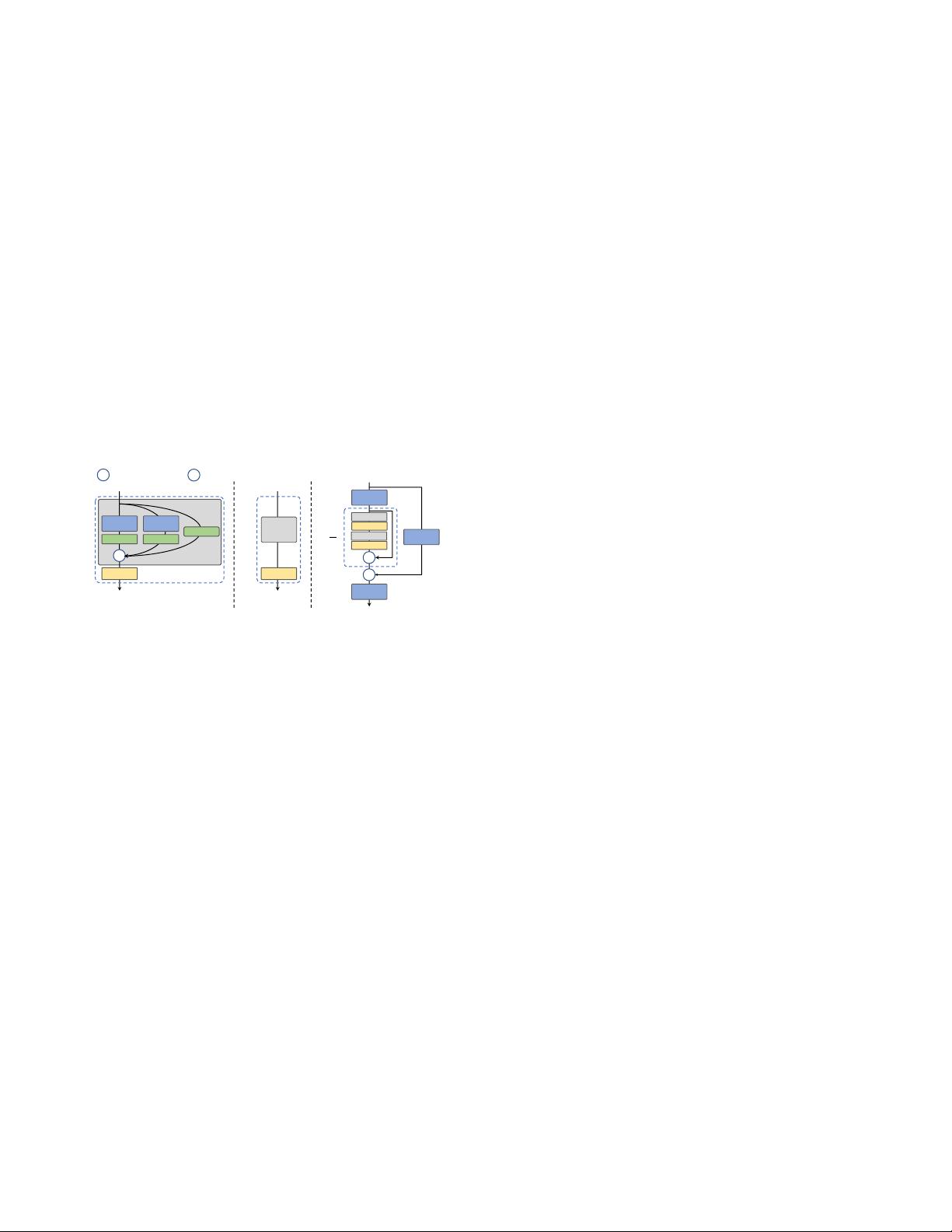

However, we notice that as the model capacity is further expanded, the computation cost and the number of parameters in the single-path plain network grow exponentially. To achieve a better trade-off between the computation burden and accuracy, we revise a CSPStackRep Block to build the backbone of medium and large networks. As shown in Fig. 3 (c), the CSPStackRep Block is composed of three 1×1 convolution layers and a stack of sub-blocks, each consisting of two RepVGG blocks [3] (at training) or two RepConvs (at inference) with a residual connection. In addition, a cross stage partial (CSP) connection is adopted to boost performance without excessive computation cost. Compared with CSPRepResStage [45], it has a more succinct structure and balances accuracy against speed.

[Figure 3 diagram: panels (a), (b), and (c). Legend: "+" denotes element-wise add; "C" denotes concatenation over the channel dimension.]

Figure 3: (a) RepBlock is composed of a stack of RepVGG blocks with ReLU activations at training. (b) During inference time, RepVGG block is converted to RepConv. (c) CSPStackRep Block comprises three 1×1 convolutional layers and a stack of sub-blocks of double RepConvs following the ReLU activations with a residual connection.
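
The conversion in Fig. 3 (b) can be illustrated with a minimal single-channel sketch in plain Python. It assumes that batch normalization has already been folded into each branch's weight and bias and that the identity branch is a plain skip; all function names (`conv2d_same`, `fuse_to_repconv`, and so on) are hypothetical and are not taken from the YOLOv6 code base. The sketch only checks that summing the 3×3, 1×1, and identity branches gives the same output as one fused 3×3 convolution.

```python
def conv2d_same(x, kernel, bias=0.0):
    """Single-channel 2D convolution (cross-correlation) with zero padding."""
    k = len(kernel)                      # odd, square kernel: 1 or 3 here
    pad = k // 2
    h, w = len(x), len(x[0])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            acc = bias
            for di in range(k):
                for dj in range(k):
                    ii, jj = i + di - pad, j + dj - pad
                    if 0 <= ii < h and 0 <= jj < w:   # zero padding outside the map
                        acc += kernel[di][dj] * x[ii][jj]
            row.append(acc)
        out.append(row)
    return out


def relu(x):
    return [[max(0.0, v) for v in row] for row in x]


def repvgg_block_train(x, k3, b3, k1, b1):
    """Training-time form: 3x3 branch + 1x1 branch + identity, then ReLU."""
    y3 = conv2d_same(x, k3, b3)
    y1 = conv2d_same(x, [[k1]], b1)
    h, w = len(x), len(x[0])
    return relu([[y3[i][j] + y1[i][j] + x[i][j] for j in range(w)] for i in range(h)])


def fuse_to_repconv(k3, b3, k1, b1):
    """Fold the 1x1 and identity branches into the centre tap of the 3x3 kernel."""
    fused = [row[:] for row in k3]
    fused[1][1] += k1 + 1.0              # 1x1 weight and identity both act on the centre pixel
    return fused, b3 + b1


def repconv_infer(x, fused_kernel, fused_bias):
    """Inference-time form: one 3x3 convolution followed by ReLU."""
    return relu(conv2d_same(x, fused_kernel, fused_bias))


def max_abs_diff(a, b):
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


# Illustrative (made-up) input and weights.
x = [[1.0, 2.0, 0.0], [0.5, -1.0, 3.0], [2.0, 0.0, 1.0]]
k3 = [[0.5, -0.25, 0.0], [1.0, 0.5, -0.5], [0.25, 0.0, 0.75]]
b3, k1, b1 = 0.1, 0.25, -0.05

fused_k, fused_b = fuse_to_repconv(k3, b3, k1, b1)
diff = max_abs_diff(repvgg_block_train(x, k3, b3, k1, b1),
                    repconv_infer(x, fused_k, fused_b))
```

In a multi-channel network the same idea applies per output channel, with the 1×1 kernel zero-padded to 3×3 and the identity expressed as a 3×3 kernel whose only non-zero entry is the centre tap.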

2.1.2 Neck

In practice, feature integration at multiple scales has proven to be a critical and effective part of object detection [9, 21, 24, 40]. We adopt the modified PAN topology [24] from YOLOv4 [1] and YOLOv5 [10] as the base of our detection neck. In addition, we replace the CSPBlock used in YOLOv5 with RepBlock (for small models) or CSPStackRep Block (for large models) and adjust the width and depth accordingly. The neck of YOLOv6 is denoted as Rep-PAN.

2.1.3 Head

Efficient decoupled head. The detection head of YOLOv5 is a coupled head with parameters shared between the classification and localization branches, while its counterparts in FCOS [41] and YOLOX [7] decouple the two branches and introduce two additional 3×3 convolutional layers in each branch to boost performance. In YOLOv6, we adopt a hybrid-channel strategy to build a more efficient decoupled head. Specifically, we reduce the number of middle 3×3 convolutional layers to only one. The width of the head is jointly scaled by the width multiplier for the backbone and the neck. These modifications further reduce computation costs and thereby achieve a lower inference latency.
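
As a rough illustration of why these two changes reduce cost, the following sketch counts only the weights of the middle 3×3 convolutions in the two branches of one detection level. The base width of 256 channels and the helper names are assumptions made for this example; biases, normalization layers, and the prediction layers are ignored, so the numbers are not the actual YOLOv6 head sizes.

```python
def conv_weights(kernel_size, c_in, c_out):
    """Number of weights in a dense 2D convolution (bias ignored)."""
    return kernel_size * kernel_size * c_in * c_out


def middle_conv_weights(convs_per_branch, width, branches=2):
    """Weights of the middle 3x3 convs across the classification and regression branches."""
    return branches * convs_per_branch * conv_weights(3, width, width)


base_width = 256                                     # hypothetical head width
two_convs = middle_conv_weights(2, base_width)       # two 3x3 convs per branch
one_conv = middle_conv_weights(1, base_width)        # one 3x3 conv per branch

# Scaling the head with a width multiplier of 0.5 (hypothetical value):
scaled_width = int(base_width * 0.5)
one_conv_scaled = middle_conv_weights(1, scaled_width)
```

Under these assumptions, dropping one 3×3 layer per branch halves the count, and halving the width divides it by four, because the weight count of a convolution grows with the product of its input and output channels.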

Anchor-free. Anchor-free detectors stand out because of their better generalization ability and simplicity in decoding prediction results. The time cost of their post-processing is substantially reduced. There are two types of anchor-free detectors: anchor point-based [7, 41] and keypoint-based [16, 46, 53]. In YOLOv6, we adopt the anchor point-based paradigm, whose box regression branch predicts the distances from the anchor point to the four sides of the bounding box.
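
The decoding step implied by this paradigm can be sketched as follows. The ordering of the distances as (left, top, right, bottom), their expression in units of the feature-map stride, and the function names are assumptions made for illustration, not details confirmed by the text.

```python
def decode_ltrb(anchor_point, distances, stride=1.0):
    """Turn distances to the four box sides into an (x1, y1, x2, y2) box.

    anchor_point: (x, y) in image pixels.
    distances:    (left, top, right, bottom), assumed to be in units of `stride`.
    """
    x, y = anchor_point
    left, top, right, bottom = distances
    return (x - left * stride, y - top * stride,
            x + right * stride, y + bottom * stride)


def encode_ltrb(anchor_point, box, stride=1.0):
    """Inverse of decode_ltrb: regression targets for a ground-truth box."""
    x, y = anchor_point
    x1, y1, x2, y2 = box
    return ((x - x1) / stride, (y - y1) / stride,
            (x2 - x) / stride, (y2 - y) / stride)


# Made-up example: an anchor point at (100, 60) on a stride-8 feature level.
box = decode_ltrb((100.0, 60.0), (2.0, 1.0, 3.0, 4.0), stride=8.0)
targets = encode_ltrb((100.0, 60.0), box, stride=8.0)
```

Because decoding is a handful of additions per prediction, with no anchor-box shapes to look up, post-processing stays simple.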

2.2. Label Assignment

Label assignment is responsible for assigning labels to predefined anchors during the training stage. Previous work has proposed various label assignment strategies, ranging from the simple IoU-based strategy and the inside-ground-truth method [41] to more complex schemes [5, 7, 18, 48, 51].
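
The two simple strategies mentioned above can be sketched in a few lines. The positive threshold of 0.5, the use of -1 for negatives, and the function names are illustrative assumptions; the tie-breaking rule for points inside several boxes (smallest box wins) follows the convention commonly described for FCOS and should also be read as an assumption here.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign_by_iou(anchor_boxes, gt_boxes, pos_thresh=0.5):
    """IoU-based: each anchor box takes its best-overlapping ground truth, or -1."""
    labels = []
    for anchor in anchor_boxes:
        best_iou, best_gt = 0.0, -1
        for g, gt in enumerate(gt_boxes):
            v = iou(anchor, gt)
            if v > best_iou:
                best_iou, best_gt = v, g
        labels.append(best_gt if best_iou >= pos_thresh else -1)
    return labels


def assign_inside_gt(anchor_points, gt_boxes):
    """Inside-ground-truth: a point is positive for the smallest box containing it."""
    labels = []
    for x, y in anchor_points:
        best_area, best_gt = None, -1
        for g, (x1, y1, x2, y2) in enumerate(gt_boxes):
            if x1 <= x <= x2 and y1 <= y <= y2:
                area = (x2 - x1) * (y2 - y1)
                if best_area is None or area < best_area:
                    best_area, best_gt = area, g
        labels.append(best_gt)
    return labels


# Made-up boxes and points.
gts = [(0.0, 0.0, 10.0, 8.0), (0.0, 0.0, 40.0, 40.0)]
iou_labels = assign_by_iou([(0.0, 0.0, 10.0, 10.0), (50.0, 50.0, 60.0, 60.0)], gts)
point_labels = assign_inside_gt([(5.0, 5.0), (30.0, 30.0), (50.0, 50.0)], gts)
```

Both rules depend only on geometry, which is what the more complex schemes cited above aim to improve on.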

SimOTA. OTA [6] treats label assignment in object detection as an optimal transport problem. It defines positive/negative training samples for each ground-truth object from a global perspective. SimOTA [7] is a simplified version of OTA [6], which reduces additional hyperparameters while maintaining the performance. SimOTA was utilized as the label assignment method in the early version of YOLOv6. However, in practice, we find that introducing SimOTA slows down the training process, and it is not rare for training to become unstable. Therefore, we desire a replacement for SimOTA.

Task alignment learning. Task Alignment Learning (TAL) was first proposed in TOOD [5], in which a unified metric of classification score and predicted box quality is designed. The IoU is replaced by this metric to assign object labels. To a certain extent, this alleviates the misalignment between the two tasks (classification and box regression).
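
One way to picture such a unified metric is the form t = s^α · u^β used in TOOD, where s is the classification score for the ground-truth class and u is the IoU between the predicted box and the ground truth. The sketch below ranks candidate predictions for one ground-truth object by this metric and keeps the top k as positives. The exponent values α = 1 and β = 6, the value of k, and the function names are illustrative assumptions rather than settings stated in this section.

```python
def alignment_metric(cls_score, box_iou, alpha=1.0, beta=6.0):
    """Task-alignment metric t = s**alpha * u**beta (exponents are assumed values)."""
    return (cls_score ** alpha) * (box_iou ** beta)


def select_topk(metrics, k):
    """Indices of the k candidates with the largest alignment metric."""
    order = sorted(range(len(metrics)), key=lambda i: metrics[i], reverse=True)
    return order[:k]


# Made-up candidates for one ground-truth object: (classification score, IoU).
candidates = [(0.9, 0.50),   # confident class, poorly localized box
              (0.6, 0.90),   # moderate class score, well localized box
              (0.3, 0.95)]   # weak class score, very well localized box

metrics = [alignment_metric(s, u) for s, u in candidates]
positives = select_topk(metrics, k=2)
```

With these numbers the first candidate ranks last despite its high classification score, because a prediction must do well on both tasks to receive a large metric.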

The other main contribution of TOOD is the task-aligned head (T-head). T-head stacks convolutional layers to build interactive features, on top of which the Task-Aligned Predictor (TAP) is used. PP-YOLOE [45] improved T-head by replacing the layer attention in T-head with the
