2.1. Hand-designed features for action recognition

Extracting local features for action recognition usually consists of two steps: detecting features with a detector and representing them with a descriptor. Both the detector and the descriptor are usually extended from the 2D image domain to the 3D video domain, inspired by their success in object recognition. Laptev and Lindeberg [25] extend the Harris detector [15] and propose the Harris3D detector. Dollar et al. [11] propose the Cuboid detector, which first computes the responses of temporal Gabor filters and then locates interest points at the local maxima of these responses. Inspired by the success of the Hessian saliency measure in image blob detection, Willems et al. [53] propose the Hessian detector as a spatio-temporal extension of that measure. In addition to these sparse interest point detectors, dense sampling can be regarded as a special detector that extracts video patches at regular positions and scales.
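To make the idea of dense sampling concrete, the following minimal sketch enumerates patch positions on a regular space-time grid and repeats the grid at several scales. The function names, the `(frames, height, width)` ordering, and the `overlap` parameter are assumptions made for illustration; they are not taken from any of the cited works.

```python
from itertools import product


def dense_sample_positions(video_shape, patch_shape, stride):
    """Yield (t, y, x) corners of patches placed on a regular grid.

    video_shape, patch_shape, stride: (frames, height, width) triples.
    All three are hypothetical parameters chosen for this sketch.
    """
    ranges = [
        range(0, dim - size + 1, step)
        for dim, size, step in zip(video_shape, patch_shape, stride)
    ]
    yield from product(*ranges)


def dense_sample_multiscale(video_shape, base_patch, scales, overlap=0.5):
    """Repeat the regular grid at several patch scales.

    The stride of each scale is derived from the patch size and a
    fractional overlap (an assumed convention, not a prescribed one).
    """
    for scale in scales:
        patch = tuple(max(1, round(p * scale)) for p in base_patch)
        stride = tuple(max(1, round(p * (1 - overlap))) for p in patch)
        for corner in dense_sample_positions(video_shape, patch, stride):
            yield corner, patch
```

Unlike a sparse detector, this procedure does not inspect the video content at all: every position on the grid yields a patch, so the number of patches depends only on the video size, the patch size, and the stride.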

After an interest point is detected, a feature descriptor is used to extract local features around it. Dollar et al. [11] propose the Cuboid descriptor. To characterize local motion and appearance, Laptev et al. [26] compute histograms of spatial gradients and optical flow accumulated in space–time neighborhoods of the detected interest points. Klaser et al. [24] propose the HOG3D descriptor, which is based on histograms of 3D gradient orientations and can therefore be seen as an extension of the popular SIFT descriptor [33]. Similarly, Willems et al. [53] extend the SURF descriptor [4] to extract features from videos.
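As an illustration of the histogram-based descriptors discussed above, the sketch below accumulates a magnitude-weighted histogram of spatial gradient orientations over a space-time patch. It is a simplified stand-in and not the exact descriptor of [26] or [24]: the optical flow channel and the 3D orientation quantization are omitted, and the function name, the number of bins, and the use of unsigned orientations are assumptions.

```python
import numpy as np


def gradient_orientation_histogram(patch, n_bins=8):
    """Histogram of spatial gradient orientations over a (T, H, W) patch.

    Each pixel votes for its orientation bin with a weight equal to its
    gradient magnitude; the result is L2-normalised. The bin count and
    unsigned orientations are assumptions of this sketch.
    """
    patch = np.asarray(patch, dtype=float)
    # Spatial derivatives only (axes 1 and 2); the temporal axis is ignored.
    gy, gx = np.gradient(patch, axis=(1, 2))
    magnitude = np.hypot(gx, gy)
    # Unsigned orientation folded into [0, pi).
    orientation = np.mod(np.arctan2(gy, gx), np.pi)
    # Clamp guards against rounding that lands exactly on the upper edge.
    bins = np.minimum((orientation / np.pi * n_bins).astype(int), n_bins - 1)
    hist = np.bincount(bins.ravel(), weights=magnitude.ravel(), minlength=n_bins)
    norm = np.linalg.norm(hist)
    return hist / norm if norm > 0 else hist
```

In practice, descriptors of this family typically divide the neighborhood into a grid of cells, compute one such histogram per cell, and concatenate them; the single-cell version here only shows the voting step.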

2.2. Learning-based features for action recognition

Recently, feature learning methods have been introduced to action recognition. Taylor et al. [46] propose a convolutional GRBM method for learning spatio-temporal features, which can be considered an extension of convolutional RBMs from 2D images to 3D videos. Because the objective function is intractable and sampling is therefore required, their method is computationally expensive and takes 2–3 days to train on the Hollywood2 dataset. This cost limits its applicability to large-scale problems. Ji et al. [22] extend convolutional neural networks from the 2D spatial domain to the 3D spatio-temporal domain to learn features for action recognition. Their method extracts features from both the spatial and temporal dimensions by performing 3D convolutions, thereby capturing the motion information encoded in multiple adjacent frames. Similar to Taylor et al. [46], Le et al. [29] propose a hierarchical invariant spatio-temporal feature learning framework based on independent subspace analysis. Their model consists of two layers. The first layer vectorizes the sampled 3D patches into column vectors and then uses ICA to learn a set of basis functions. The second layer uses subspace ICA to encode the responses of the first layer. Finally, the responses of the first and second layers are combined into the final feature vector using the bag-of-words model [27]. The feature vectors are then fed into an SVM classifier for classification.
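The two operations named above, vectorizing 3D patches into column vectors and pooling filter responses over subspaces, can be sketched as follows. This shows only the generic square-root pooling used in independent subspace analysis for a single stage; it is not a reproduction of the model in [29]. The filter matrix `W` is assumed to have been learned elsewhere, and the function names and the `subspace_size` parameter are hypothetical.

```python
import numpy as np


def vectorize_patches(patches):
    """Flatten N patches of shape (T, H, W) into the columns of a (T*H*W, N) matrix."""
    patches = np.asarray(patches, dtype=float)
    return patches.reshape(patches.shape[0], -1).T


def subspace_pool(W, X, subspace_size):
    """Linear filter responses followed by square-root pooling over subspaces.

    W: (n_filters, dim) filter matrix, assumed to be learned elsewhere.
    X: (dim, N) matrix of vectorized patches.
    Returns an (n_filters // subspace_size, N) matrix of pooled responses.
    Consecutive rows of W are assumed to form one subspace.
    """
    responses = W @ X  # linear filter responses, one row per filter
    n_filters, n = responses.shape
    if n_filters % subspace_size:
        raise ValueError("n_filters must be divisible by subspace_size")
    grouped = responses.reshape(n_filters // subspace_size, subspace_size, n)
    # Energy of each subspace: sqrt of the sum of squared responses.
    return np.sqrt((grouped ** 2).sum(axis=1))
```

Because each output pools the squared responses of several filters, it stays roughly constant when the input shifts energy between filters of the same subspace, which is the source of the invariance such models aim for.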

3. Proposed method

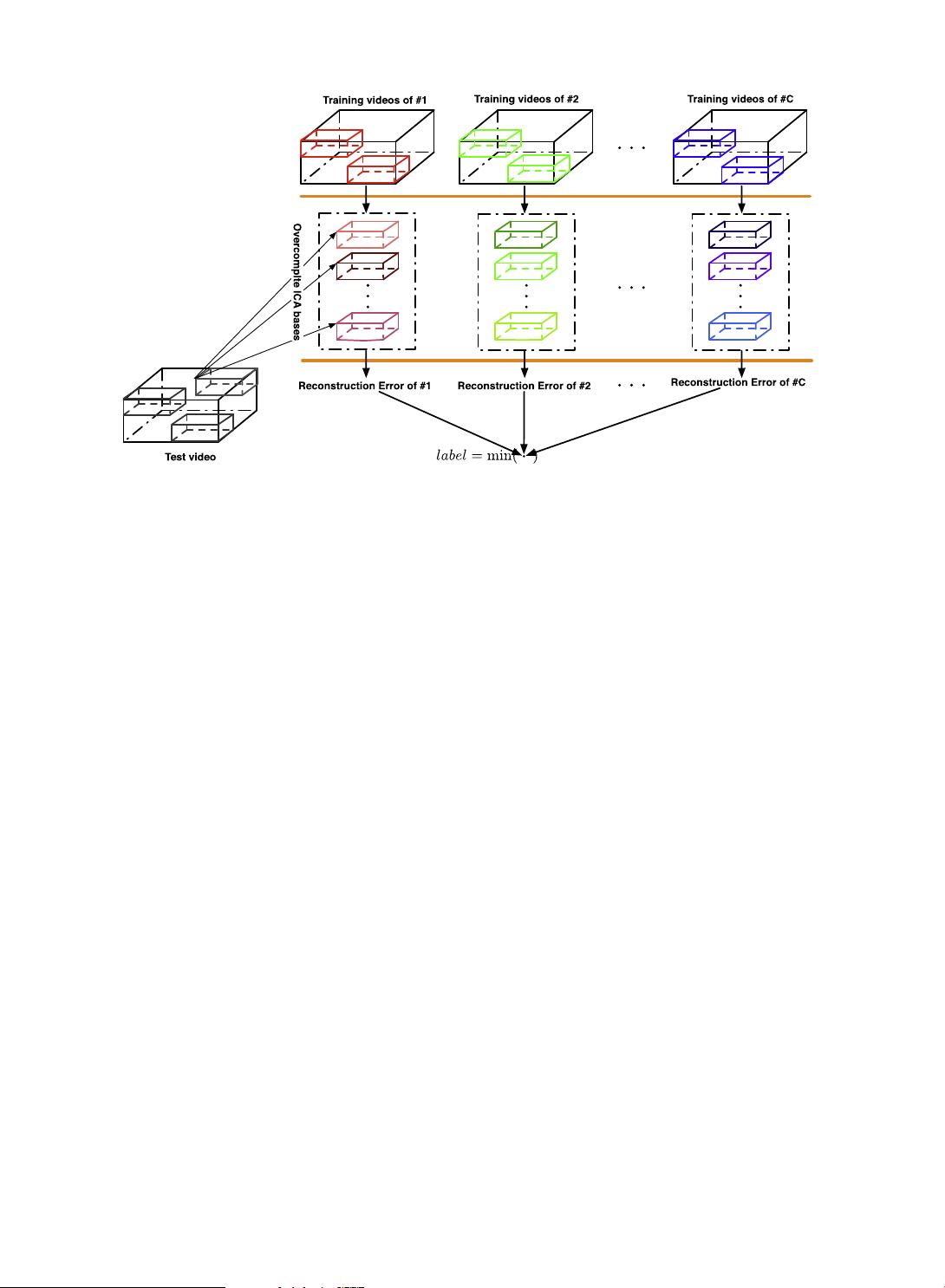

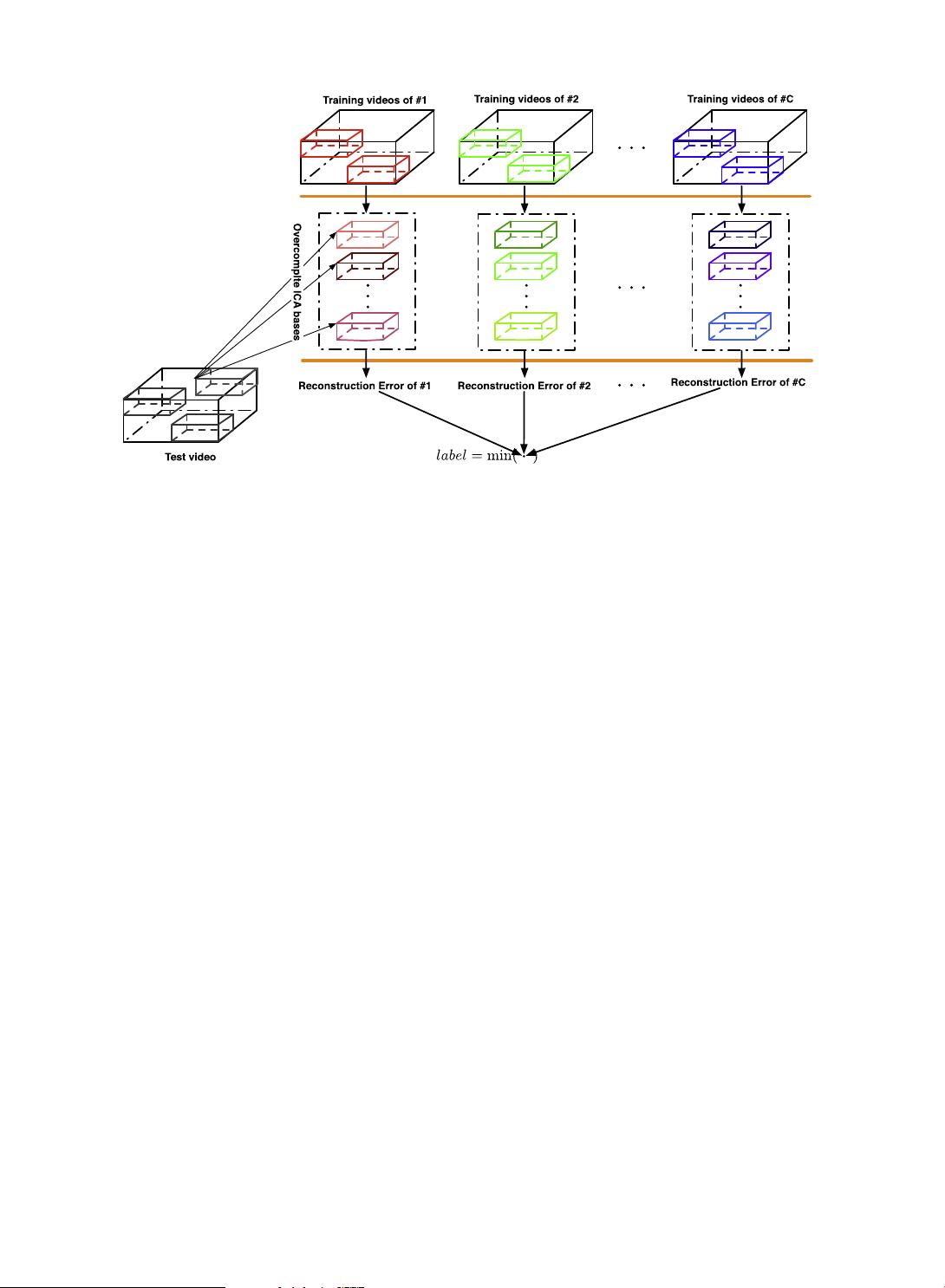

The proposed action recognition framework based on overcomplete ICA is illustrated in Fig. 1. At the training stage, after densely sampling a set of 3D patches from the training videos of each class, a set of overcomplete ICA basis functions is learned.

Fig. 1. A conceptual diagram of the proposed action recognition framework.

S. Zhang et al. / Information Sciences 281 (2014) 635–647
