row manner, rows have more entropy, which makes them hard to compress densely. In Hive 0.4, Record Columnar File (RCFile) [27] was introduced. Because RCFile is a columnar file format, it achieved some improvement in storage efficiency. However, RCFile is still data-type-agnostic, and its corresponding SerDe serializes a single row at a time. Under this structure, data-type-specific compression schemes cannot be used effectively. Thus, the first key shortcoming of Hive in storage efficiency is that data-type-agnostic file formats and one-row-at-a-time serialization prevent data values from being compressed efficiently.
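To make this concrete, below is a minimal sketch of what a data-type-aware writer can do with an integer column, assuming the column holds slowly increasing values (the `ids` data and all function names are hypothetical). Delta encoding followed by run-length encoding shrinks 1,000 sequential values to two runs. A data-type-agnostic, one-row-at-a-time serializer sees only interleaved bytes from every column of each row, so it cannot exploit this pattern. The encoding pair is a generic illustration, not ORC File's actual integer encoding.

```python
from typing import List, Tuple


def delta_encode(values: List[int]) -> List[int]:
    """Keep the first value, then store each value as the difference from its predecessor."""
    if not values:
        return []
    return [values[0]] + [cur - prev for prev, cur in zip(values, values[1:])]


def run_length_encode(values: List[int]) -> List[Tuple[int, int]]:
    """Collapse consecutive equal values into (value, run_length) pairs."""
    runs: List[Tuple[int, int]] = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1] = (v, runs[-1][1] + 1)
        else:
            runs.append((v, 1))
    return runs


def encode_int_column(values: List[int]) -> List[Tuple[int, int]]:
    """Type-aware encoding of an integer column: delta encoding, then run-length encoding."""
    return run_length_encode(delta_encode(values))


def decode_int_column(runs: List[Tuple[int, int]]) -> List[int]:
    """Invert encode_int_column: expand the runs, then undo the deltas with a running sum."""
    deltas = [v for v, n in runs for _ in range(n)]
    out: List[int] = []
    for d in deltas:
        out.append(d if not out else out[-1] + d)
    return out


ids = list(range(1000, 2000))   # hypothetical sequential ID column
encoded = encode_int_column(ids)
print(encoded)                  # [(1000, 1), (1, 999)]
assert decode_int_column(encoded) == ids
```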

The query execution performance is largely determined by the file format component, the query planning component (the query planner), and the query execution component, which contains the implementation of operators. Although RCFile is a columnar file format, its SerDe does not decompose a complex data type (e.g. Map). Thus, when a query needs to access one field of a complex data type, all fields of this type have to be read, which makes data reading inefficient. Also, RCFile was mainly designed for sequential data scans. It does not have any index, and it does not take advantage of semantic information provided by queries to skip unnecessary data. Thus, the second key shortcoming, in the file format component, is that data reading efficiency is limited by the lack of indexes and by non-decomposed columns with complex data types.
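The cost of a non-decomposed complex column can be sketched as follows, assuming each Map value is stored as one opaque serialized blob (JSON here, purely for illustration). Reading only the keys still requires parsing every key and value. In the decomposed layout, the Map column is split into child columns; the `lengths`/`keys`/`values` names and layout are hypothetical choices for this sketch, and a key-only read never touches the values column.

```python
import json
from typing import Dict, List


def read_keys_from_blobs(blobs: List[str]) -> List[List[str]]:
    """Non-decomposed: every blob is fully parsed (keys AND values) just to get the keys."""
    return [list(json.loads(blob).keys()) for blob in blobs]


def decompose_map_column(maps: List[Dict[str, int]]) -> Dict[str, list]:
    """Split a Map column into child columns: per-row entry counts, all keys, all values."""
    lengths: List[int] = []
    keys: List[str] = []
    values: List[int] = []
    for m in maps:
        lengths.append(len(m))
        keys.extend(m.keys())
        values.extend(m.values())
    return {"lengths": lengths, "keys": keys, "values": values}


def read_keys_decomposed(columns: Dict[str, list]) -> List[List[str]]:
    """Decomposed: only the 'lengths' and 'keys' child columns are read; 'values' is untouched."""
    out: List[List[str]] = []
    pos = 0
    for n in columns["lengths"]:
        out.append(columns["keys"][pos:pos + n])
        pos += n
    return out


maps = [{"a": 1, "b": 2}, {}, {"c": 3}]
blobs = [json.dumps(m) for m in maps]
print(read_keys_from_blobs(blobs))                        # [['a', 'b'], [], ['c']]
print(read_keys_decomposed(decompose_map_column(maps)))   # [['a', 'b'], [], ['c']]
```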

The query planner in the original Hive translates every operation specified in a query into an operator. When an operation requires its input datasets to be partitioned in a certain way², Hive inserts RSOps as the boundary between a Map phase and a Reduce phase. Then, the query planner breaks the entire operator tree into MapReduce jobs based on these boundaries. During query planning and optimization, the planner focuses on only a single data operation or a single MapReduce job at a time. This query planning approach can significantly degrade query execution performance by introducing unnecessary and time-consuming operations. For example, the original query planner was not aware of correlations between major operations in a query [31]. Thus, the third key shortcoming of the query planner is that the query translation approach ignores relationships between data operations and therefore introduces unnecessary operations that hurt the performance of query execution.
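The job-splitting step can be sketched in a simplified model, assuming a linear chain of operators rather than a general tree. `RS` marks an RSOp; the other labels (`TS`, `FIL`, `GBY`, `FS`) are illustrative operator names, and the function is hypothetical, not Hive's planner code. Each RS closes a Map phase, the operators after it form the Reduce phase, and a later RS starts a new job whose Map phase re-reads the intermediate output.

```python
from typing import Dict, List, Optional


def split_into_jobs(operators: List[str]) -> List[Dict[str, List[str]]]:
    """Cut a linear operator chain into MapReduce jobs at RS (RSOp) boundaries."""
    jobs: List[Dict[str, List[str]]] = []
    map_phase: List[str] = []
    reduce_phase: Optional[List[str]] = None  # None until the first RS is seen
    for op in operators:
        if op == "RS":
            if reduce_phase is not None:
                # A second boundary: close the current job; the next job's
                # Map phase only re-reads its output and ends with this RS.
                jobs.append({"map": map_phase, "reduce": reduce_phase})
                map_phase = []
            map_phase.append(op)
            reduce_phase = []
        elif reduce_phase is None:
            map_phase.append(op)      # still before any boundary: Map side
        else:
            reduce_phase.append(op)   # after a boundary: Reduce side
    jobs.append({"map": map_phase, "reduce": reduce_phase or []})
    return jobs


for job in split_into_jobs(["TS", "FIL", "RS", "GBY", "RS", "GBY", "FS"]):
    print(job)
# {'map': ['TS', 'FIL', 'RS'], 'reduce': ['GBY']}
# {'map': ['RS'], 'reduce': ['GBY', 'FS']}
```

The split is purely mechanical: in this toy model, two consecutive group-bys on the same key still produce two jobs, because each boundary is handled in isolation. This is the kind of missed correlation between major operations described above.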

The query execution component of Hive was heavily influenced by the working model of Hadoop MapReduce. In a Map or a Reduce task, the MapReduce engine fetches a key/value pair and then forwards it to the Map or Reduce function, one pair at a time. For example, in the case of word count, every Map task processes one line of a text file at a time. Hive inherited this working model and processes rows one at a time. However, this working model does not fit the architecture of modern CPUs and introduces high interpretation overhead, under-utilized parallelism, poor cache performance, and high function call overhead [19] [35] [50]. Thus, the fourth key shortcoming of the query execution component is that runtime execution efficiency is limited by the one-row-at-a-time execution model.
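The difference in control flow can be sketched as follows. Both functions are hypothetical and select the positions of values greater than a threshold. The first mirrors the inherited model: every row goes through a generic predicate call. The second, in the spirit of the batch-oriented work cited above, runs one tight loop per fixed-size batch of a primitive column. Python cannot reproduce the CPU-level effects (cache behavior, fewer function calls); the sketch only shows the shape of the two models, and the batch size of 1024 is an arbitrary assumption.

```python
from typing import Callable, List, Sequence


def evaluate_row_at_a_time(values: Sequence[int], predicate: Callable[[int], bool]) -> List[int]:
    """One-row-at-a-time: one call through a generic predicate for every row."""
    selected: List[int] = []
    for i, v in enumerate(values):
        if predicate(v):
            selected.append(i)
    return selected


def evaluate_batch_at_a_time(values: Sequence[int], threshold: int, batch_size: int = 1024) -> List[int]:
    """Batch-at-a-time: a tight loop over each contiguous batch, no per-row dispatch."""
    selected: List[int] = []
    for start in range(0, len(values), batch_size):
        batch = values[start:start + batch_size]
        selected.extend(start + i for i, v in enumerate(batch) if v > threshold)
    return selected


vals = [5, 50, 500, 7, 70]
print(evaluate_row_at_a_time(vals, lambda v: v > 10))        # [1, 2, 4]
print(evaluate_batch_at_a_time(vals, 10, batch_size=2))      # [1, 2, 4]
```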

4. FILE FORMAT

To address the shortcoming of the storage and data access effi-

ciency, we have designed and implemented an improved file for-

mat called Optimized Record Columnar File (ORC File)

3

which

2

In the rest of this paper, this kind of data operations are called

major operations and an operator evaluating a major operation is

called a major operator.

3

In the rest of this paper, we use ORC File to refer to the file format

we introduced and we use an ORC file or ORC files to refer to one

or multiple fil es stored in HDFS with the format of ORC Fil e.

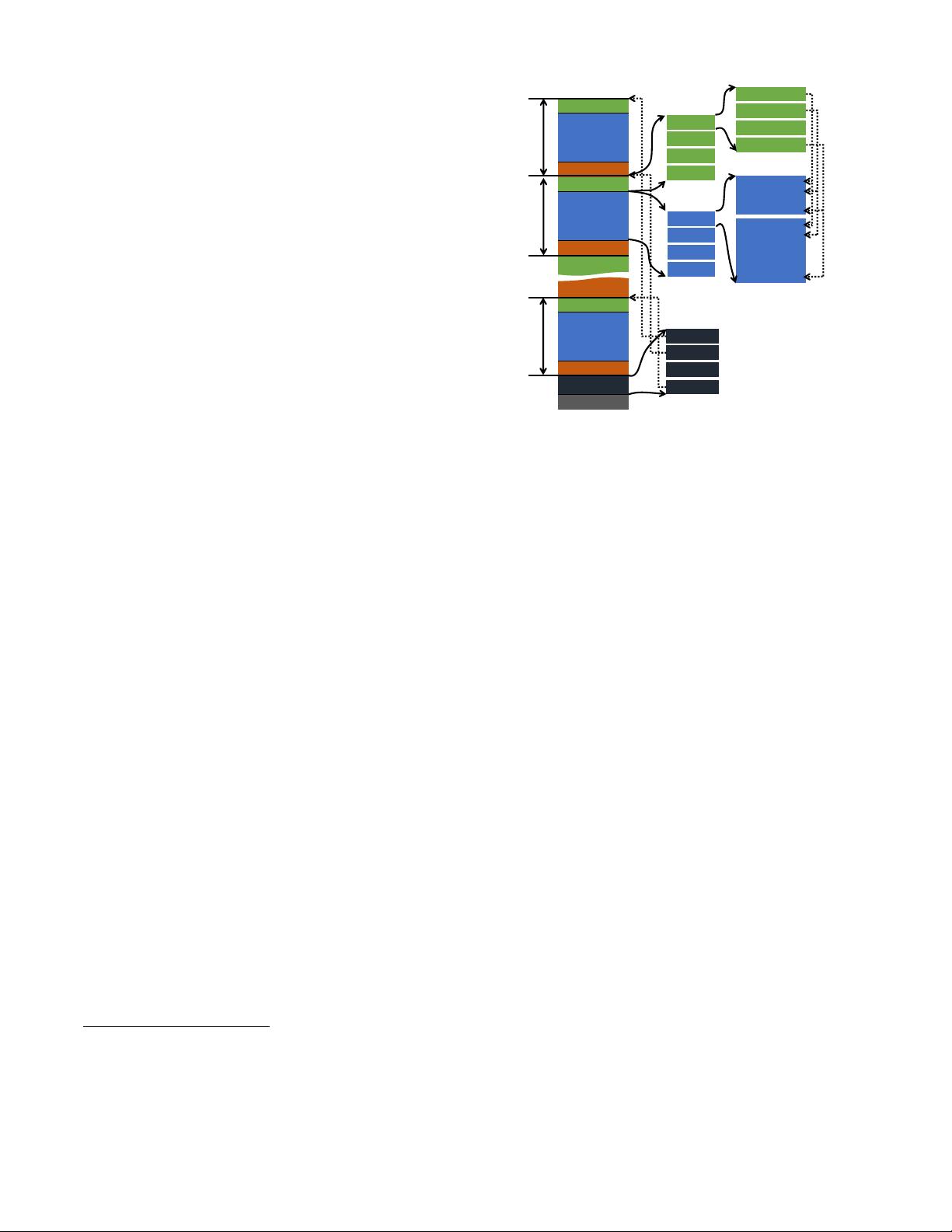

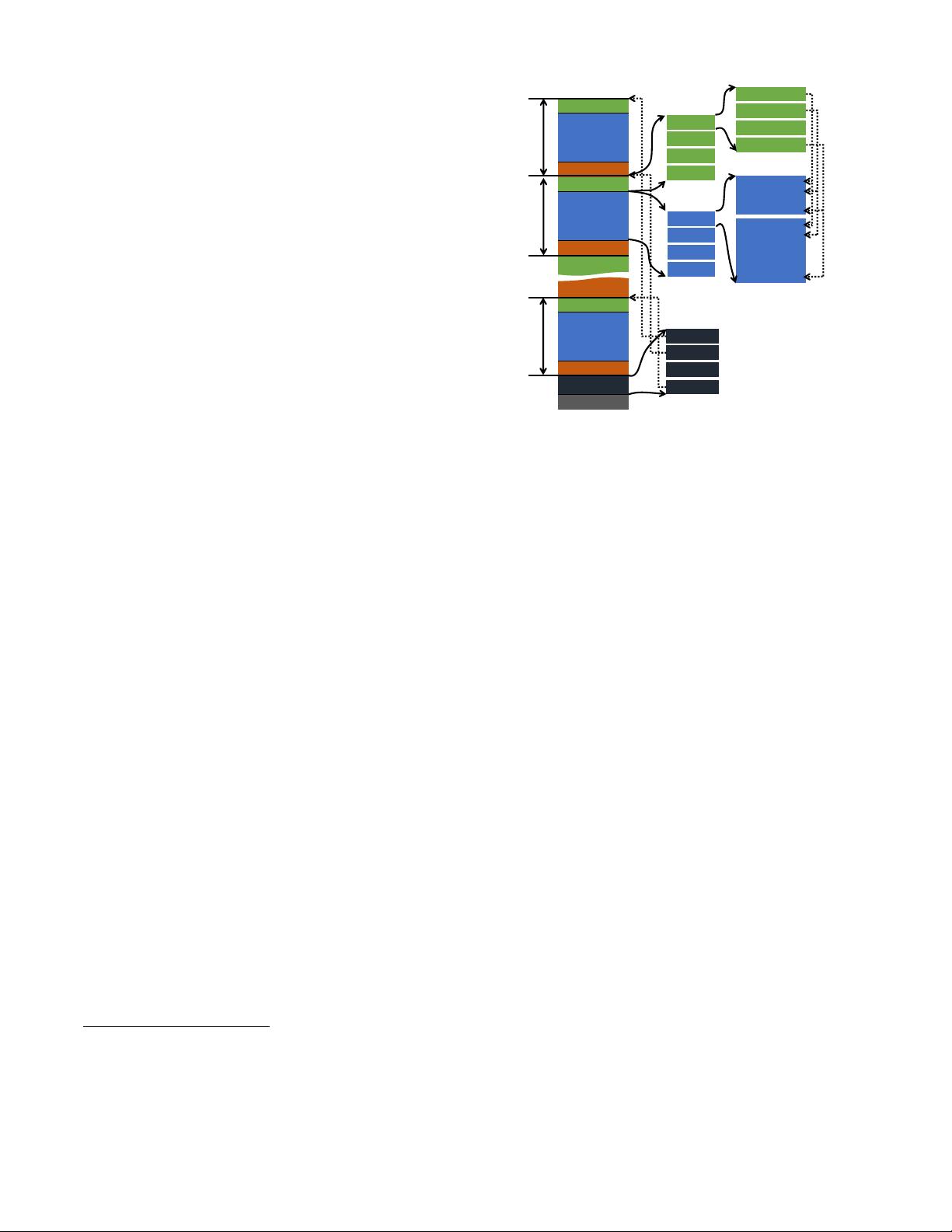

Index Data

Row Data

Stripe Footer

Index Data

Row Data

Stripe Footer

Index Data

Row Data

Stripe Footer

File Footer

Postscript

^ƚƌŝƉĞϭ^ƚƌŝƉĞϮ

^ƚƌŝƉĞŶ

Column 1

Column 2

Column m

…

Column 1

Column 2

Column m

…

Stripe 1

Stripe 2

Stripe n

…

Metadata

Streams

Data Stream

Row index 1

Row index 2

Row index k

…

Figure 2: The structure of an ORC file. Round-dotted lines

represent position pointers.

has several significant improvements over RCFile. In ORC File,

we have de-emphasized its SerDe and made the ORC file writer

data type aware. With this change, we are able to add various

type-specific data encoding schemes to store data efficiently. To

efficiently access data stored with ORC File, we have introduced

different kinds of indexes that do not exist in RCFile. Those in-

dexes are critical to help the ORC reader find needed data and skip

unnecessary data. Also, because the ORC writer is aware of the

data type of a value, it can decompose a column with a complex

data type (e.g. Map) to multiple child columns, which cannot be

done by RC File. Besides these three major improvements, we also

have introduced several practical improvements in ORC File, e.g.

a larger default stripe size and a memory manager to bound the

memory footprint of an ORC writer, aiming to overcome inconve-

nience and inefficiency we have observed through years’ operation

of RCFile.
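How an index lets a reader skip unnecessary data can be sketched with a simple min/max index. This is an assumption for illustration: statistics are kept per fixed-size group of rows of one integer column, and the group size, class, and function names are all hypothetical. For a predicate `v > threshold`, any group whose maximum is at most the threshold cannot contain a match and is skipped without being read.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class GroupStats:
    start: int    # position of the first row in the group
    end: int      # one past the last row in the group
    minimum: int
    maximum: int


def build_min_max_index(column: List[int], group_size: int) -> List[GroupStats]:
    """Record min/max statistics for every group of `group_size` consecutive rows."""
    index: List[GroupStats] = []
    for start in range(0, len(column), group_size):
        group = column[start:start + group_size]
        index.append(GroupStats(start, start + len(group), min(group), max(group)))
    return index


def scan_greater_than(column: List[int], index: List[GroupStats], threshold: int) -> Tuple[List[int], int]:
    """Return matching row positions and the number of groups actually read."""
    hits: List[int] = []
    groups_read = 0
    for g in index:
        if g.maximum <= threshold:
            continue  # no value in this group can satisfy v > threshold: skip it
        groups_read += 1
        hits.extend(i for i in range(g.start, g.end) if column[i] > threshold)
    return hits, groups_read


col = list(range(100))
idx = build_min_max_index(col, group_size=10)
print(scan_greater_than(col, idx, 94))   # ([95, 96, 97, 98, 99], 1)
```

Only one of the ten groups is read here because the column happens to be sorted; on unsorted data, the ranges overlap more and fewer groups can be skipped.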

In this section, we give an overview of ORC File and its improvements over RCFile. First, we introduce the way that ORC File organizes and stores the data values of a table (the table placement method). Then, we introduce indexes and compression schemes in ORC File. Finally, we introduce the memory manager in ORC File, which is a critical yet often ignored component. This memory manager bounds the total memory footprint of a task writing ORC files, which prevents the task from failing by running out of memory. Figure 2 shows the structure of an ORC file; we will use it to explain the design of ORC File.
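The idea of bounding the total footprint of all writers in a task can be sketched as follows. This is a hypothetical sketch, not ORC File's memory manager: it assumes each open writer registers a requested buffer size, and that when the sum exceeds a fixed pool, every writer's allowance is scaled down by the same factor. The class name, methods, and proportional policy are all our assumptions.

```python
from typing import Dict


class WriterMemoryManager:
    """Hypothetical: caps the combined buffer memory of all open writers in one task."""

    def __init__(self, pool_bytes: int) -> None:
        self.pool_bytes = pool_bytes
        self.requests: Dict[str, int] = {}  # writer id -> requested buffer size in bytes

    def add_writer(self, writer_id: str, requested_bytes: int) -> None:
        self.requests[writer_id] = requested_bytes

    def remove_writer(self, writer_id: str) -> None:
        self.requests.pop(writer_id, None)

    def scale(self) -> float:
        """1.0 while total requests fit in the pool; otherwise the factor that makes them fit."""
        total = sum(self.requests.values())
        if total <= self.pool_bytes:
            return 1.0
        return self.pool_bytes / total

    def allowance(self, writer_id: str) -> int:
        # Every writer is scaled by the same factor and rounded down,
        # so the sum of allowances never exceeds the pool.
        return int(self.requests[writer_id] * self.scale())


m = WriterMemoryManager(pool_bytes=256)
m.add_writer("a", 128)
m.add_writer("b", 128)
print(m.allowance("a"))   # 128 (requests fit in the pool)
m.add_writer("c", 256)
print(m.allowance("a"))   # 64 (total requests of 512 are scaled by 0.5)
```

A writer would then flush its buffered stripe data once its buffers reach its allowance. Proportional scaling is one simple policy that keeps each writer's share fair as writers are opened and closed.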

4.1 The Table Placement Method

When a table is stored in a file format, the table placement method of this file format describes the way that the data values of this table are organized and stored in the underlying filesystem. Based on the definition in [28], the table placement method of ORC File shares its basic structure with that of RCFile. A table stored in an ORC file is first horizontally partitioned into multiple stripes. Then, within a stripe, data values are stored column by column. All columns in a stripe are stored in the same file. Also, to adapt to different query patterns, especially ad hoc queries, ORC File does not put columns into column groups.
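The placement just described can be sketched in a few lines. For simplicity, the sketch sizes stripes by row count; this is an assumption made only for this illustration, and the function names are hypothetical. Rows are first cut into stripes, each stripe is transposed into column order, and all columns of all stripes are written one after another into a single file, with no column groups.

```python
from typing import Any, List, Sequence


def place_table(rows: Sequence[Sequence[Any]], rows_per_stripe: int) -> List[List[List[Any]]]:
    """Horizontally partition rows into stripes, then lay out each stripe column by column."""
    stripes: List[List[List[Any]]] = []
    for start in range(0, len(rows), rows_per_stripe):
        stripe_rows = rows[start:start + rows_per_stripe]
        # zip(*...) transposes the stripe from row order into column order.
        stripes.append([list(col) for col in zip(*stripe_rows)])
    return stripes


def serialize_file(stripes: List[List[List[Any]]]) -> List[Any]:
    """Write every column of every stripe into one file, in order: stripe 1 col 1, ..., stripe n col m."""
    out: List[Any] = []
    for stripe in stripes:
        for column in stripe:
            out.extend(column)
    return out


rows = [(1, "a"), (2, "b"), (3, "c")]
stripes = place_table(rows, rows_per_stripe=2)
print(stripes)                  # [[[1, 2], ['a', 'b']], [[3], ['c']]]
print(serialize_file(stripes))  # [1, 2, 'a', 'b', 3, 'c']
```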

From the perspective of the table placement method, ORC File has three improvements over RCFile. First, it provides a larger de-