4 Chen Luo, Michael J. Carey

Bloom filter and then proceed to search its B

+

-tree only

if its associated Bloom filter reports a positive answer. Al-

ternatively, a Bloom filter can be built for each leaf page

of a disk component. In this design, a point lookup query

can first search the non-leaf pages of a B

+

-tree to locate the

leaf page, where the non-leaf pages are assumed to be small

enough to be cached, and then check the associated Bloom

filter before fetching the leaf page to reduce disk I/Os. Note

that the false positives reported by a Bloom filter do not im-

pact the correctness of a query, but a query may waste some

I/O searching for non-existent keys. The false positive rate

of a Bloom filter can be computed as (1 − e

−kn/m

)

k

, where k

is the number of hash functions, n is the number of keys, and

m is the total number of bits [18]. Furthermore, the optimal

number of hash functions that minimizes the false positive

rate is k =

m

n

ln2. In practice, most systems typically use 10

bits/key as a default configuration, which gives a 1% false

positive rate. Since Bloom filters are very small and can of-

ten be cached in memory, the number of disk I/Os for point

lookups is greatly reduced by their use.

Partitioning. Another commonly adopted optimization

is to range-partition the disk components of LSM-trees into

multiple (usually fixed-size) small partitions. To minimize

the potential confusion caused by different terminologies,

we use the term SSTable to denote such a partition, following

the terminology from LevelDB [4]. This optimization has

several advantages. First, partitioning breaks a large com-

ponent merge operation into multiple smaller ones, bound-

ing the processing time of each merge operation as well as

the temporary disk space needed to create new components.

Moreover, partitioning can optimize for workloads with se-

quentially created keys or skewed updates by only merging

components with overlapping key ranges. For sequentially

created keys, essentially no merge is performed since there

are no components with overlapping key ranges. For skewed

updates, the merge frequency of the components with cold

update ranges can be greatly reduced. It should be noted that

the original LSM-tree [52] automatically takes advantage of

partitioning because of its rolling merges. However, due to

the implementation complexity of its rolling merges, today’s

LSM-tree implementations typically opt for actual physical

partitioning rather than rolling merges.

An early proposal that applied partitioning to LSM-trees

is the partitioned exponential file (PE-file) [38]. A PE-file

contains multiple partitions, where each partition can be log-

ically viewed as a separate LSM-tree. A partition can be

further split into two partitions when it becomes too large.

However, this design enforces strict key range boundaries

among partitions, which reduces the flexibility of merges.

We now discuss the partitioning optimization used in

today’s LSM-tree implementations. It should be noted that

partitioning is orthogonal to merge policies; both leveling

and tiering (as well as other emerging merge policies) can

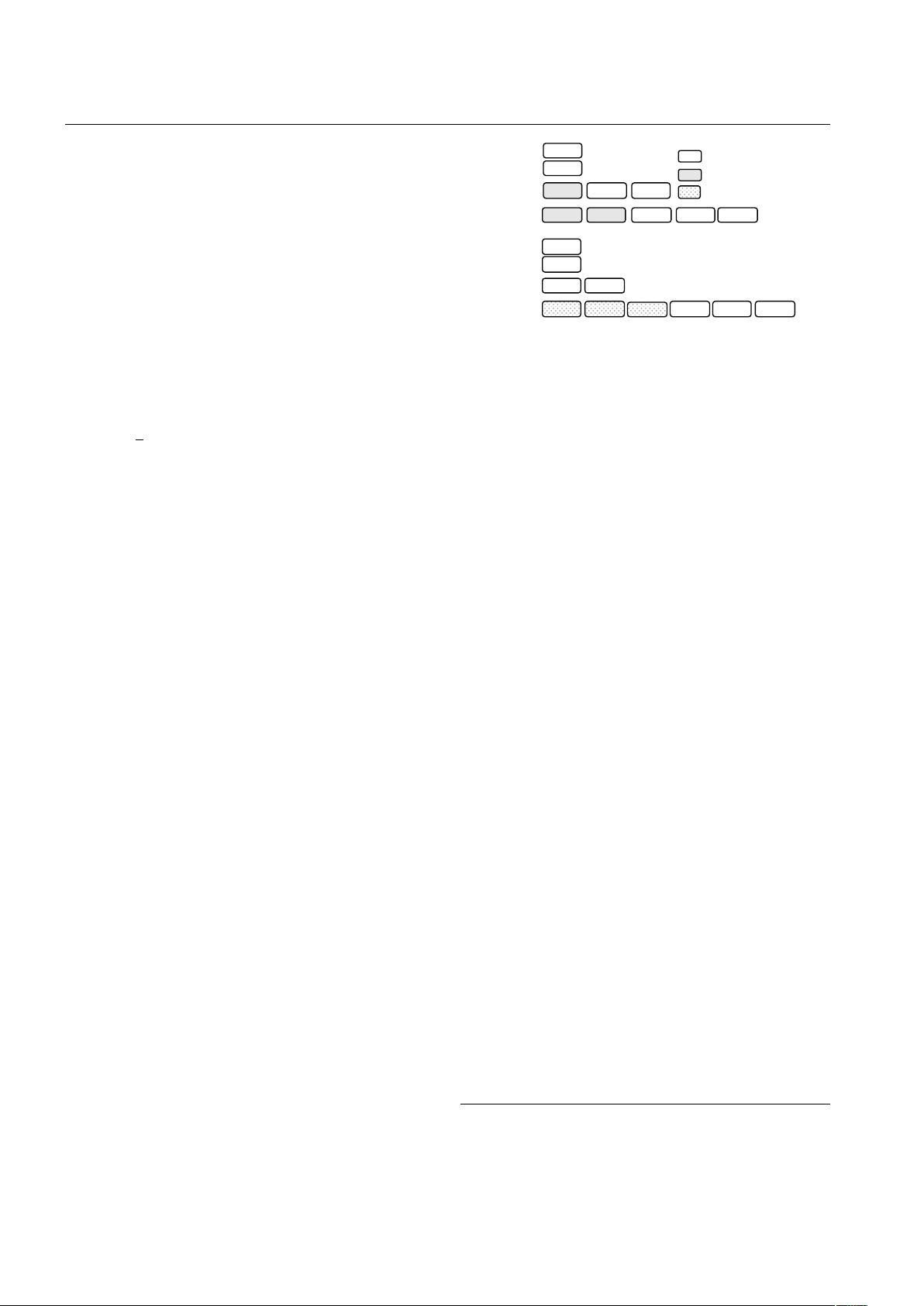

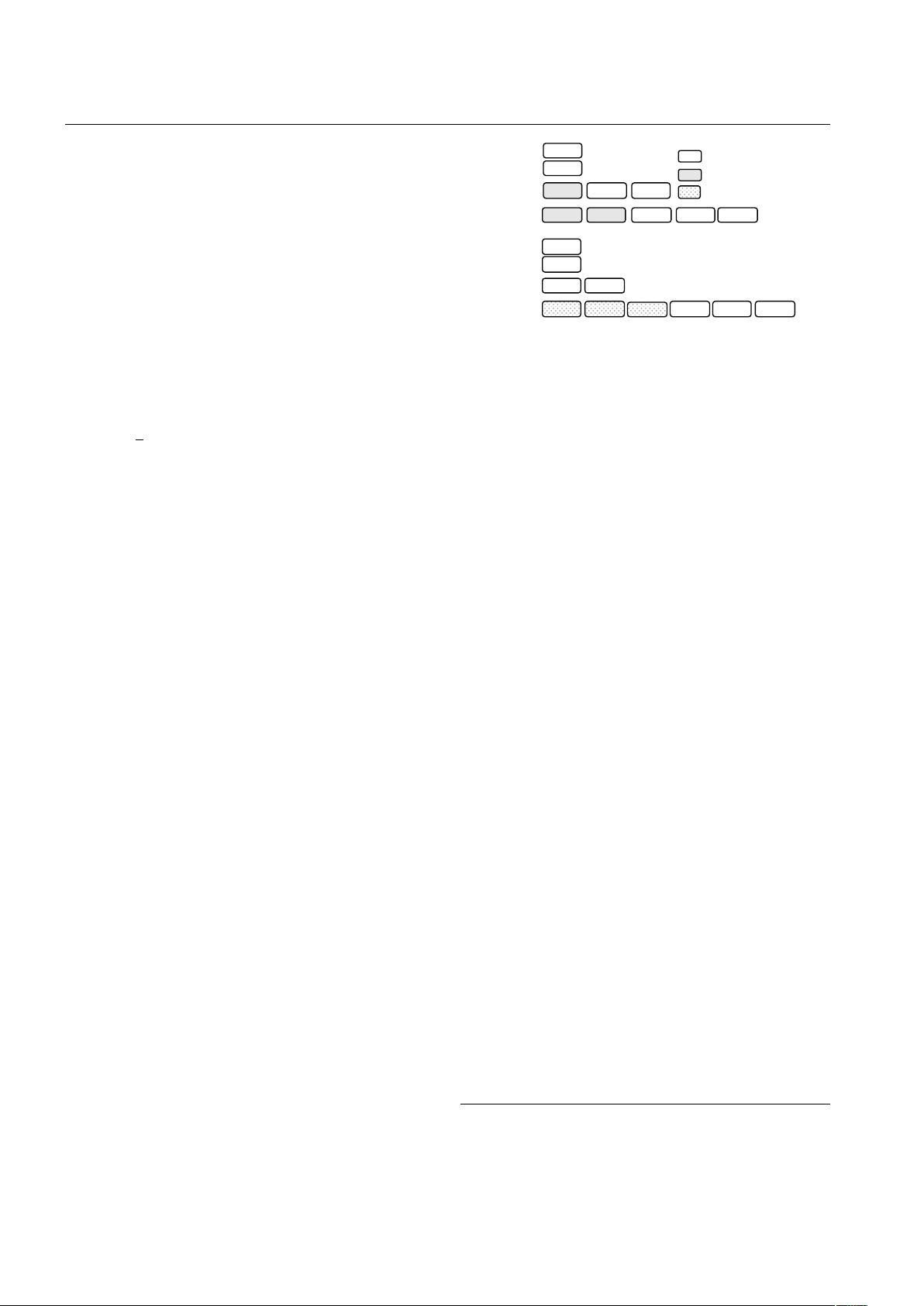

level 0

level 1

level 2

0-100

0-30 34-70 71-99

0-15 16-32 35-50 51-70 72-95

0-10 11-19

20-32

SSTable

Merging SSTable

New SSTable

34-70 71-99

35-50 51-70 72-95

level 1

level 2

Before Merge

After Merge

0-100

level 0

0-100

0-100

Fig. 4: Partitioned leveling merge policy

be adapted to support partitioning. To the best of our knowl-

edge, only the partitioned leveling policy has been fully im-

plemented by industrial LSM-based storage systems, such

as LevelDB [4] and RocksDB [6]. Some recent papers [12,

50,58,76,79] have proposed various forms of a partitioned

tiering merge policy to achieve better write performance

4

.

In the partitioned leveling merge policy, pioneered by

LevelDB [4], the disk component at each level is range-

partitioned into multiple fixed-size SSTables, as shown in

Figure 4. Each SSTable is labeled with its key range in the

figure. Note that the disk components at level 0 are not par-

titioned since they are directly flushed from memory. This

design can also help the system to absorb write bursts since

it can tolerate multiple unpartitioned components at level 0.

To merge an SSTable from level L into level L +1, all of its

overlapping SSTables at level L + 1 are selected, and these

SSTables are merged with it to produce new SSTables still at

level L + 1. For example, in the figure, the SSTable labeled

0-30 at level 1 is merged with the SSTables labeled 0-15 and

16-32 at level 2. This merge operation produces new SSTa-

bles labeled 0-10, 11-19, and 20-32 at level 2, and the old

SSTables will then be garbage-collected. Different policies

can be used to select which SSTable to merge next at each

level. For example, LevelDB uses a round-robin policy (to

minimize the total write cost).

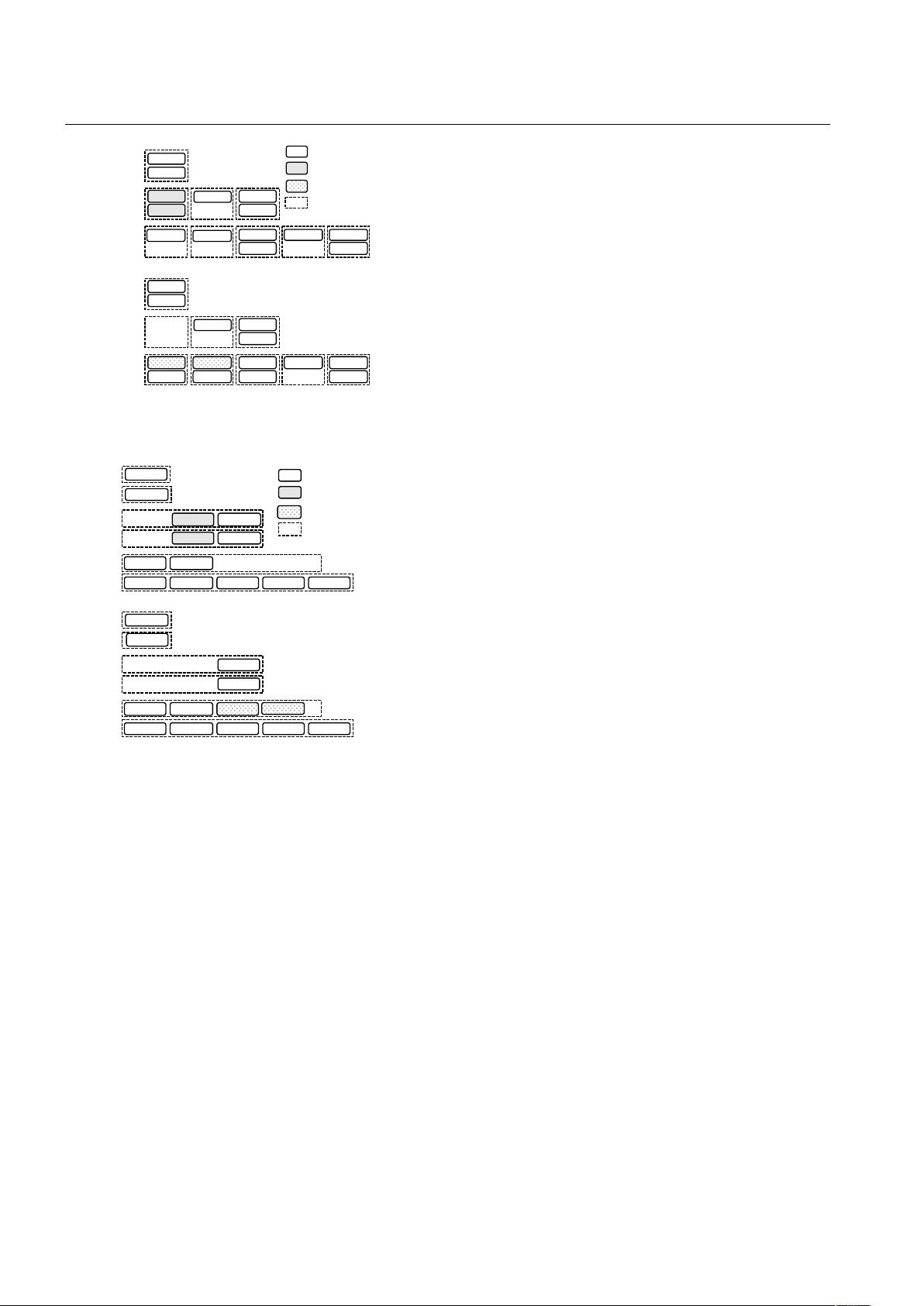

The partitioning optimization can also be applied to the

tiering merge policy. However, one major issue in doing so

is that each level can contain multiple SSTables with over-

lapping key ranges. These SSTables must be ordered prop-

erly based on their recency to ensure correctness. Two pos-

sible schemes can be used to organize the SSTables at each

level, namely vertical grouping and horizontal grouping. In

both schemes, the SSTables at each level are organized into

groups. The vertical grouping scheme groups SSTables with

overlapping key ranges together so that the groups have dis-

joint key ranges. Thus, it can be viewed as an extension

4

RocksDB supports a limited form of a partitioned tiering merge

policy to bound the maximum size of each SSTable due to its internal

restrictions. However, the disk space may still be doubled temporarily

during large merges.