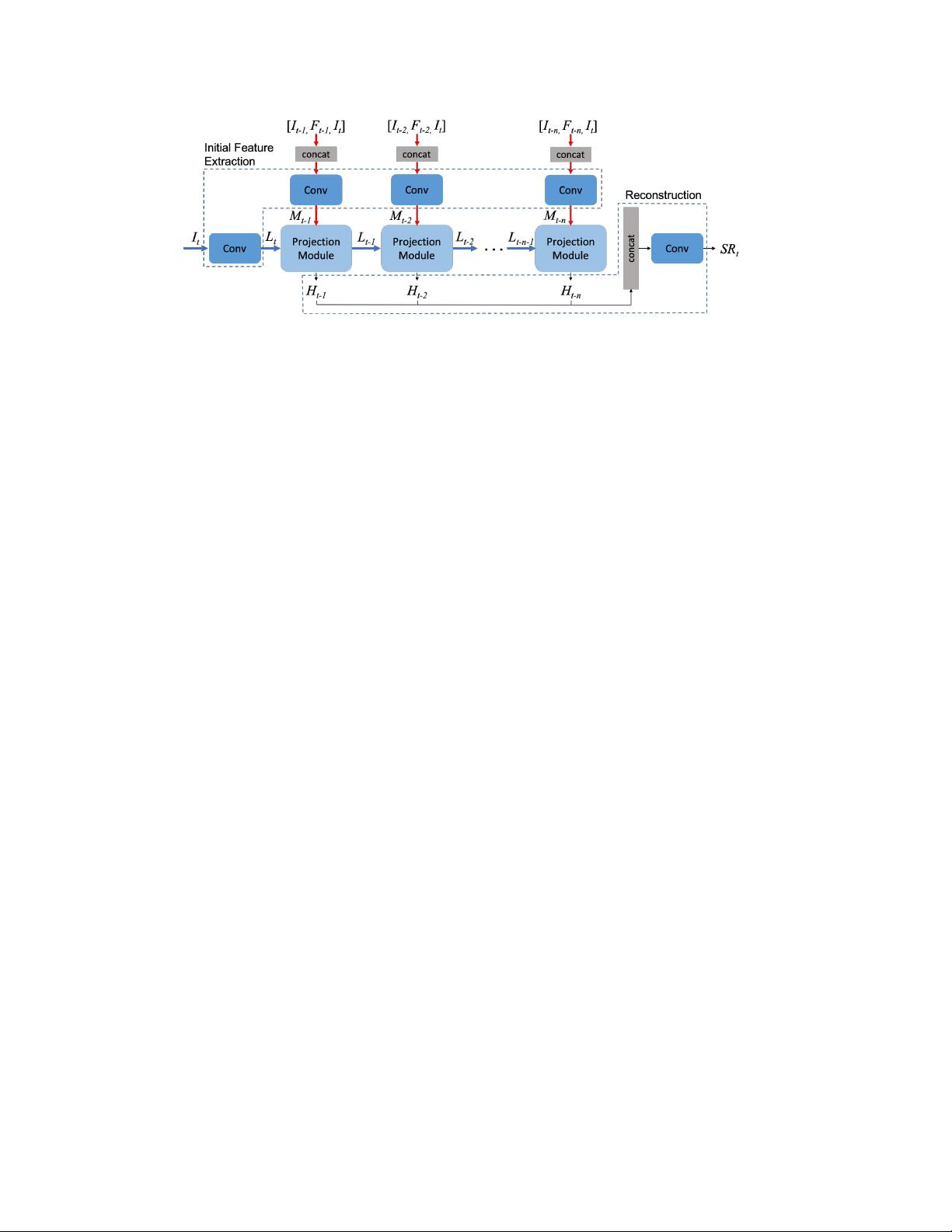

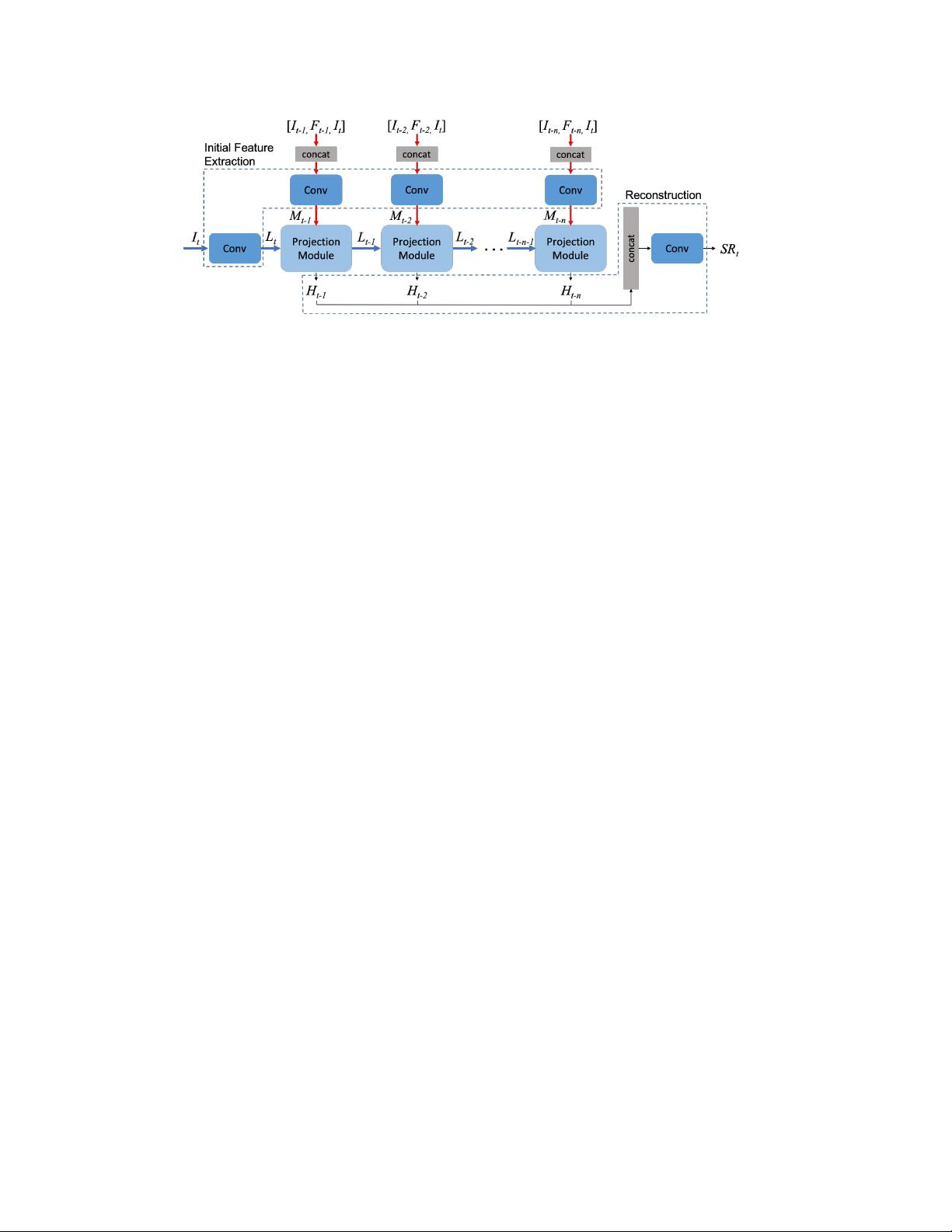

Figure 2. Overview of RBPN. The network combines two approaches. The horizontal blue line enlarges $I_t$ using SISR. The vertical red line is based on MISR and computes residual features from pairs of $I_t$ and its neighbor frames ($I_{t-1}, \ldots, I_{t-n}$) together with the precomputed dense motion flow maps ($F_{t-1}, \ldots, F_{t-n}$). Each step is connected to the next to add the temporal connection. At each projection step, RBPN observes the missing details in $I_t$ and extracts residual features from each neighbor frame to recover them.

work capacity and has no frame alignment step. [30] proposes a further improvement using a motion compensation module and a convLSTM layer [33]. Recently, [27] proposed an efficient many-to-many RNN that uses the previous HR estimate to super-resolve the next frames. While recurrent feedback connections exploit the temporal smoothness between neighboring frames of a video to improve performance, it is not easy to jointly model the subtle and significant changes observed across all frames.

3. Recurrent Back-Projection Networks

3.1. Network Architecture

Our proposed network is illustrated in Fig. 2. Let $I$ be an LR frame of size $M_l \times N_l$. The input is a sequence of $n + 1$ LR frames $\{I_{t-n}, \ldots, I_{t-1}, I_t\}$, where $I_t$ is the target frame. The goal of VSR is to output an HR version of $I_t$, denoted $SR_t$, of size $M_h \times N_h$, where $M_l < M_h$ and $N_l < N_h$. The operation of RBPN can be divided into three stages: initial feature extraction, multiple projections, and reconstruction. Note that we train the entire network jointly, end-to-end.
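As a small illustration of the input convention above, the sketch below splits a sequence $\{I_{t-n}, \ldots, I_{t-1}, I_t\}$ into the target frame and its neighbors ordered nearest-first ($I_{t-1}, \ldots, I_{t-n}$), the order in which the neighbors and their HR features appear later in this section. The helper names `split_sequence` and `hr_size` are hypothetical, and the integer scale factor in `hr_size` is an assumption; the text itself only requires $M_l < M_h$ and $N_l < N_h$.

```python
from typing import List, Sequence, Tuple, TypeVar

Frame = TypeVar("Frame")


def split_sequence(frames: Sequence[Frame]) -> Tuple[Frame, List[Frame]]:
    """Split [I_{t-n}, ..., I_{t-1}, I_t] into (I_t, [I_{t-1}, ..., I_{t-n}])."""
    if len(frames) < 2:
        raise ValueError("need the target frame and at least one neighbor")
    target = frames[-1]                   # I_t is the last frame of the input
    neighbors = list(frames[:-1])[::-1]   # nearest neighbor (t-1) first
    return target, neighbors


def hr_size(lr_size: Tuple[int, int], scale: int) -> Tuple[int, int]:
    """(M_h, N_h) for an assumed integer scale factor greater than 1."""
    if scale < 2:
        raise ValueError("an HR output requires scale > 1")
    m_l, n_l = lr_size
    return m_l * scale, n_l * scale


# Usage with string labels standing in for frames (n = 2):
target, neighbors = split_sequence(["I_{t-2}", "I_{t-1}", "I_t"])
print(target, neighbors)     # I_t ['I_{t-1}', 'I_{t-2}']
print(hr_size((64, 64), 4))  # (256, 256)
```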

Initial feature extraction. Before entering the projection modules, $I_t$ is mapped to an LR feature tensor $L_t$. For each neighbor frame $I_{t-k}$, $k \in [n] = \{1, \ldots, n\}$, we concatenate the precomputed dense motion flow map $F_{t-k}$ (a 2D vector per pixel) between $I_{t-k}$ and $I_t$ with the target frame $I_t$ and the neighbor frame $I_{t-k}$. The motion flow map encourages the projection module to extract the missing details between the pair $I_t$ and $I_{t-k}$. This stacked 8-channel “image” is mapped to a neighbor feature tensor $M_{t-k}$.
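A minimal NumPy sketch of the stacked 8-channel input, assuming RGB frames in height × width × channel layout, so that 3 (target) + 3 (neighbor) + 2 (flow) = 8 channels. The function name `stack_pair` and the channel order are illustrative assumptions, and the learned mapping from this stack to $M_{t-k}$ is not shown.

```python
import numpy as np


def stack_pair(target: np.ndarray, neighbor: np.ndarray, flow: np.ndarray) -> np.ndarray:
    """Stack I_t, I_{t-k} and F_{t-k} into one (M_l, N_l, 8) array.

    Assumptions: HWC layout, RGB frames (3 channels each), and a flow map
    holding one 2D vector per pixel (2 channels).
    """
    if target.shape != neighbor.shape or target.ndim != 3 or target.shape[2] != 3:
        raise ValueError("target and neighbor must both be (M_l, N_l, 3)")
    if flow.shape != target.shape[:2] + (2,):
        raise ValueError("flow must be (M_l, N_l, 2)")
    # Channel order [target, neighbor, flow] is an illustrative choice.
    return np.concatenate([target, neighbor, flow], axis=-1)


# Usage: one stacked input per neighbor frame k = 1..n.
rng = np.random.default_rng(0)
m_l, n_l, n = 16, 24, 2
i_t = rng.random((m_l, n_l, 3))
neighbor_frames = [rng.random((m_l, n_l, 3)) for _ in range(n)]
flows = [rng.standard_normal((m_l, n_l, 2)) for _ in range(n)]
stacked = [stack_pair(i_t, nb, f) for nb, f in zip(neighbor_frames, flows)]
print(stacked[0].shape)  # (16, 24, 8)
```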

Multiple Projections. Here, we extract the missing details in the target frame by integrating the SISR and MISR paths, and then produce a refined HR feature tensor. This stage receives $L_{t-k-1}$ and $M_{t-k}$, and outputs the HR feature tensor $H_{t-k}$.
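The recurrence can be sketched in plain Python, assuming the shared projection module is given as two callables: a hypothetical `encoder(L, M)` standing in for $\mathrm{Net}_E$ and `decoder(H)` standing in for $\mathrm{Net}_D$ (the encoder-decoder pair of Section 3.2). The LR state starts from the target features $L_t$ and is replaced after each neighbor, while one HR tensor per neighbor is collected for reconstruction.

```python
from typing import Any, Callable, List, Sequence


def multiple_projections(
    l_t: Any,
    neighbor_feats: Sequence[Any],
    encoder: Callable[[Any, Any], Any],
    decoder: Callable[[Any], Any],
) -> List[Any]:
    """Apply one shared projection module to M_{t-1}, ..., M_{t-n} in order.

    encoder(L, M) -> H and decoder(H) -> L are hypothetical stand-ins for
    Net_E and Net_D. Returns [H_{t-1}, ..., H_{t-n}].
    """
    l_state = l_t                 # recurrence starts from the target features L_t
    hr_feats: List[Any] = []
    for m in neighbor_feats:      # nearest neighbor first
        h = encoder(l_state, m)   # HR feature tensor for this neighbor
        l_state = decoder(h)      # LR state passed to the next step
        hr_feats.append(h)
    return hr_feats


# Toy run with numbers in place of tensors, to show how the state is threaded:
print(multiple_projections(1, [10, 20], lambda l, m: l + m, lambda h: 2 * h))
# [11, 42]
```

Because the same two callables are reused at every step, the parameter count does not grow with $n$, which mirrors the statement that the projection module is shared across time frames.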

Reconstruction. The final SR output is obtained by feeding the concatenated HR feature maps of all frames into a reconstruction module, similarly to [8]:

$$SR_t = f_{rec}([H_{t-1}, H_{t-2}, \ldots, H_{t-n}]).$$

In our experiments, $f_{rec}$ is a single convolutional layer.
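To make the reconstruction step concrete, here is a NumPy sketch of $f_{rec}$ as one convolution over the channel-wise concatenation of $H_{t-1}, \ldots, H_{t-n}$. The kernel size is not given in this section, so the odd-sized kernel (3 × 3 in the usage), the zero "same" padding, and the 3-channel output are assumptions, as are the names `conv2d_same` and `reconstruct`.

```python
import numpy as np


def conv2d_same(x: np.ndarray, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """One stride-1 convolution (cross-correlation) with zero 'same' padding.

    x: (H, W, C_in), weight: (k, k, C_in, C_out) with odd k, bias: (C_out,).
    """
    k = weight.shape[0]
    if k % 2 == 0 or weight.shape[1] != k or weight.shape[2] != x.shape[2]:
        raise ValueError("expected an odd k x k kernel matching C_in")
    pad = k // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    h, w, _ = x.shape
    out = np.zeros((h, w, weight.shape[3]), dtype=np.result_type(x, weight))
    for i in range(k):
        for j in range(k):
            # Shifted window (H, W, C_in) @ kernel tap (C_in, C_out) -> (H, W, C_out)
            out += xp[i:i + h, j:j + w, :] @ weight[i, j]
    return out + bias


def reconstruct(hr_feats, weight, bias):
    """SR_t = f_rec([H_{t-1}, ..., H_{t-n}]): concatenate channels, then one conv."""
    stacked = np.concatenate(hr_feats, axis=-1)  # (M_h, N_h, n * c_h)
    return conv2d_same(stacked, weight, bias)


# Usage: n = 2 neighbors, c_h = 4, a 3 x 3 kernel and an RGB output (all assumed).
rng = np.random.default_rng(0)
hr_feats = [rng.standard_normal((32, 32, 4)) for _ in range(2)]
weight = rng.standard_normal((3, 3, 8, 3)) * 0.01
sr_t = reconstruct(hr_feats, weight, np.zeros(3))
print(sr_t.shape)  # (32, 32, 3)
```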

3.2. Multiple Projection

The multiple projection stage of RBPN uses a recurrent chain of encoder-decoder modules, as shown in Fig. 3. The projection module, shared across time frames, takes two inputs, $L_{t-n-1} \in \mathbb{R}^{M_l \times N_l \times c_l}$ and $M_{t-n} \in \mathbb{R}^{M_l \times N_l \times c_m}$, and produces two outputs, $L_{t-n} \in \mathbb{R}^{M_l \times N_l \times c_l}$ and $H_{t-n} \in \mathbb{R}^{M_h \times N_h \times c_h}$, where $c_l$, $c_m$, and $c_h$ are the numbers of channels of the respective maps.

The encoder produces a hidden state of estimated HR features from the projection to a particular neighbor frame. The decoder deciphers this hidden state into the next input for the encoder module, as shown in Fig. 4. The two modules are defined as follows:

$$\text{Encoder:}\quad H_{t-n} = \mathrm{Net}_E(L_{t-n-1}, M_{t-n}; \theta_E) \tag{1}$$

$$\text{Decoder:}\quad L_{t-n} = \mathrm{Net}_D(H_{t-n}; \theta_D) \tag{2}$$
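For intuition about the shapes in Eq. (2), the sketch below implements a hypothetical, non-learned stand-in for $\mathrm{Net}_D$: it maps an HR hidden state of shape $M_h \times N_h \times c_h$ back to an LR tensor of shape $M_l \times N_l \times c_l$, so that it can serve as the next encoder input. The $r \times r$ average pooling (assuming $M_h = r M_l$ and $N_h = r N_l$ for an integer factor $r$) and the per-pixel linear channel map are assumptions chosen only for illustration; in RBPN the decoder is a network trained end-to-end.

```python
import numpy as np


def decoder_standin(h: np.ndarray, scale: int, w_ch: np.ndarray) -> np.ndarray:
    """Hypothetical Net_D stand-in: (M_h, N_h, c_h) -> (M_l, N_l, c_l).

    scale: assumed integer factor r with M_h = r * M_l and N_h = r * N_l.
    w_ch: (c_h, c_l) linear channel map applied at every pixel.
    """
    m_h, n_h, c_h = h.shape
    if m_h % scale or n_h % scale:
        raise ValueError("HR size must be divisible by the scale factor")
    # Split each spatial axis into (blocks, r) and average over every r x r block.
    blocks = h.reshape(m_h // scale, scale, n_h // scale, scale, c_h)
    pooled = blocks.mean(axis=(1, 3))  # (M_l, N_l, c_h)
    return pooled @ w_ch               # (M_l, N_l, c_l)


# Usage: a 4 x 4 single-channel "HR state" pooled back to 2 x 2 (r = 2, c_l = 1).
h_state = np.arange(16, dtype=float).reshape(4, 4, 1)
l_next = decoder_standin(h_state, 2, np.eye(1))
print(l_next[..., 0].tolist())  # [[2.5, 4.5], [10.5, 12.5]]
```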

The encoder module $\mathrm{Net}_E$ is defined as follows:

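$$\text{SISR upscale:}\quad H^l_{t-n-1} = \mathrm{Net}_{sisr}(L_{t-n-1}; \theta_{sisr}) \tag{3}$$

$$\text{MISR upscale:}\quad H^m_{t-n} = \mathrm{Net}_{misr}(M_{t-n}; \theta_{misr}) \tag{4}$$

$$\text{Residual:}\quad e_{t-n} = \mathrm{Net}_{res}(H^l_{t-n-1} - H^m_{t-n}; \theta_{res}) \tag{5}$$

$$\text{Output:}\quad H_{t-n} = H^l_{t-n-1} + e_{t-n} \tag{6}$$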
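Equations (3) to (6) can be traced with a NumPy sketch in which every sub-network is replaced by a hypothetical, non-learned stand-in: $\mathrm{Net}_{sisr}$ and $\mathrm{Net}_{misr}$ become nearest-neighbor upsampling by an assumed integer factor followed by a per-pixel linear channel map to $c_h$ channels, and $\mathrm{Net}_{res}$ becomes a per-pixel linear map. Only the data flow follows the equations (two upscaled branches, a residual computed from their difference, and that residual added back to the SISR branch); the stand-ins, names, and weights are illustrative assumptions.

```python
import numpy as np


def upsample_nearest(x: np.ndarray, scale: int) -> np.ndarray:
    """(M_l, N_l, C) -> (scale * M_l, scale * N_l, C) by pixel repetition."""
    return x.repeat(scale, axis=0).repeat(scale, axis=1)


def encoder_standin(l_prev, m_cur, scale, w_sisr, w_misr, w_res):
    """Hypothetical Net_E following Eqs. (3)-(6).

    l_prev: (M_l, N_l, c_l), m_cur: (M_l, N_l, c_m),
    w_sisr: (c_l, c_h), w_misr: (c_m, c_h), w_res: (c_h, c_h).
    """
    h_sisr = upsample_nearest(l_prev, scale) @ w_sisr  # Eq. (3): H^l_{t-n-1}
    h_misr = upsample_nearest(m_cur, scale) @ w_misr   # Eq. (4): H^m_{t-n}
    e = (h_sisr - h_misr) @ w_res                      # Eq. (5): e_{t-n}
    return h_sisr + e                                  # Eq. (6): H_{t-n}


# Usage with c_l = c_m = c_h = 1 and identity maps: e = 2 - 1 = 1, so H = 2 + 1 = 3.
eye = np.eye(1)
h_out = encoder_standin(2 * np.ones((2, 2, 1)), np.ones((2, 2, 1)), 2, eye, eye, eye)
print(h_out.shape, float(h_out.max()))  # (4, 4, 1) 3.0
```

Because this linear stand-in for $\mathrm{Net}_{res}$ only sees the difference between the two branches, the residual vanishes when the branches agree and the output reduces to the SISR estimate.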

3.3. Interpretation

Figure 5 illustrates the RBPN pipeline for a 3-frame video. In the encoder, RBPN can be seen as a combination of SISR and MISR networks. First, the target frame is enlarged by $\mathrm{Net}_{sisr}$ to produce $H^l_{t-k-1}$. Then, for each concatenation of a neighbor frame with the target frame, $\mathrm{Net}_{misr}$ performs implicit frame alignment and absorbs the motion from the neighbor frames to produce warping