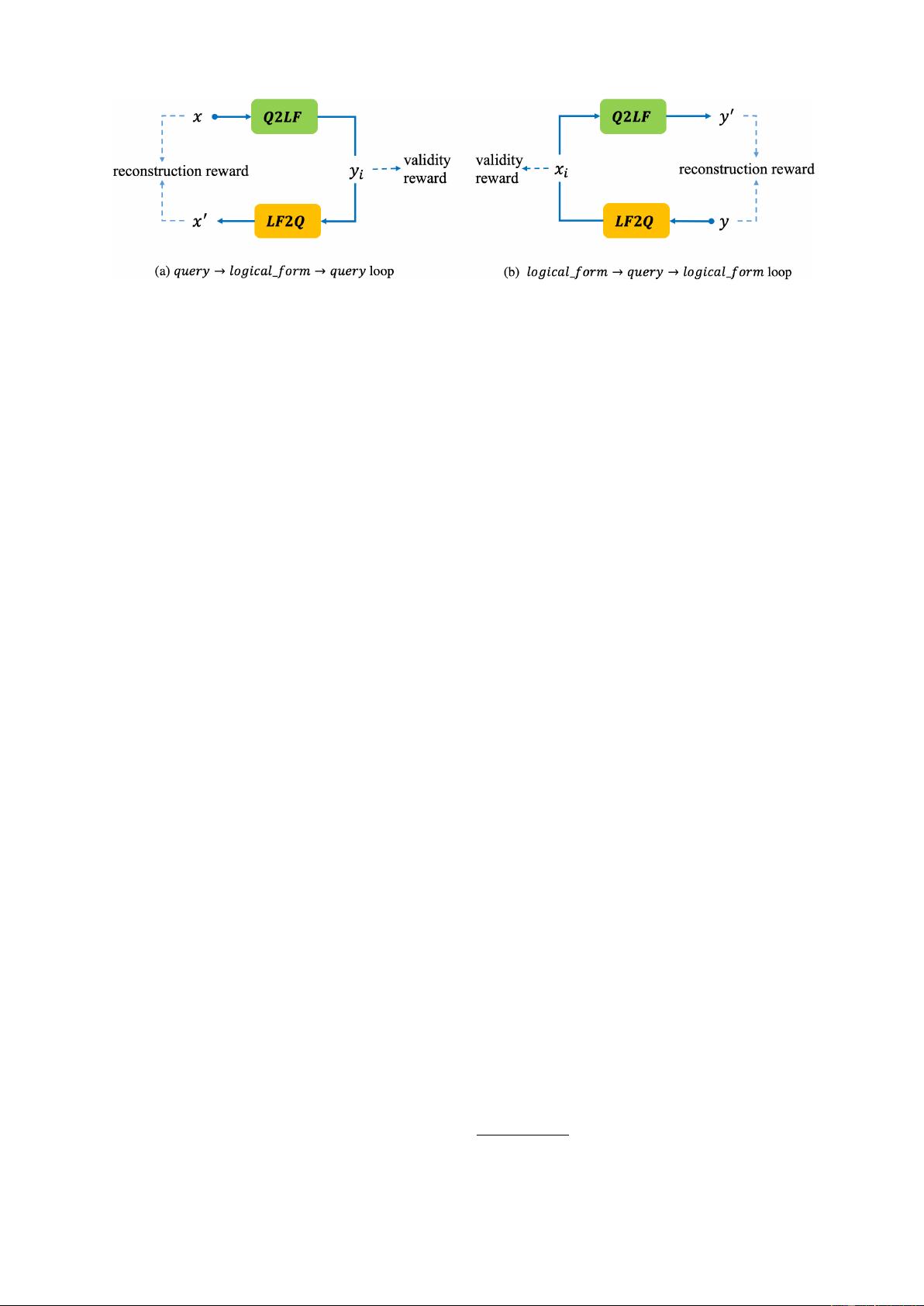

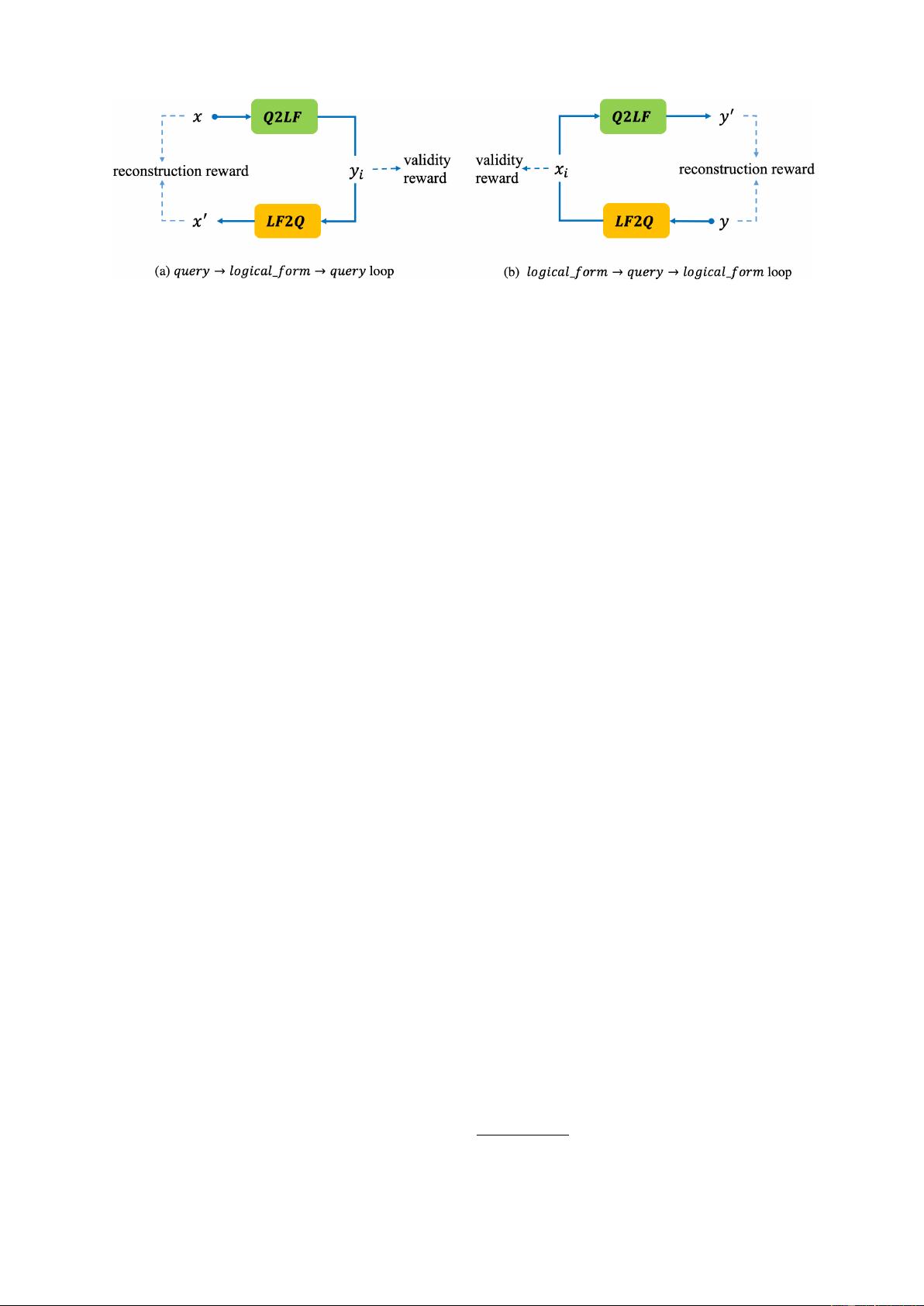

Figure 1: An overview of the dual semantic parsing framework. The primal model (Q2LF) and the dual model (LF2Q) form a closed cycle, which yields two directed loops depending on whether the loop starts from a query or from a logical form. A validity reward estimates the quality of the intermediate output, and a reconstruction reward is used to avoid information loss. The primal and dual models can be pre-trained and fine-tuned with labeled data to keep the models effective.

where $v, b_a \in \mathbb{R}^{n}$, and $W_1 \in \mathbb{R}^{n \times 2n}$, $W_2 \in \mathbb{R}^{n \times n}$ are parameters. Then we compute the vocabulary distribution $P_{gen}(y_t \mid y_{<t}, x)$ by

$$c_t = \sum_{i=1}^{|x|} a^t_i h_i \tag{5}$$

$$P_{gen}(y_t \mid y_{<t}, x) = \mathrm{softmax}(W_o [s_t; c_t] + b_o) \tag{6}$$

where $W_o \in \mathbb{R}^{|V_y| \times 3n}$, $b_o \in \mathbb{R}^{|V_y|}$ and $|V_y|$ is the output vocabulary size. Generation ends once an end-of-sequence token “EOS” is emitted.
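As a rough illustration of Eqs. 5 and 6, the following sketch computes a context vector and a vocabulary distribution with plain Python lists. It assumes that $s_t$ is the decoder state and $h_i$ are the encoder hidden states, as the dimensions above suggest; the function names and the toy numbers are hypothetical and are not taken from the authors' implementation.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def context_vector(attn, enc_states):
    """Eq. 5: attention-weighted sum of encoder states h_i."""
    dim = len(enc_states[0])
    return [sum(a * h[d] for a, h in zip(attn, enc_states)) for d in range(dim)]

def vocab_distribution(W_o, b_o, s_t, c_t):
    """Eq. 6: softmax(W_o [s_t; c_t] + b_o)."""
    features = s_t + c_t  # list concatenation plays the role of [s_t; c_t]
    logits = [sum(w * f for w, f in zip(row, features)) + b
              for row, b in zip(W_o, b_o)]
    return softmax(logits)

# Toy setting (assumed): n = 1, so s_t has 1 entry, each h_i has 2n = 2 entries,
# and W_o has 3n = 3 columns; the output vocabulary has 3 tokens.
attn = [0.25, 0.75]
enc_states = [[1.0, 0.0], [0.0, 1.0]]
s_t = [0.5]
W_o = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
b_o = [0.0, 0.0, 0.0]

c_t = context_vector(attn, enc_states)
p_gen = vocab_distribution(W_o, b_o, s_t, c_t)
```

With these toy values the third token receives the largest logit, so it also receives the largest probability under $P_{gen}$.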

Copy Mechanism. We also include a copy mechanism to improve model generalization, following the implementation of See et al. (2017), a hybrid between Nallapati et al. (2016) and the pointer network (Gulcehre et al., 2016). The predicted token comes from either a fixed output vocabulary $V_y$ or the raw input words $x$. We use a sigmoid gate function $\sigma$ to make a soft decision between generation and copy at each step $t$.

$$g_t = \sigma\left(v_g^{T} [s_t; c_t; \phi(y_{t-1})] + b_g\right) \tag{7}$$

$$P(y_t \mid y_{<t}, x) = g_t P_{gen}(y_t \mid y_{<t}, x) + (1 - g_t) P_{copy}(y_t \mid y_{<t}, x) \tag{8}$$

where $g_t \in [0, 1]$ is the balance score, $v_g$ is a weight vector and $b_g$ is a scalar bias. The distribution $P_{copy}(y_t \mid y_{<t}, x)$ is described below.
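The gate in Eq. 7 and the mixture in Eq. 8 can be sketched as follows. This is a minimal illustration, not the authors' code: distributions are represented as dictionaries from token to probability, `prev_emb` stands in for $\phi(y_{t-1})$, and all names and numbers are assumptions made for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def copy_gate(v_g, b_g, s_t, c_t, prev_emb):
    """Eq. 7: g_t = sigmoid(v_g^T [s_t; c_t; phi(y_{t-1})] + b_g)."""
    features = s_t + c_t + prev_emb  # concatenation of the three vectors
    return sigmoid(sum(v * f for v, f in zip(v_g, features)) + b_g)

def mix_distributions(g_t, p_gen, p_copy):
    """Eq. 8: g_t * P_gen + (1 - g_t) * P_copy, over the union of tokens."""
    tokens = set(p_gen) | set(p_copy)
    return {w: g_t * p_gen.get(w, 0.0) + (1.0 - g_t) * p_copy.get(w, 0.0)
            for w in tokens}

# Toy values (assumed): a zero weight vector and zero bias give g_t = 0.5.
g_t = copy_gate([0.0, 0.0, 0.0, 0.0], 0.0, [0.5], [0.25, 0.75], [1.0])
p_gen_toy = {"player": 0.6, "team": 0.4}
p_copy_toy = {"en.player.kobe_bryant": 1.0}
mixed = mix_distributions(g_t, p_gen_toy, p_copy_toy)
```

Because both inputs are proper distributions and $g_t \in [0, 1]$, the mixture is again a distribution; a token that appears only in the copy distribution still receives probability mass $(1 - g_t) P_{copy}$.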

Entity Mapping. Although the copy mechanism can deal with unknown words, many raw words cannot be directly copied into a logical form. For example, “kobe bryant” is represented as en.player.kobe_bryant in OVERNIGHT (Wang et al., 2015). Entities in a knowledge base are commonly identified by a Uniform Resource Identifier (URI, Klyne and Carroll, 2006). Thus, a mapping from raw words to URIs is applied after copying. Mathematically, $P_{copy}$ in Eq. 8 is calculated by:

$$P_{copy}(y_t = w \mid y_{<t}, x) = \sum_{i,j:\, KB(x_{i:j}) = w} \; \sum_{k=i}^{j} a^t_k$$

where $i < j$, $a^t_k$ is the attention weight of position $k$ at decoding step $t$, and $KB(\cdot)$ is a dictionary-like function mapping a specific noun phrase to the corresponding entity token in the vocabulary of logical forms.
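The copy distribution with entity mapping can be illustrated with a small sketch. It assumes that $KB(\cdot)$ is a plain dictionary from space-joined noun phrases to entity tokens and that the span $x_{i:j}$ includes both endpoints; the function name, the toy query and the attention weights are hypothetical.

```python
def copy_distribution(words, attn, kb):
    """Sum attention over every span x_{i:j} (i < j) that KB maps to a token.

    words: list of input tokens x
    attn:  attention weights a^t_k for the current decoding step t
    kb:    dict from noun phrase to entity token (stand-in for KB(.))
    """
    p_copy = {}
    n = len(words)
    for i in range(n):
        for j in range(i + 1, n):  # i < j, as stated in the text
            phrase = " ".join(words[i:j + 1])
            token = kb.get(phrase)
            if token is not None:
                # Inner sum over k = i..j of the attention weights.
                p_copy[token] = p_copy.get(token, 0.0) + sum(attn[i:j + 1])
    return p_copy

# Toy example (assumed values).
words = ["how", "many", "points", "did", "kobe", "bryant", "score"]
attn = [0.05, 0.05, 0.1, 0.05, 0.3, 0.4, 0.05]
kb = {"kobe bryant": "en.player.kobe_bryant"}
p_copy = copy_distribution(words, attn, kb)
```

In this toy example only the span “kobe bryant” is found in the dictionary, so the entity token collects the attention mass of positions 4 and 5 (about 0.7).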

2.2 Dual Model

The dual task (LF2Q) is the inverse of the primal task: it aims to generate a natural language query given a logical form. We can also use the attention-based Encoder-Decoder architecture (with or without the copy mechanism) to build the dual model.

Reverse Entity Mapping. Unlike in the primal task, we reversely map every possible KB entity $y_t$ of a logical form to the corresponding noun phrase, $KB^{-1}(y_t)$¹, before query generation. Since each KB entity may have multiple aliases in the real world, e.g. “kobe bryant” has the nickname “the black mamba”, we make different selections in two cases:

• For paired data, we select the noun phrase from $KB^{-1}(y_t)$ that exists in the query.

• For unpaired data, we randomly select one from $KB^{-1}(y_t)$.
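The two selection rules above can be sketched as follows. This is an illustration under stated assumptions: $KB^{-1}(\cdot)$ is modeled as a dictionary from entity token to a set of noun phrases, matching against the query is a plain substring test, and the fallback to random selection when no alias occurs in the query is our own choice, since the text does not cover that case. The name `select_alias` is hypothetical.

```python
import random

def select_alias(entity, kb_inverse, query=None, rng=random):
    """Pick a noun phrase for a KB entity.

    entity:     KB entity token y_t
    kb_inverse: dict from entity token to a set of aliases (stand-in for KB^{-1})
    query:      the paired natural language query, or None for unpaired data
    rng:        object with a choice() method (random module or random.Random)
    """
    aliases = sorted(kb_inverse[entity])  # sorted for reproducible ordering
    if query is not None:
        # Paired data: choose the alias that occurs in the query.
        for alias in aliases:
            if alias in query:
                return alias
        # Assumption: if no alias matches, fall back to random selection.
    # Unpaired data: choose one alias at random.
    return rng.choice(aliases)

# Toy example (assumed values).
kb_inverse = {"en.player.kobe_bryant": {"kobe bryant", "the black mamba"}}
paired = select_alias("en.player.kobe_bryant", kb_inverse,
                      query="how many points did the black mamba score")
unpaired = select_alias("en.player.kobe_bryant", kb_inverse,
                        rng=random.Random(0))
```

Passing a seeded `random.Random` instance keeps the unpaired case reproducible in experiments, while the default uses the module-level generator.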

¹ $KB^{-1}(\cdot)$ is the inverse operation of $KB(\cdot)$, which returns the set of all corresponding noun phrases given a KB entity.