Real-Time Human Pose Recognition in Parts from Single Depth Images

Jamie Shotton, Andrew Fitzgibbon, Mat Cook, Toby Sharp, Mark Finocchio, Richard Moore, Alex Kipman, Andrew Blake

Microsoft Research Cambridge & Xbox Incubation

Abstract

We propose a new method to quickly and accurately predict 3D positions of body joints from a single depth image, using no temporal information. We take an object recognition approach, designing an intermediate body parts representation that maps the difficult pose estimation problem into a simpler per-pixel classification problem. Our large and highly varied training dataset allows the classifier to estimate body parts invariant to pose, body shape, clothing, etc. Finally we generate confidence-scored 3D proposals of several body joints by reprojecting the classification result and finding local modes.

The system runs at 200 frames per second on consumer hardware. Our evaluation shows high accuracy on both synthetic and real test sets, and investigates the effect of several training parameters. We achieve state-of-the-art accuracy in our comparison with related work and demonstrate improved generalization over exact whole-skeleton nearest neighbor matching.

1. Introduction

Robust interactive human body tracking has applications including gaming, human-computer interaction, security, telepresence, and even health-care. The task has recently been greatly simplified by the introduction of real-time depth cameras [16, 19, 44, 37, 28, 13]. However, even the best existing systems still exhibit limitations. In particular, until the launch of Kinect [21], none ran at interactive rates on consumer hardware while handling a full range of human body shapes and sizes undergoing general body motions. Some systems achieve high speeds by tracking from frame to frame but struggle to re-initialize quickly and so are not robust. In this paper, we focus on pose recognition in parts: detecting from a single depth image a small set of 3D position candidates for each skeletal joint. Our focus on per-frame initialization and recovery is designed to complement any appropriate tracking algorithm [7, 39, 16, 42, 13] that might further incorporate temporal and kinematic coherence. The algorithm presented here forms a core component of the Kinect gaming platform [21].

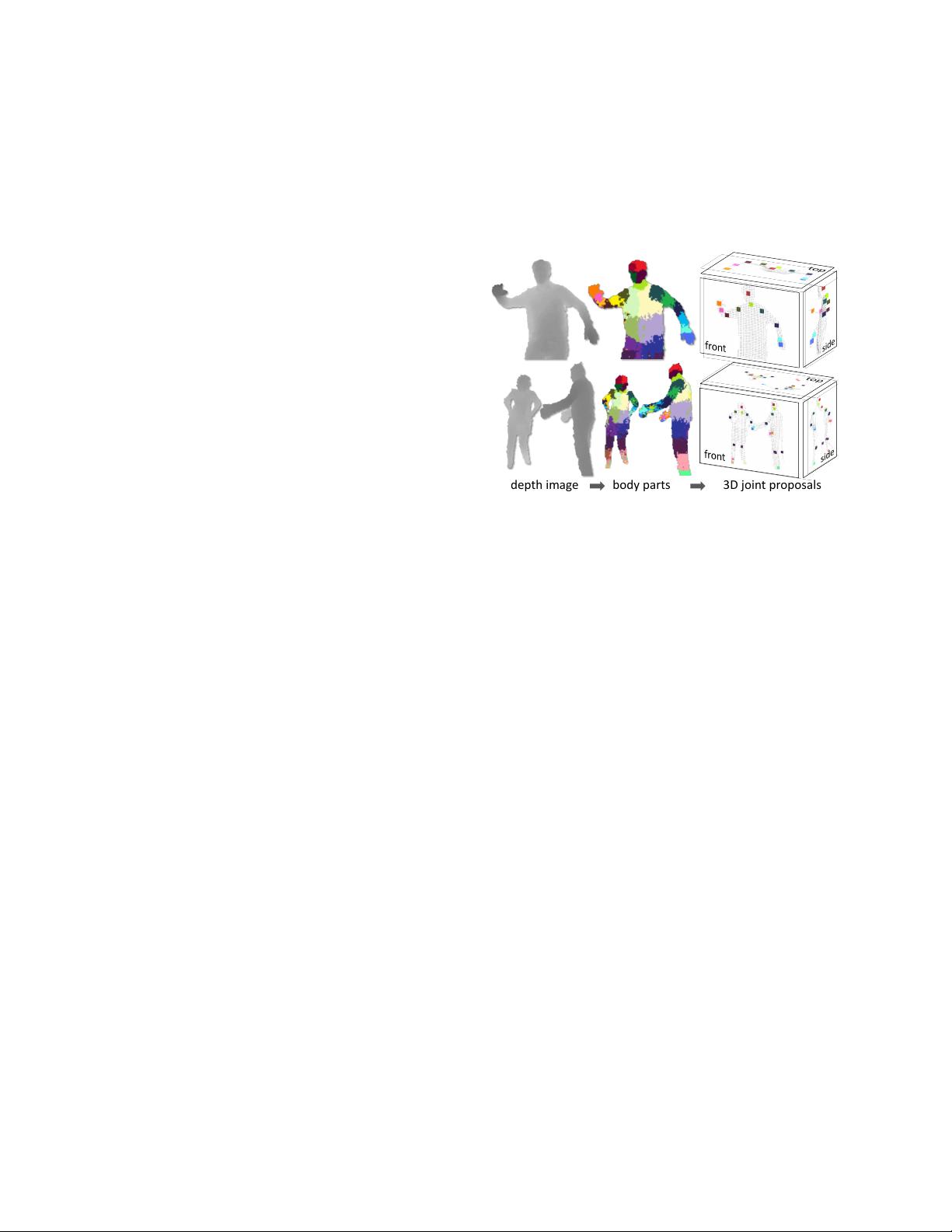

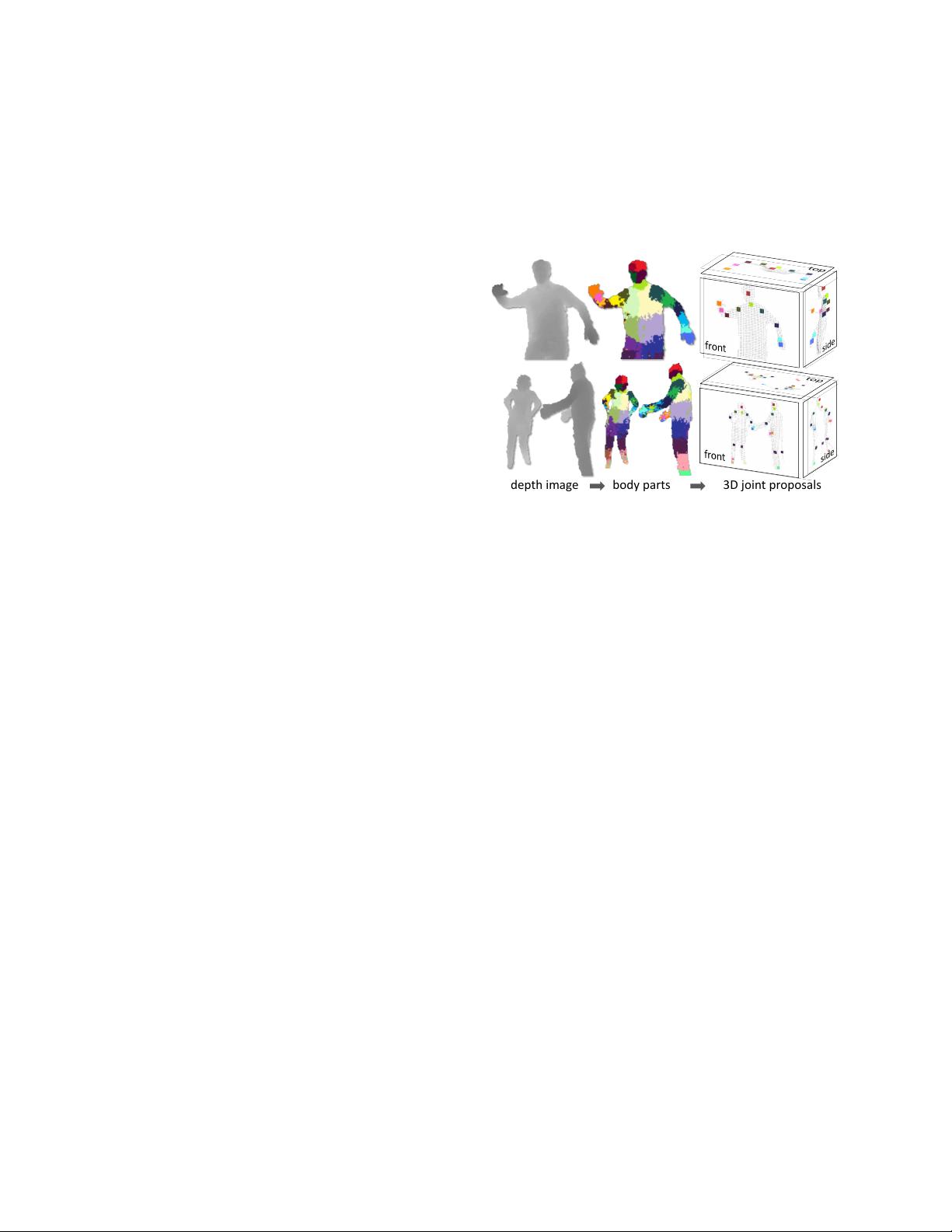

Figure 1. Overview. From a single input depth image, a per-pixel body part distribution is inferred. (Colors indicate the most likely part labels at each pixel, and correspond in the joint proposals.) Local modes of this signal are estimated to give high-quality proposals for the 3D locations of body joints, even for multiple users. Panels: depth image, body parts, 3D joint proposals.

Illustrated in Fig. 1 and inspired by recent object recognition work that divides objects into parts (e.g. [12, 43]), our approach is driven by two key design goals: computational efficiency and robustness. A single input depth image is segmented into a dense probabilistic body part labeling, with the parts defined to be spatially localized near skeletal joints of interest. Reprojecting the inferred parts into world space, we localize spatial modes of each part distribution and thus generate (possibly several) confidence-weighted proposals for the 3D locations of each skeletal joint.
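The reprojection and mode-finding step can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes a pinhole camera with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`), uses a per-pixel part probability directly as the point weight, and locates a mode with a Gaussian-kernel mean shift whose `bandwidth` is an illustrative parameter. All function names are invented for this sketch.

```python
import math


def back_project(u, v, depth_m, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at depth_m metres to (x, y, z)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def _kernel_sums(points, weights, centre, bandwidth):
    """Return the total kernel weight and the weighted coordinate sums."""
    total = 0.0
    acc = [0.0, 0.0, 0.0]
    for p, w in zip(points, weights):
        d2 = sum((a - b) ** 2 for a, b in zip(p, centre))
        k = w * math.exp(-d2 / (2.0 * bandwidth ** 2))
        total += k
        for i in range(3):
            acc[i] += k * p[i]
    return total, acc


def mean_shift_mode(points, weights, start, bandwidth, iters=50, tol=1e-6):
    """Climb from `start` to a local mode of the weighted point density.

    Returns (mode, confidence), where confidence is the kernel-weighted
    mass of the points around the returned mode (an assumed scoring rule).
    """
    mode = tuple(start)
    for _ in range(iters):
        total, acc = _kernel_sums(points, weights, mode, bandwidth)
        if total == 0.0:
            break
        new_mode = tuple(a / total for a in acc)
        shift = math.sqrt(sum((a - b) ** 2 for a, b in zip(new_mode, mode)))
        mode = new_mode
        if shift < tol:
            break
    confidence, _ = _kernel_sums(points, weights, mode, bandwidth)
    return mode, confidence


# Hypothetical pixels labelled as one body part: (u, v, depth in metres, probability).
pixels = [(320, 240, 2.0, 0.9), (326, 240, 2.0, 0.8), (314, 240, 2.0, 0.8)]
K = dict(fx=575.0, fy=575.0, cx=320.0, cy=240.0)  # assumed intrinsics
pts = [back_project(u, v, d, **K) for u, v, d, _ in pixels]
wts = [p for _, _, _, p in pixels]
proposal, score = mean_shift_mode(pts, wts, start=pts[1], bandwidth=0.06)
print(proposal, score)
```

Starting the climb from several different pixels and merging the modes that coincide would give the "possibly several" proposals per joint described above; the sketch shows a single start for brevity.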

We treat the segmentation into body parts as a per-pixel classification task (no pairwise terms or CRF have proved necessary). Evaluating each pixel separately avoids a combinatorial search over the different body joints, although within a single part there are of course still dramatic differences in the contextual appearance. For training data, we generate realistic synthetic depth images of humans of many shapes and sizes in highly varied poses sampled from a large motion capture database. We train a deep randomized decision forest classifier which avoids overfitting by using hundreds of thousands of training images. Simple, discriminative depth comparison image features yield 3D translation invariance while maintaining high computational efficiency. For further speed, the classifier can be run in parallel on each pixel on a GPU [34]. Finally, spatial modes of the inferred per-pixel distributions are computed using mean shift [10], resulting in the 3D joint proposals.
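A depth comparison feature of this kind can be sketched as below. The sketch assumes a form in which two probe offsets `u` and `v` are divided by the depth at the reference pixel, so that the probes span the same physical extent whether the body is near or far. The names `depth_feature`, `depth_at`, `make_scene`, and the constant `LARGE_DEPTH` used for background or out-of-image probes are assumptions for illustration; this section does not specify them.

```python
LARGE_DEPTH = 1.0e6  # assumed value returned for background or out-of-image probes


def depth_at(depth, x, y):
    """Depth at pixel (x, y), or LARGE_DEPTH when the probe leaves the image."""
    if 0 <= y < len(depth) and 0 <= x < len(depth[0]):
        return depth[y][x]
    return LARGE_DEPTH


def depth_feature(depth, x, y, u, v):
    """Difference of two depth probes around pixel (x, y).

    The offsets u and v are divided by the depth at (x, y), so a body
    twice as far away is probed with offsets half as long in pixels.
    """
    d = depth[y][x]
    ux, uy = x + int(round(u[0] / d)), y + int(round(u[1] / d))
    vx, vy = x + int(round(v[0] / d)), y + int(round(v[1] / d))
    return depth_at(depth, ux, uy) - depth_at(depth, vx, vy)


def make_scene(x0, width=10, height=8):
    """Toy depth image: a block 3 pixels wide and 4 tall at 2.0 m on a far background."""
    img = [[LARGE_DEPTH] * width for _ in range(height)]
    for y in range(2, 6):
        for x in range(x0, x0 + 3):
            img[y][x] = 2.0
    return img


# The same body appears one pixel further right in the second image.
a = depth_feature(make_scene(2), 3, 3, u=(4.0, 0.0), v=(0.0, 0.0))
b = depth_feature(make_scene(3), 4, 3, u=(4.0, 0.0), v=(0.0, 0.0))
print(a == b)
```

Each evaluation reads at most three depth values and does a handful of arithmetic operations, which is consistent with the efficiency claim above. The toy check only demonstrates invariance to an in-image shift; the division by depth is what is meant to compensate for movement towards or away from the camera.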

An optimized implementation of our algorithm runs in under 5 ms per frame (200 frames per second) on the Xbox 360 GPU, at least one order of magnitude faster than existing approaches. It works frame-by-frame across dramatically differing body shapes and sizes, and the learned discriminative approach naturally handles self-occlusions and
