Two-Stage Multi-Target Joint Learning for Monaural Speech Separation

Shuai Nie¹, Shan Liang¹, Wei Xue¹, Xueliang Zhang², Wenju Liu¹

¹National Laboratory of Pattern Recognition, Institute of Automation, Chinese Academy of Sciences

²College of Computer Science, Inner Mongolia University

{shuai.nie, sliang, wxue, lwj}@nlpr.ia.ac.cn cszxl@imu.edu.cn

Abstract

Recently, supervised speech separation has been extensively studied and has shown considerable promise. However, many methods model each time-frequency (T-F) unit independently with only one target, ignoring the correlations across the time-frequency domain in speech auditory features and separation targets. Moreover, owing to the temporal continuity of speech, speech auditory features and separation targets exhibit prominent spectro-temporal structures, and some targets are highly correlated. This information can be exploited for speech separation. In this paper, we propose a two-stage multi-target joint learning method to model the correlations in auditory features and separation targets. Systematic experiments show that the proposed approach consistently achieves better separation and generalization performance under low signal-to-noise ratio (SNR) conditions.

Index Terms: Speech Separation, Multi-target Learning, Computational Auditory Scene Analysis (CASA)

1. Introduction

In real-world environments, background interference substantially degrades speech intelligibility and the performance of many applications, such as speech communication and automatic speech recognition (ASR) [1, 7, 11, 17]. To address this issue, decades of effort have been devoted to speech separation, yet separation in real-world environments, especially under low-SNR and monaural conditions, remains challenging. Compared with traditional speech enhancement [12], supervised speech separation, a newer trend, has shown substantial promise in challenging acoustic conditions [11, 23].

Speech separation can be formulated as a supervised learning problem. Typically, supervised speech separation learns a function that maps noisy features extracted from the mixture to an ideal mask or to the clean spectra, which can then be used to separate the target speech from the mixture.
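The formulation above can be sketched with a linear least-squares regressor standing in for the DNN: each row of the feature matrix is one noisy frame, and each row of the target matrix is the corresponding ideal-mask or clean-spectrum frame. The linear model, the synthetic data, and the helper names `fit_mapping` and `predict` are illustrative assumptions, not part of the method described in this paper.

```python
import numpy as np

def fit_mapping(features, targets):
    """Fit W, b minimizing ||features @ W + b - targets||^2.

    A linear stand-in for the DNN mapping (illustrative assumption).
    features: (num_frames, feat_dim) noisy features, one row per frame.
    targets:  (num_frames, target_dim) training targets, e.g. ideal-mask frames.
    """
    # Append a column of ones so the bias is solved for jointly with W.
    X = np.hstack([features, np.ones((features.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(X, targets, rcond=None)
    return coef[:-1], coef[-1]

def predict(features, W, b):
    """Map noisy feature frames to estimated target frames."""
    return features @ W + b

# Synthetic example: the targets are an exact linear function of the features.
rng = np.random.default_rng(0)
feats = rng.standard_normal((500, 20))
true_W = rng.standard_normal((20, 64))
true_b = rng.standard_normal(64)
tgts = feats @ true_W + true_b

W, b = fit_mapping(feats, tgts)
est = predict(feats, W, b)
print(np.allclose(est, tgts))  # True
```

A DNN replaces the single linear map with stacked nonlinear layers, but the training pairs (noisy features in, ideal targets out) have the same shape.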

Supervised speech separation uses two main types of training targets: mask-based and spectra-based approximation targets [22, 25]. Mask-based methods learn the best approximation of an ideal mask computed from the clean speech and the noise, such as the ideal ratio mask (IRM) [13, 24], whereas spectra-based methods learn the best approximation of the spectra of clean speech, such as the Gammatone frequency power spectrum (GF) [9]. Both the IRM and the GF can be used to generate separated speech with improved intelligibility and/or perceptual quality [22], and the two are highly related. Intuitively,

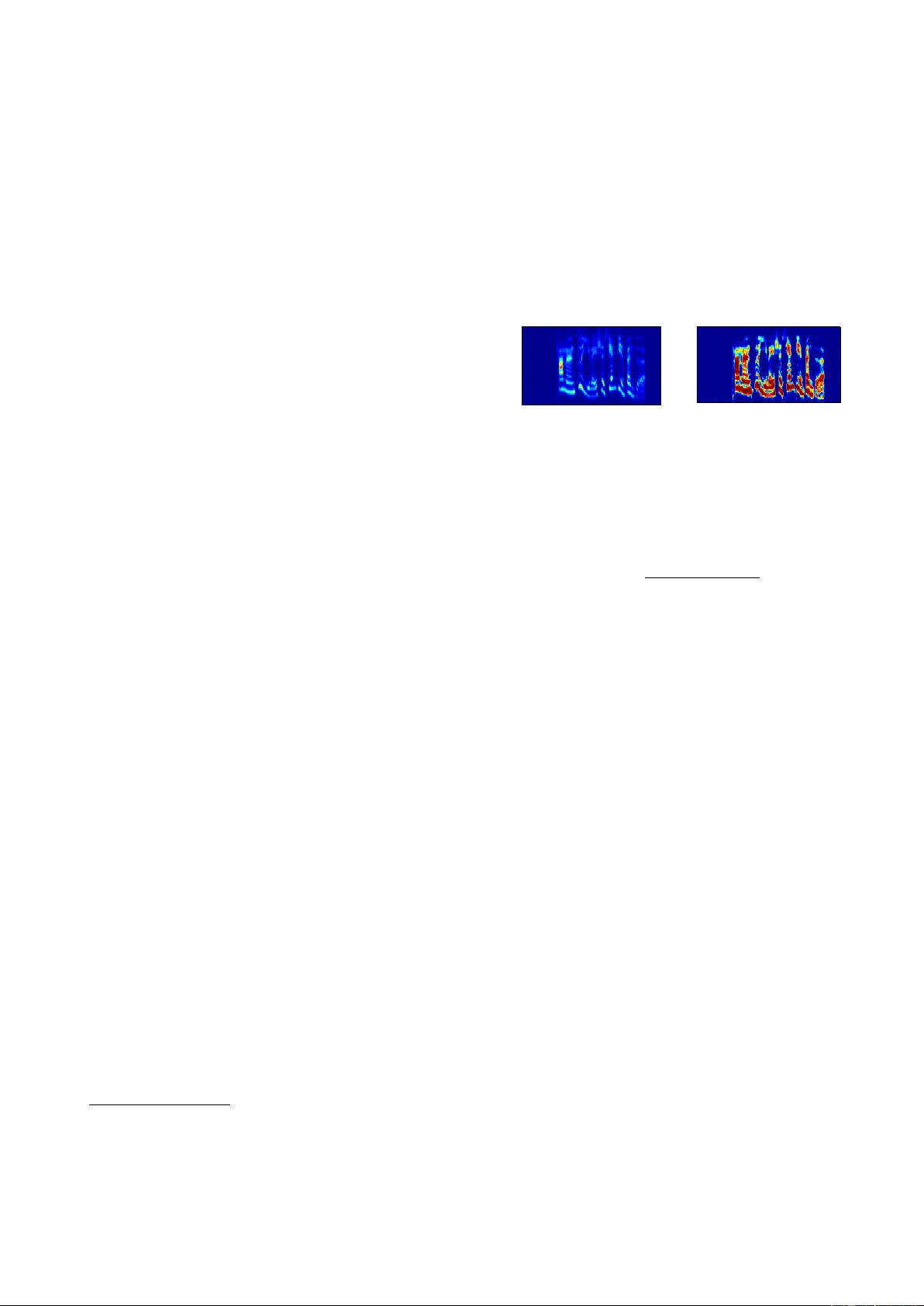

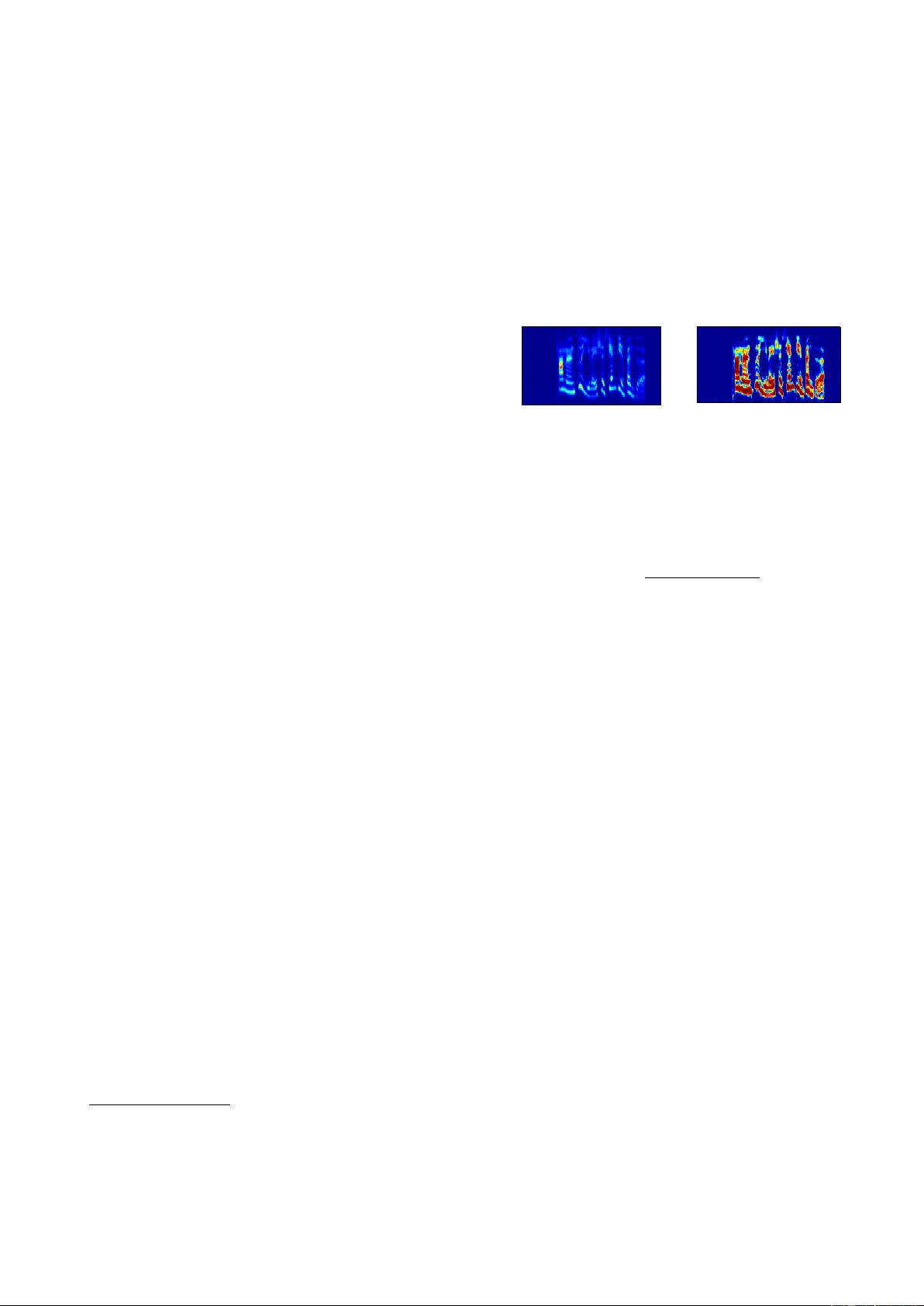

the IRM and the GF of clean speech present similar spectro-

temporal structures as the example shows in Fig. 1. Mathemati-

This research was partly supported by the China National Na-

ture Science Foundation (No.91120303, No.61273267, No.90820011,

No.61403370 and No.61365006).

Channel

Frame

100 200 300

16

32

48

64

Frame

Channel

100 200 300

16

32

48

64

Figure 1: Left Fig: the GF of clean speech; Right Fig: the IRM

at 0dB. The structures of the GF and IRM look very similar.

cally, the IRM can be derived from the GFs of clean speech and

noise, which is written as follows:

$$\mathrm{IRM}(t, f) = \frac{S^2(t, f)}{S^2(t, f) + N^2(t, f)} \tag{1}$$

where $S^2(t, f)$ and $N^2(t, f)$ are the GFs of clean speech and noise, respectively, in the T-F unit at channel $f$ and frame $t$.
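Equation (1) can be checked numerically. The NumPy sketch below assumes the clean-speech and noise GFs are available as non-negative (frames × channels) power arrays; the array sizes, the random stand-in data, the `eps` guard, and the function name `ideal_ratio_mask` are illustrative assumptions. It also checks two properties implied by Eq. (1): the IRM lies in [0, 1], and multiplying it by S² + N² returns the clean-speech GF, one concrete sense in which the two targets are related.

```python
import numpy as np

def ideal_ratio_mask(speech_gf, noise_gf, eps=1e-12):
    """IRM(t, f) = S^2(t, f) / (S^2(t, f) + N^2(t, f)), as in Eq. (1).

    speech_gf, noise_gf: non-negative arrays of shape (frames, channels)
    holding the Gammatone frequency power spectra S^2 and N^2.
    eps (an added assumption) avoids division by zero in silent T-F units.
    """
    return speech_gf / (speech_gf + noise_gf + eps)

rng = np.random.default_rng(0)
frames, channels = 300, 64                                      # illustrative sizes
S2 = rng.gamma(shape=1.0, scale=1.0, size=(frames, channels))  # stand-in clean-speech GF
N2 = rng.gamma(shape=1.0, scale=1.0, size=(frames, channels))  # stand-in noise GF

irm = ideal_ratio_mask(S2, N2)
print(irm.shape)                           # (300, 64)
print(irm.min() >= 0.0, irm.max() <= 1.0)  # True True

# Rearranging Eq. (1): S^2 = IRM * (S^2 + N^2), so the clean GF follows from the IRM.
print(np.allclose(irm * (S2 + N2), S2))    # True
```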

Moreover, owing to the sparse distribution of speech in the T-F domain, the GF keeps a relatively invariant harmonic structure across acoustic environments, while the IRM is inherently bounded between 0 and 1 and is less sensitive to estimation errors [14]. This correlation and complementarity can be exploited for speech separation, but they have been largely ignored in previous work. Therefore, jointly modeling the IRM and the GF in one model is likely to improve separation performance.

In this paper, we propose a multi-target deep neural network (DNN) that jointly models the IRM and the GF. Its target is the combination of the IRM and the GF of clean speech. To further improve separation performance, we propose a two-stage method. In the first stage, the multi-target DNN is trained to learn a function that maps the noisy features to the joint targets of a whole frame rather than of an individual T-F unit. Modeling at the frame level captures the correlations across the T-F domain in speech. Moreover, to exploit the spectro-temporal structures in the speech auditory features and in the joint targets, we model each of them with a separate denoising autoencoder (DAE) trained in an unsupervised manner. The learned DAEs are combined with a linear transformation matrix $W_h$ to initialize the multi-target DNN. In addition, to account for the differences between the errors produced by different output nodes, we further explore a backpropagation (BP) algorithm with bias weights to fine-tune the multi-target DNN. In the second stage, the estimated IRM and GF are combined by another DNN to obtain the final separation with higher smoothness and perceptual quality.
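To make the frame-level joint target and the idea of weighting errors per output node concrete, the NumPy sketch below stacks the IRM and the clean-speech GF of each frame into one target vector and computes a squared error with one weight per output node. This section does not specify the form of the bias weights, so the weights used here (GF outputs at half the weight of IRM outputs) and the helper names `joint_target` and `weighted_mse` are hypothetical illustrations, not the authors' BP algorithm.

```python
import numpy as np

def joint_target(irm, gf):
    """Stack the IRM and clean-speech GF of each frame into one target vector.

    irm, gf: arrays of shape (frames, channels).
    Returns shape (frames, 2 * channels): row t is [IRM(t, :), GF(t, :)],
    so one network output covers every channel of both targets for frame t.
    """
    if irm.shape != gf.shape:
        raise ValueError("IRM and GF must have the same shape")
    return np.concatenate([irm, gf], axis=1)

def weighted_mse(pred, target, node_weights):
    """Squared error with one weight per output node, averaged over frames.

    node_weights: shape (output_dim,). Each weight scales both that node's
    contribution to the loss and the error signal it backpropagates.
    Returns (loss, gradient of the loss with respect to pred).
    """
    diff = pred - target
    n_frames = pred.shape[0]
    loss = np.sum(node_weights * diff ** 2) / n_frames
    grad = 2.0 * node_weights * diff / n_frames
    return loss, grad

rng = np.random.default_rng(0)
channels = 64
irm = rng.uniform(size=(4, channels))    # stand-in IRM frames
gf = rng.gamma(1.0, size=(4, channels))  # stand-in clean-speech GF frames
y = joint_target(irm, gf)
print(y.shape)                           # (4, 128)

# Hypothetical weighting: GF outputs get half the weight of IRM outputs.
w = np.concatenate([np.ones(channels), 0.5 * np.ones(channels)])
pred = y + 0.1                           # a prediction off by 0.1 everywhere
loss, grad = weighted_mse(pred, y, w)
print(round(loss, 4))                    # 0.96
```

Because the gradient is scaled elementwise by `node_weights`, changing a node's weight changes how strongly its error drives the weight updates during fine-tuning.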

2. First Stage: Multi-Target Joint Learning

Typically, related tasks share some common information that

can be exploited to improve each other by the multi-task joint