LayoutNet: Reconstructing the 3D Room Layout from a Single RGB Image

Chuhang Zou†   Alex Colburn‡   Qi Shan‡   Derek Hoiem†

†University of Illinois at Urbana-Champaign
{czou4, dhoiem}@illinois.edu

‡Zillow Group
{alexco, qis}@zillow.com

Abstract

We propose an algorithm to predict room layout from a single image that generalizes across panoramas and perspective images, and across cuboid layouts and more general layouts (e.g., “L”-shaped rooms). Our method operates directly on the panoramic image, rather than decomposing it into perspective images as recent works do. Our network architecture is similar to that of RoomNet [15], but we show improvements due to aligning the image based on vanishing points, predicting multiple layout elements (corners, boundaries, size and translation), and fitting a constrained Manhattan layout to the resulting predictions. Our method compares well in speed and accuracy with other existing work on panoramas, achieves among the best accuracy on perspective images, and can handle both cuboid-shaped and more general Manhattan layouts.

1. Introduction

Estimating the 3D layout of a room from one image is an important goal, with applications such as robotics and virtual/augmented reality. The room layout specifies the positions, orientations, and heights of the walls, relative to the camera center. The layout can be represented as a set of projected corner positions or boundaries, or as a 3D mesh. Existing works apply to special cases of the problem, such as predicting cuboid-shaped layouts from perspective images or from panoramic images.
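To make the corner representation concrete, the following Python sketch projects the 3D corners of a box-shaped room into an equirectangular panorama. It assumes a camera at the origin with z pointing up and longitude 0 at the centre column of the image; these conventions, the helper names `project_to_equirect` and `cuboid_corner_pixels`, and the room dimensions are illustrative choices, not taken from the LayoutNet code.

```python
import math
from itertools import product


def project_to_equirect(point, width, height):
    """Map a camera-centred 3D point (z up) to equirectangular pixel coordinates (u, v)."""
    x, y, z = point
    lon = math.atan2(y, x)                    # longitude in [-pi, pi]
    lat = math.atan2(z, math.hypot(x, y))     # latitude in [-pi/2, pi/2]
    u = (lon / (2 * math.pi) + 0.5) * width   # longitude 0 maps to the centre column
    v = (0.5 - lat / math.pi) * height        # zenith at v = 0, nadir at v = height
    return u, v


def cuboid_corner_pixels(x_range, y_range, floor_z, ceil_z, width, height):
    """Hypothetical helper: project the 8 corners of an axis-aligned box room.

    The first four returned pixels are ceiling corners, the last four floor corners.
    """
    corners = [(x, y, z) for z in (ceil_z, floor_z) for x, y in product(x_range, y_range)]
    return [project_to_equirect(c, width, height) for c in corners]


# Illustrative room: camera 1.6 m above the floor, ceiling 1.2 m above the camera.
pixels = cuboid_corner_pixels((-2.0, 3.0), (-1.5, 2.5), -1.6, 1.2, 1024, 512)
for u, v in pixels:
    print(f"u={u:7.1f}  v={v:6.1f}")
```

Under these conventions, ceiling corners always land in the upper half of the panorama and floor corners in the lower half, which is why a level camera makes the two sets of corners easy to separate.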

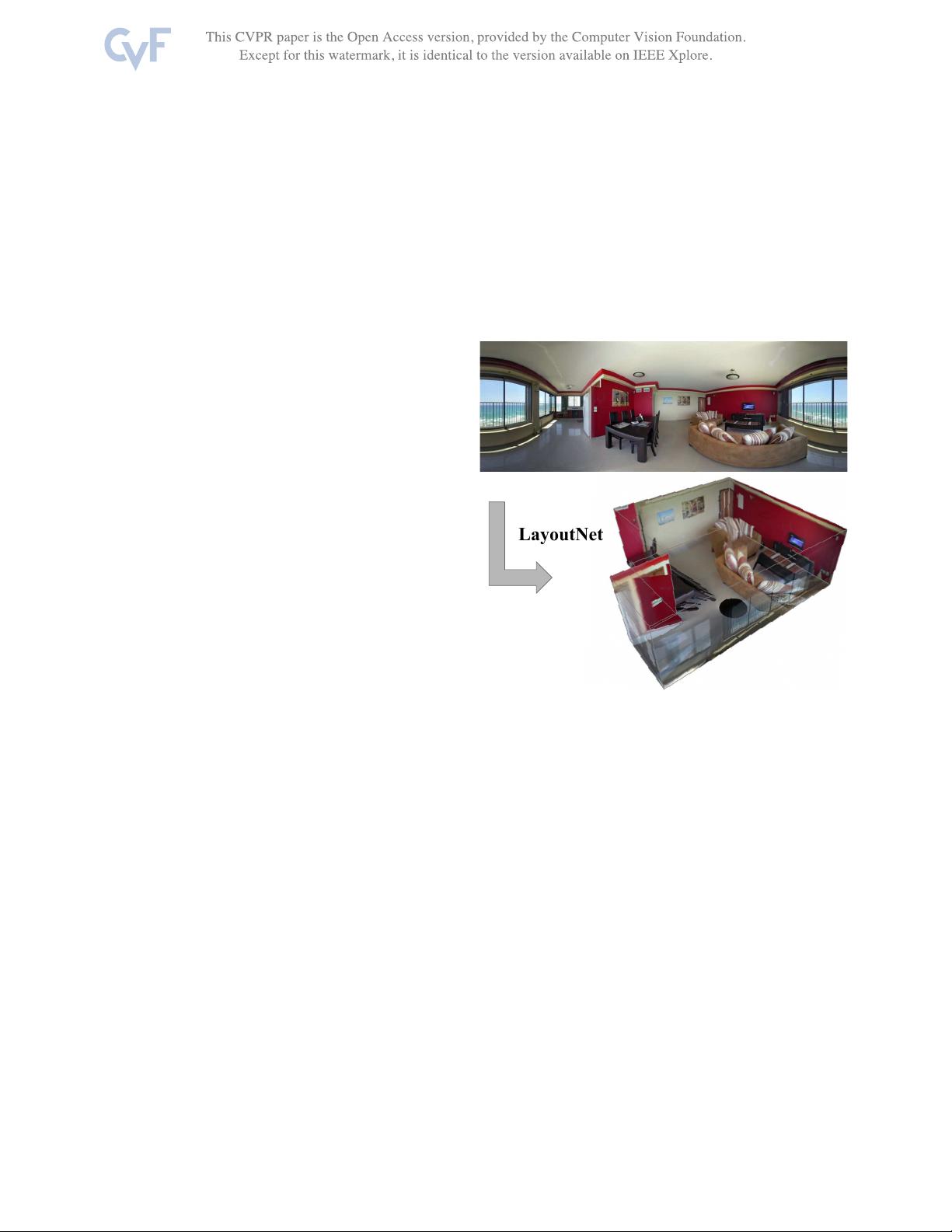

We present LayoutNet, a deep convolutional neural network (CNN) that estimates the 3D layout of an indoor scene from a single perspective or panoramic image (Figure 1). Our method compares well in speed and accuracy on panoramas and is among the best on perspective images. It also generalizes to non-cuboid Manhattan layouts, such as “L”-shaped rooms. Code is available at https://github.com/zouchuhang/LayoutNet.

Our LayoutNet approach operates in three steps (Figure 2). First, our system analyzes the vanishing points and aligns the image to be level with the floor (Sec. 3.1). This alignment ensures that wall-wall boundaries are vertical lines and, in our experiments, substantially reduces error. In the second step, corner (layout junction) and boundary probability maps are predicted directly on the image using a CNN with an encoder-decoder structure and skip connections (Sec. 3.2). Corners and boundaries each provide a complete representation of room layout, and we find that jointly predicting them in a single network leads to better estimation. Finally, the 3D layout parameters are optimized to fit the predicted corners and boundaries (Sec. 3.4).

Figure 1. Illustration. Our LayoutNet predicts a non-cuboid room layout from a single panorama under equirectangular projection.
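The first step above, levelling the panorama, can be sketched as follows, assuming the vertical vanishing direction has already been estimated as a unit vector in the camera frame (the vanishing-point detection itself is not shown). The rotation taking that direction onto +z is built with Rodrigues' formula, and the panorama is then resampled with a nearest-neighbour lookup. The function names `rotation_to_upright` and `level_panorama` and the z-up equirectangular conventions are our own assumptions; the actual procedure of Sec. 3.1 may differ.

```python
import numpy as np


def rotation_to_upright(vertical_dir):
    """Rotation matrix R with R @ vertical_dir (normalised) == [0, 0, 1] (Rodrigues' formula)."""
    a = np.asarray(vertical_dir, dtype=float)
    a = a / np.linalg.norm(a)
    b = np.array([0.0, 0.0, 1.0])
    v = np.cross(a, b)            # rotation axis, scaled by sin(angle)
    s = np.linalg.norm(v)         # sin of the rotation angle
    c = float(np.dot(a, b))       # cos of the rotation angle
    if s < 1e-12:
        # Already upright, or exactly upside down: rotate by pi about the x axis.
        return np.eye(3) if c > 0 else np.diag([1.0, -1.0, -1.0])
    k = np.array([[0.0, -v[2], v[1]],
                  [v[2], 0.0, -v[0]],
                  [-v[1], v[0], 0.0]])  # skew-symmetric cross-product matrix of v
    return np.eye(3) + k + (k @ k) * ((1.0 - c) / s**2)


def level_panorama(image, rot):
    """Nearest-neighbour resample of an equirectangular image so that rot's target +z is up."""
    h, w = image.shape[:2]
    lon = ((np.arange(w) + 0.5) / w - 0.5) * 2 * np.pi   # pixel-centre longitudes
    lat = (0.5 - (np.arange(h) + 0.5) / h) * np.pi       # pixel-centre latitudes
    lon, lat = np.meshgrid(lon, lat)                     # both of shape (h, w)
    # Viewing rays of the output (levelled) pixels, z up.
    rays = np.stack([np.cos(lat) * np.cos(lon),
                     np.cos(lat) * np.sin(lon),
                     np.sin(lat)], axis=-1)
    src = rays @ rot              # row-vector form of rot.T @ ray: levelled frame -> input frame
    src_lon = np.arctan2(src[..., 1], src[..., 0])
    src_lat = np.arcsin(np.clip(src[..., 2], -1.0, 1.0))
    cols = ((src_lon / (2 * np.pi) + 0.5) * w).astype(int) % w
    rows = np.clip(((0.5 - src_lat / np.pi) * h).astype(int), 0, h - 1)
    return image[rows, cols]
```

A pure yaw rotation only shifts the panorama horizontally, whereas a tilted vertical direction bends the wall-wall boundaries; after resampling with the rotation from `rotation_to_upright`, those boundaries become vertical image columns. Bilinear interpolation would give smoother output than the nearest-neighbour lookup used here.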

The final 3D layout loss from our optimization process is difficult to back-propagate through the network, but direct regression of the 3D parameters during training serves as an effective substitute, encouraging predictions that maximize the accuracy of the end result.
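The joint training signal described above can be sketched as a single objective: per-pixel binary cross-entropy on the predicted boundary and corner maps, plus a squared-error term on directly regressed 3D layout parameters that stands in for the non-differentiable fitting step. The choice of binary cross-entropy, the layout parameter vector, and the weight `w_param` are assumptions for illustration, not the paper's exact settings.

```python
import numpy as np


def binary_cross_entropy(pred, target, eps=1e-7):
    """Mean per-pixel binary cross-entropy between a probability map and a 0/1 target map."""
    p = np.clip(pred, eps, 1.0 - eps)   # avoid log(0)
    return float(-np.mean(target * np.log(p) + (1.0 - target) * np.log(1.0 - p)))


def layout_training_loss(pred_boundary, gt_boundary, pred_corner, gt_corner,
                         pred_params, gt_params, w_param=0.01):
    """Hypothetical joint objective: boundary map + corner map + 3D parameter regression.

    The regression term is differentiable, unlike the post-hoc layout optimization,
    so it can push the network toward predictions that fit a good 3D layout.
    """
    map_loss = (binary_cross_entropy(pred_boundary, gt_boundary)
                + binary_cross_entropy(pred_corner, gt_corner))
    diff = np.asarray(pred_params, dtype=float) - np.asarray(gt_params, dtype=float)
    param_loss = float(np.mean(diff ** 2))  # squared error on regressed parameters
    return map_loss + w_param * param_loss
```

Keeping `w_param` small lets the map terms dominate while the regression term still shapes the features; the right balance would have to be tuned.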

Our contributions are:

• We propose a more general RGB image to layout algorithm that is suitable for perspective and panoramic
