The Stanford CoreNLP Natural Language Processing Toolkit

Christopher D. Manning

Linguistics & Computer Science

Stanford University

manning@stanford.edu

Mihai Surdeanu

SISTA

University of Arizona

msurdeanu@email.arizona.edu

John Bauer

Dept of Computer Science

Stanford University

horatio@stanford.edu

Jenny Finkel

Prismatic Inc.

jrfinkel@gmail.com

Steven J. Bethard

Computer and Information Sciences

U. of Alabama at Birmingham

bethard@cis.uab.edu

David McClosky

IBM Research

dmcclosky@us.ibm.com

Abstract

We describe the design and use of the Stanford CoreNLP toolkit, an extensible pipeline that provides core natural language analysis. This toolkit is quite widely used, both in the research NLP community and also among commercial and government users of open source NLP technology. We suggest that this follows from a simple, approachable design, straightforward interfaces, the inclusion of robust and good quality analysis components, and not requiring use of a large amount of associated baggage.

1 Introduction

This paper describes the design and development of Stanford CoreNLP, a Java (or at least JVM-based) annotation pipeline framework, which provides most of the common core natural language processing (NLP) steps, from tokenization through to coreference resolution. We describe the original design of the system and its strengths (section 2), simple usage patterns (section 3), the set of provided annotators and how properties control them (section 4), and how to add additional annotators (section 5), before concluding with some higher-level remarks and additional appendices. While there are several good natural language analysis toolkits, Stanford CoreNLP is one of the most used, and a central theme is trying to identify the attributes that contributed to its success.

2 Original Design and Development

Our pipeline system was initially designed for in-

ternal use. Previously, when combining multiple

natural language analysis components, each with

their own ad hoc APIs, we had tied them together

with custom glue code. The initial version of the

!"#$%&'()"%*

+$%,$%-$*+./&0%1*

2(3,4"546.$$-7*!(11&%1*

8"3.7"/"1&-(/*9%(/:6&6*

;(<$=*>%),:*?$-"1%&)"%*

+:%,(-)-*2(36&%1*

@,7$3*9%%",(,"36*

A"3$5$3$%-$*?$6"/B)"%**

?(C*

,$D,*

>D$-B)"%*E/"C*

9%%",()"%*

@FG$-,*

9%%",(,$=*

,$D,*

HtokenizeI*

HssplitI*

HposI*

HlemmaI*

HnerI*

HparseI*

HdcorefI*

(gender, sentiment)!

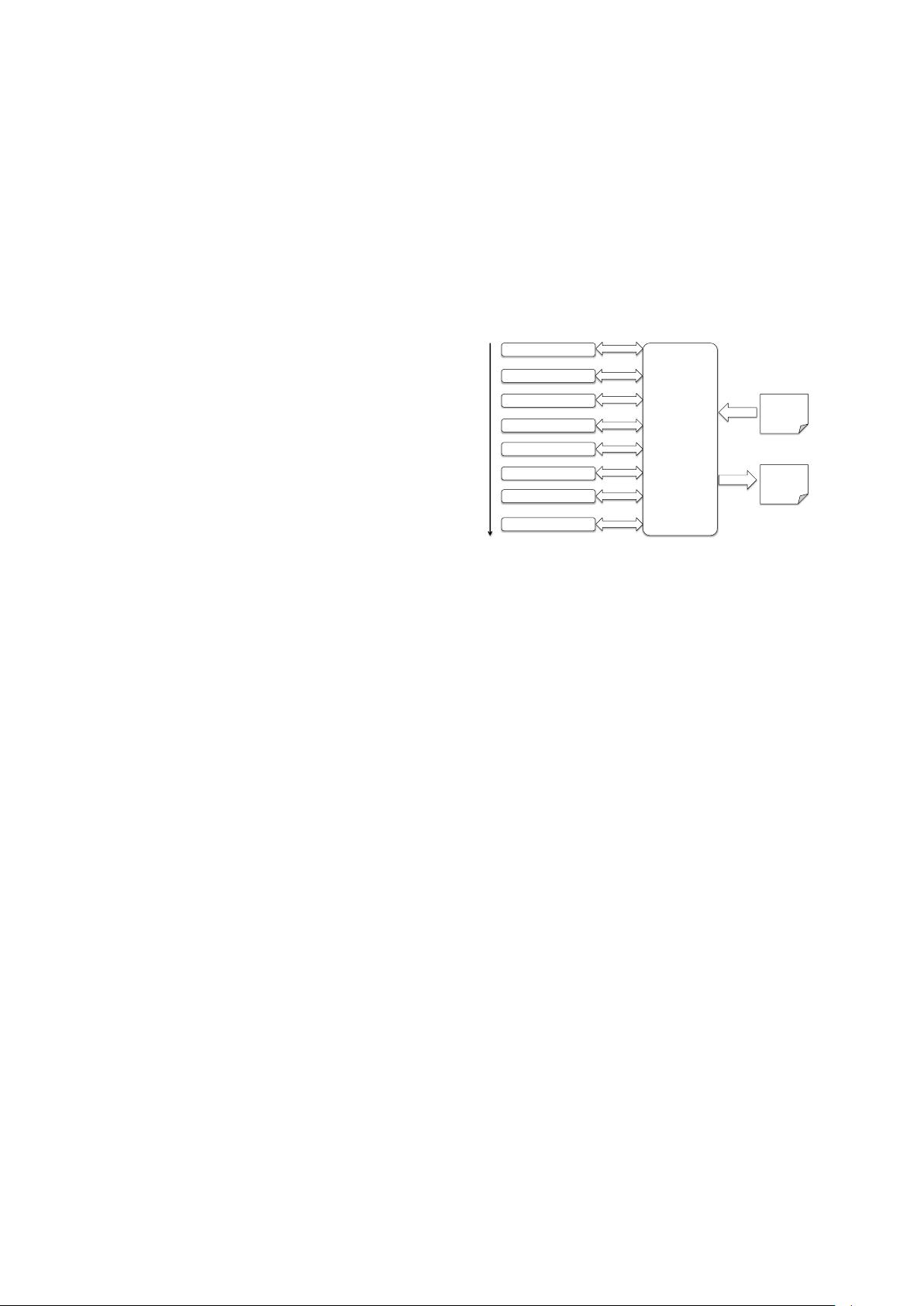

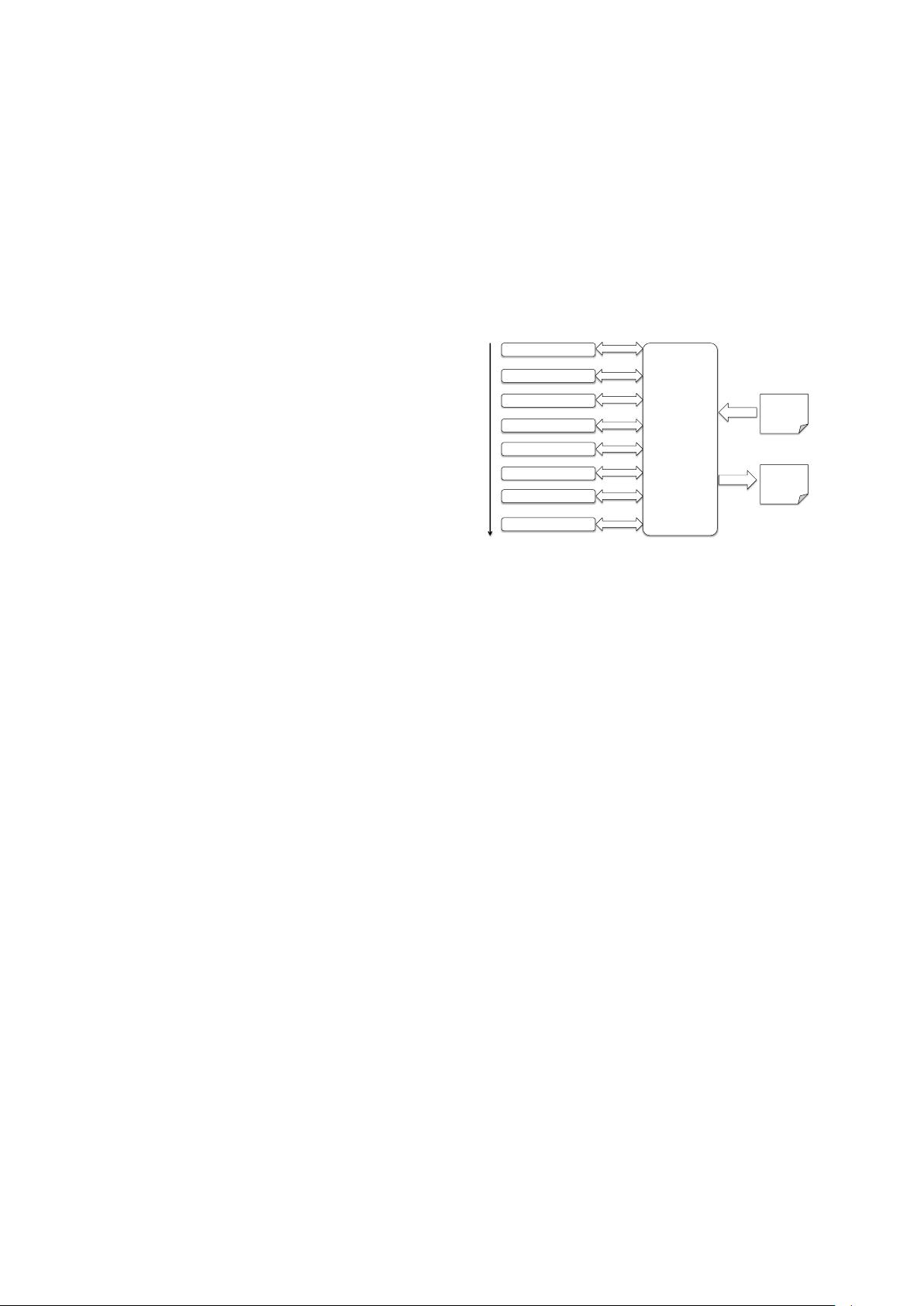

Figure 1: Overall system architecture: Raw text

is put into an Annotation object and then a se-

quence of Annotators add information in an analy-

sis pipeline. The resulting Annotation, containing

all the analysis information added by the Annota-

tors, can be output in XML or plain text forms.

annotation pipeline was developed in 2006 in or-

der to replace this jumble with something better.

A uniform interface was provided for an Annota-

tor that adds some kind of analysis information to

some text. An Annotator does this by taking in an

Annotation object to which it can add extra infor-

mation. An Annotation is stored as a typesafe het-

erogeneous map, following the ideas for this data

type presented by Bloch (2008). This basic archi-

tecture has proven quite successful, and is still the

basis of the system described here. It is illustrated

in figure 1. The motivations were:

• To be able to quickly and painlessly get linguistic annotations for a text.

• To hide variations across components behind a common API.

• To have a minimal conceptual footprint, so the system is easy to learn.

• To provide a lightweight framework, using plain Java objects (rather than something of heavier weight, such as XML or UIMA’s Common Analysis System (CAS) objects).
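
To make the architecture described in this section concrete, the following is a minimal sketch of a typesafe heterogeneous map in the style of Bloch (2008), together with a uniform annotator interface and a sequential pipeline. It uses only plain Java objects. All names in it (Key, TypedMap, TextKey, TokensKey, TokenCountKey, WhitespaceTokenizer, TokenCounter, Pipeline, PipelineSketch) are hypothetical and chosen for illustration; only the name Annotator is taken from the text, and the sketch is not the toolkit's actual API or implementation.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical marker interface: each key class names one slot and fixes its value type T. */
interface Key<T> {}

/** Illustrative keys; the real toolkit defines its own annotation keys. */
final class TextKey implements Key<String> {}
final class TokensKey implements Key<List<String>> {}
final class TokenCountKey implements Key<Integer> {}

/** Sketch of a typesafe heterogeneous map: values of different types, checked at compile time. */
final class TypedMap {
    private final Map<Class<?>, Object> values = new HashMap<>();

    /** The key class determines T, so a value of the wrong type does not compile. */
    <T> void set(Class<? extends Key<T>> key, T value) {
        values.put(key, value);
    }

    /** The cast is safe because set() only ever stores a T under a Key<T> class. */
    @SuppressWarnings("unchecked")
    <T> T get(Class<? extends Key<T>> key) {
        return (T) values.get(key);
    }
}

/** Uniform interface: an annotator reads from the annotation and adds information to it. */
interface Annotator {
    void annotate(TypedMap annotation);
}

/** Toy tokenizer (an assumption for illustration): splits the text on whitespace. */
final class WhitespaceTokenizer implements Annotator {
    @Override
    public void annotate(TypedMap annotation) {
        String text = annotation.get(TextKey.class).trim();
        List<String> tokens = text.isEmpty()
                ? new ArrayList<String>()
                : Arrays.asList(text.split("\\s+"));
        annotation.set(TokensKey.class, tokens);
    }
}

/** Toy downstream annotator: depends on the tokens added by an earlier stage. */
final class TokenCounter implements Annotator {
    @Override
    public void annotate(TypedMap annotation) {
        List<String> tokens = annotation.get(TokensKey.class);
        annotation.set(TokenCountKey.class, Integer.valueOf(tokens.size()));
    }
}

/** Runs annotators in order over a single annotation object created from raw text. */
final class Pipeline {
    private final List<Annotator> annotators;

    Pipeline(List<Annotator> annotators) {
        this.annotators = annotators;
    }

    TypedMap run(String text) {
        TypedMap annotation = new TypedMap();
        annotation.set(TextKey.class, text);
        for (Annotator annotator : annotators) {
            annotator.annotate(annotation);
        }
        return annotation;
    }
}

final class PipelineSketch {
    public static void main(String[] args) {
        List<Annotator> stages = new ArrayList<>();
        stages.add(new WhitespaceTokenizer());
        stages.add(new TokenCounter());
        TypedMap result = new Pipeline(stages).run("Raw text is put into an Annotation object");
        System.out.println(result.get(TokensKey.class));
        System.out.println(result.get(TokenCountKey.class));
    }
}
```

In this sketch each key class fixes the type of the value stored under it, so an annotator reading TokensKey obtains a List<String> without a cast at the call site, and components with different internals are hidden behind the single annotate method.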