SSIM = 1

1

1

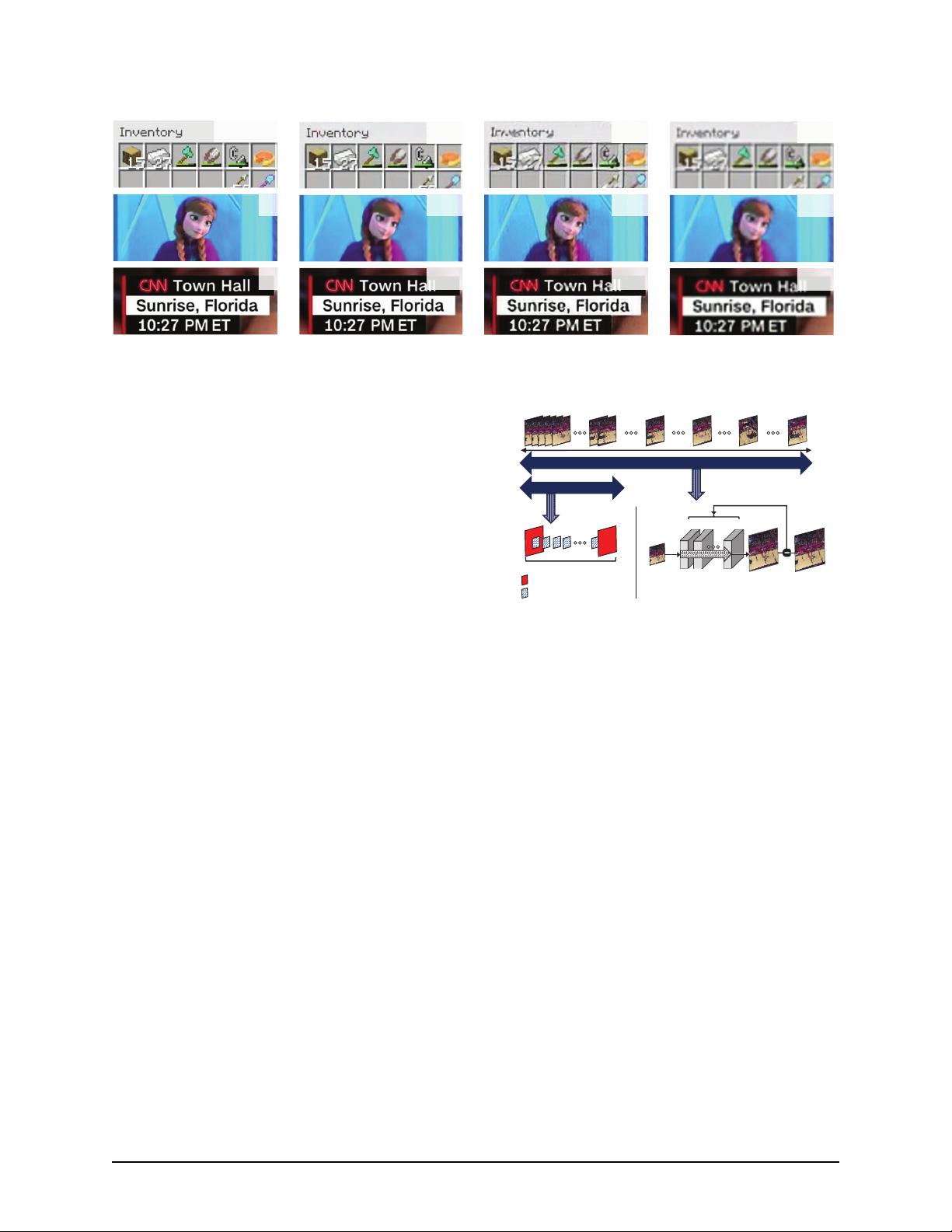

(a) Original (1080p)

0.94

0.93

0.91

(b) Content-aware DNN

0.88

0.84

0.89

(c) Content-agnostic DNN

(d) 240p

Figure 2: 240p to 1080p super-resolution results

(Content type – 1st row: Game [15], 2nd row: Entertainment [14], 3rd row: News [16])

processing used at Facebook. ExCamera [31] uses massive parallelism to enable interactive and collaborative editing. These systems focus on solving distributed-systems problems within a datacenter without changing the clients, whereas we focus on the division of work between the servers and clients.

Studies on the video control plane [32, 41, 44, 51] identify spatial and temporal diversity in CDN performance and advocate for an Internet-scale control plane that coordinates client behaviors to collectively optimize user QoE. Although they control client behaviors, they do not utilize client computation to directly enhance video quality.

4 Key Design Choices

Achieving our goal requires redesigning major components of video delivery. This section describes the key design choices we make to overcome practical challenges.

4.1 Content-aware DNN

Key challenge.

Developing a universal DNN model that works well across all Internet video is impractical because the number of video episodes is almost infinite. A single DNN of finite capacity may, in principle, not be expressive enough to capture all of them. Note that a fundamental trade-off exists between generalization and specialization for any machine learning approach (i.e., as the model coverage becomes larger, its performance degrades), which is referred to as the ‘no free lunch’ theorem [75]. Even worse, given any existing DNN model, one can generate ‘adversarial’ new videos of arbitrarily low quality [38, 58], making the service vulnerable to quality-reduction attacks.

NAS’ content-aware model.

To tackle the challenge, we

consider a content-aware DNN model in which we use a

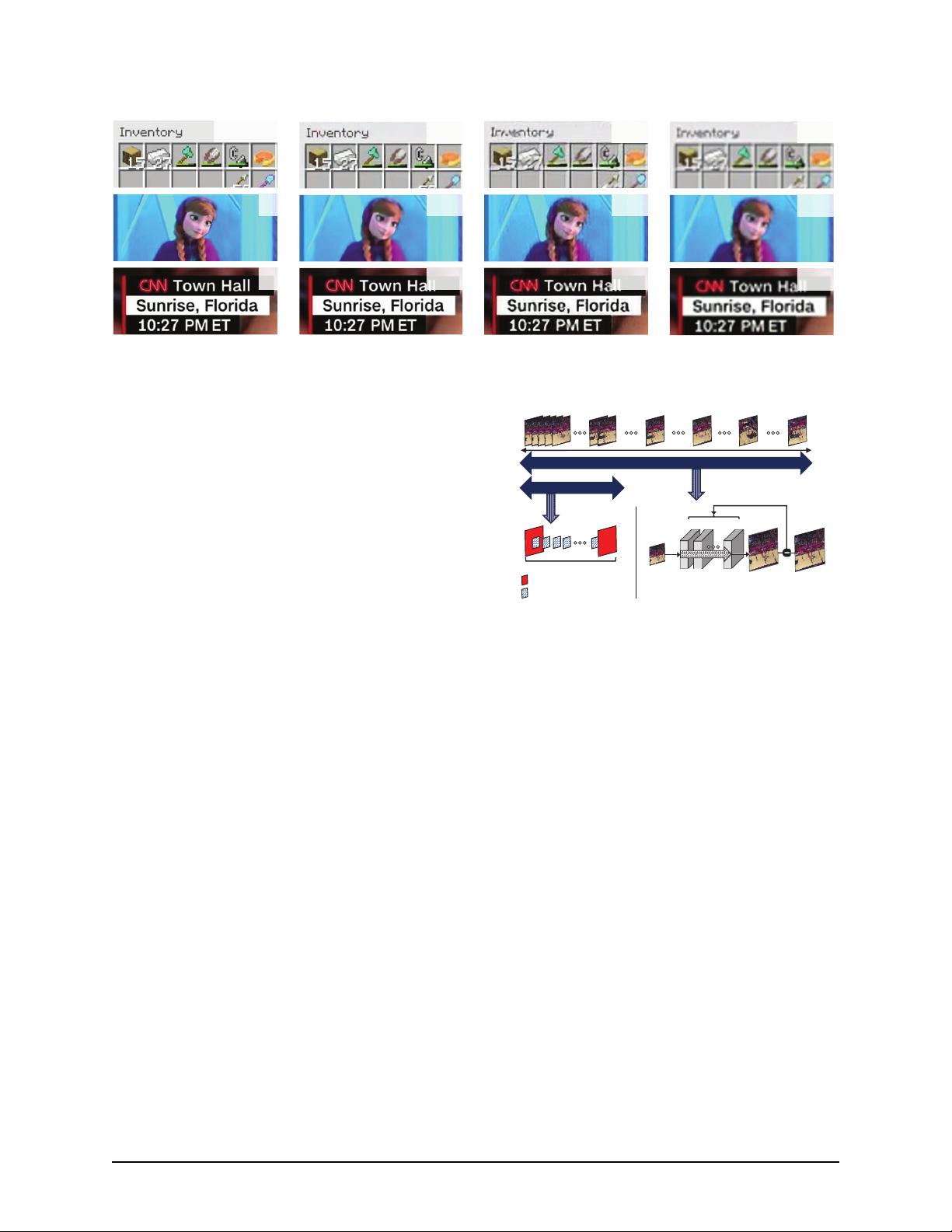

Start

End

Large timescale redundancy

Short timescales

: Intra-frame coding

: Inter-frame coding

Group of Pictures (GOP)

H.26x, VPx

Input

Output

Update

Target

Recover high-quality redundancy

(e.g., Super-resolution)

DNN

Content-aware DNN

Figure 3: Content-aware DNN based video encoding

different DNN for each video episode. This is attractive

because DNNs typically achieve near-zero training error,

but the testing error is often much higher (i.e., over-fitting

occurs) [67]. Although the deep learning community

has made extensive efforts to reduce the gap [40, 67],

relying on the DNN’s testing accuracy may result in un-

predictable performance [38, 58].

NAS

exploits DNN’s

inherent overfitting property to guarantee reliable and

superior performance.
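The overfitting argument can be made concrete with a deliberately tiny, hypothetical stand-in for super-resolution (this is not the NAS model). A 1-D signal is downsampled by 2, and each missing sample is predicted as `w0*left + w1*right`. A "universal" model fits the two weights once on unrelated benchmark content, while a content-aware model fits them by least squares on the very episode it will later enhance. Because the content-aware weights minimize error on that episode by construction, within this two-weight model class they can never do worse there than the universal weights or plain averaging (up to floating-point rounding). All function names and signals below are illustrative assumptions.

```python
import math


def downsample(signal):
    """Keep even-indexed samples: a 2x 'low-resolution' version (odd-length input)."""
    return signal[::2]


def upsample(low, w):
    """Copy low-res samples; predict each missing sample as w0*left + w1*right."""
    w0, w1 = w
    out = []
    for i in range(len(low) - 1):
        out.append(low[i])
        out.append(w0 * low[i] + w1 * low[i + 1])
    out.append(low[-1])
    return out


def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def fit_weights(signal):
    """Least-squares fit of (w0, w1) on one signal's own samples.

    Solves the 2x2 normal equations for y ~ w0*a + w1*b, where a and b are
    the even-indexed neighbours of each odd-indexed sample y.
    """
    a = signal[0:-1:2]  # left neighbours: signal[0], signal[2], ...
    b = signal[2::2]    # right neighbours: signal[2], signal[4], ...
    y = signal[1::2]    # the samples that downsampling throws away
    saa = sum(p * p for p in a)
    sbb = sum(q * q for q in b)
    sab = sum(p * q for p, q in zip(a, b))
    say = sum(p * t for p, t in zip(a, y))
    sby = sum(q * t for q, t in zip(b, y))
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        return (0.5, 0.5)  # degenerate data: fall back to plain averaging
    # Cramer's rule for [[saa, sab], [sab, sbb]] @ [w0, w1] = [say, sby]
    return ((say * sbb - sab * sby) / det, (saa * sby - sab * say) / det)


# Hypothetical "episode" with detail the smooth benchmark content lacks.
episode = [math.sin(1.3 * t) + 0.5 * math.sin(2.9 * t) for t in range(41)]
benchmark = [math.sin(0.2 * t) for t in range(41)]

low = downsample(episode)
universal = fit_weights(benchmark)  # trained once, on other content
aware = fit_weights(episode)        # trained on this episode only
print("universal:", mse(upsample(low, universal), episode))
print("content-aware:", mse(upsample(low, aware), episode))
```

The guarantee here comes from evaluating on the training data itself, which is exactly the situation a per-episode model is in; a real super-resolution DNN is non-convex, so in practice the guarantee is empirical rather than a closed-form optimum as in this toy.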

Figure 2 shows the super-resolution results of our content-aware DNN and of a content-agnostic DNN trained on standard benchmark images (the NTIRE 2017 dataset [19]). We feed 240p images (d) to the super-resolution DNNs to produce outputs (b) and (c). The images are snapshots of YouTube video clips. The generic, universal model fails to achieve consistently high quality across a variety of content; in certain cases, the quality degrades after processing. In §5.1, we show how to design a content-aware DNN for adaptive streaming.
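Figure 2 scores each output by SSIM, where a frame compared with itself scores 1. As a hedged reference for that metric, the sketch below evaluates the SSIM formula of Wang et al. over a single global window, using the commonly cited constants K1 = 0.01 and K2 = 0.03 and population variances. Standard SSIM instead averages this statistic over local sliding windows, so this simplified version will not reproduce the numbers in the figure. The name `ssim_global` and the flat pixel-list input are illustrative assumptions.

```python
def ssim_global(x, y, data_range=255.0):
    """SSIM of two equal-length pixel sequences, computed over one global window."""
    if not x or len(x) != len(y):
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    vx = sum((a - mx) * (a - mx) for a in x) / n
    vy = sum((b - my) * (b - my) for b in y) / n
    cxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    luminance = (2 * mx * my + c1) / (mx * mx + my * my + c1)
    contrast_structure = (2 * cxy + c2) / (vx + vy + c2)
    return luminance * contrast_structure


frame = [20, 50, 90, 130, 170, 210, 240, 30]
noisy = [v + (15 if i % 2 else -15) for i, v in enumerate(frame)]
print(ssim_global(frame, frame))  # identical inputs: 1 up to rounding
print(ssim_global(frame, noisy))  # any distortion lowers the score
```

Both factors are at most 1, and their product equals 1 only when the two inputs are identical, which is why the original frames in Figure 2 are labeled SSIM = 1.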

The content-aware approach can be seen as a type of video compression, as illustrated in Figure 3. The content-aware DNN captures redundancy that occurs at large timescales (e.g., across multiple GOPs) and operates over the entire video. In contrast, conventional codecs deal with re-

648 13th USENIX Symposium on Operating Systems Design and Implementation USENIX Association