IEEE ROBOTICS AND AUTOMATION LETTERS. PREPRINT VERSION. ACCEPTED JANUARY, 2020

A Lightweight and Accurate Localization Algorithm Using Multiple Inertial Measurement Units

Ming Zhang, Xiangyu Xu, Yiming Chen, and Mingyang Li

Abstract—This paper proposes a novel inertial-aided localization approach that fuses information from multiple inertial measurement units (IMUs) and exteroceptive sensors. An IMU is a low-cost motion sensor that measures the angular velocity and gravity-compensated linear acceleration of a moving platform, and it is widely used in modern localization systems. To date, most existing inertial-aided localization methods exploit only a single IMU. While single-IMU localization yields acceptable accuracy and robustness for different use cases, the overall performance can be further improved by using multiple IMUs. To this end, we propose a lightweight and accurate algorithm for fusing measurements from multiple IMUs and exteroceptive sensors, which obtains a noticeable performance gain without incurring additional computational cost. To achieve this, we first probabilistically map the measurements from all IMUs onto a virtual IMU. This step is performed by stochastic estimation with least-squares estimators and probabilistic marginalization of the inter-IMU rotational accelerations. Subsequently, we derive the propagation models for both the state and the error state of the virtual IMU, which enables the use of classical filter-based or optimization-based sensor fusion algorithms for localization. Finally, results from both simulation and real-world tests are provided, demonstrating that the proposed algorithm outperforms competing algorithms by noticeable margins.

Index Terms—Sensor Fusion; Localization; SLAM.
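The following Python/NumPy sketch makes the idea of mapping several IMUs onto one virtual IMU more concrete. It fuses a single time sample from N rigidly mounted IMUs. It is an illustrative stand-in, not the authors' algorithm.

It rests on these assumptions:
- the extrinsics (rotations `R` and positions `r`) are known;
- every IMU has identical isotropic noise;
- the virtual IMU sits at the body-frame origin;
- the uncertainty of the fused angular velocity is ignored when forming the centripetal term.

Gravity, whether or not it has been removed from the readings, is the same at every mounting point, so it cancels out of the lever-arm relation. The body angular acceleration is estimated jointly with the virtual specific force and then marginalized out through a Schur complement. The names `skew`, `fuse_to_virtual_imu`, and `sigma_a` are hypothetical. The paper's own estimator and noise model may differ.

```python
import numpy as np


def skew(v):
    """Cross-product matrix: skew(v) @ u equals np.cross(v, u)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])


def fuse_to_virtual_imu(gyro, accel, R, r, sigma_a=1.0):
    """Map one time sample from N rigidly mounted IMUs onto a virtual IMU.

    gyro, accel : (N, 3) readings, each expressed in its own IMU frame.
    R           : (N, 3, 3) rotations from each IMU frame to the body frame.
    r           : (N, 3) IMU positions in the body frame (virtual IMU at origin).
    sigma_a     : hypothetical isotropic accelerometer noise std, same for all IMUs.
    Returns (omega_v, f_v, cov_f_v).
    """
    # Express every reading in the common body frame.
    w_b = np.einsum("nij,nj->ni", R, gyro)
    f_b = np.einsum("nij,nj->ni", R, accel)

    # A rigid body has one angular velocity; with equal isotropic noise
    # its least-squares estimate is the plain mean.
    omega_v = w_b.mean(axis=0)

    # Lever-arm model: f_i = f_v + alpha x r_i + omega x (omega x r_i).
    # Move the centripetal term to the left-hand side and stack
    #   f_i - omega x (omega x r_i) = [I, -skew(r_i)] @ [f_v; alpha].
    n = len(r)
    A = np.zeros((3 * n, 6))
    b = np.zeros(3 * n)
    for i in range(n):
        A[3 * i:3 * i + 3, :3] = np.eye(3)
        A[3 * i:3 * i + 3, 3:] = -skew(r[i])
        b[3 * i:3 * i + 3] = f_b[i] - np.cross(omega_v, np.cross(omega_v, r[i]))

    # Joint least-squares estimate of [f_v; alpha].
    x, *_ = np.linalg.lstsq(A, b, rcond=None)

    # Marginalize the angular acceleration alpha via the Schur complement
    # of the information matrix; the result is the covariance of f_v alone.
    info = A.T @ A / sigma_a**2
    H_ff, H_fa, H_aa = info[:3, :3], info[:3, 3:], info[3:, 3:]
    cov_f_v = np.linalg.inv(H_ff - H_fa @ np.linalg.solve(H_aa, H_fa.T))
    return omega_v, x[:3], cov_f_v


# Noise-free synthetic check with four non-collinear IMUs (hypothetical layout).
r = np.array([[0.02, 0.0, 0.0], [-0.02, 0.0, 0.0],
              [0.0, 0.03, 0.0], [0.0, 0.0, 0.01]])
Rz90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
R = np.stack([np.eye(3), Rz90, np.eye(3), Rz90])
omega = np.array([0.3, -0.2, 1.0])
alpha = np.array([0.5, 0.1, -0.4])
f_true = np.array([0.1, 0.2, 9.81])
f_i = f_true + np.cross(alpha, r) + np.cross(omega, np.cross(omega, r))
gyro = np.einsum("nji,j->ni", R, omega)   # R_i^T @ omega for each IMU
accel = np.einsum("nji,nj->ni", R, f_i)   # R_i^T @ f_i for each IMU
omega_v, f_v, cov_f_v = fuse_to_virtual_imu(gyro, accel, R, r, sigma_a=0.01)
```

With noise-free synthetic data, the fused values reproduce the true angular velocity and specific force. `cov_f_v` could serve as the noise of the virtual accelerometer in a downstream filter. Because of the lever-arm term, at least three non-collinear IMUs are needed for the angular acceleration, and hence the marginalization, to be well posed.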

I. INTRODUCTION

In recent years, commercial products that exploit inertial measurement units (IMUs) have developed rapidly. This popular motion sensor can be found in robotics, personal electronic devices, wearable devices, and so on [1]. On the one hand, mature MEMS manufacturing processes have significantly reduced the size, price, and power consumption of IMU hardware. On the other hand, significant progress has also been made in algorithm and software design for IMUs, ranging from sensor characterization and calibration [2]–[5] to measurement integration [6]–[8] and sensor fusion [9]–[13].

In this work, we focus on the inertial-aided localization,

which is to estimate the 6D poses (3D position and 3D

orientation) of a moving platform. Since localization with

only IMU inevitably suffers from pose drift, measurements

from other sensors (i.e. aiding), e.g., RGB cameras, depth

Manuscript received: September, 10, 2019; Revised December, 17, 2019;

Accepted January, 13, 2020.

This paper was recommended for publication by Editor Eric Marchand upon

evaluation of the Associate Editor and Reviewers’ comments.

The authors are with Alibaba Group, Hangzhou,

China. {mingzhang, xiangyuxu, yimingchen,

mingyangli}@alibaba-inc.com.

Digital Object Identifier (DOI): see top of this page.

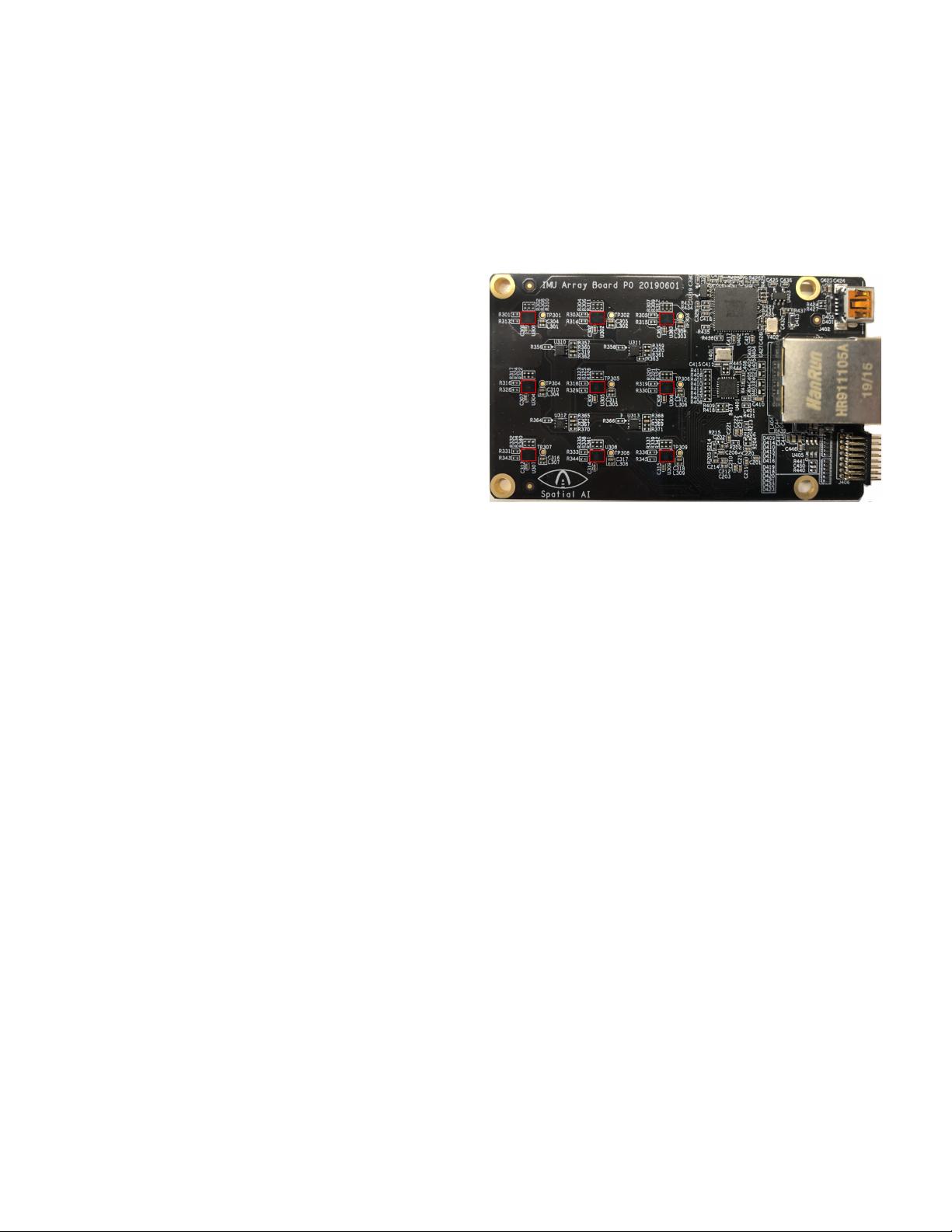

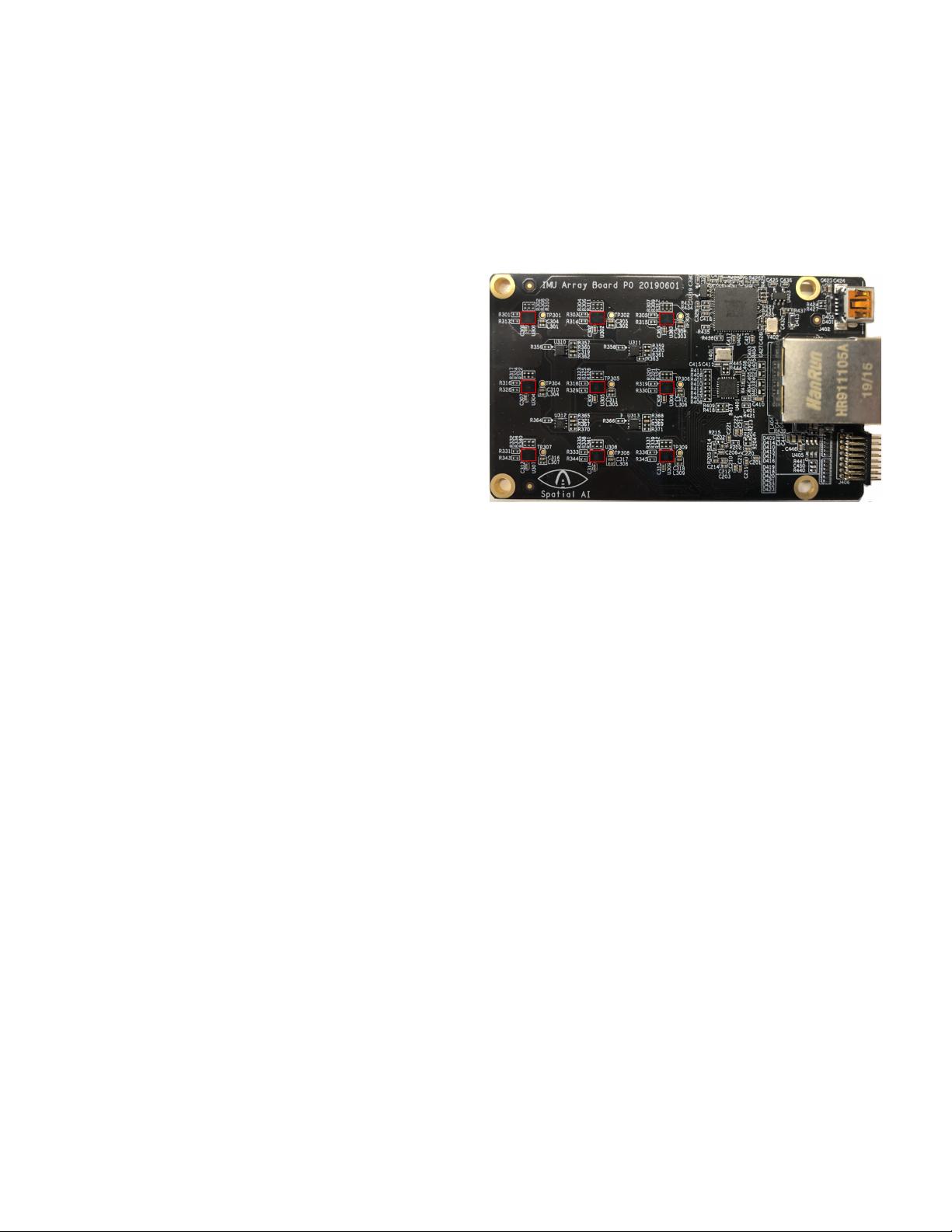

Fig. 1. The IMU array board used in this work, which contains nine ST

LSM6DSOX IMUs marked by red rectangles and a processor interface to

connect cameras. The IMUs are synchronized by an embedded processor.

cameras, or LiDARs (Light Detection And Ranging sensors),

are typically used in combination with IMUs to provide long-

term performance guarantees [12], [14], [15]. To perform

accurate pose estimation, the majority of existing works use

measurements from IMU for pose prediction, which is fol-

lowed by probabilistic refinement using measurements from

other sensors [7]–[12].
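The predict-then-refine pattern described above begins by integrating IMU readings. The sketch below shows only a generic prediction step in Python/NumPy, using first-order strapdown integration. It is not the paper's virtual-IMU propagation model, and the refinement step with exteroceptive measurements is omitted.

It assumes:
- a z-up world frame with gravity of 9.81 m/s²;
- an accelerometer that reports specific force in the body frame;
- no bias or noise states.

The names `so3_exp`, `predict`, and `GRAVITY` are hypothetical.

```python
import numpy as np

GRAVITY = np.array([0.0, 0.0, -9.81])  # assumed world-frame gravity (z-up)


def so3_exp(phi):
    """Rodrigues' formula: map a rotation vector phi (rad) to a rotation matrix."""
    theta = np.linalg.norm(phi)
    K = np.array([[0.0, -phi[2], phi[1]],
                  [phi[2], 0.0, -phi[0]],
                  [-phi[1], phi[0], 0.0]])
    if theta < 1e-9:
        return np.eye(3) + K  # first-order approximation for tiny angles
    return (np.eye(3) + np.sin(theta) / theta * K
            + (1.0 - np.cos(theta)) / theta**2 * K @ K)


def predict(R, v, p, gyro, accel, dt):
    """One Euler step of IMU pose prediction (no bias or noise terms).

    R: body-to-world rotation; v, p: world-frame velocity and position.
    gyro: body angular velocity (rad/s); accel: body specific force (m/s^2).
    """
    a_world = R @ accel + GRAVITY  # specific force -> world acceleration
    p_next = p + v * dt + 0.5 * a_world * dt**2
    v_next = v + a_world * dt
    R_next = R @ so3_exp(gyro * dt)
    return R_next, v_next, p_next


# A stationary IMU spinning about its vertical axis at 0.5 rad/s for 2 s.
R, v, p = np.eye(3), np.zeros(3), np.zeros(3)
for _ in range(200):
    R, v, p = predict(R, v, p, np.array([0.0, 0.0, 0.5]),
                      np.array([0.0, 0.0, 9.81]), 0.01)
```

In this example only the heading changes (by 1 rad), while position and velocity stay at zero. In a real system, the drift of such open-loop prediction is what the exteroceptive measurements are used to correct.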

To date, most algorithms for inertial-aided localization are designed around a single IMU [7]–[13]. Although these algorithms have been successfully deployed in different applications, using additional IMUs creates new possibilities for further improving system accuracy and robustness. Compared to other popular localization sensors (e.g., cameras or LiDARs), IMUs, especially off-the-shelf MEMS ones, cost only about one hundredth or one thousandth as much, and they are also smaller and consume less power. In addition, as a reliable proprioceptive sensor, an IMU imposes fewer restrictions on operating environments and hardware configurations. In contrast, stereo cameras, for example, require a sufficient spatial baseline to achieve a performance gain [16], [17], which might not be feasible in many applications, including mobile devices.

Most existing methods that use multiple IMUs focus on processing IMU measurements alone or on integration with global navigation satellite systems (GNSS) [10], [18], [19]. Fusing measurements from multiple IMUs with exteroceptive sensors for localization is a less-explored topic. To the best of the authors’ knowledge, the only recent work in this domain is [20], which proposed an approach for vision-aided inertial navigation using measurements from multiple IMUs. However, the proposed algorithm is of significantly increased

arXiv:1909.04869v2 [cs.RO] 17 Jan 2020