
User Studies

User studies typically measure user satisfaction through explicit ratings. Participants receive recommendations generated by different recommendation approaches and rate them, and the approach with the highest average rating is considered most effective [264]. Study participants are typically asked to quantify their overall satisfaction with the recommendations. However, they may also be asked to rate individual aspects of a recommender system, for instance, how novel or authoritative the recommendations are [117], or how suitable they are for non-experts [72]. A user study can also collect qualitative feedback, but qualitative feedback is rarely used in the field of (research-paper) recommender systems [156], [159].
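The aggregation step described above (collect explicit ratings, average them per approach, pick the highest) can be sketched as follows. This is a minimal illustration, assuming ratings arrive as (approach, rating) pairs on a numeric scale such as 1 to 5; the function name `rank_approaches` and the example data are hypothetical and not taken from any reviewed study.

```python
from collections import defaultdict
from statistics import mean


def rank_approaches(ratings):
    """Return (approach, mean rating) pairs sorted from highest to lowest mean.

    `ratings` is an iterable of (approach, rating) tuples, e.g. as collected
    from a hypothetical user-study questionnaire on a 1-5 scale.
    """
    by_approach = defaultdict(list)
    for approach, rating in ratings:
        by_approach[approach].append(rating)
    # Average the explicit ratings each approach received.
    averages = {approach: mean(values) for approach, values in by_approach.items()}
    # Highest average first; the top entry would be deemed "most effective".
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


# Hypothetical ratings for two approaches.
ratings = [
    ("content-based", 4), ("content-based", 3), ("content-based", 5),
    ("collaborative", 3), ("collaborative", 2), ("collaborative", 4),
]
print(rank_approaches(ratings))
```

Comparing plain means ignores how many ratings each approach received and how much they vary, which is one reason the number of participants matters for whether such a ranking is meaningful.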

We distinguish between “lab” and “real-world” user studies. In lab studies, participants are aware that they are part of a user study, which, together with other factors, might affect user behavior and thereby the evaluation's results [267], [268]. In real-world studies, participants are not aware of the study and rate recommendations for their own benefit, for instance because the recommender system improves recommendations based on user ratings (i.e., relevance feedback [263]), or because user ratings are required to generate recommendations (i.e., collaborative filtering [222]). All reviewed user studies were lab-based.

User studies are often considered the optimal evaluation method [269]. However, their outcome often depends on the questions asked. Cremonesi et al. found that it makes a difference whether users are asked for the “perceived relevance” or the “global satisfaction” of recommendations [270]. Similarly, it made a difference whether users were asked to rate the novelty or the relevance of recommendations [271]. A large number of participants is also crucial to a user study's validity, which makes user studies relatively expensive to conduct. The number of participants required to obtain statistically significant results depends on the number of approaches being evaluated, the number of recommendations being displayed, and the variation in the results [272], [273]. As a rough estimate, at least a few dozen participants are required, often more.
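To illustrate how the required number of participants grows with the number of approaches and with the variation in ratings, the sketch below uses a standard normal-approximation power calculation for comparing two mean ratings: n = 2((z₁₋α/2 + z_power)/d)², where d is the expected difference in mean rating divided by the rating standard deviation. This is an assumption-laden textbook formula, not the method of [272] or [273]; the Bonferroni correction over pairwise comparisons, the default α = 0.05 and power = 0.8, and the function name `participants_per_group` are all hypothetical choices, and the number of displayed recommendations is not modeled.

```python
import math
from statistics import NormalDist


def participants_per_group(effect_size, alpha=0.05, power=0.8, num_approaches=2):
    """Approximate participants needed per approach (between-subjects design).

    effect_size: standardized difference d = (difference in mean rating) / (rating std. dev.).
    Uses the normal approximation n = 2 * ((z_{1-a/2} + z_{power}) / d) ** 2 with a
    Bonferroni-adjusted alpha over all pairwise comparisons among the approaches.
    """
    comparisons = max(1, num_approaches * (num_approaches - 1) // 2)
    adjusted_alpha = alpha / comparisons
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - adjusted_alpha / 2)  # two-sided critical value
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


print(participants_per_group(0.5))                    # two approaches, medium effect
print(participants_per_group(0.5, num_approaches=3))  # more approaches -> more participants
print(participants_per_group(0.2))                    # noisier ratings / smaller effect -> more participants
```

Under these assumptions, detecting a medium effect (d = 0.5) between two approaches needs roughly 63 participants per approach, which is consistent with the rough estimate of at least a few dozen participants.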

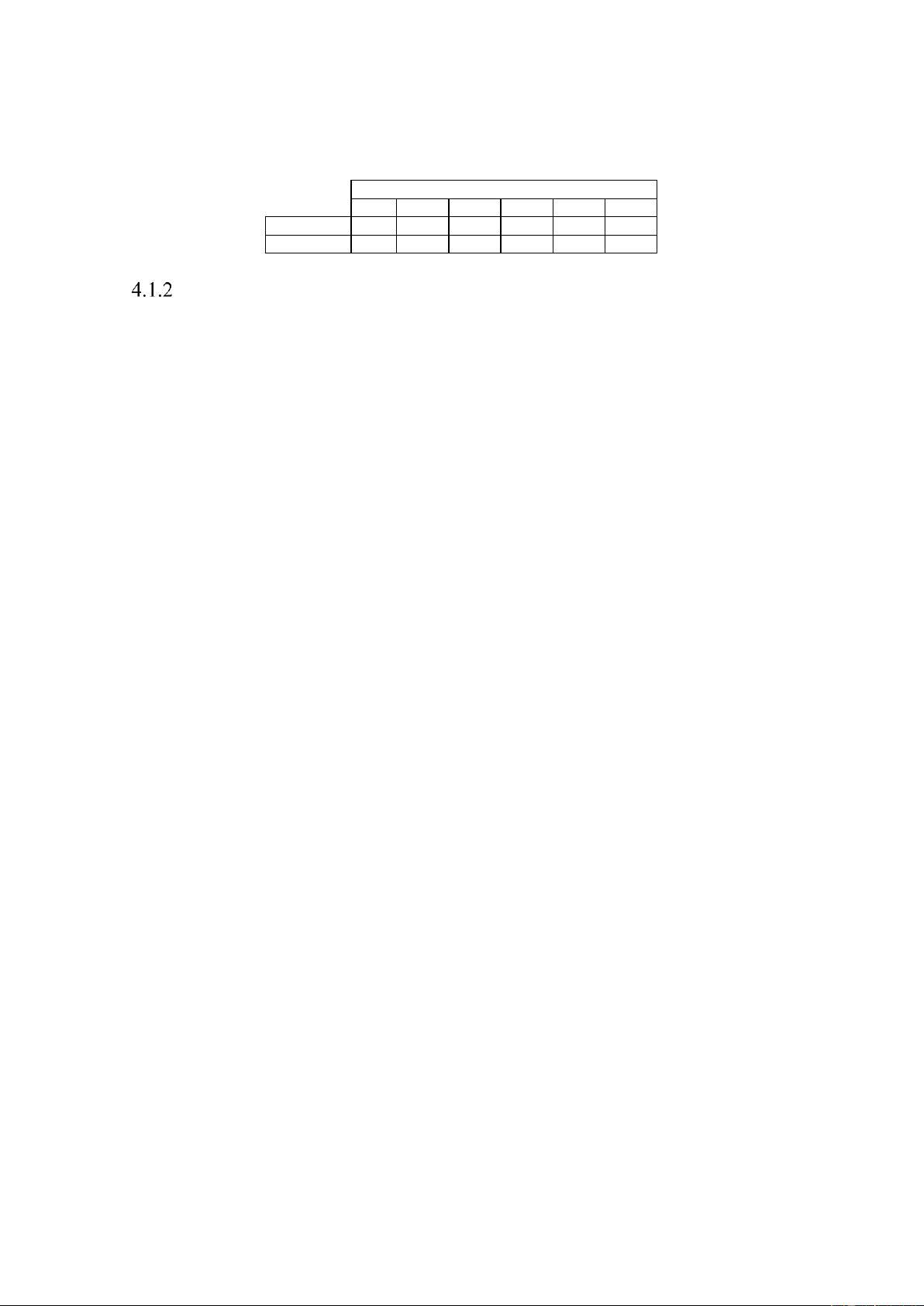

Most participants rated only a few recommendations. Four studies (15%) were conducted with fewer than five participants [62], [123], [171]; five studies (19%) had five to ten participants [66], [84], [101]; three studies (12%) had 11–15 participants [15], [146], [185]; and five studies (19%) had 16–50 participants [44], [118], [121]. Six studies (23%) were conducted with more than 50 participants [93], [98], [117]. Three studies (12%) failed to mention the number of participants [55], [61], [149] (Table 4). Given these findings, we conclude that most user studies were too small to yield meaningful conclusions.
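The percentages above can be reproduced from the reported study counts. The sketch below assumes the six buckets cover all reviewed user studies (26 in total, a sum inferred from the counts rather than stated explicitly) and rounds each share to the nearest whole percent; the bucket labels and the function name `shares` are illustrative only.

```python
# Reviewed user studies per participant-count bucket, as reported in the text.
study_counts = {
    "fewer than 5": 4,
    "5-10": 5,
    "11-15": 3,
    "16-50": 5,
    "more than 50": 6,
    "not reported": 3,
}


def shares(counts):
    """Return each bucket's share of all studies, rounded to whole percent."""
    total = sum(counts.values())
    return {bucket: round(100 * n / total) for bucket, n in counts.items()}


print(sum(study_counts.values()))  # total number of studies
print(shares(study_counts))

# Studies with at most 50 participants (excluding those that did not report a count).
small = sum(study_counts[b] for b in ("fewer than 5", "5-10", "11-15", "16-50"))
print(small, round(100 * small / sum(study_counts.values())))
```

Under this assumption, 17 of the 26 studies (about 65%) had 50 or fewer participants, which underlies the conclusion that most user studies were too small.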