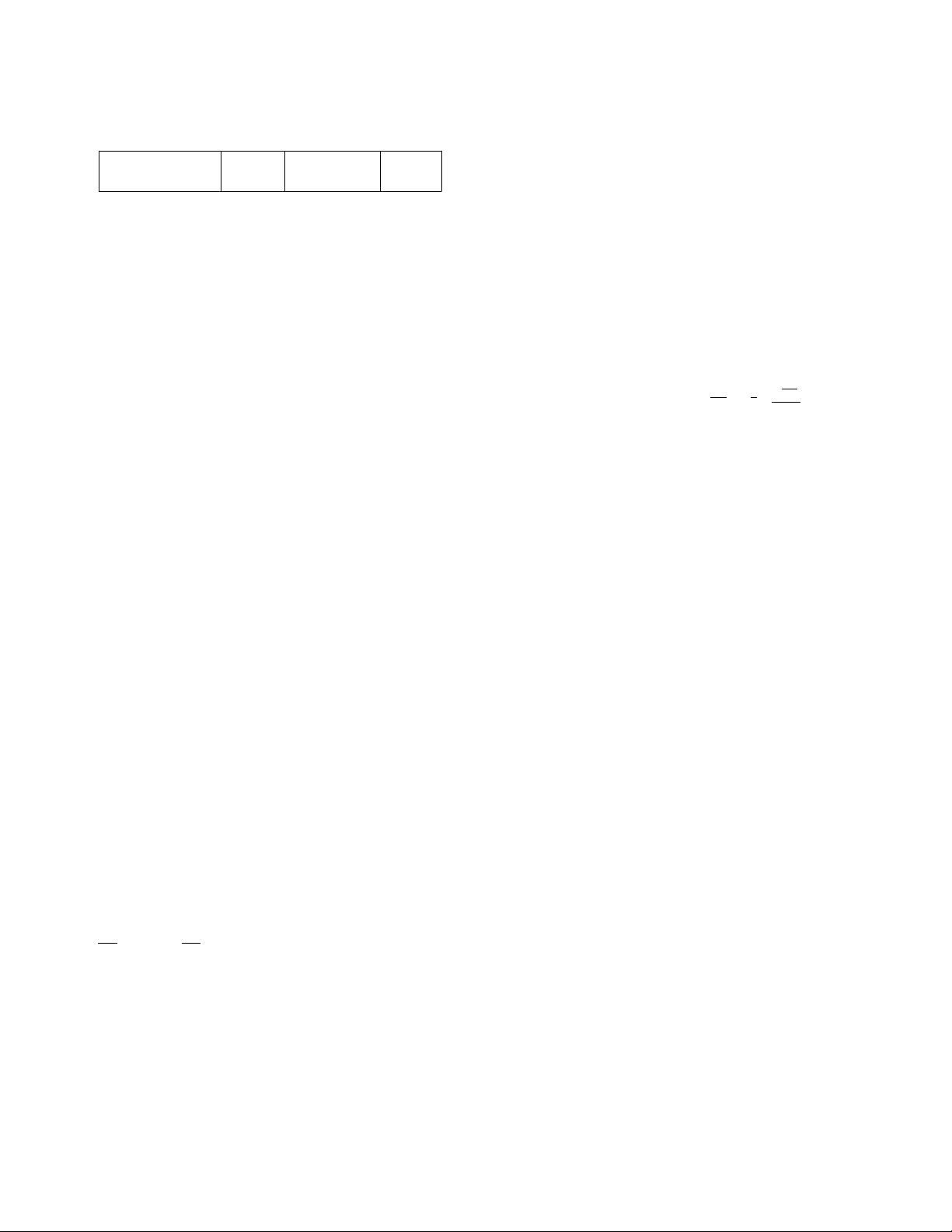

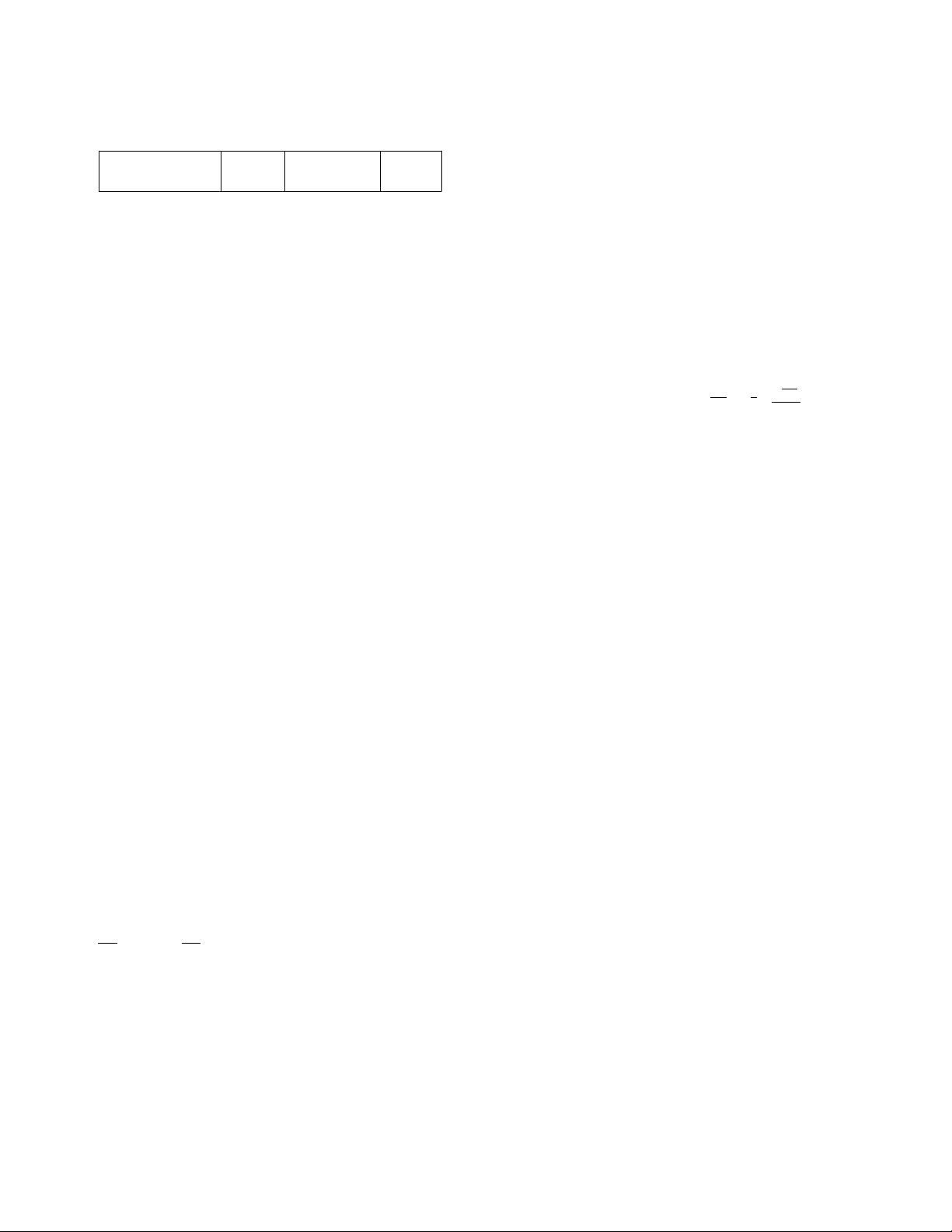

Leveling Lazy-Leveling Tiering

application query O(L) O(L · T ) O(L · T )

application update O(L · T ) O(L + T ) O(L)

Table 1: Blocked Bloom lters’ memor y I/O complexities.

the buer (for a delete, the value is a tombstone). Whenever runs

that contain entries with the same key are merged, older versions

are discarded and only the newest version is kept. To always nd

the most recent version of an entry, a query traverses the runs from

youngest to oldest (from smaller to larger levels, and from lower

to higher sub-levels within a level). It terminates when it nds the

rst entry with a matching key. If this entry’s value is a tombstone,

the query returns a negative result.

Fence Pointers.

For each run, there are fence pointers in memory

that comprise the min/max key at every data block. They allow

queries to binary search for the relevant data block that contains

a given key in

≈ log(N )

memory I/Os so that this block can be

retrieved cheaply with one storage I/O.

Bloom Filters.

For each run in the LSM-tree, there is an in-memory

Bloom lter (BF), a space-ecient probabilistic data structure used

to test whether a key is a member of a set [

11

]. A BF is an array of

bits with

h

hash functions. Each key is mapped using these hash

functions to

h

random bits, setting them from 0 to 1 or keeping them

set to 1. Checking for the existence of a key requires examining its

h

bits. If any are set to 0, we have a negative. If all are set to 1, we

have either a true or a false positive. The false positive probability

(FPP) is 2

−M ·ln(2)

, where

M

is the number of bits per entry. As we

increase

M

, the probability of hash collisions decreases and so the

FPP drops. A BF does not support range reads or deletes. The lack

of delete support means that a new BF has to be built from scratch

for every new run as a result of compaction.

A BF entails

h

memory I/Os for an insertion or for a query to an

existing key. For a query to a non-existing key, it entails on average

two memory I/Os since

≈

50% of the bits are set to zero and so the

expected number of bits checked before incurring a zero is two.

Blocked Bloom Filters.

To optimize memory I/Os, Blocked Bloom

lter has been proposed as an array of contiguous BFs, each the size

of a cache line [

66

,

84

]. A key is inserted by rst hashing it to one of

the constituent BFs and then inserting the key into it. This entails

only one memory I/O for any insertion or query. The trade-o is

a slight FPP increase. RocksDB has adopted blocked BFs. We use

standard and blocked BFs as baselines in this paper, and we focus

more on blocked BFs as they are the tougher competition.

Memory I/O Analysis.

With blocked BFs, the overall cost of a

point query is

(L −

1

) · K + Z

memory I/Os, one for each sub-

level of the LSM-tree. The cost of an insertion/update/delete, on

the other hand, is the same as the LSM-tree’s write-amplication:

≈

T −1

K +1

· (L −

1

) +

T −1

Z +1

with Dostoevsky. The reason is that every

compaction that an entry participates in leads to one BF insertion,

which costs one memory I/O. Table 1 summarizes these costs for

each of the merge policies. It shows that query cost over the BFs can

be signicant, especially with tiering and lazy leveling. Moreover,

the BF’s query and construction costs both increase with respect

to the number of levels

L

and thus with the data size. Finally, there

is an inverse relationship between the BFs’ query and construction

costs: the greedier we set the LSM-tree’s merge policy to be (i.e., by

ne-tuning the the parameters

T

,

K

and

Z

), query cost decreases as

there are fewer BFs while construction cost increases as the BFs get

rebuilt more frequently. Can we better scale these costs with respect

to the data size while also alleviating their read/write contention?

False Positive Rate Analysis.

We dene the false positive rate

(FPR) as the sum of FPPs across all lters. The FPR expresses the

average number of I/Os due to false positives that occur per point

query over the whole LSM-tree. Equation 2 expresses the FPR for

most KV-stores, which assign the same number of bits per entry

to all their BFs. This approach, however, was recently deemed sub-

optimal. The optimal approach is to reassign

≈

1 bit per entry from

the largest level and to use it to assign linearly more bits per entry

to lters at smaller levels [

25

,

26

]. While this increases the largest

level’s FPP, it exponentially decreases the FPPs at smaller levels

such that the overall FPR is lower, as expressed in Equation 3 [

28

].

F P R

uni f or m

= 2

−M ·ln(2)

·

(

K · (L − 1) + Z

)

(2)

F P R

opt imal

= 2

−M ·ln(2)

· Z

T −1

T

· K

1

T

·

T

T

T −1

T − 1

(3)

Equation 3 states that with the optimal approach, the relationship

between memory and FPR is independent of the number of levels

and thus of data size, unlike Equation 2. The reason is that as the

LSM-tree grows, smaller levels are assigned exponentially lower

FPRs thus causing the sum of FPRs to converge. It is imperative

that any replacement we devise for the LSM-tree’s Bloom lters

either matches or improves on the FPR expressed in Equation 3.

3 PROMISE: LSM-TREE & CUCKOO FILTER

Cuckoo lter [

39

] (CF) is one of several data structures [

9

,

15

,

44

,

81

,

96

] that recently emerged as alternatives to Bloom lters. In

their core, these structures all employ a compact hash table that

stores ngerprints of keys, where a ngerprint is a string of

F

bits

derived by hashing a key. CF comprises an array of buckets, each

with

S

slots for ngerprints. During insertion, an entry with key

k

is hashed to two bucket addresses

b

1

and

b

2

using Equation 4. A

ngerprint of key

k

is inserted into whichever bucket has space. If

both buckets are full, however, some ngerprint from one of the two

buckets is randomly chosen and swapped to its alternative bucket

to clear space. By virtue of using the xor operator, the right-hand

side of Equation 4 allows to always compute an entry’s alternative

bucket using the ngerprint and current bucket address without

the original key. The swapping process continues recursively either

until a free slot is found for all ngerprints or until a swapping

threshold is reached, at which point the insertion fails.

b

1

= hash(k) b

2

= b

1

⊕ hash(k

′

s f inдer print) (4)

We employ a CF with

S

set to four slots per bucket through the

paper. Such a tuning can reach 95% space utilization with high

probability without incurring insertion failures and with only 1-2

amortized swaps per insertion. The false positive rate is

≈

2

·S ·

2

−F

,

where

F

is the ngerprint size in bits. Querying entails at most two

memory I/Os as each entry is in one of two buckets.

Promise.

Cuckoo lter supports storing updatable auxiliary data

for each entry alongside its ngerprint. We propose to replace

the LSM-tree’s multiple Bloom lters with a CF that maps each

data entry not only to a ngerprint but also to a Level ID (LID),

mapping to the sub-level that contains the entry. The promise is to

allow nding the run that contains a given entry with at most two

memory I/Os, far more cheaply than with blocked Bloom lters.

3