FlyNeRF: NeRF-Based Aerial Mapping for High-Quality 3D Scene Reconstruction

Maria Dronova, Vladislav Cheremnykh, Alexey Kotcov, Aleksey Fedoseev, and Dzmitry Tsetserukou

Abstract— Current methods for 3D reconstruction and environmental mapping frequently struggle to achieve high precision, highlighting the need for practical and effective solutions. In response to this issue, our study introduces FlyNeRF, a system integrating Neural Radiance Fields (NeRF) with drone-based data acquisition for high-quality 3D reconstruction. An unmanned aerial vehicle (UAV) captures images and the corresponding spatial coordinates, and the obtained data is subsequently used for the initial NeRF-based 3D reconstruction of the environment. The render quality of the reconstruction is then evaluated by an image evaluation neural network developed within the scope of our system. Based on the results of the image evaluation module, an autonomous algorithm determines the positions for additional image capture, thereby improving the reconstruction quality. The neural network introduced for render quality assessment demonstrates an accuracy of 97%. Furthermore, our adaptive methodology enhances the overall reconstruction quality, resulting in an average improvement of 2.5 dB in Peak Signal-to-Noise Ratio (PSNR) for the 10% quantile. FlyNeRF demonstrates promising results, offering advancements in fields such as environmental monitoring, surveillance, and digital twins, where high-fidelity 3D reconstructions are crucial.

I. INTRODUCTION

Autonomous navigation remains a significant challenge in the development of agents designed to operate in unknown environments such as cluttered rooms and facilities. Recently, Neural Radiance Field (NeRF) technology has found many applications in robotics, one of the most promising being the reconstruction of 3D scenes for further use in navigation, mapping, and path planning. However, despite its capabilities, the quality of NeRF renderings depends on the quality of the input images, especially when the robot captures them at high speed, under challenging conditions, or from an unfavorable angle. Low-quality renders degrade the path planning and localization of the autonomous agent and lead to poor construction of the 3D map.

In the topic of exploration of unseen environments previ-

ous works have mainly focused on optimization of reward

policies [1], [2] to maximize the survey area, finding a spe-

cific group of targets [3], [4], recognizing by images or lan-

guage [5], [6], [7]. In our work, the main focus is assessing

render quality and improving it with an autonomous agent

using data from our neural network. Through this process, we

obtain information about camera poses where image quality

is below a certain threshold. This information is later used

The authors are with the Intelligent Space Robotics Laboratory, Skolkovo

Institute of Science and Technology, Bolshoy Boulevard 30, bld. 1, 121205,

Moscow, Russia

email: (maria.dronova, vladislav.cheremnykh, alexey.kotcov,

aleksey.fedoseev, d.tsetserukou)@skoltech.ru

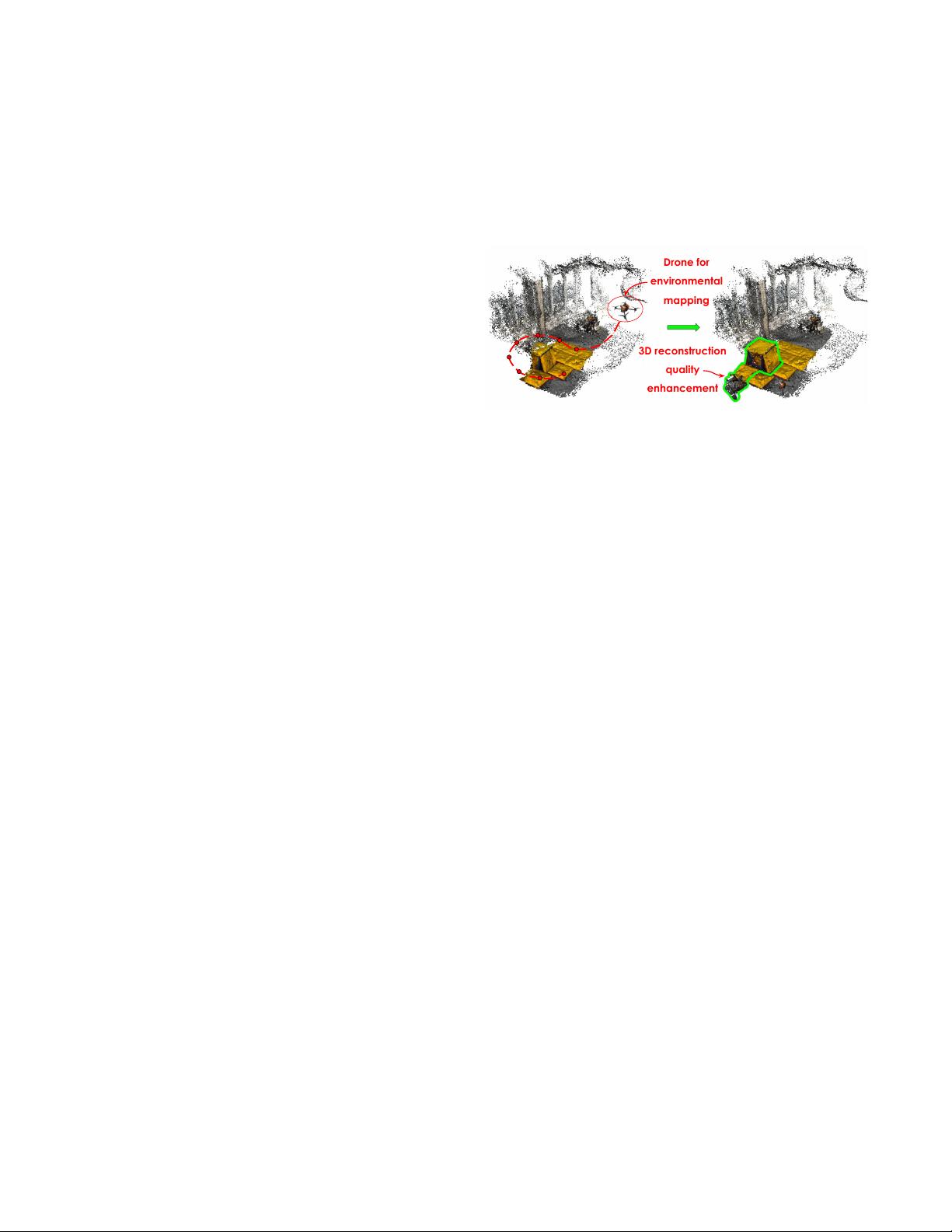

3D reconstruction

quality

enhancement

Drone for

environmental

mapping

Fig. 1: FlyNeRF system during the mission. The dashed red

line with dots represents the trajectory executed by the drone

and positions for additional image capture. The green area

signifies the improvement in the quality of the reconstruction.

by the agent to provide new images. By iteratively refining

the dataset with novel images, our proposed framework aims

to improve the overall quality of the NeRF renderings and

the accuracy of the associated 3D reconstructions.

In summary, we present FlyNeRF (Fig. 1), a novel system that uses a UAV to explore an unseen scene and to collect and refine data for training a NeRF model. Our method uses a neural network-based quality assessment approach to identify images of poor quality based on the Structural Similarity Index (SSIM) and Peak Signal-to-Noise Ratio (PSNR) metrics. These identified images, which indicate poor camera poses or environmental conditions, are then used to generate the list of positions and orientations for additional image capture during the subsequent drone flight.
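To make the selection step concrete, the following is a minimal sketch of how low-quality views could be turned into a list of poses to revisit. It is not the authors' implementation: it replaces the neural quality-assessment network with a direct PSNR computation against a reference image, and the `View` container, the `(x, y, z, yaw)` pose layout, the flattened pixel lists, and the 20 dB threshold are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Pose = Tuple[float, float, float, float]  # hypothetical (x, y, z, yaw) layout


@dataclass
class View:
    """One evaluated view: a camera pose plus render and reference pixels."""
    pose: Pose
    render: Sequence[float]     # flattened pixel values in [0, 1]
    reference: Sequence[float]  # reference pixels, same length as render


def psnr(render: Sequence[float], reference: Sequence[float],
         max_value: float = 1.0) -> float:
    """Peak Signal-to-Noise Ratio in dB; infinite for identical images."""
    if len(render) != len(reference) or len(render) == 0:
        raise ValueError("images must be non-empty and of equal size")
    mse = sum((a - b) ** 2 for a, b in zip(render, reference)) / len(render)
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


def poses_to_recapture(views: List[View],
                       threshold_db: float = 20.0) -> List[Pose]:
    """Return poses whose PSNR is below the threshold, worst view first.

    The 20 dB default is an illustrative assumption, not a value
    taken from the paper.
    """
    scored = [(psnr(v.render, v.reference), v.pose) for v in views]
    poor = [(score, pose) for score, pose in scored if score < threshold_db]
    poor.sort(key=lambda item: item[0])  # lowest PSNR first
    return [pose for _, pose in poor]
```

In this sketch, the returned list plays the role of the positions and orientations scheduled for the next flight. Sorting by score is a design choice that lets a flight with a limited budget visit the worst views first.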

II. RELATED WORKS

Neural Radiance Fields. Implicit neural representations, particularly NeRF, pioneered by Mildenhall et al. [8], offer a novel approach to generating complex 3D scenes. By using neural networks to encode the spatial layout of a scene, NeRF represents a significant advancement and introduces a differentiable volume rendering loss. This innovation enables precise control over 3D scene reconstruction from 2D observations and achieves remarkable performance in novel view synthesis tasks. Despite the compelling properties and results demonstrated by vanilla NeRF [8], the training process can be time-consuming. For instance, training on a simple scene typically takes approximately a day. This is due to the nature of volume rendering, which requires a large number of sample points to render an image.
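The cost of sampling can be seen in a minimal sketch of the standard volume rendering quadrature for a single ray, in which every sample contributes a color weighted by its opacity and by the transmittance accumulated in front of it. The function name `render_ray` and the plain-list inputs are assumptions for illustration. In a real NeRF the densities and colors come from a neural network queried once per sample, so the cost of one image grows with the number of pixels times the number of samples per ray.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def render_ray(densities: Sequence[float],
               colors: Sequence[RGB],
               deltas: Sequence[float]) -> RGB:
    """Composite samples along one ray, ordered from near to far.

    densities: volume density (sigma) at each sample
    colors:    RGB color at each sample
    deltas:    distance between consecutive samples
    """
    if not (len(densities) == len(colors) == len(deltas)):
        raise ValueError("all inputs must have one entry per sample")
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return (pixel[0], pixel[1], pixel[2])
```

For example, a ray that passes through empty space and then hits a very dense red sample returns pure red, because the first sample has zero opacity and the second absorbs all remaining light.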

Subsequent research efforts have focused on improving the efficiency of the training and inference processes [9], [10], [11], as well as overcoming the challenges of training from

arXiv:2404.12970v1 [cs.RO] 19 Apr 2024