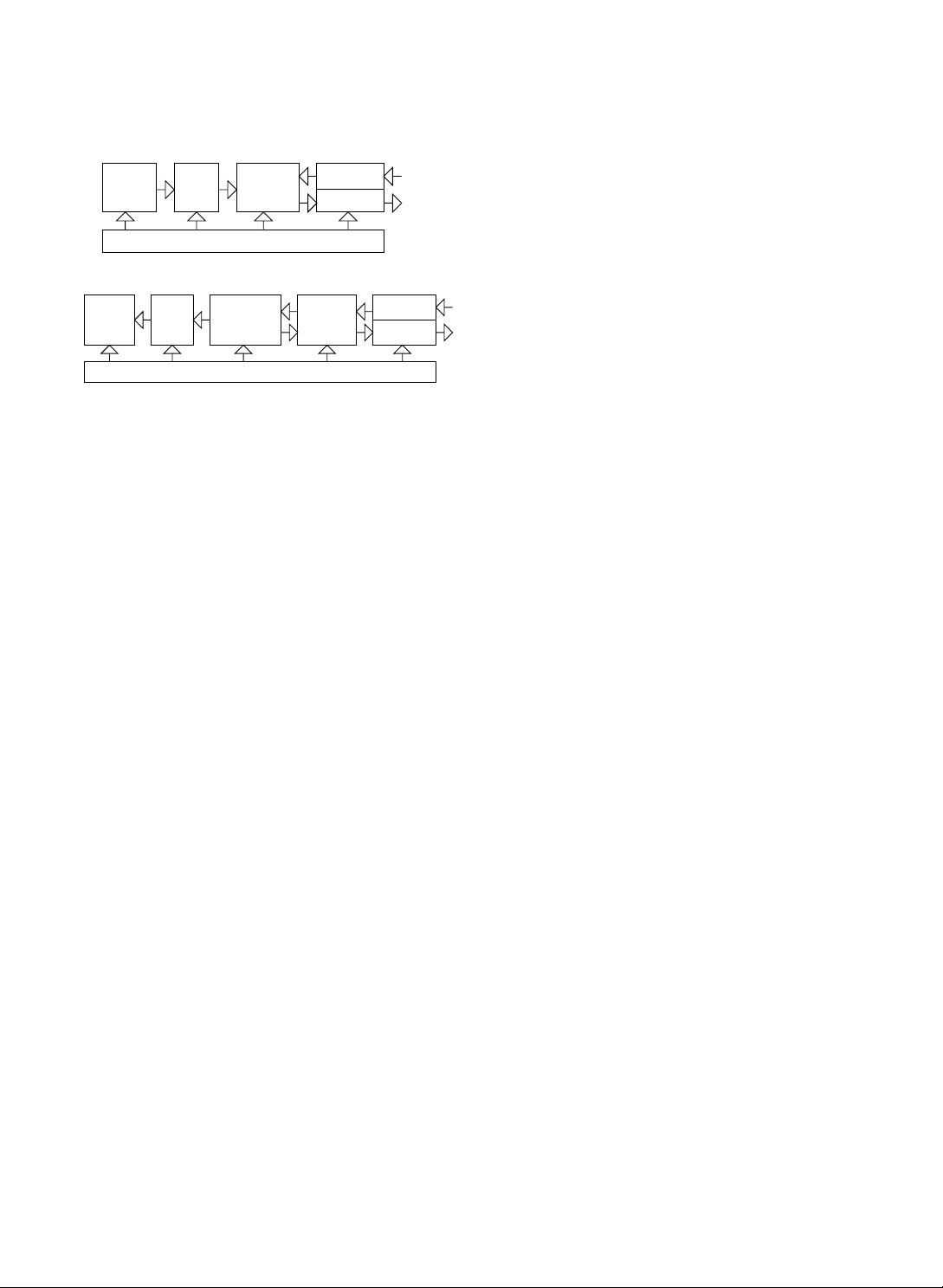

and (b), respectively. Sensor nodes are equipped with a power unit, communication subsystems (receiver and transmitter), storage and processing resources, an Analog to Digital Converter (ADC), and a sensing unit, as shown in Fig. 3(a). The sensing unit observes phenomena such as thermal, optic, or acoustic events. The collected analog data are converted to digital data by the ADC, analyzed by a processor, and then transmitted to nearby actors.
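The sensor-side data path described above (sensing unit, ADC, processor, transmitter) can be sketched as follows. This is a minimal illustration, not the design of any particular node: the 10-bit resolution, the 3.3 V reference, the averaging step, and the names `adc_convert`, `process`, `sense_and_report`, and `Packet` are all assumptions introduced here.

```python
from dataclasses import dataclass


def adc_convert(voltage: float, v_ref: float = 3.3, bits: int = 10) -> int:
    """Quantize an analog voltage into an integer code (ideal ADC, assumed parameters)."""
    clamped = min(max(voltage, 0.0), v_ref)   # inputs outside 0..v_ref saturate
    levels = (1 << bits) - 1                  # highest code, e.g. 1023 for 10 bits
    return round(clamped / v_ref * levels)


@dataclass
class Packet:
    """Hypothetical message a sensor node sends to a nearby actor."""
    node_id: int
    value: float


def process(codes: list[int], v_ref: float = 3.3, bits: int = 10) -> float:
    """Assumed processing step: average the digital codes and scale back to volts."""
    levels = (1 << bits) - 1
    return sum(codes) / len(codes) * v_ref / levels


def sense_and_report(node_id: int, analog_samples: list[float]) -> Packet:
    """Sensing unit -> ADC -> processor -> packet handed to the transmitter."""
    codes = [adc_convert(v) for v in analog_samples]
    return Packet(node_id, process(codes))
```

A real node would replace the averaging step with whatever analysis the application requires; the point is only the order of the stages.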

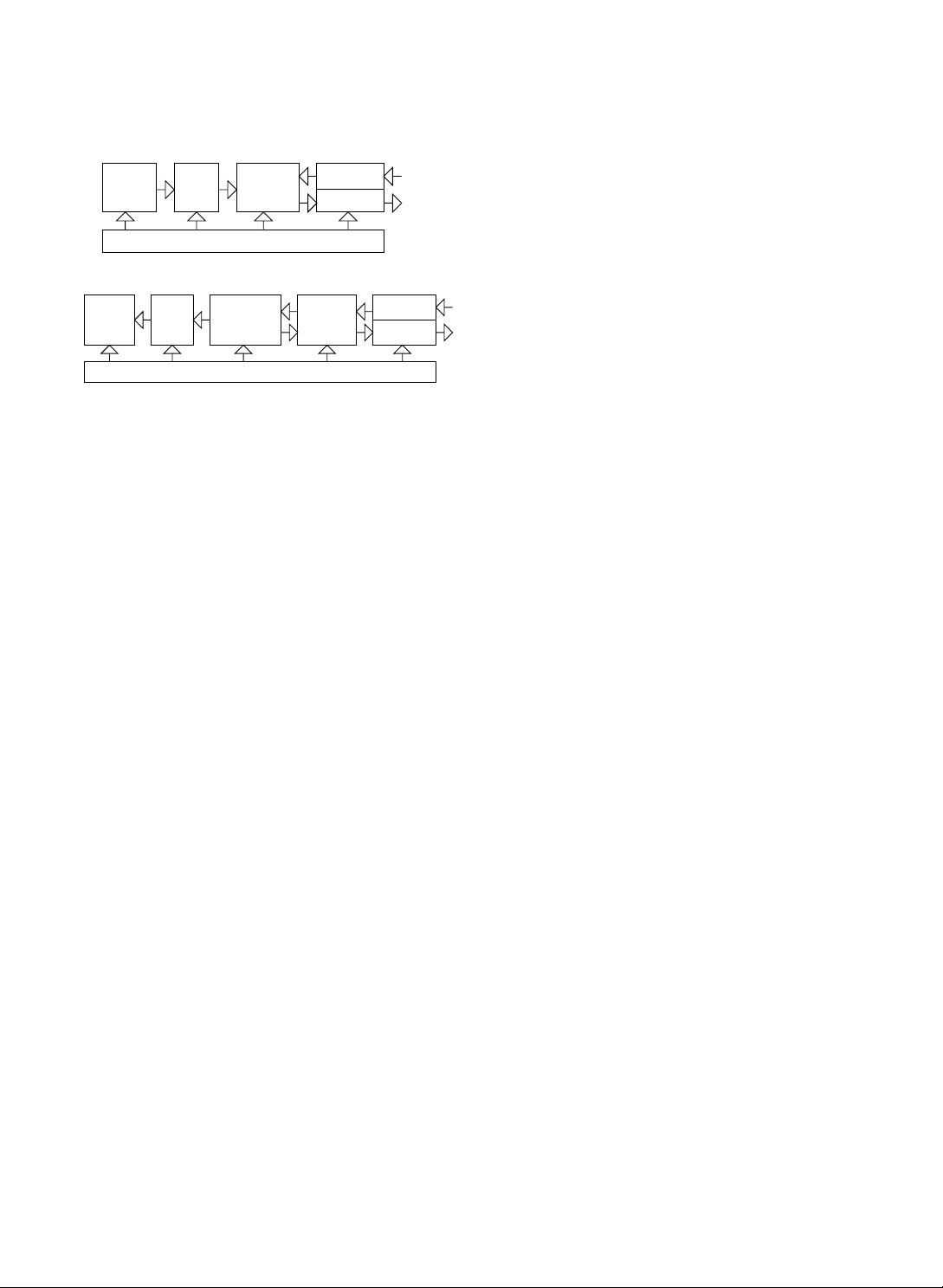

The decision unit (controller) functions as an entity that takes sensor readings as input and generates action commands as output. These action commands are then converted to analog signals by the Digital to Analog Converter (DAC) and are transformed into actions via the actuation unit, as shown in Fig. 3(b).
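The actor-side path (decision unit, DAC, actuation unit) can be sketched in the same spirit. The threshold rule, the 8-bit DAC, the 5 V reference, and the function names `decide`, `dac_convert`, and `actuate` are hypothetical choices made for illustration; the text does not prescribe a particular control law.

```python
def decide(readings: list[float], threshold: float = 50.0) -> int:
    """Hypothetical controller: map sensor readings to a digital command in 0..255.

    The command grows with the amount by which the mean reading exceeds the threshold.
    """
    mean = sum(readings) / len(readings)
    excess = max(mean - threshold, 0.0)
    return min(int(excess * 10), 255)


def dac_convert(code: int, v_ref: float = 5.0, bits: int = 8) -> float:
    """Convert a digital command into an analog drive voltage (ideal DAC, assumed parameters)."""
    levels = (1 << bits) - 1
    code = min(max(code, 0), levels)   # clamp to the valid code range
    return code / levels * v_ref


def actuate(voltage: float) -> str:
    """Stand-in for the actuation unit: report what the actuator would do."""
    return f"actuator driven at {voltage:.2f} V" if voltage > 0 else "idle"


def actor_step(readings: list[float]) -> str:
    """Decision unit -> DAC -> actuation unit."""
    return actuate(dac_convert(decide(readings)))
```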

In some applications, integrated sensor/actor nodes may replace actor nodes. Since an integrated sensor/actor node is capable of both sensing and acting, it has a sensing unit and an ADC in addition to all components of an actor node shown in Fig. 3(b).

One example of an integrated sensor/actor node is a robot. However, a single robot may not have sufficient sensing capability to sense the entire event area. Hence, in order to initiate more reliable actions, robots (integrated sensor/actor nodes) should act based on their own sensor readings as well as on the data of other nearby sensor nodes in the network. In other words, sensors transmit their readings to the nearby robots, which process all sensor readings including their own sensor data. In this way, robots can collaborate with sensor nodes, which gives them reliable knowledge about the overall event. Then, the decision unit makes appropriate decisions and the actuation unit performs actions as in an actor node.
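As a rough sketch of this collaboration, a robot might combine its own reading with those received from nearby sensors before deciding whether to act. Plain averaging and a fixed threshold are assumptions made here for illustration, as are the names `fuse_readings` and `robot_step`; the text does not specify how the readings are combined.

```python
from statistics import mean


def fuse_readings(own: float, neighbor_readings: dict[int, float]) -> float:
    """Combine the robot's own reading with readings keyed by sensor node id.

    Simple averaging is an assumed fusion rule, chosen only to keep the sketch short.
    """
    return mean([own, *neighbor_readings.values()])


def robot_step(own: float, neighbor_readings: dict[int, float],
               threshold: float = 50.0) -> str:
    """Decide on the fused estimate rather than on the robot's local view alone."""
    estimate = fuse_readings(own, neighbor_readings)
    return "act" if estimate >= threshold else "wait"
```

With a local reading of 40.0 the robot alone would stay idle, but if two nearby sensors report 60.0 and 80.0 the fused estimate reaches the assumed threshold and the robot acts.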

The use of integrated sensor/actor nodes or actor nodes does not influence the overall architecture of WSANs. However, in most real applications, integrated sensor/actor nodes, especially robots, are used instead of actor nodes.

The robots designed by several Robotics Research Laboratories are shown in Fig. 4(a)–(d). The low-flying helicopter platform shown in Fig. 4(a) provides ground mapping and air-to-ground cooperation of autonomous robotic vehicles [24].

However, it is likely that in the near future several more actuation functionalities, such as water sprinkling or disposing of a gas, can be supported by this helicopter platform, which will make WSANs much more efficient than today. An example of a Robotic Mule, an autonomous battlefield robot designed for the Army, is given in Fig. 4(b). There are several autonomous battlefield robot projects sponsored by the Space and Naval Warfare Systems Command [9] and the Defense Advanced Research Projects Agency (DARPA) [8]. These battlefield robots can detect and mark mines, carry weapons, function as tanks, or perhaps in the future totally replace soldiers on the battlefield. Moreover, the SKITs shown in Fig. 4(c) are networked tele-robots with a radio turret that enables communication over UHF frequencies at 4800 kbits/sec [22]. These robots can coordinate with each other by exploiting their wireless communication capabilities and perform the tasks determined by the application. Finally, possibly the world's smallest autonomous untethered robot (1/4 cubic inch and weighing less than an ounce), being developed at Sandia National Laboratories [20], is given in Fig. 4(d). Although it is not yet capable of performing the difficult tasks done by much larger robots, it is very likely that it will be the robot of the future. A sensor node and a sink are given in Fig. 5. MICA is an open-source hardware and software platform that combines sensing, communications, and comput-

[Fig. 3 block diagrams. (a) Sensor: Power Unit, Sensing Unit, ADC, Processor & Storage, Receiver, Transmitter. (b) Actor: Power Unit, Receiver, Transmitter, Processor & Storage, Controller (Decision Unit), DAC, Actuation Unit.]

Fig. 3. The components of (a) sensors and (b) actors.

354 I.F. Akyildiz, I.H. Kasimoglu / Ad Hoc Networks 2 (2004) 351–367