DORIGO AND GAMBARDELLA: ANT COLONY SYSTEM 55

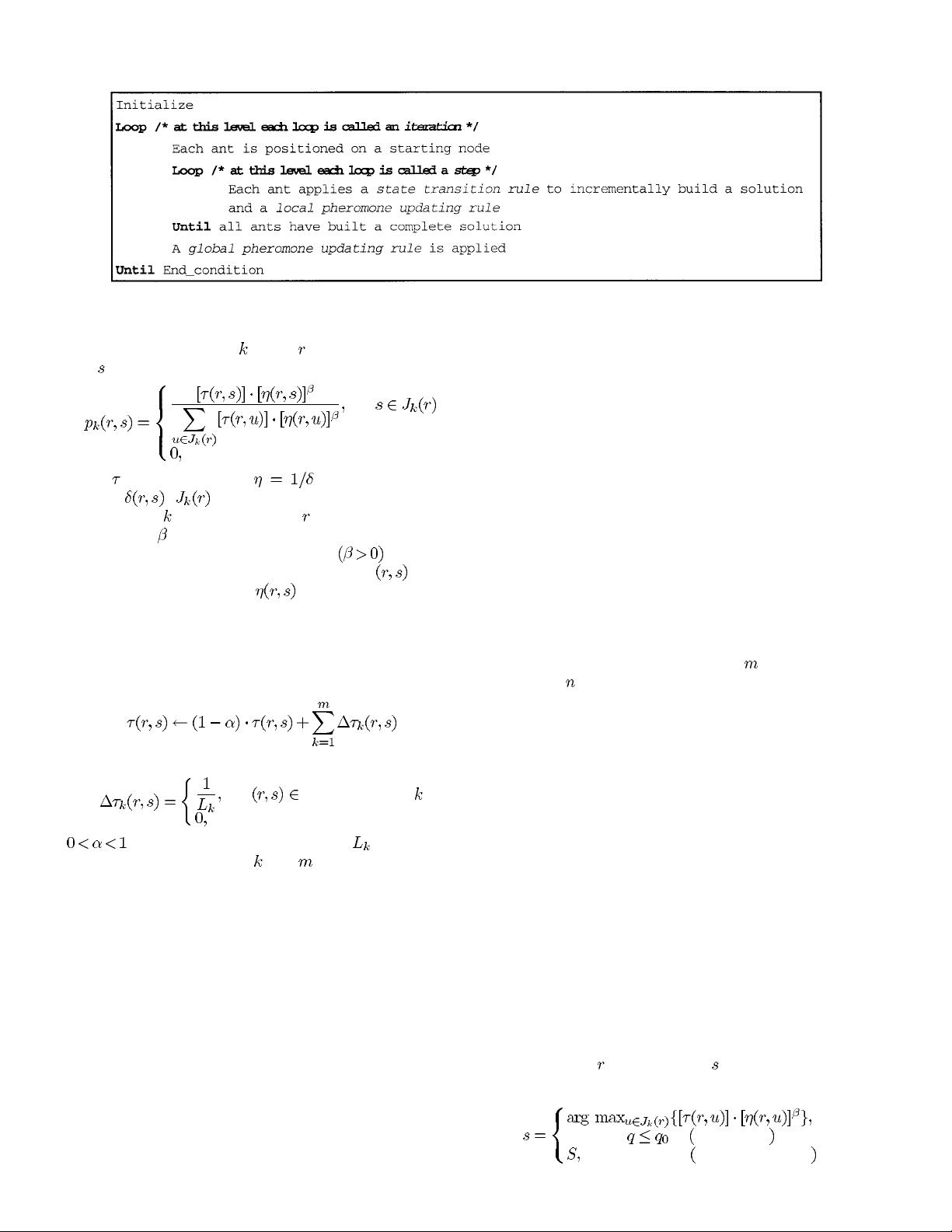

Fig. 3. The ACS algorithm.

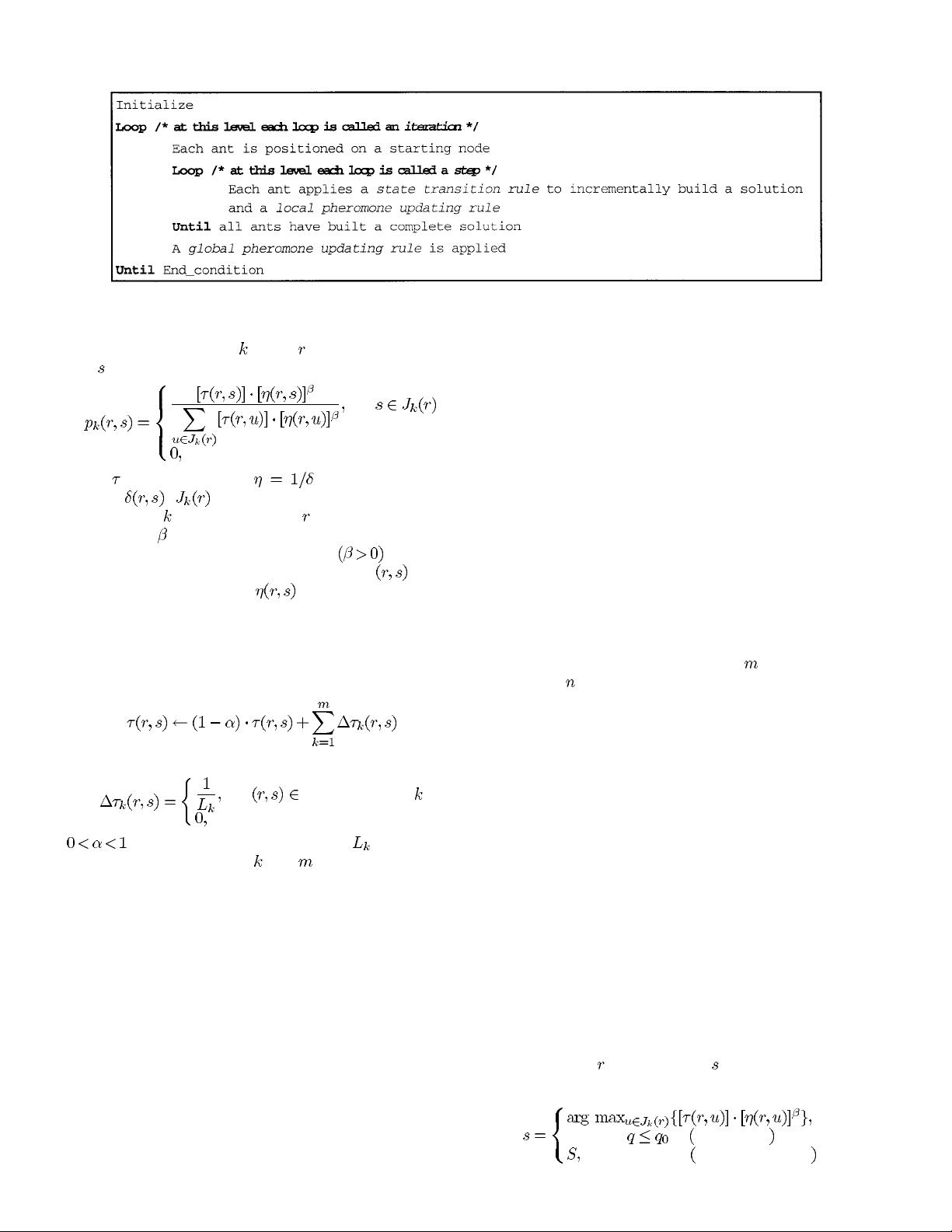

probability with which ant in city chooses to move to the

city

if

otherwise

(1)

where

is the pheromone, is the inverse of the

distance

, is the set of cities that remain to be

visited by ant

positioned on city (to make the solution

feasible), and

is a parameter which determines the relative

importance of pheromone versus distance

.

In (1) we multiply the pheromone on edge

by the

corresponding heuristic value

. In this way we favor the

choice of edges which are shorter and which have a greater

amount of pheromone.

In ant system, the global updating rule is implemented as

follows. Once all ants have built their tours, pheromone is

updated on all edges according to

(2)

where

if tour done by ant

otherwise

is a pheromone decay parameter, is the length

of the tour performed by ant

, and is the number of ants.

Pheromone updating is intended to allocate a greater amount

of pheromone to shorter tours. In a sense, this is similar to

a reinforcement learning scheme [2], [26] in which better

solutions get a higher reinforcement (as happens, for exam-

ple, in genetic algorithms under proportional selection). The

pheromone updating formula was meant to simulate the change

in the amount of pheromone due to both the addition of new

pheromone deposited by ants on the visited edges and to

pheromone evaporation.

Pheromone placed on the edges plays the role of a dis-

tributed long-term memory: this memory is not stored locally

within the individual ants, but is distributed on the edges of

the graph. This allows an indirect form of communication

called stigmergy [9], [23]. The interested reader will find a

full description of ant system, of its biological motivations,

and computational results in [12].

Although ant system was useful for discovering good or

optimal solutions for small TSP’s (up to 30 cities), the time

required to find such results made it infeasible for larger

problems. We devised three main changes to improve its

performance which led to the definition of the ACS, presented

in the next section.

III. ACS

The ACS differs from the previous ant system because

of three main aspects: i) the state transition rule provides a

direct way to balance between exploration of new edges and

exploitation of a priori and accumulated knowledge about the

problem, ii) the global updating rule is applied only to edges

which belong to the best ant tour, and iii) while ants construct

a solution a local pheromone updating rule (local updating

rule, for short) is applied.

Informally, the ACS works as follows:

ants are initially

positioned on

cities chosen according to some initialization

rule (e.g., randomly). Each ant builds a tour (i.e., a feasible

solution to the TSP) by repeatedly applying a stochastic greedy

rule (the state transition rule). While constructing its tour, an

ant also modifies the amount of pheromone on the visited

edges by applying the local updating rule. Once all ants have

terminated their tour, the amount of pheromone on edges is

modified again (by applying the global updating rule). As

was the case in ant system, ants are guided, in building their

tours, by both heuristic information (they prefer to choose

short edges) and by pheromone information. An edge with

a high amount of pheromone is a very desirable choice. The

pheromone updating rules are designed so that they tend to

give more pheromone to edges which should be visited by

ants. The ACS algorithm is reported in Fig. 3. In the following

we discuss the state transition rule, the global updating rule,

and the local updating rule.

A. ACS State Transition Rule

In the ACS the state transition rule is as follows: an ant

positioned on node

chooses the city to move to by applying

the rule given by (3)

if exploitation

otherwise biased exploration

(3)