Real-Time Video Super-Resolution with Spatio-Temporal Networks and Motion Compensation

Jose Caballero, Christian Ledig, Andrew Aitken, Alejandro Acosta, Johannes Totz, Zehan Wang, Wenzhe Shi

Twitter

{jcaballero, cledig, aaitken, aacostadiaz, johannes, zehanw, wshi}@twitter.com

Abstract

Convolutional neural networks have enabled accurate image super-resolution in real time. However, recent attempts to benefit from temporal correlations in video super-resolution have been limited to naive or inefficient architectures. In this paper, we introduce spatio-temporal sub-pixel convolution networks that effectively exploit temporal redundancies and improve reconstruction accuracy while maintaining real-time speed. Specifically, we discuss the use of early fusion, slow fusion and 3D convolutions for the joint processing of multiple consecutive video frames. We also propose a novel joint motion compensation and video super-resolution algorithm that is orders of magnitude more efficient than competing methods, relying on a fast multi-resolution spatial transformer module that is end-to-end trainable. These contributions provide both higher accuracy and temporally more consistent videos, which we confirm qualitatively and quantitatively. Relative to single-frame models, spatio-temporal networks can either reduce the computational cost by 30% whilst maintaining the same quality or provide a 0.2 dB gain for a similar computational cost. Results on publicly available datasets demonstrate that the proposed algorithms surpass current state-of-the-art performance in both accuracy and efficiency.

1. Introduction

Image and video super-resolution (SR) are long-standing challenges of signal processing. SR aims at recovering a high-resolution (HR) image or video from its low-resolution (LR) version, and finds direct applications ranging from medical imaging [38, 34] to satellite imaging [5], as well as facilitating tasks such as face recognition [13]. The reconstruction of HR data from an LR input is, however, a highly ill-posed problem that requires additional constraints to be solved. While those constraints are often application-dependent, they usually rely on data redundancy.
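To make the ill-posedness concrete, the toy sketch below shows two different HR signals that collapse to the same LR signal. It assumes a 1D signal and box-filter averaging as the downscaling operator; both are illustrative choices that the text does not specify, and the name `downscale` is hypothetical.

```python
def downscale(signal, factor=2):
    """Average non-overlapping blocks of `factor` samples.

    This box filter is an assumed, simplified downscaling operator
    used only for illustration.
    """
    return [
        sum(signal[i:i + factor]) / factor
        for i in range(0, len(signal), factor)
    ]


# Two distinct hypothetical HR signals.
hr_a = [1.0, 3.0, 5.0, 7.0]
hr_b = [2.0, 2.0, 6.0, 6.0]

# Both map to the same LR observation, so the LR data alone
# cannot tell us which HR signal produced it.
lr_a = downscale(hr_a)
lr_b = downscale(hr_b)

print(lr_a, lr_b, lr_a == lr_b)
```

Because many HR candidates are consistent with one LR observation, extra constraints such as the redundancy discussed here are needed to select among them.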

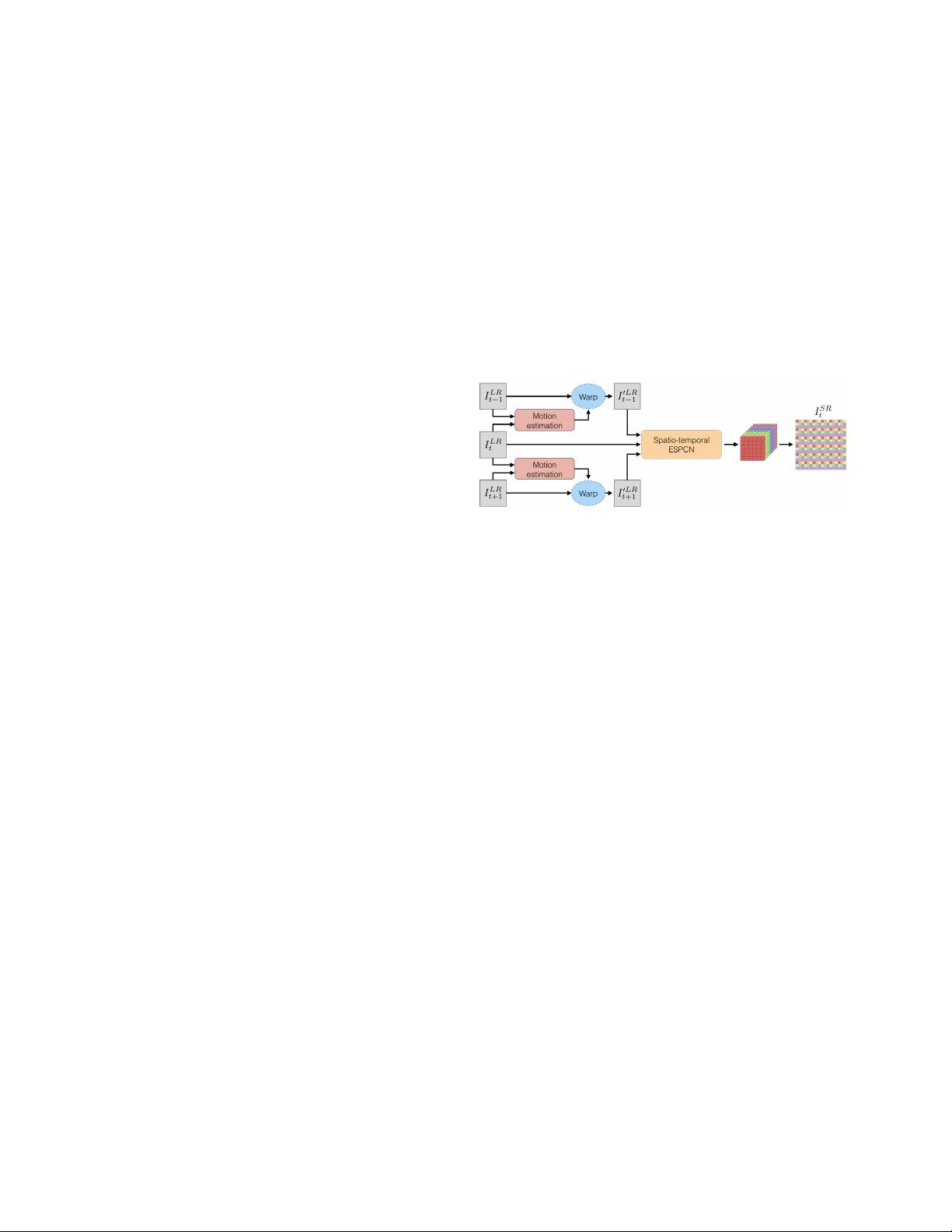

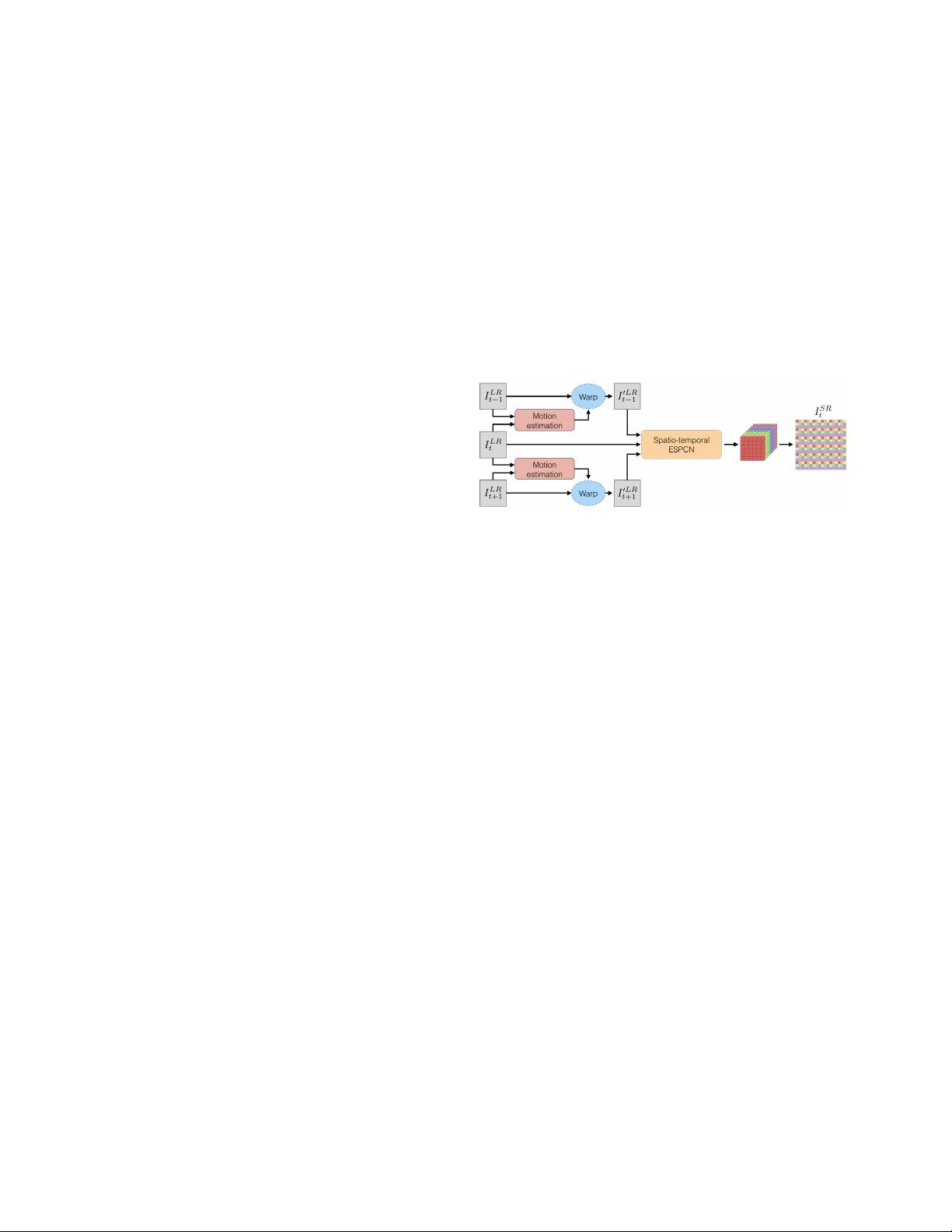

Figure 1: Proposed design for video SR. The motion estimation and ESPCN modules are learnt end-to-end to obtain a motion compensated and fast algorithm.

In single image SR, where only one LR image is provided, methods exploit inherent image redundancy in the form of local correlations to recover lost high-frequency details by imposing sparsity constraints [39] or assuming other types of image statistics such as multi-scale patch recurrence [12]. In multi-image SR [28] it is assumed that different observations of the same scene are available, hence the shared explicit redundancy can be used to constrain the problem and attempt to invert the downscaling process directly. Transitioning from images to videos implies an additional data dimension (time) with a high degree of correlation that can also be exploited to improve performance in terms of accuracy as well as efficiency.

1.1. Related work

Video SR methods have mainly emerged as adaptations of image SR techniques. Kernel regression methods [35] have been shown to be applicable to videos using 3D kernels instead of 2D ones [36]. Dictionary learning approaches, which define LR images as a sparse linear combination of dictionary atoms coupled to an HR dictionary, have also been adapted from images [38] to videos [4]. Another approach is example-based patch recurrence, which assumes patches in a single image or video obey multi-scale relationships, and therefore missing high-frequency content at a given scale can be inferred from coarser scale patches.
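As a rough sketch of the dictionary learning formulation mentioned above, coupled-dictionary SR is commonly written as a sparse coding step followed by a reconstruction step. The notation here is assumed for illustration and does not come from the text: $y$ is an LR patch, $D_l$ and $D_h$ are the coupled LR and HR dictionaries, $\alpha$ is the sparse code, and $\lambda$ is a sparsity weight.

```latex
\begin{aligned}
\hat{\alpha} &= \arg\min_{\alpha} \; \tfrac{1}{2}\,\lVert y - D_l \alpha \rVert_2^2 + \lambda \lVert \alpha \rVert_1, \\
\hat{x} &= D_h \hat{\alpha}.
\end{aligned}
```

The same sparse code $\hat{\alpha}$ is shared by both dictionaries, which is what "coupled" refers to: the code is estimated from the LR patch and then reused to synthesise the HR estimate $\hat{x}$.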

arXiv:1611.05250v2 [cs.CV] 10 Apr 2017