This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination.

WU et al.: TREE-STRUCTURE-BASED APPROACH TO MULTILABEL LEARNING 3

multilabel learning based on SVM algorithm where they use

the average fraction of incorrectly ordered pairs of labels as

cost function.

D. Tree-Based Methods

The methods that adapt the tree structure for the task of

multilabel learning are referred as the tree-based methods.

Clare and King [7] propose the ML-C4.5 algorithm which

adapts the C4.5 algorithm for multilabel data by modifying

the formula of entropy calculation. Comité et al. [2] extend

an alternative decision tree to handle multilabel data, where

the AdaBoost.MH algorithm proposed by Schapire and

Singer [1] is employed to train the multilabel alternating

decision trees. Blockeel et al. [19] propose the concept

of predictive clustering trees (PCTs). Vens et al. [20]

introduce several approaches for the PCTs algorithm to

hierarchy multilabel classification where instances may

belong to multiple classes and these classes are organized

in a hierarchy. Kocev et al. [21] consider two ensemble

learning techniques, bagging and random forests, and apply

them to PCTs for multilabel learning. Tsoumakas et al. [23]

propose the HOMER algorithm to handle data sets with a

large number of labels. HOMER partitions the whole label set

into disjointed subsets. The partition process is implemented

by a balance clustering algorithm. Bengio et al. [24]

put forward an algorithm for learning a tree structure of

classifiers by optimizing the overall tree loss. This algorithm

is originally proposed for multiclass problem, but its way

of constructing a label tree is also applicable to multilabel

problem. Punera et al. [25] develop a new technique that

extracts a suitable hierarchical structure automatically from

a corpus of label documents. Deng et al. [26] present a

novel approach to learn a label tree for learning a large-scale

classification with many classes efficiently. Madjarov and

Gjorgjevikj [27] build decision trees for multilabel

classification, where the leaves contain SVM-based classifiers

to provide multilabel predictions. Fu et al. [28] construct a

tree structure of the labels to describe the label dependency

in multilabel data. Cesa-Bianchi et al. [29] study the

hierarchical classification problem and introduce a refined

evaluation scheme to turn the hierarchical SVM classifier

into an approximated Bayes optimal classifier. They

also study the problem of hierarchical classification in a

taxonomy that allows classifications to be associated with

multiple and/or partial paths. They further propose a new

incremental algorithm to learn classifier for each node of the

taxonomy [30]. Bi and Kwok [31] provide a novel hierarchical

multilabel classification algorithm which can be used on both

tree and DAG structure hierarchies. Zhang and Zhang [32]

use a Bayesian network structure to encode the conditional

dependencies of the labels and the feature set efficiently, with

the feature set as the common parent of all labels. In this

network, multilabel learning is decomposed into a series of

single-label classification problems. Zhang and Zhang [32]

describe the multilabel problem from the perspective of the

Bayesian probability and classify the multilabel learning

methods into the first-order, the second-order, and high-order

approaches on the basis of the order of label correlations in a

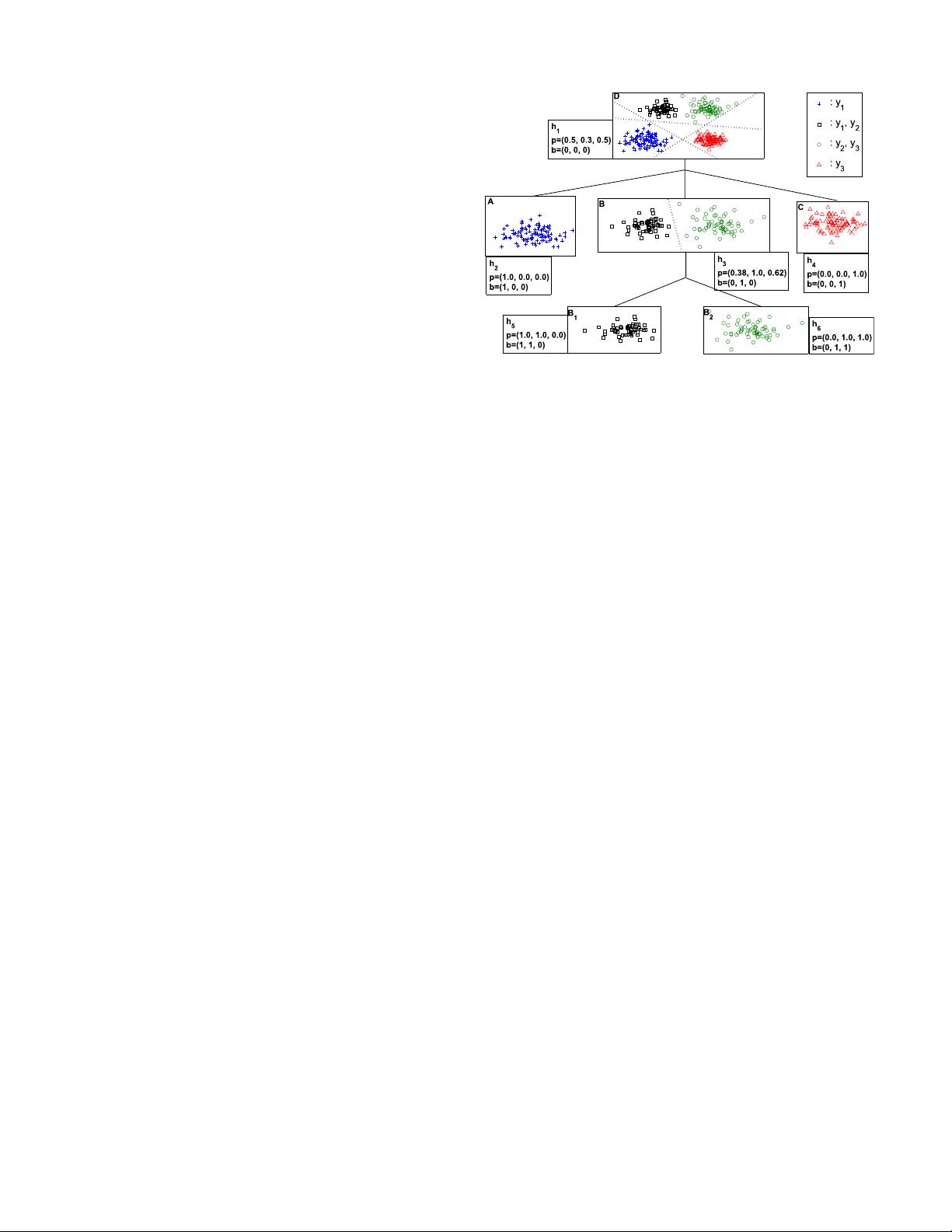

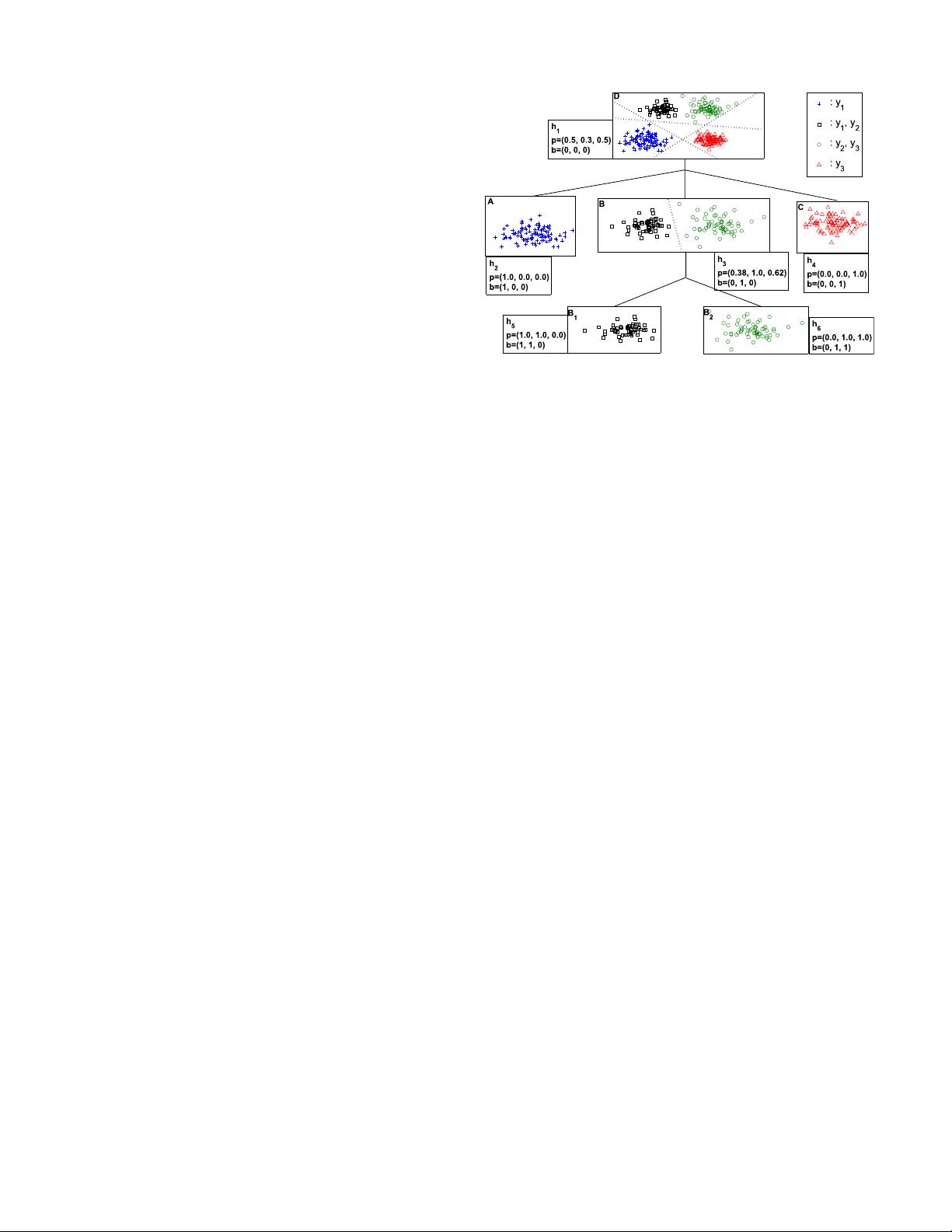

Fig. 1. Example to illustrate the process of building a hierarchical tree

model. h

i

: the one-against-all SVM classifiers learnt at each node. Dash lines:

the decision boundaries of h

i

. b: the predictive label vector indicating the

predictive labels of a node. p: the class purity vector representing the purity of

the classes of a node. In the example, we set λ = 0.8, meaning that the classes

with purity value p

v

(l) ≥ 0.8 are predictive labels and their corresponding

value of b

v

(l) are set to be 1. The predictive labels of a parent node will be

transferred to its child nodes.

system. Our proposed method is different from these previous

works in the way that the predictive labels are formulated

at each node of the hierarchy. The predictive labels are

shared across the hierarchical tree structure and transferred

in a top-down manner. With this assumption, the original

multilabel classification is built into the nested single-label

prediction problems in a hierarchical way, which preserves

the correlations between multiple labels.

III. ML-T

REE FOR MULTILABEL LEARNING

A. Hierarchical Tree Model

In this paper, we develop a new hierarchical tree algorithm

for multilabel learning, that is, ML-Tree. A hierarchical tree

is constructed where the root node corresponds to all the

instances in the training data set D, the instances are recur-

sively partitioned into smaller subsets while moving down the

tree. Each internal node v contains the union of the training

instances of its child nodes, D

v

=∪

k

i=1

D

c

i

, c

i

∈ children(v)

and D

c

i

∩ D

c

j

=∅, i = j for i, j = 1, 2, ...,k. At each

node v of the tree, one-against-all SVM classifiers are used to

partition the corresponding data set D

v

into disjoint subsets.

This process continues until the remaining instances at the

node cannot be further split by the induced classifier.

Fig. 1 shows an example of a hierarchical tree constructed

from a multilabel data set containing three labels in 2-D feature

spaces. Each node v contains a set of one-against-all SVM

classifiers h

v

. Dash lines at the node: the decision boundaries

given by the classifiers. The top node contains all training

data. At the top level, the whole data set is partitioned into

threedatasubsets{A, B, C}. The second subset B is further

partitioned into two subsets {B

1

, B

2

} at the bottom level.

Each node in the tree contains two important components,

that is, class purity vector and predictive label vector (see

Fig. 1), for handling multilabel learning in training phase

as well as prediction in testing phase. Suppose we have a

set of multilabel training instances D

v

at node v, x

i

∈ D

v