Deep Cross-Modality Alignment for Multi-Shot Person Re-IDentification

Zhichao Song, Bingbing Ni, Yichao Yan, Zhe Ren, Yi Xu, Xiaokang Yang

Shanghai Jiao Tong University

{5110309394,nibingbing,yanyichao,sunshinezhe,xuyi,xkyang}@sjtu.edu.cn

ABSTRACT

Multi-shot person Re-IDentification (Re-ID) has recently received more research attention because its problem setting is more realistic than that of single-shot Re-ID in terms of application. While many large-scale single-shot Re-ID human image datasets have been released, most existing multi-shot Re-ID video sequence datasets contain only a few hundred human instances, which hinders further improvement of multi-shot Re-ID performance. To this end, we propose a deep cross-modality alignment network, which jointly explores both human sequence pairs and image pairs to facilitate training better multi-shot human Re-ID models, namely by transferring knowledge from image data to sequence data. To mitigate the modality mismatch issue, the proposed network is equipped with an image-to-sequence adaptation module, called the cross-modality alignment sub-network, which maps each human image into a pseudo human sequence to facilitate knowledge transfer and joint training. Extensive experimental results on several multi-shot person Re-ID benchmarks demonstrate the substantial performance gain brought by the proposed network.

KEYWORDS

person Re-ID, cross-modality alignment network, knowledge transferring

ACM Reference Format:

Zhichao Song, Bingbing Ni, Yichao Yan, Zhe Ren, Yi Xu, Xiaokang

Yang. 2017. Deep Cross-Mo dality Alignment for Multi-Shot Person

Re-IDentification. In Proceedings of MM ’17, Mountain View, CA,

USA, October 23–27, 2017, 9 pages.

https://doi.org/10.1145/3123266.3123324

1 INTRODUCTION

Multi-shot person Re-ID is an important problem in video

surveillance. Compared with traditional single-shot person

Re-ID, the problem setting of multi-shot person Re-ID is

closer to real-world application, i.e., in surveillance, usually a

Permission to make digital or hard copies of all or part of this work

for personal or classroom use is granted without fee provided that

copies are not made or distributed for profit or commercial advantage

and that copies bear this notice and the full citation on the first

page. Copyrights for components of this work owned by others than

ACM must be honored. Abstracting with credit is permitted. To copy

otherwise, or republish, to post on servers or to redistribute to lists,

requires prior specific permission and/or a fee. Request permissions

from permissions@acm.org.

MM ’17, October 23–27, 2017, Mountain View, CA, USA

© 2017 Association for Computing Machinery.

ACM ISBN 978-1-4503-4906-2/17/10. . . $15.00

https://doi.org/10.1145/3123266.3123324

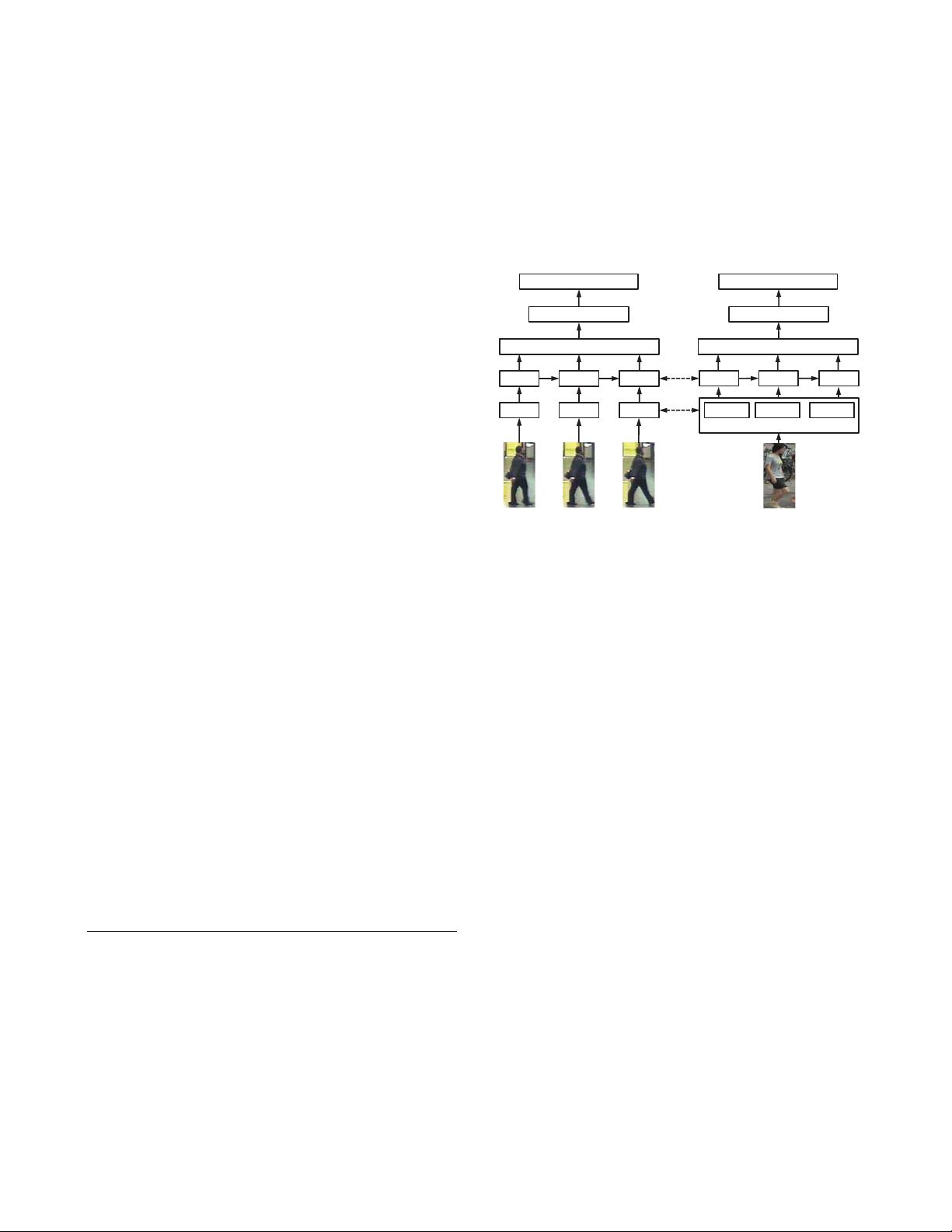

CNN

Temporal Pooling

RNN

CNN

RNN

CNN

RNN

RNN

RNN

RNN

CGMCNN

Temporal Pooling

Sequence Feature Sequence Feature

Cross-Modality Alignment Network

Video Sequence Loss Pseudo Sequence Loss

Shared

Weights

MGM

Video Sequence

Single Image

Figure 1: Pipeline of our proposed network. The in-

put data includes two parts: the video sequence and

the single image. The single image gets though the

cross-modality alignment network to produce a pseu-

do sequence. Both types of data go through the par-

allel sub-network which contains CNNs and RNNs.

Finally, video sequence and pseudo sequence com-

pute their loss value independently.

video sequence of human is observable, instead of a snapshot

of this person. There exist several datasets for multi-shot

person Re-ID, however, most of them have only hundreds of

video sequences, which is far from sufficient for training a high

performance multi-shot person Re-ID model. For example,

the well-known iLIDS-VID dataset [

29

] contains 600 image

sequences for 300 people. The PRID 2011 dataset [

11

] includes

400 image sequences for 200 people.
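The data flow of the pipeline in Figure 1 can be sketched in a few lines of NumPy. This is a toy illustration under loud assumptions, not the authors' implementation: the "CNN" is a single linear layer with tanh, the RNN is a vanilla recurrent cell, the cross-modality alignment sub-network is replaced by a hypothetical `pseudo_sequence` function that merely stacks cyclically shifted copies of the image, and `contrastive_loss` is an assumed stand-in for the paper's sequence losses. All sizes and names are hypothetical. What the sketch does show is the structure: one shared parameter set encodes both real and pseudo sequences, and the two losses are computed independently (and, as a further assumption, summed for joint training).

```python
import numpy as np

rng = np.random.default_rng(0)

H, W = 8, 8      # hypothetical image size (tiny, for illustration)
FEAT_HID = 32    # hypothetical feature / hidden size
T = 8            # hypothetical sequence length

# One parameter set shared by the video branch and the pseudo-sequence branch.
params = {
    "W_cnn": rng.normal(0.0, 0.1, (H * W, FEAT_HID)),    # stand-in for the CNN
    "W_xh": rng.normal(0.0, 0.1, (FEAT_HID, FEAT_HID)),  # RNN input weights
    "W_hh": rng.normal(0.0, 0.1, (FEAT_HID, FEAT_HID)),  # RNN recurrent weights
}


def pseudo_sequence(image, length=T):
    """Hypothetical stand-in for the cross-modality alignment sub-network:
    stacks cyclically shifted copies of one image into a (length, H, W) array."""
    return np.stack([np.roll(image, shift=t, axis=1) for t in range(length)])


def encode_sequence(frames, p=params):
    """Per-frame 'CNN' -> vanilla RNN -> temporal average pooling."""
    h = np.zeros(FEAT_HID)
    outputs = []
    for frame in frames:
        x = np.tanh(frame.reshape(-1) @ p["W_cnn"])   # frame feature
        h = np.tanh(x @ p["W_xh"] + h @ p["W_hh"])    # recurrent update
        outputs.append(h)
    return np.mean(outputs, axis=0)                   # temporal pooling


def contrastive_loss(f1, f2, same_id, margin=1.0):
    """Assumed pairwise loss: pull matching pairs together, push others apart."""
    d = np.linalg.norm(f1 - f2)
    return 0.5 * d ** 2 if same_id else 0.5 * max(0.0, margin - d) ** 2


# Toy inputs: two video sequences and two single images.
video_a, video_b = rng.random((T, H, W)), rng.random((T, H, W))
img_a, img_b = rng.random((H, W)), rng.random((H, W))

# The two losses are computed independently, then summed (an assumption).
loss_video = contrastive_loss(encode_sequence(video_a), encode_sequence(video_b), same_id=True)
loss_pseudo = contrastive_loss(encode_sequence(pseudo_sequence(img_a)),
                               encode_sequence(pseudo_sequence(img_b)), same_id=False)
total = loss_video + loss_pseudo
print(f"video loss {loss_video:.4f}, pseudo loss {loss_pseudo:.4f}, total {total:.4f}")
```

Because both branches read the same `params`, a trainable version of this sketch would let gradients from the pseudo-sequence loss update the same encoder used for real videos, which is one way image data could benefit the sequence model.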

Although multi-shot person Re-ID data are scarce, many large-scale single-shot person Re-ID datasets exist. For example, the CUHK03 dataset [15] contains 1467 human identities. Similarly, the Market-1501 dataset [34] contains 32668 bounding boxes of 1501 human identities. It is therefore natural to ask whether these rich image data can be used to train better sequence-based multi-shot person Re-ID algorithms. In other words, can the knowledge obtained from image datasets be transferred to sequence datasets?
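The scale gap can be made concrete with a quick calculation that uses only the counts quoted in this section. It is a rough comparison: it counts sequences and bounding boxes as single samples and ignores that each video sequence contains many frames, and CUHK03 is omitted from the per-identity figure because no sample count is quoted for it here.

```python
# Counts as quoted in the text; sequence datasets vs. single-image datasets.
seq_ids = {"iLIDS-VID": 300, "PRID 2011": 200}
img_ids = {"CUHK03": 1467, "Market-1501": 1501}
samples = {"iLIDS-VID": 600, "PRID 2011": 400, "Market-1501": 32668}

ids = {**seq_ids, **img_ids}

# Samples (sequences or bounding boxes) per identity.
per_identity = {name: round(n / ids[name], 2) for name, n in samples.items()}

# How many times more identities each image dataset has than the largest sequence dataset.
largest_seq = max(seq_ids.values())
id_ratio = {name: round(n / largest_seq, 2) for name, n in img_ids.items()}

print(per_identity)  # {'iLIDS-VID': 2.0, 'PRID 2011': 2.0, 'Market-1501': 21.76}
print(id_ratio)      # {'CUHK03': 4.89, 'Market-1501': 5.0}
```

Even on this crude count, each image dataset offers roughly five times as many identities as the largest sequence dataset mentioned.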

A typical way of performing cross-modality knowledge transfer in deep learning is the DeepID face verification pipeline [28]: first pre-train a deep CNN model on a human classification dataset, which contains a large number
