constraint by minimizing the brightness error

$$
l_{color} = \int_{H'_2} \left\| I'_2(d_1) - I_2 \right\|_1 + \int_{H'_1} \left\| I'_1(d_2) - I_1 \right\|_1 \tag{4}
$$

where $H'_2 = H'_2(H_1, d_1)$ is the region warped from $H_1$, computed from the head poses and $d_1$ in the transformation process described above; $H'_1 = H'_1(H_2, d_2)$ is defined similarly. We also apply a gradient discrepancy loss, which is robust to illumination changes and is thus widely adopted in stereo and optical flow estimation [6, 5, 56]:

$$
l_{grad} = \int_{H'_2} \left\| \nabla I'_2(d_1) - \nabla I_2 \right\|_1 + \int_{H'_1} \left\| \nabla I'_1(d_2) - \nabla I_1 \right\|_1 \tag{5}
$$

where ∇ denotes the gradient operator. To impose a spatial

smoothness prior, we add a second-order smoothness loss

$$
l_{smooth} = \int_{H_1} |\Delta d_1| + \int_{H_2} |\Delta d_2| \tag{6}
$$

where ∆ denotes the Laplace operator.
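The three losses above can be approximated on a pixel grid as follows. This is a minimal NumPy sketch, not the authors' implementation: it assumes the warped images $I'_2(d_1)$, $I'_1(d_2)$ and binary masks for $H'_2$, $H'_1$ (and $H_1$, $H_2$) are already available, replaces each region integral with a sum over masked pixels, and uses forward differences for $\nabla$ and a 5-point stencil for $\Delta$. All function names are hypothetical.

```python
import numpy as np

def masked_l1(a, b, mask):
    """Discrete stand-in for the region integral of ||a - b||_1."""
    diff = np.abs(a - b)
    if diff.ndim == 3:                 # H x W x C: L1 norm over color channels
        diff = diff.sum(axis=-1)
    return float((diff * mask).sum())

def gradients(img):
    """Forward-difference gradients along x and y (zero in last column/row)."""
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, :-1] = img[:, 1:] - img[:, :-1]
    gy[:-1, :] = img[1:, :] - img[:-1, :]
    return gx, gy

def laplacian(d):
    """5-point discrete Laplacian of a depth map (left at zero on the border)."""
    lap = np.zeros_like(d)
    lap[1:-1, 1:-1] = (d[:-2, 1:-1] + d[2:, 1:-1] + d[1:-1, :-2]
                       + d[1:-1, 2:] - 4.0 * d[1:-1, 1:-1])
    return lap

def color_loss(I1_to_2, I2, H2w, I2_to_1, I1, H1w):
    """Eq. (4): I1_to_2 plays the role of I'_2(d_1), H2w the mask of H'_2."""
    return masked_l1(I1_to_2, I2, H2w) + masked_l1(I2_to_1, I1, H1w)

def grad_loss(I1_to_2, I2, H2w, I2_to_1, I1, H1w):
    """Eq. (5): the same comparison applied to image gradients."""
    total = 0.0
    for warped, target, mask in ((I1_to_2, I2, H2w), (I2_to_1, I1, H1w)):
        for g_warped, g_target in zip(gradients(warped), gradients(target)):
            total += masked_l1(g_warped, g_target, mask)
    return total

def smooth_loss(d1, mask1, d2, mask2):
    """Eq. (6): L1 norm of the depth Laplacian inside each region mask."""
    return float((np.abs(laplacian(d1)) * mask1).sum()
                 + (np.abs(laplacian(d2)) * mask2).sum())
```

Adding a constant brightness offset to a warped image raises `color_loss` but leaves `grad_loss` at (numerically) zero, which is one concrete form of the illumination robustness that motivates Eq. (5). Likewise, a planar depth ramp has zero Laplacian, so the second-order term only penalizes curvature, not slanted surfaces.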

Face depth as condition and output. Instead of directly estimating hair and ear depth from the input image $I$, we project the reconstructed face shape $F$ onto the image plane to get a face depth map $d_f$. We make $d_f$ an extra conditional input concatenated with $I$. Note that $d_f$ provides beneficial information (e.g., head pose, camera distance) for hair and ear depth estimation. In addition, it allows the known face depth around the contour to be easily propagated to the adjacent regions with unknown depth.
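Concretely, the conditional input can be formed by stacking $d_f$ as a fourth channel next to the RGB channels of $I$. The sketch below assumes channel-last arrays and fills pixels outside the projected face with a constant (`fill_value`); both the layout and the fill value are assumptions, since the text does not specify them, and the function name is hypothetical.

```python
import numpy as np

def make_network_input(image, face_depth, face_mask, fill_value=0.0):
    """Concatenate the RGB image I (H x W x 3) with the face depth map d_f.

    face_depth: H x W depth rendered from the reconstructed face F.
    face_mask:  H x W boolean, True where the projected face covers a pixel.
    fill_value: depth assigned outside the face (an assumed convention).
    """
    d_f = np.where(face_mask, face_depth, fill_value)
    # Result is H x W x 4: three color channels plus one face-depth channel.
    return np.concatenate([image, d_f[..., None]], axis=-1)
```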

More importantly, we train the network to also predict the depth of the facial region, using $d_f$ as the target:

$$
l_{face} = \int_{F_1 \setminus (S_{h_1} \cap F_1)} |d_1 - d_{f_1}| + \int_{F_2 \setminus (S_{h_2} \cap F_2)} |d_2 - d_{f_2}| \tag{7}
$$

where $S_h$ denotes the hair region defined by segmentation. Note that learning face depth via $l_{face}$ should not introduce much extra burden for the network, since $d_f$ is provided as input. But crucially, we can now easily enforce consistency between the reconstructed 3D face and the estimated 3D geometry in other regions, since in this case we calculate the smoothness loss across the whole head regions $S_1$ and $S_2$:

$$
l_{smooth} = \int_{S_1} |\Delta d_1| + \int_{S_2} |\Delta d_2| \tag{8}
$$
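A discrete version of the face-depth loss (7) is sketched below. Each integration region $F \setminus (S_h \cap F)$ is simply the face pixels not covered by hair, so hair-occluded face pixels are excluded from supervision. The sketch assumes boolean face and hair masks on the image grid and replaces the integral with a pixel sum; the function name is hypothetical. Eq. (8) has the same form as Eq. (6), only with the whole-head masks $S_1, S_2$ in place of $H_1, H_2$, so it is not repeated here.

```python
import numpy as np

def face_loss(d1, df1, F1, Sh1, d2, df2, F2, Sh2):
    """Eq. (7): L1 depth error on F minus (S_h ∩ F).

    d*:  predicted depth maps (H x W).
    df*: face depth d_f rendered from the reconstructed face.
    F*, Sh*: boolean face and hair masks (H x W).
    """
    total = 0.0
    for d, df, F, Sh in ((d1, df1, F1, Sh1), (d2, df2, F2, Sh2)):
        visible_face = F & ~(Sh & F)   # equivalent to F & ~Sh
        total += float(np.abs(d - df)[visible_face].sum())
    return total
```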

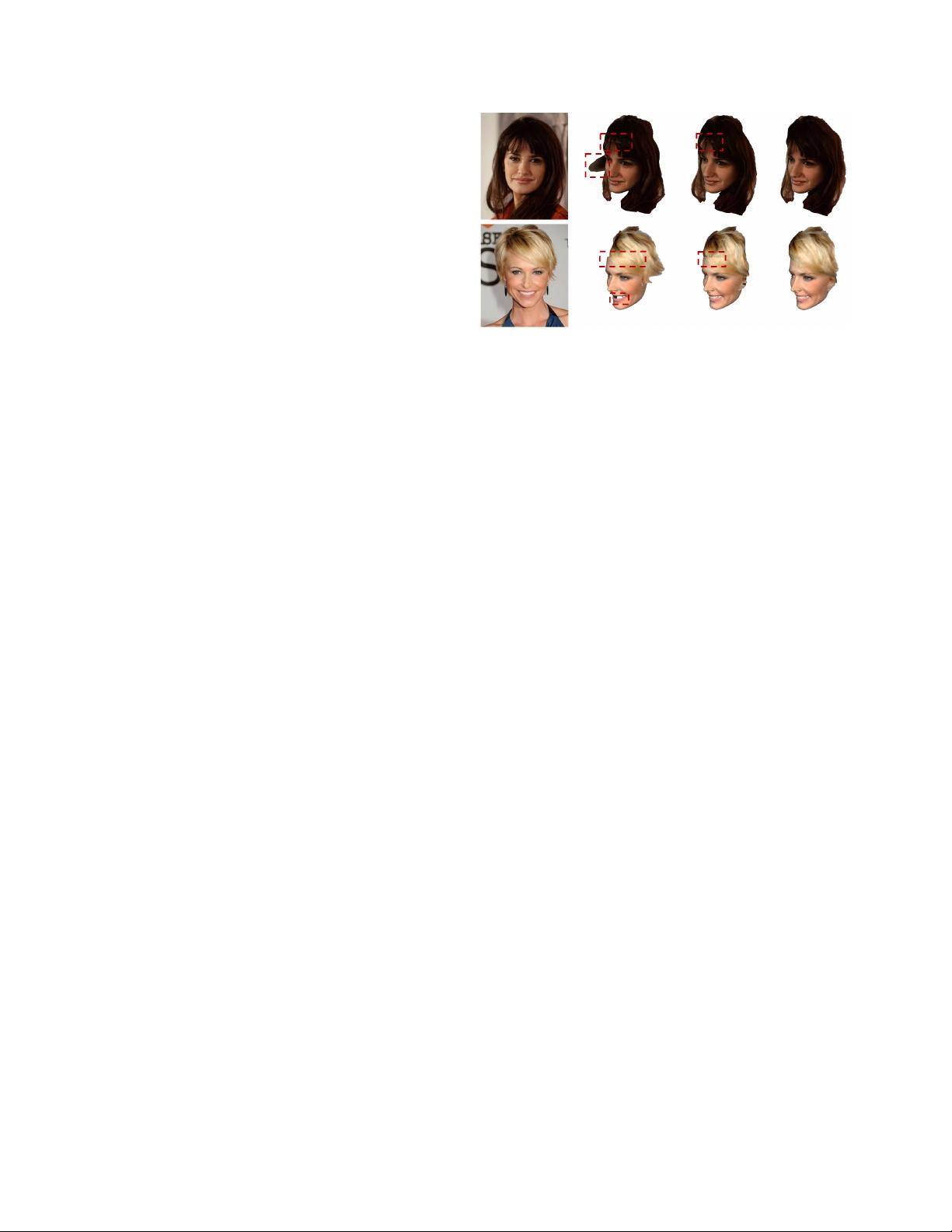

Figure 2 (2nd and 3rd columns) compares the results with and without face depth. We also show quantitative comparisons in Table 1 (2nd and 3rd columns). As can be observed, using face depth significantly improves head geometry consistency and reconstruction accuracy.

Figure 2: 3D head reconstruction results of our method with different settings (columns: input; w/o face depth; with face depth; + $l_{layer}$).

Layer-order loss. Hair often occludes part of the facial region, leading to two depth layers. To ensure the correct relative position between the hair and the occluded face region (i.e., the former should be in front of the latter), we introduce a layer-order loss defined as:

$$
l_{layer} = \int_{S_{h_1} \cap F_1} \max(0, d_1 - d_{f_1}) + \int_{S_{h_2} \cap F_2} \max(0, d_2 - d_{f_2}) \tag{9}
$$

which penalizes incorrect layer order. As shown in Fig. 2, the reconstructed shapes are more accurate with $l_{layer}$.
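The layer-order term (9) is a one-sided hinge, sketched below on a pixel grid. The sketch assumes depth grows away from the camera, which is what makes $\max(0, d - d_f)$ penalize hair lying behind the face it covers; masks are boolean arrays and the function name is hypothetical.

```python
import numpy as np

def layer_order_loss(d1, df1, F1, Sh1, d2, df2, F2, Sh2):
    """Eq. (9): penalize hair pixels inside the face region (S_h ∩ F)
    whose predicted depth d exceeds the face depth d_f.

    Assumes larger depth = farther from the camera, so correctly ordered
    hair satisfies d <= d_f and contributes nothing.
    """
    total = 0.0
    for d, df, F, Sh in ((d1, df1, F1, Sh1), (d2, df2, F2, Sh2)):
        occluding_hair = Sh & F
        total += float(np.maximum(0.0, d - df)[occluding_hair].sum())
    return total
```

Only violations are penalized: hair already in front of the face gives zero loss, so the term constrains the ordering without pulling hair toward any particular depth.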

Network structure. We apply a simple encoder-decoder structure using ResNet-18 [25] as the backbone. We discard its global average pooling and final fc layer, and append several transposed convolutional layers to upsample the feature maps to full resolution. Skip connections are added at the 64×64, 32×32 and 16×16 resolutions. The input image size is 256×256. More details of the network structure can be found in the supplementary material.
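The skip resolutions follow from the standard ResNet-18 downsampling schedule: with a 256×256 input, the first three residual stages output 64×64, 32×32 and 16×16 maps, and the last stage outputs 8×8. The arithmetic below is our own reading of the text; which stages the skips actually tap, and the exact decoder layout, are left to the supplementary material. The count of upsampling steps further assumes (hypothetically) that each transposed convolution doubles the resolution.

```python
import math

def resnet18_feature_sizes(input_size=256):
    """Spatial size of the feature maps after each stage of a standard ResNet-18."""
    sizes = {}
    size = input_size // 2        # 7x7 conv1, stride 2
    sizes["conv1"] = size
    size //= 2                    # 3x3 max pool, stride 2
    sizes["layer1"] = size        # layer1 keeps the resolution
    for stage in ("layer2", "layer3", "layer4"):
        size //= 2                # each later stage downsamples by 2
        sizes[stage] = size
    return sizes


sizes = resnet18_feature_sizes(256)
# 2x upsampling steps needed to go from the deepest map back to 256x256.
upsampling_steps = int(math.log2(256 // sizes["layer4"]))
```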

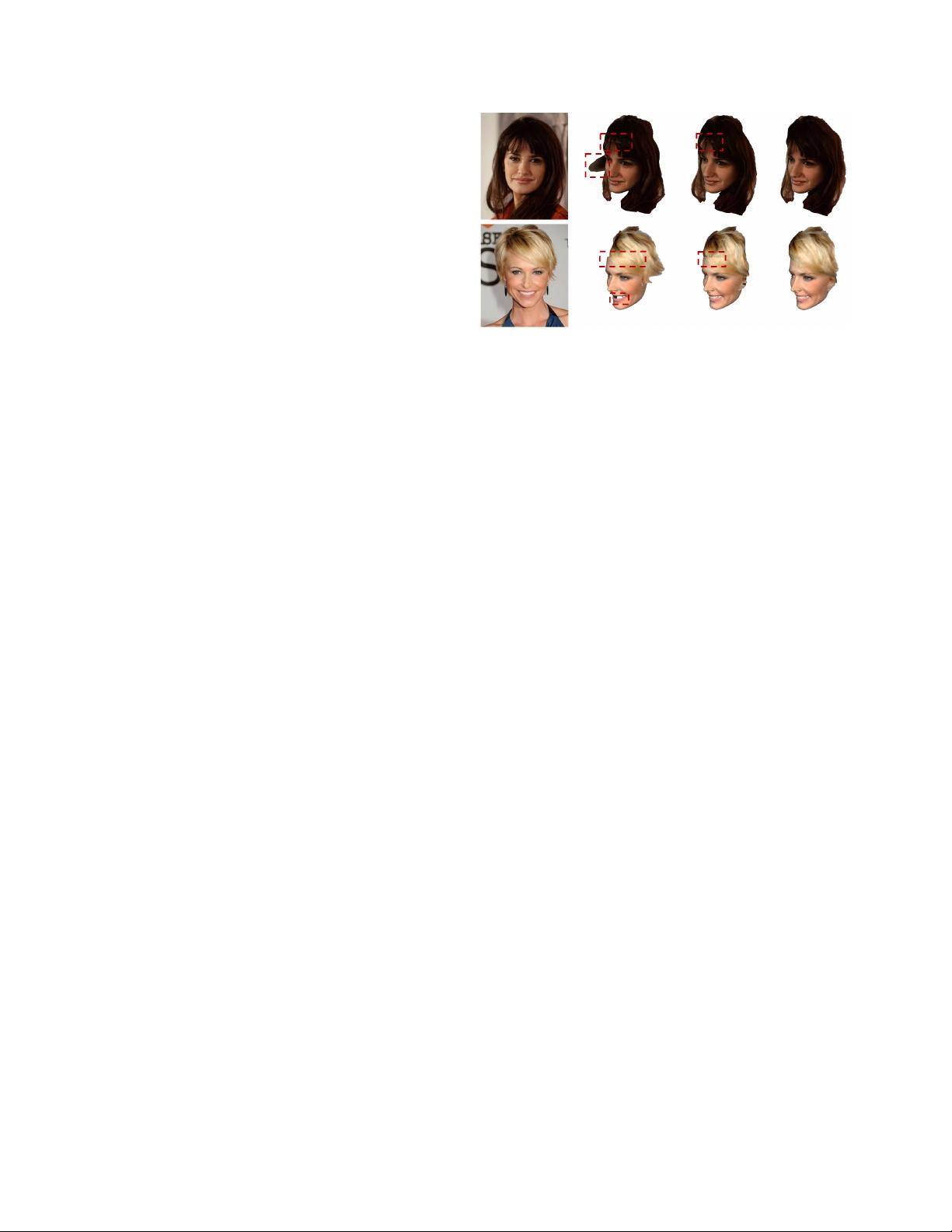

5. Single-Image Head Pose Manipulation

Given the 3D head model reconstructed from the input portrait image, we modify its pose and synthesize new portrait images, as described below.

5.1. 3D Pose Manipulation and Projection

To change the head pose, one simply needs to apply a rigid 3D transformation to the 3DMM face $F$ and the hair-ear mesh $H$, given the target pose $\bar{p}$ or displacement $\delta p$. After the pose is changed, we reproject the 3D model onto the 2D image plane to get coarse synthesis results. Two examples are shown in Fig. 3.
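A minimal sketch of this step is given below. It assumes camera-space vertices, a pose expressed as yaw/pitch/roll angles, rotation about a pivot such as the head center, and a pinhole camera with focal length `focal` and principal point `center`. These parameterizations and all function names are assumptions for illustration; the text only states that a rigid transformation is applied and the model is reprojected.

```python
import numpy as np

def rotation_matrix(yaw, pitch, roll):
    """R = Rz(roll) @ Ry(yaw) @ Rx(pitch), angles in radians (assumed convention)."""
    cy, sy = np.cos(yaw), np.sin(yaw)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cr, sr = np.cos(roll), np.sin(roll)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cp, -sp], [0.0, sp, cp]])
    Ry = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    Rz = np.array([[cr, -sr, 0.0], [sr, cr, 0.0], [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx

def repose(vertices, R, t, pivot):
    """Rigidly move N x 3 vertices: x' = R (x - pivot) + pivot + t."""
    return (vertices - pivot) @ R.T + pivot + t

def project(vertices, focal, center):
    """Pinhole projection to pixel coordinates; also returns per-vertex depth."""
    z = vertices[:, 2]
    uv = focal * vertices[:, :2] / z[:, None] + center
    return uv, z
```

Because $F$ and $H$ must move together, the same `R` and `t` would be applied to the stacked vertices, e.g. `np.vstack([face_vertices, hair_ear_vertices])` (both names hypothetical).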

5.2. Image Refinement with Adversarial Learning

The reprojected images suffer from several issues. Notably, due to pose and expression changes, some holes may appear where the missing background and/or head region should be hallucinated, akin to an image inpainting process. Besides, the reprojection procedure may also induce certain artifacts due to imperfect rendering.
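To see how such holes arise, consider a naive renderer that forward-projects surface points to their nearest pixel and keeps the closest one per pixel via a z-buffer. This is an illustrative stand-in, not the rendering used here: any pixel that no projected point reaches (e.g., background previously hidden behind the head) stays empty and is flagged in the returned hole mask. The function name is hypothetical.

```python
import numpy as np

def splat(uv, depth, colors, height, width):
    """Nearest-pixel, z-buffered point splatting.

    uv: N x 2 pixel coordinates, depth: N depths, colors: N x C colors.
    Returns the rendered image and a boolean mask of pixels no point reached.
    """
    image = np.zeros((height, width, colors.shape[1]))
    zbuf = np.full((height, width), np.inf)
    for (u, v), z, c in zip(np.rint(uv).astype(int), depth, colors):
        if 0 <= u < width and 0 <= v < height and z < zbuf[v, u]:
            zbuf[v, u] = z          # keep the nearest surface at this pixel
            image[v, u] = c
    return image, np.isinf(zbuf)
```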