1.1 What is computer vision?

As humans, we perceive the three-dimensional structure of the world around us with apparent

ease. Think of how vivid the three-dimensional percept is when you look at a vase of flowers

sitting on the table next to you. You can tell the shape and translucency of each petal through

the subtle patterns of light and shading that play across its surface and effortlessly segment

each flower from the background of the scene (Figure 1.1). Looking at a framed group por-

trait, you can easily count (and name) all of the people in the picture and even guess at their

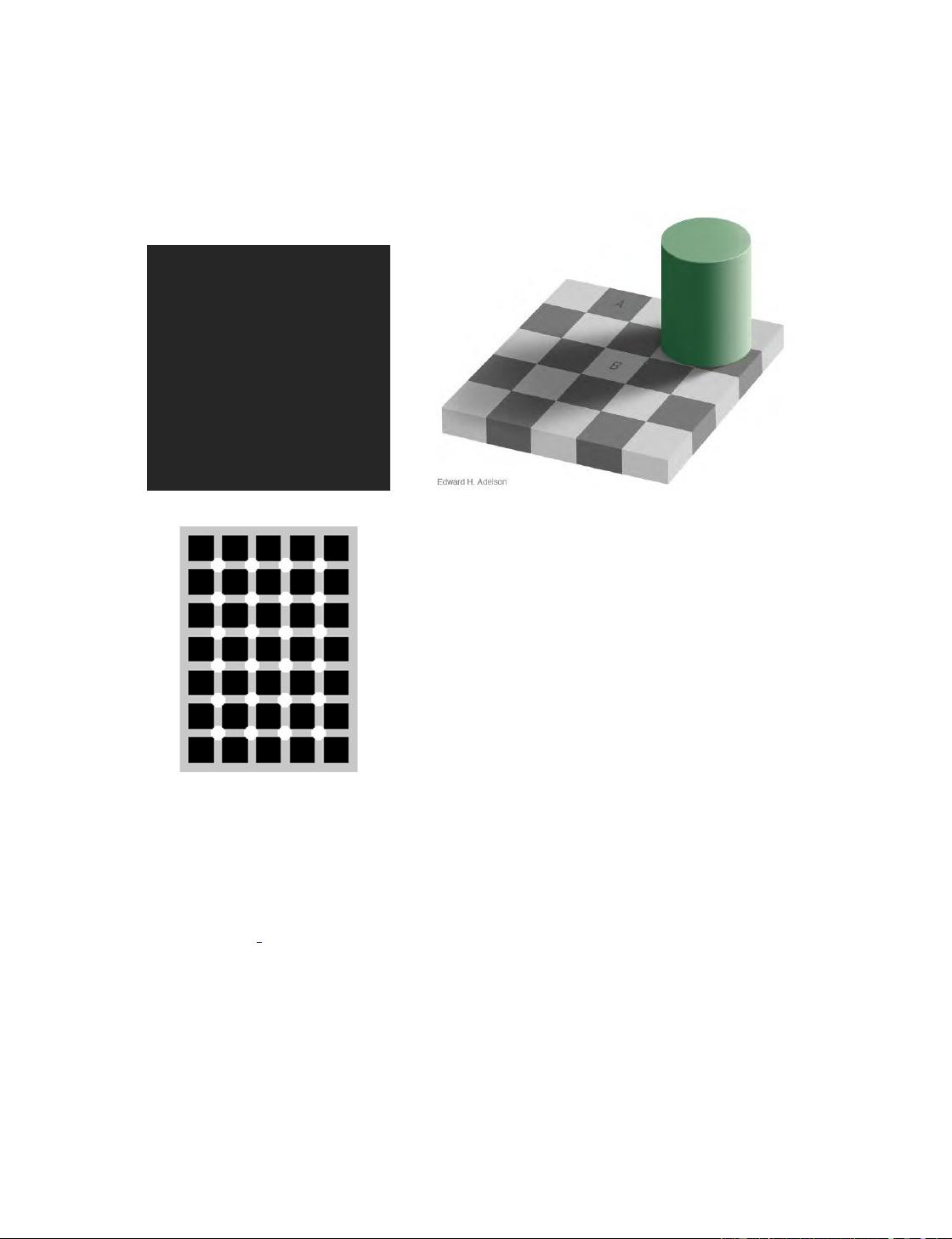

emotions from their facial appearance. Perceptual psychologists have spent decades trying to

understand how the visual system works and, even though they can devise optical illusions

1

to tease apart some of its principles (Figure 1.3), a complete solution to this puzzle remains

elusive (Marr 1982; Palmer 1999; Livingstone 2008).

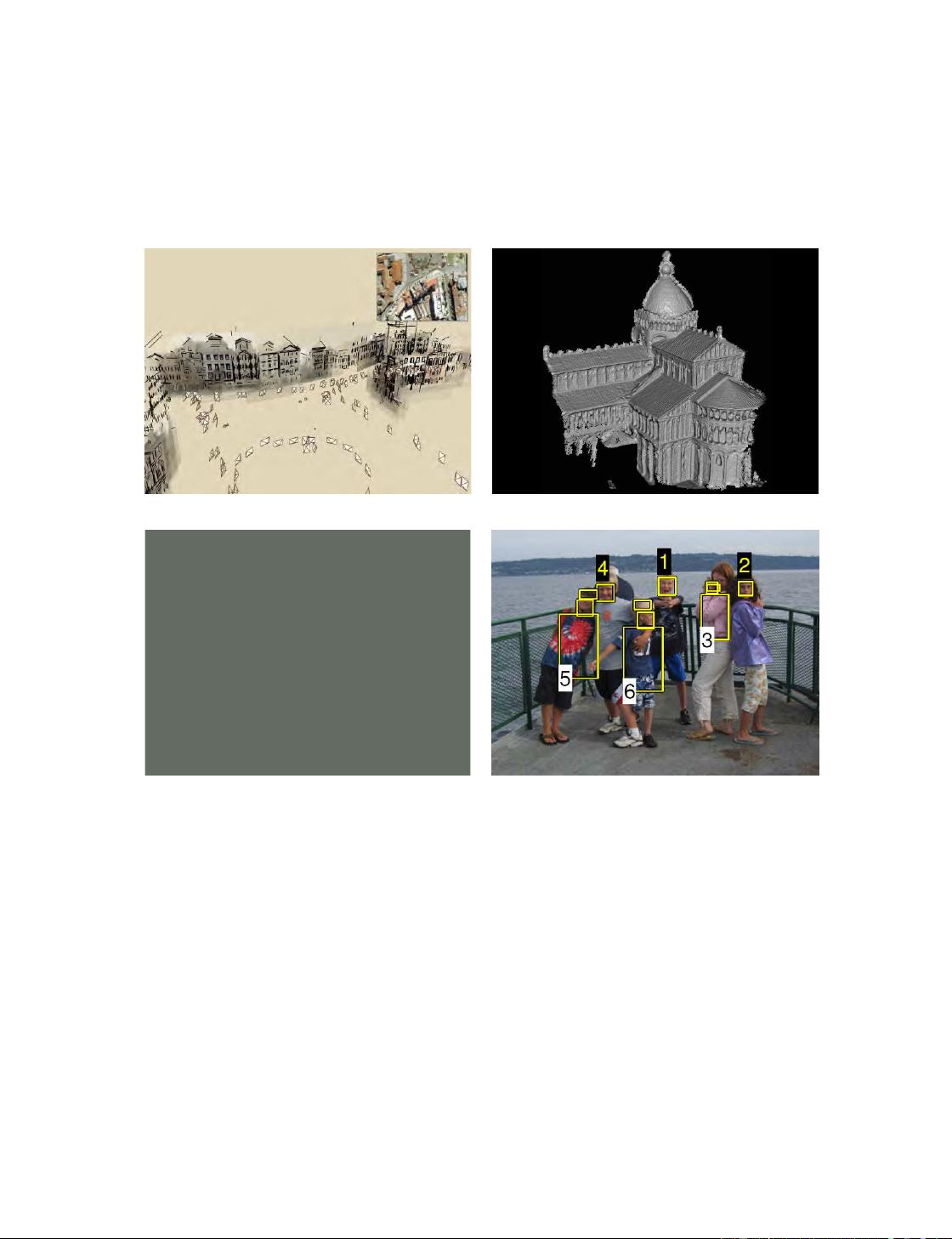

Researchers in computer vision have been developing, in parallel, mathematical techniques for recovering the three-dimensional shape and appearance of objects in imagery. We now have reliable techniques for accurately computing a partial 3D model of an environment from thousands of partially overlapping photographs (Figure 1.2a). Given a large enough set of views of a particular object or façade, we can create accurate dense 3D surface models using stereo matching (Figure 1.2b). We can track a person moving against a complex background (Figure 1.2c). We can even, with moderate success, attempt to find and name all of the people in a photograph using a combination of face, clothing, and hair detection and recognition (Figure 1.2d). However, despite all of these advances, the dream of having a computer interpret an image at the same level as a two-year-old (for example, counting all of the animals in a picture) remains elusive.

Why is vision so difficult? In part, it is because vision is an inverse problem, in which we seek to recover some unknowns given insufficient information to fully specify the solution. We must therefore resort to physics-based and probabilistic models to disambiguate between potential solutions. However, modeling the visual world in all of its rich complexity is far more difficult than, say, modeling the vocal tract that produces spoken sounds.
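To make the inverse-problem point concrete, here is a minimal sketch under an assumed ideal pinhole camera. The function name `project`, the unit focal length, and the sample points are illustrative choices, not something defined in this text. Two different 3D points that lie on the same ray through the camera center land on the same image location, so a single image measurement cannot by itself determine depth.

```python
def project(point, focal_length=1.0):
    """Project a 3D point (x, y, z) onto an image plane.

    Assumes an idealized pinhole camera at the origin looking down
    the +z axis; real cameras add lens distortion, noise, and so on.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    return (focal_length * x / z, focal_length * y / z)


# Two hypothetical scene points on the same viewing ray:
# the second is exactly twice as far from the camera as the first.
near = (1.0, 2.0, 4.0)
far = (2.0, 4.0, 8.0)

# The forward model maps both points to the same image coordinates,
# so inverting it from this one observation is ambiguous.
print(project(near))
print(project(far))
print(project(near) == project(far))
```

The forward direction is a simple, well-defined computation, while the inverse admits a whole ray of candidate answers. Extra information, such as additional views or prior models of the scene, is what lets a vision system choose among them.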

The forward models that we use in computer vision are usually developed in physics (radiometry, optics, and sensor design) and in computer graphics. Both of these fields model how objects move and animate, how light reflects off their surfaces, is scattered by the atmosphere, refracted through camera lenses (or human eyes), and finally projected onto a flat (or curved) image plane. While computer graphics are not yet perfect (no fully computer-animated movie with human characters has yet succeeded at crossing the uncanny valley² that separates real humans from android robots and computer-animated humans), in limited domains, such as rendering a still scene composed of everyday objects or animating extinct creatures such as dinosaurs, the illusion of reality is perfect.

In computer vision, we are trying to do the inverse, i.e., to describe the world that we see in one or more images and to reconstruct its properties, such as shape, illumination, and color distributions. It is amazing that humans and animals do this so effortlessly, while computer vision algorithms are so error prone. People who have not worked in the field often underestimate the difficulty of the problem. (Colleagues at work often ask me for software to find and name all the people in photos, so they can get on with the more “interesting” work.) This

¹ http://www.michaelbach.de/ot/sze_muelue

² The term uncanny valley was originally coined by roboticist Masahiro Mori as applied to robotics (Mori 1970). It is also commonly applied to computer-animated films such as Final Fantasy and Polar Express (Geller 2008).