没有合适的资源?快使用搜索试试~ 我知道了~

首页数据挖掘入门经典:原理与技术详解

数据挖掘入门经典:原理与技术详解

需积分: 9 3 下载量 83 浏览量

更新于2024-07-31

收藏 3.67MB PDF 举报

《数据挖掘原理》是一本由David Hand、Heikki Mannila和Padhraic Smyth合著的经典之作,作为麻省理工学院的参考教材,它在2001年由The MIT Press出版,共546页。本书深入探讨了如何从大型数据库中提取有用信息的数学与科学基础,对于想要进入数据挖掘领域的学习者来说,是入门的宝贵指南。

该书分为多个章节,内容涵盖了数据挖掘的各个方面:

1. 介绍:首先概述了数据挖掘的概念和目标,帮助读者理解其在实际应用中的重要性。

2. 测量与数据:这部分着重于数据的质量评估和预处理,包括数据清洗、缺失值处理等,为后续分析奠定坚实的基础。

3. 数据可视化与探索:通过图表和可视化工具展示数据特征,使得复杂的数据结构变得直观,有助于发现潜在模式。

4. 数据分析与不确定性:讲解了如何处理不确定性,如噪声和异常值,以及概率和统计方法在数据挖掘中的运用。

5. 数据挖掘算法概览:系统地介绍了各类数据挖掘技术,如分类、聚类、关联规则挖掘等核心算法。

6. 模型与模式:讨论了各种模型的构建和解释,以及如何从数据中提炼出有意义的模式。

7. 评分函数:阐述了评价数据挖掘结果的度量标准,如准确率、召回率等,以便优化算法性能。

8. 搜索与优化方法:涉及搜索策略和优化技术,如何在大规模数据中有效地寻找最优解。

9. 描述性建模:侧重于描述性分析,即对已知数据的理解和总结。

10. 预测性建模(分类):通过学习历史数据,构建分类模型以预测未来的事件或类别。

11. 预测性建模(回归):探讨连续变量的预测,如预测数值型数据的趋势。

12. 数据组织与数据库:介绍了数据库设计和管理,强调数据存储对挖掘性能的影响。

13. 发现模式与规则:讲解频繁模式挖掘和关联规则发现的方法,揭示数据间的潜在关系。

14. 内容检索:讨论如何通过文本挖掘技术实现基于内容的信息检索。

附录部分深入解析随机变量的概念,为理解数据挖掘中的概率和统计理论提供补充。全书还包括参考文献、索引、图例列表、表格列表以及示例列表,以帮助读者全面掌握内容。

《数据挖掘原理》不仅适合专业人士,也适合对数据科学有兴趣的读者,通过阅读本书,读者可以建立起扎实的数据挖掘理论基础,并学会将这些技术应用于实际问题中。

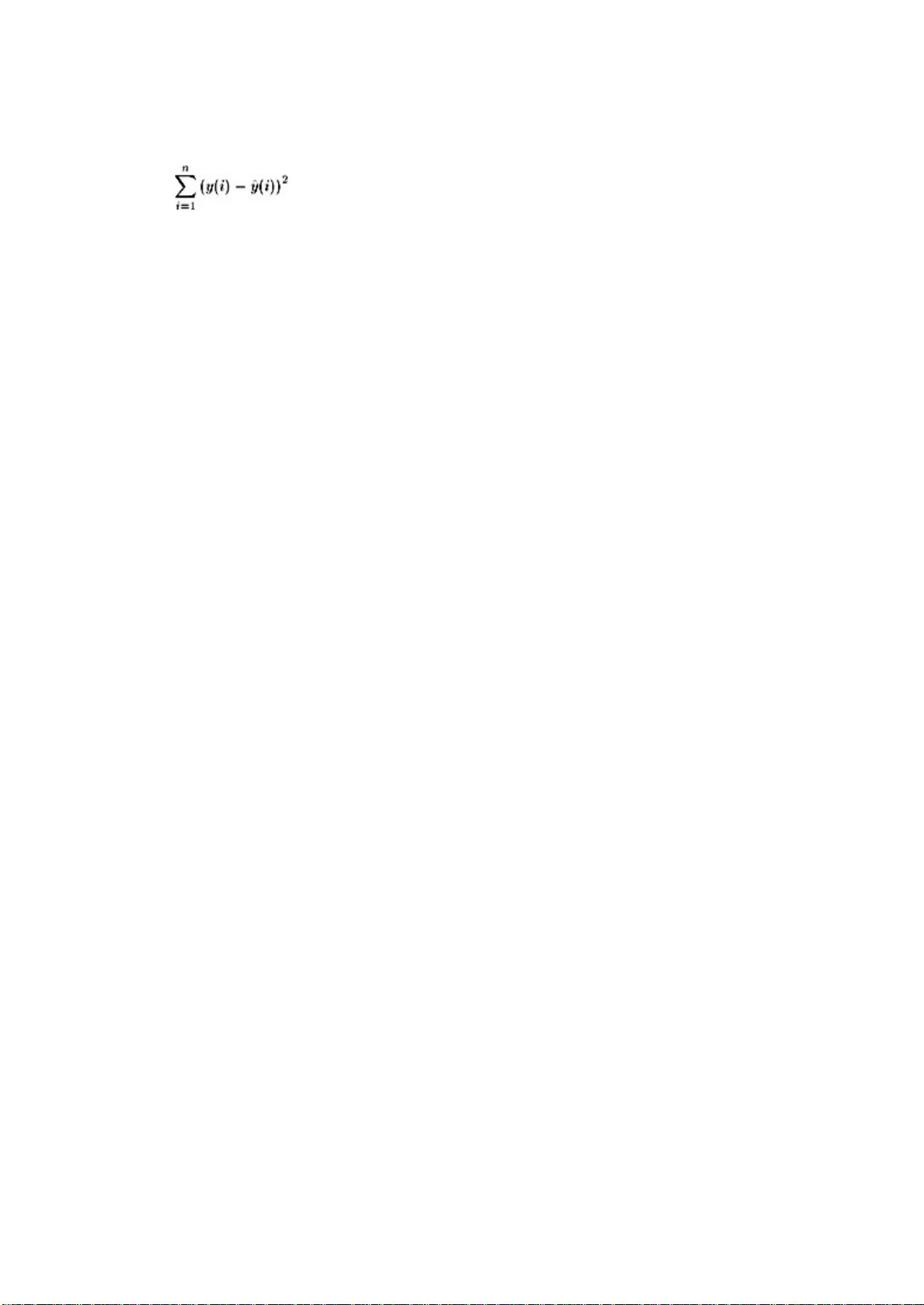

Several score functions are widely used for this purpose; these include likelihood, sum of

squared errors, and misclassification rate (the latter is used in supervised classification

problems). For example, the well-known squared error score function is defined as

(1.1)

where we are predicting n "target" values y(i), 1 = i = n, and our predictions for each are

denoted as y(i) (typically this is a function of some other "input" variable values for

prediction and the parameters of the model).

Any views we may have on the theoretical appropriateness of different criteria must be

moderated by the practicality of applying them. The model that we consider to be most

likely to have given rise to the data may be the ideal one, but if estimating its parameters

will take months of computer time it is of little value. Likewise, a score function that is

very susceptible to slight changes in the data may not be very useful (its utility will

depend on the objectives of the study). For example if altering the values of a few

extreme cases leads to a dramatic change in the estimates of some model parameters

caution is warranted; a data set is usually chosen from a number of possible data sets,

and it may be that in other data sets the value of these extreme cases would have

differed. Problems like this can be avoided by using robust methods that are less

sensitive to these extreme points.

1.5.2 Optimization and Search Methods

The score function is a measure of how well aspects of the data match proposed models

or patterns. Usually, these models or patterns are described in terms of a structure,

sometimes with unknown parameter values. The goal of optimization and search is to

determine the structure and the parameter values that achieve a minimum (or maximum,

depending on the context) value of the score function. The task of finding the "best"

values of parameters in models is typically cast as an optimization (or estimation)

problem. The task of finding interesting patterns (such as rules) from a large family of

potential patterns is typically cast as a combinatorial search problem, and is often

accomplished using heuristic search techniques. In linear regression, a prediction rule is

usually found by minimizing a least squares score function (the sum of squared errors

between the prediction from a model and the observed values of the predicted variable).

Such a score function is amenable to mathematical manipulation, and the model that

minimizes it can be found algebraically. In contrast, a score function such as

misclassification rate in supervised classification is difficult to minimize analytically. For

example, since it is intrinsically discontinuous the powerful tool of differential calculus

cannot be brought to bear.

Of course, while we can produce score functions to produce a good match between a

model or pattern and the data, in many cases this is not really the objective. As noted

above, we are often aiming to generalize to new data which might arise (new customers,

new chemicals, etc.) and having too close a match to the data in the database may

prevent one from predicting new cases accurately. We discuss this point later in the

chapter.

1.5.3 Data Management Strategies

The final component in any data mining algorithm is the data management strategy: the

ways in which the data are stored, indexed, and accessed. Most well-known dat a

analysis algorithms in statistics and machine learning have been developed under the

assumption that all individual data points can be accessed quickly and efficiently in

random-access memory (RAM). While main memory technology has improved rapidly,

there have been equally rapid improvements in secondary (disk) and tertiary (tape)

storage technologies, to the extent that many massive data sets still reside largely on

disk or tape and will not fit in available RAM. Thus, there will probably be a price to pay

for accessing massive data sets, since not all data points can be simultaneously close to

the main processor.

Many data analysis algorithms have been developed without including any explicit

specification of a data management strategy. While this has worked in the past on

relatively small data sets, many algorithms (such as classification and regression tree

algorithms) scale very poorly when the "traditional version" is applied directly to data that

reside mainly in secondary storage.

The field of databases is concerned with the development of indexing methods, data

structures, and query algorithms for efficient and reliable data retrieval. Many of these

techniques have been developed to support relatively simple counting (aggregating)

operations on large data sets for reporting purposes. However, in recent years,

development has begun on techniques that support the "primitive" data access

operations necessary to implement efficient versions of data mining algorithms (for

example, tree-structured indexing systems used to retrieve the neighbors of a point in

multiple dimensions).

1.6 The Interacting Roles of Statistics and Data Mining

Statistical techniques alone may not be sufficient to address some of the more

challenging issues in data mining, especially those arising from massive data sets.

Nonetheless, statistics plays a very important role in data mining: it is a necessary

component in any data mining enterprise. In this section we discuss some of the

interplay between traditional statistics and data mining.

With large data sets (and particularly with very large data sets) we may simply not know

even straightforward facts about the data. Simple eye-balling of the data is not an option.

This means that sophisticated search and examination methods may be required to

illuminate features which would be readily apparent in small data sets. Moreover, as we

commented above, often the object of data mining is to make some inferences beyond

the available database. For example, in a database of astronomical objects, we may

want to make a statement that "all objects like this one behave thus," perhaps with an

attached qualifying probability. Likewise, we may determine that particular regions of a

country exhibit certain patterns of telephone calls. Again, it is probably not the calls in the

database about which we want to make a statement. Rather it will probably be the

pattern of future calls which we want to be able to predict. The database provides the set

of objects which will be used to construct the model or search for a pattern, but the

ultimate objective will not generally be to describe those data. In most cases the

objective is to describe the general process by which the data arose, and other data sets

which could have arisen by the same process. All of this means that it is necessary to

avoid models or patterns which match the available database too closely: given that the

available data set is merely one set from the sets of data which could have arisen, one

does not want to model its idiosyncrasies too closely. Put another way, it is necessary to

avoid overfitting the given data set; instead one wants to find models or patterns which

generalize well to potential future data. In selecting a score function for model or pattern

selection we need to take account of this. We will discuss these issues in more detail in

chapter 7 and chapters 9 through 11. While we have described them in a data mining

context, they are fundamental to statistics; indeed, some would take them as the defining

characteristic of statistics as a discipline.

Since statistical ideas and methods are so fundamental to data mining, it is legitimate to

ask whether there are really any differences between the two enterprises. Is data mining

merely exploratory statistics, albeit for potentially huge data sets, or is there more to data

mining than exploratory data analysis? The answer is yes—there is more to data mining.

The most fundamental difference between classical statistical applications and data

mining is the size of the data set. To a conventional statistician, a "large" data set may

contain a few hundred or a thousand data points. To someone concerned with data

mining, however, many millions or even billions of data points is not unexpected—

gigabyte and even terabyte databases are by no means uncommon. Such large

databases occur in all walks of life. For instance the American retailer Wal-Mart makes

over 20 million transactions daily (Babcock, 1994), and constructed an 11 terabyte

database of customer transactions in 1998 (Piatetsky-Shapiro, 1999). AT&T has 100

million customers and carries on the order of 300 million calls a day on its long distance

network. Characteristics of each call are used to update a database of models for every

telephone number in the United States (Cortes and Pregibon, 1998). Harrison (1993)

reports that Mobil Oil aims to store over 100 terabytes of data on oil exploration. Fayyad,

Djorgovski, and Weir (1996) describe the Digital Palomar Observatory Sky Survey as

involving three terabytes of data. The ongoing Sloan Digital Sky Survey will create a raw

observational data set of 40 terabytes, eventually to be reduced to a mere 400 gigabyte

catalog containing 3 × 10

8

individual sky objects (Szalay et al., 1999). The NASA Earth

Observing System is projected to generate multiple gigabytes of raw data per hour

(Fayyad, Piatetsky-Shapiro, and Smyth, 1996). And the human genome project to

complete sequencing of the entire human genome will likely generate a data set of more

than 3.3 × 10

9

nucleotides in the process (Salzberg, 1999). With data sets of this size

come problems beyond those traditionally considered by statisticians.

Massive data sets can be tackled by sampling (if the aim is modeling, but not necessarily

if the aim is pattern detection) or by adaptive methods, or by summarizing the records in

terms of sufficient statistics. For example, in standard least squares regression

problems, we can replace the large numbers of scores on each variable by their sums,

sums of squared values, and sums of products, summed over the records—these are

sufficient for regression co-efficients to be calculated no matter how many records there

are. It is also important to take account of the ways in which algorithms scale, in terms of

computation time, as the number of records or variables increases. For example,

exhaustive search through all subsets of variables to find the "best" subset (according to

some score function), will be feasible only up to a point. With p variables there are 2

p

- 1

possible subsets of variables to consider. Efficient search methods, mentioned in the

previous section, are crucial in pushing back the boundaries here.

Further difficulties arise when there are many variables. One that is important in some

contexts is the curse of dimensionality; the exponential rate of growth of the number of

unit cells in a space as the number of variables increases. Consider, for example, a

single binary variable. To obtain reasonably accurate estimates of parameters within

both of its cells we might wish to have 10 observations per cell; 20 in all. With two binary

variables (and four cells) this becomes 40 observations. With 10 binary variables it

becomes 10240 observations, and with 20 variables it becomes 10485760. The curse of

dimensionality manifests itself in the difficulty of finding accurate estimates of probability

densities in high dimensional spaces without astronomically large databases (so large, in

fact, that the gigabytes available in data mining applications pale into insignificance). In

high dimensional spaces, "nearest" points may be a long way away. These are not

simply difficulties of manipulating the many variables involved, but more fundamental

problems of what can actually be done. In such situations it becomes necessary to

impose additional restrictions through one's prior choice of model (for example, by

assuming linear models).

Various problems arise from the difficulties of accessing very large data sets. The

statistician's conventional viewpoint of a "flat" data file, in which rows represent objects

and columns represent variables, may bear no resemblance to the way the data are

stored (as in the text and Web transaction data sets described earlier). In many cases

the data are distributed, and stored on many machines. Obtaining a random sample from

data that are split up in this way is not a trivial matter. How to define the sampling frame

and how long it takes to access data become important issues.

Worse still, often the data set is constantly evolving—as with, for example, records of

telephone calls or electricity usage. Distributed or evolving data can multiply the size of a

data set many-fold as well as changing the nature of the problems requiring solution.

While the size of a data set may lead to difficulties, so also may other properties not

often found in standard statistical applications. We have already remarked that data

mining is typically a secondary process of data analysis; that is, the data were originally

collected for some other purpose. In contrast, much statistical work is concerned with

primary analysis: the data are collected with particular questions in mind, and then are

analyzed to answer those questions. Indeed, statistics includes subdisciplines of

experimental design and survey design—entire domains of expertise concerned with the

best ways to collect data in order to answer specific questions. When data are used to

address problems beyond those for which they were originally collected, they may not be

ideally suited to these problems. Sometimes the data sets are entire populations (e.g., of

chemicals in a particular class of chemicals) and therefore the standard statistical notion

of inference has no relevance. Even when they are not entire populations, they are often

convenience or opportunity samples, rather than random samples. (For instance,the

records in question may have been collected because they were the most easily

measured, or covered a particular period of time.)

In addition to problems arising from the way the data have been collected, we expect

other distortions to occur in large data sets—including missing values, contamination,

and corrupted data points. It is a rare data set that does not have such problems. Indeed,

some elaborate modeling methods include, as part of the model, a component describing

the mechanism by which missing data or other distortions arise. Alternatively, an

estimation method such as the EM algorithm (described in chapter 8) or an imputation

method that aims to generate artificial data with the same general distributional

properties as the missing data might be used. Of course, all of these problems also arise

in standard statistical applications (though perhaps to a lesser degree with small,

deliberately collected data sets) but basic statistical texts tend to gloss over them.

In summary, while data mining does overlap considerably with the standard exploratory

data analysis techniques of statistics, it also runs into new problems, many of which are

consequences of size and the non traditional nature of the data sets involved.

1.7 Data Mining: Dredging, Snooping, and Fishing

An introductory chapter on data mining would not be complete without reference to the

historical use of terms such as "data mining," "dredging," "snooping," and "fishing." In the

1960s, as computers were increasingly applied to data analysis problems, it was noted

that if you searched long enough, you could always find some model to fit a data set

arbitrarily well. There are two factors contributing to this situation: the complexity of the

model and the size of the set of possible models.

Clearly, if the class of models we adopt is very flexible (relative to the size of the

available data set), then we will probably be able to fit the available data arbitrarily well.

However, as we remarked above, the aim may be to generalize beyond the available

data; a model that fits well may not be ideal for this purpose. Moreover, even if the aim is

to fit the data (for example, when we wish to produce the most accurate summary of data

describing a complete population) it is generally preferable to do this with a simple

model. To take an extreme, a model of complexity equivalent to that of the raw data

would certainly fit it perfectly, but would hardly be of interest or value.

Even with a relatively simple model structure, if we consider enough different models

with this basic structure, we can eventually expect to find a good fit. For example,

consider predicting a response variable, Y from a predictor variable X which is chosen

from a very large set of possible variables, X

1

, ..., X

p

, none of which are related to Y. By

virtue of random variation in the data generating process, although there are no

underlying relationships between Y and any of the X variables, there will appear to be

relationships in the data at hand. The search process will then find the X variable that

appears to have the strongest relationship to Y. By this means, as a consequence of the

large search space, an apparent pattern is found where none really exists. The situation

is particularly bad when working with a small sample size n and a large number p of

potential X variables. Familiar examples of this sort of problem include the spurious

correlations which are popularized in the media, such as the "discovery" that over the

past 30 years when the winner of the Super Bowl championship in American football is

from a particular league, a leading stock market index historically goes up in the

following months. Similar examples are plentiful in areas such as economics and the

social sciences, fields in which data are often relatively sparse but models and theories

to fit to the data are relatively plentiful. For instance, in economic time-series prediction,

there may be a relatively short time-span of historical data available in conjunction with a

large number of economic indicators (potential predictor variables). One particularly

humorous example of this type of prediction was provided by Leinweber (personal

communication) who achieved almost perfect prediction of annual values of the well-

known Standard and Poor 500 financial index as a function of annual values from

previous years for butter production, cheese production, and sheep populations in

Bangladesh and the United States.

The danger of this sort of "discovery" is well known to statisticians, who have in the past

labelled such extensive searches "data mining" or "data dredging"—causing these terms

to acquire derogatory connotations. The problem is less serious when the data sets are

large, though dangers remain even then, if the space of potential structures examined is

large enough. These risks are more pronounced in pattern detection than model fitting,

since patterns, by definition, involve relatively few cases (i.e., small sample sizes): if we

examine a billion data points, in search of an unusual configuration of just 50 points, we

have a good chance of detecting this configuration.

There are no easy technical solutions to this problem, though various strategies have

been developed, including methods that split the data into subsamples so that models

can be built and patterns can be detected using one part, and then their validity can be

tested on another part. We say more about such methods in later chapters. The final

answer, however, is to regard data mining not as a simple technical exercise, divorced

from the meaning of the data. Any potential model or pattern should be presented to the

data owner, who can then assess its interest, value, usefulness, and, perhaps above all,

its potential reality in terms of what else is known about the data.

1.8 Summary

Thanks to advances in computers and data capture technology, huge data sets—

containing gigabytes or even terabytes of data—have been and are being collected.

These mountains of data contain potentially valuable information. Th e trick is to extract

that valuable information from the surrounding mass of uninteresting numbers, so that

the data owners can capitalize on it. Data mining is a new discipline that seeks to do just

that: by sifting through these databases, summarizing them, and finding patterns.

Data mining should not be seen as a simple one-time exercise. Huge data collections

may be analyzed and examined in an unlimited number of ways. As time progresses, so

new kinds of structures and patterns may attract interest, and may be worth seeking in

the data.

Data mining has, for good reason, recently attracted a lot of attention: it is a new

technology, tackling new problems, with great potential for valuable commercial and

scientific discoveries. However, we should not expect it to provide answers to all

questions. Like all discovery processes, successful data mining has an element of

serendipity. While data mining provides useful tools, that does not mean that it will

inevitably lead to important, interesting, or valuable results. We must beware of over-

exaggerating the likely outcomes. But the potential is there.

1.9 Further Reading

Brief, general introductions to data mining are given in Fayyad, Piatetsky-Shapiro, and

Smyth (1996), Glymour et al. (1997), and a special issue of the Communications of the

ACM, Vol. 39, No. 11. Overviews of certain aspects of predictive data mining are given

by Adriaans and Zantige (1996) and Weiss and Indurkhya (1998). Witten and Franke

(2000) provide a very readable, applications-oriented account of data mining from a

machine learning (artificial intelligence) perspective and Han and Kamber (2000) is an

accessible textbook written from a database perspective data mining. Th ere are many

texts on data mining aimed at business users, notably Berry and Linoff (1997, 2000) that

contain extensive practical advice on potential business applications of data mining.

Leamer (1978) provides a general discussion of the dangers of data dredging, and Lovell

(1983) provides a general review of the topic. From a statistical perspective. Hendry

(1995, section 15.1) provides an econometrician's view of data mining. Hand et al.

(2000) and Smyth (2000) present comparative discussions of data mining and statistics.

剩余321页未读,继续阅读

点击了解资源详情

点击了解资源详情

点击了解资源详情

2009-06-01 上传

2018-03-26 上传

2008-08-24 上传

2010-04-29 上传

youyousky20

- 粉丝: 0

- 资源: 5

上传资源 快速赚钱

我的内容管理

展开

我的内容管理

展开

我的资源

快来上传第一个资源

我的资源

快来上传第一个资源

我的收益 登录查看自己的收益

我的收益 登录查看自己的收益 我的积分

登录查看自己的积分

我的积分

登录查看自己的积分

我的C币

登录后查看C币余额

我的C币

登录后查看C币余额

我的收藏

我的收藏  我的下载

我的下载  下载帮助

下载帮助

最新资源

- spring-music

- 微信/支付宝 H5支付接口(C#版demo)

- kakaopay-assignment-1

- cidr-range:获取给定CIDR范围的IP地址数组

- CSC-289-0B01-CAPSTONE:编程Capstone项目

- JavaLearnings:这是托管示例程序的教程,涵盖 Java 中的高级主题

- Cluster Orchestrator:协调器/集群部署工具-开源

- exchange-rate:获取货币汇率

- awesome-list-vue-angola:uma listaincreíveldo ecossistema Vue

- 计算机软件-商业源码-ps.zip

- joseelias:压缩器C#

- fib-app:快速构建Restful API的开发框架

- simple_chat_rest:它是一个简单的聊天套接字服务

- 基于vue-element-admin的后台权限验证系统

- kakadu::rocket:用于对远程站点进行本地测试更改的模块(脚本调试,改编等)

- 应用服务器高可用部署方案.zip

安全验证

文档复制为VIP权益,开通VIP直接复制

信息提交成功

信息提交成功