YOLOv3: An Incremental Improvement

Joseph Redmon, Ali Farhadi

University of Washington

Abstract

We present some updates to YOLO! We made a bunch

of little design changes to make it better. We also trained

this new network that’s pretty swell. It’s a little bigger than

last time but more accurate. It’s still fast though, don’t

worry. At 320 × 320 YOLOv3 runs in 22 ms at 28.2 mAP,

as accurate as SSD but three times faster. When we look

at the old .5 IOU mAP detection metric YOLOv3 is quite

good. It achieves 57.9 AP

50

in 51 ms on a Titan X, com-

pared to 57.5 AP

50

in 198 ms by RetinaNet, similar perfor-

mance but 3.8× faster. As always, all the code is online at

https://pjreddie.com/yolo/.

1. Introduction

Sometimes you just kinda phone it in for a year, you

know? I didn’t do a whole lot of research this year. Spent

a lot of time on Twitter. Played around with GANs a little.

I had a little momentum left over from last year [12] [1]; I

managed to make some improvements to YOLO. But, hon-

estly, nothing like super interesting, just a bunch of small

changes that make it better. I also helped out with other

people’s research a little.

Actually, that’s what brings us here today. We have

a camera-ready deadline [4] and we need to cite some of

the random updates I made to YOLO but we don’t have a

source. So get ready for a TECH REPORT!

The great thing about tech reports is that they don’t need

intros, y’all know why we’re here. So the end of this intro-

duction will signpost for the rest of the paper. First we’ll tell

you what the deal is with YOLOv3. Then we’ll tell you how

we do. We’ll also tell you about some things we tried that

didn’t work. Finally we’ll contemplate what this all means.

2. The Deal

So here’s the deal with YOLOv3: We mostly took good

ideas from other people. We also trained a new classifier

network that’s better than the other ones. We’ll just take

you through the whole system from scratch so you can un-

derstand it all.

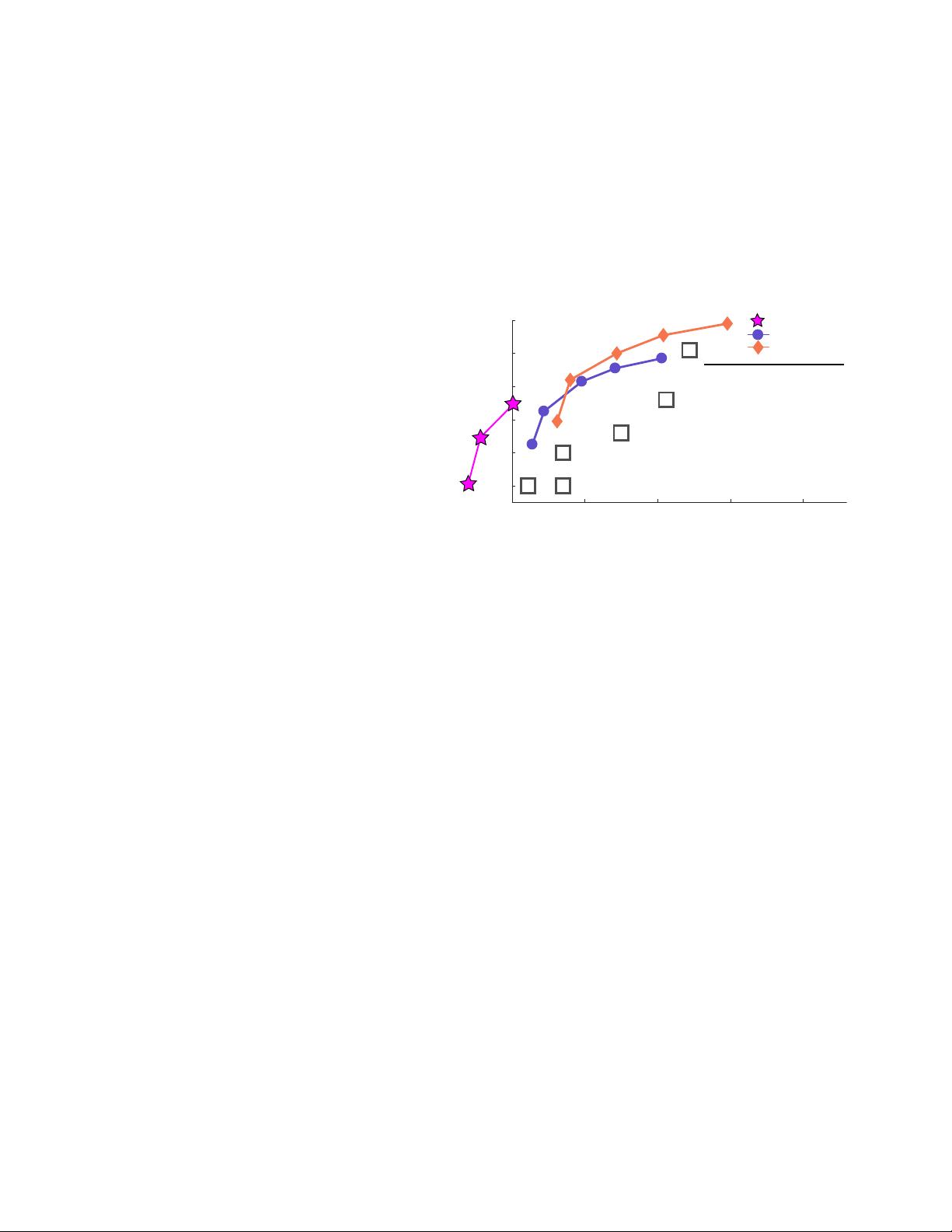

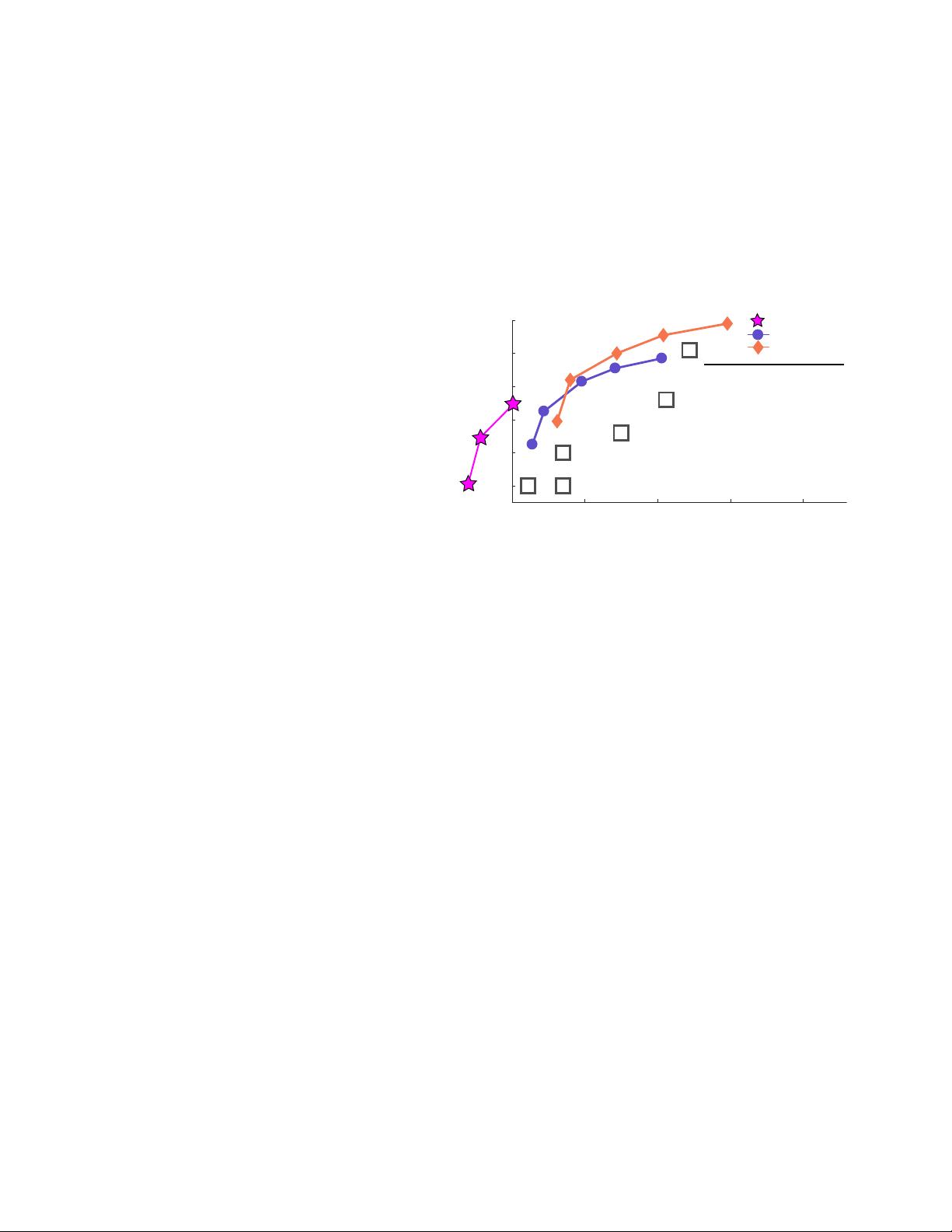

B C

D

E

F

G

RetinaNet-50

RetinaNet-101

YOLOv3

Method

[B] SSD321

[C] DSSD321

[D] R-FCN

[E] SSD513

[F] DSSD513

[G] FPN FRCN

RetinaNet-50-500

RetinaNet-101-500

RetinaNet-101-800

YOLOv3-320

YOLOv3-416

YOLOv3-608

mAP

28.0

28.0

29.9

31.2

33.2

36.2

32.5

34.4

37.8

28.2

31.0

33.0

time

61

85

85

125

156

172

73

90

198

22

29

51

Figure 1. We adapt this figure from the Focal Loss paper [9].

YOLOv3 runs significantly faster than other detection methods

with comparable performance. Times from either an M40 or Titan

X, they are basically the same GPU.

2.1. Bounding Box Prediction

Following YOLO9000 our system predicts bounding

boxes using dimension clusters as anchor boxes [15]. The

network predicts 4 coordinates for each bounding box, t

x

,

t

y

, t

w

, t

h

. If the cell is offset from the top left corner of the

image by (c

x

, c

y

) and the bounding box prior has width and

height p

w

, p

h

, then the predictions correspond to:

b

x

= σ(t

x

) + c

x

b

y

= σ(t

y

) + c

y

b

w

= p

w

e

t

w

b

h

= p

h

e

t

h

During training we use sum of squared error loss. If the

ground truth for some coordinate prediction is

ˆ

t

*

our gra-

dient is the ground truth value (computed from the ground

truth box) minus our prediction:

ˆ

t

*

− t

*

. This ground truth

value can be easily computed by inverting the equations

above.

YOLOv3 predicts an objectness score for each bounding

box using logistic regression. This should be 1 if the bound-

ing box prior overlaps a ground truth object by more than

any other bounding box prior. If the bounding box prior

1