AUGMENTING REMOTE MULTIMODAL PERSON VERIFICATION BY EMBEDDING VOICE CHARACTERISTICS INTO FACE IMAGES

Su Wang^a, Roland Hu^a, Huimin Yu^a, Xia Zheng^b, R. I. Damper^c

^a Department of Information Science & Electronic Engineering, Zhejiang University, 310027, China

^b Department of Culture Heritage & Museology, Zhejiang University, 310027, China

^c Department of Electronics & Computer Science, University of Southampton, SO17 1BJ, UK

ABSTRACT

This paper presents a biometric watermarking algorithm that augments remote multimodal recognition by embedding voice characteristics into face images. We embed both a fragile watermark for tamper detection and a robust watermark that represents the Gaussian mixture model (GMM) parameters extracted from voice. We show that the proposed scheme can detect tampering and is robust to various watermarking attacks. Person verification experiments on the XM2VTS database indicate the validity of combining face and voice classifiers.

Index Terms— Face recognition, speaker recognition,

digital watermarking, quantization index modulation

1. INTRODUCTION

With the rapid progress of computer technology, biometric recognition systems have become increasingly popular compared with traditional approaches such as identification cards and passwords. However, biometric information itself is not secure. Ratha and Connell [1] summarized eight types of security attacks on a biometric system. These attacks can alter the original data at any stage of recognition. Hence, resisting these attacks and protecting the security of the data has become an important task.

Watermarking provides another means to increase the se-

curity of biometric data. Research work in this area can be

divided into three approaches [2]. The first is to embed bio-

metric template/sample data into host data (referred as ‘tem-

plate watermarking’ in [3]). Applications associated with this

category include convert transmission of biometric data in in-

secure channels. The second approach is to embed some data

into biometric template/sample data (referred as ‘sample wa-

termarking’ in [3]). Applications in this category can be fur-

ther distinguished by embedding robust watermarks into bio-

metric data to protect ownership; or embedding fragile wa-

termarks to detect tampering of the biometric data. Finally,

the above two approaches can be combined to form a multi-

biometric recognition system, by embedding biometric tem-

plate/sample data into another biometric data. This approach

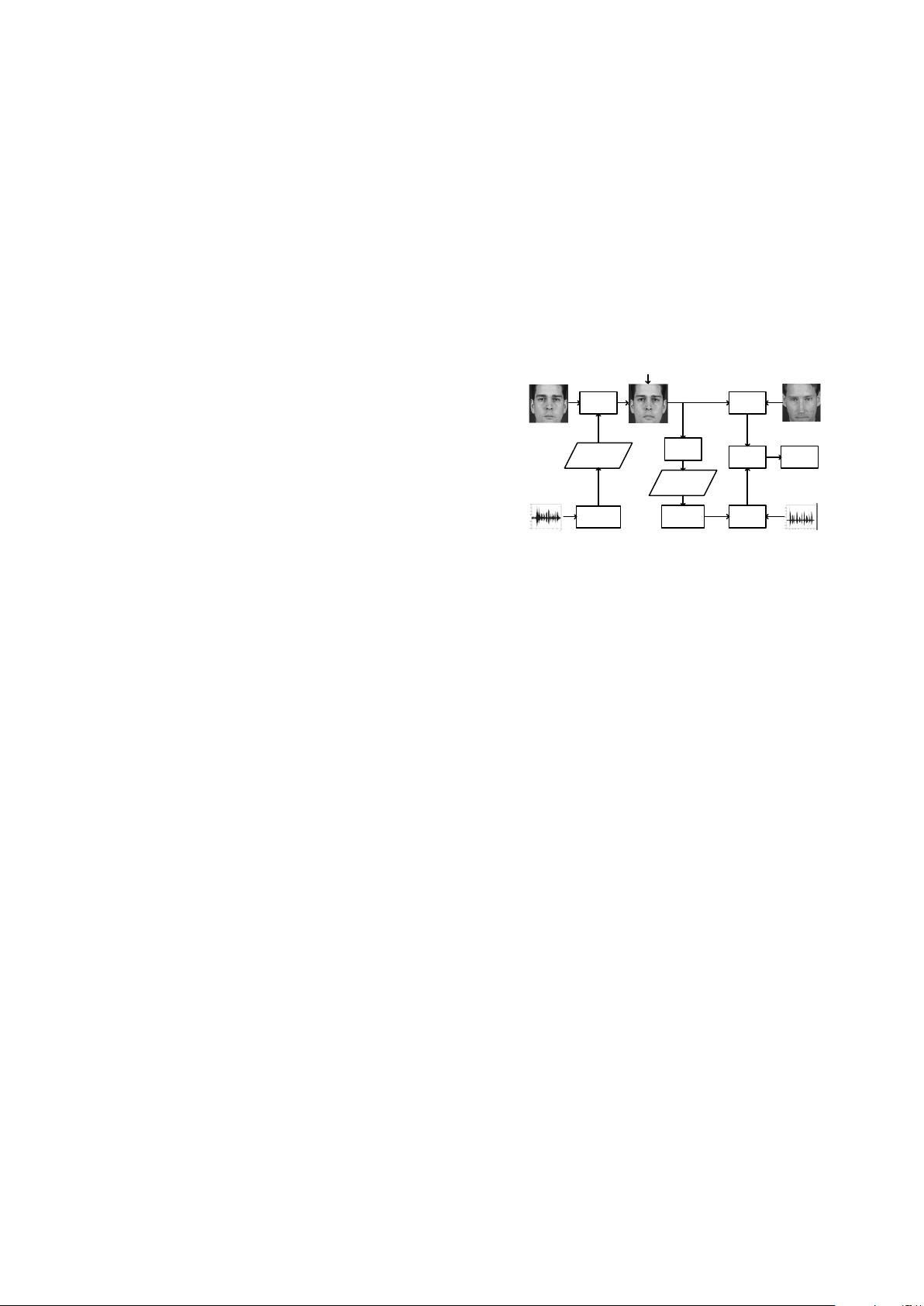

Watermark

Embedding

Extracting

Voice Features

Encoding &

Generate

Watermarks

Host Voice

Face Image

Watermarked Image

Face

Recognition

Watermark

Extraction

Voice

Recognition

Final

Evaluation

Decoding &

Rebuild

Watermarks

Rebuild Voice

Features

Recognition

Result

Database Image

Database Voice

Noise or Resize Attacks

Fig. 1. The flowchart of the watermark embedding and ex-

traction scheme.

can take advantages of both template watermarking and sam-

ple watermarking. In addition, recognition performance can

also be improved by multiple biometrics compared with only

one biometric [4, 5].

In this paper, we propose a watermarking method that builds a multimodal system by embedding voice features into face images. We confine our application to remote multimodal person verification. We propose a framework for transferring multi-biometrics over fixed or remote networks, in which a face image is used as a container in which voice features are embedded before they are sent to the server for verification.
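To make the idea of using an image as a container more concrete, the following is a minimal sketch of scalar quantization index modulation (QIM), the technique named in the index terms. It is an illustration under stated assumptions, not the embedding algorithm of this paper: the function names `qim_embed` and `qim_extract`, the step size of 8, and the choice of one bit per host value are all hypothetical, and the host values stand in for whatever pixel or transform coefficients an actual scheme would select.

```python
def qim_embed(host_values, bits, step=8.0):
    """Embed one bit per host value using scalar QIM (illustrative only).

    Bit 0 snaps the value to the lattice {k * step};
    bit 1 snaps it to the shifted lattice {k * step + step / 2}.
    """
    marked = []
    for value, bit in zip(host_values, bits):
        offset = bit * step / 2.0
        marked.append(step * round((value - offset) / step) + offset)
    return marked


def qim_extract(marked_values, step=8.0):
    """Recover bits by choosing the nearer of the two lattices."""
    bits = []
    for value in marked_values:
        dist0 = abs(value - step * round(value / step))
        shifted = step * round((value - step / 2.0) / step) + step / 2.0
        dist1 = abs(value - shifted)
        bits.append(0 if dist0 <= dist1 else 1)
    return bits


# Hypothetical host coefficients and payload bits (assumptions for the demo).
host = [52.0, 117.0, 200.0, 33.0]
payload = [1, 0, 1, 1]

marked = qim_embed(host, payload)
recovered = qim_extract(marked)

# Perturbations smaller than step / 4 leave the decoded bits unchanged.
noisy = [m + n for m, n in zip(marked, [1.5, -1.9, 0.7, -1.0])]
recovered_noisy = qim_extract(noisy)
```

In this sketch the step size controls the trade-off between distortion and robustness: each host value moves by at most half a step, while decoding tolerates perturbations of up to a quarter of a step. A larger step would resist stronger noise at the cost of more visible changes to the image.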

The flowchart of the system is given in Fig. 1. At the sensor side, voice and face images are captured by the audio and visual sensors, respectively. Voice characteristics are then extracted as Mel-frequency cepstral coefficients (MFCCs) and modeled by Gaussian mixture models (GMMs) [6]. The GMM parameters are embedded into the face images using a watermarking algorithm based on quantization index modulation (QIM) [7]. Face images embedded with voice features are transmitted to the server for verification. To prevent malicious tampering with facial features during transmission, a fragile watermark is also embedded into salient facial regions (e.g., eyes, nose and mouth). The server first checks whether the fragile watermark is retained. If not, it stops authentication and informs the user that his/her biometric data has been tampered with during transmission. If the fragile watermark is retained, then voice features are extracted from the