tion [15, 20], bug detection [18], and vulnerability detection

[25].

Comparison method. There are two kinds of compar-

ison methods: vector comparison and approximate/exact

matching. The vector comparison method first converts the

representation of a vulnerability and the representation of a

target program into vectors, and then compares these vec-

tors for detecting vulnerabilities [12, 25, 29, 30]. The ap-

proximate/exact matching method searches the representa-

tion of a vulnerability in the code representation of a target

program via containment [13, 17, 19], substring matching

[16], full subgraph isomorphism matching [18], or approxi-

mate γ-isomorphism matching [20].

Finally, it is worth mentioning that exploit signatures are

sometimes called vulnerability signatures, although they are

actually used to recognize exploits [4, 6]. These signatures

characterize the inputs that can be used to exploit vulnera-

bilities [6]. Exploit signatures are orthogonal to the vulner-

ability signatures we study in the present paper.

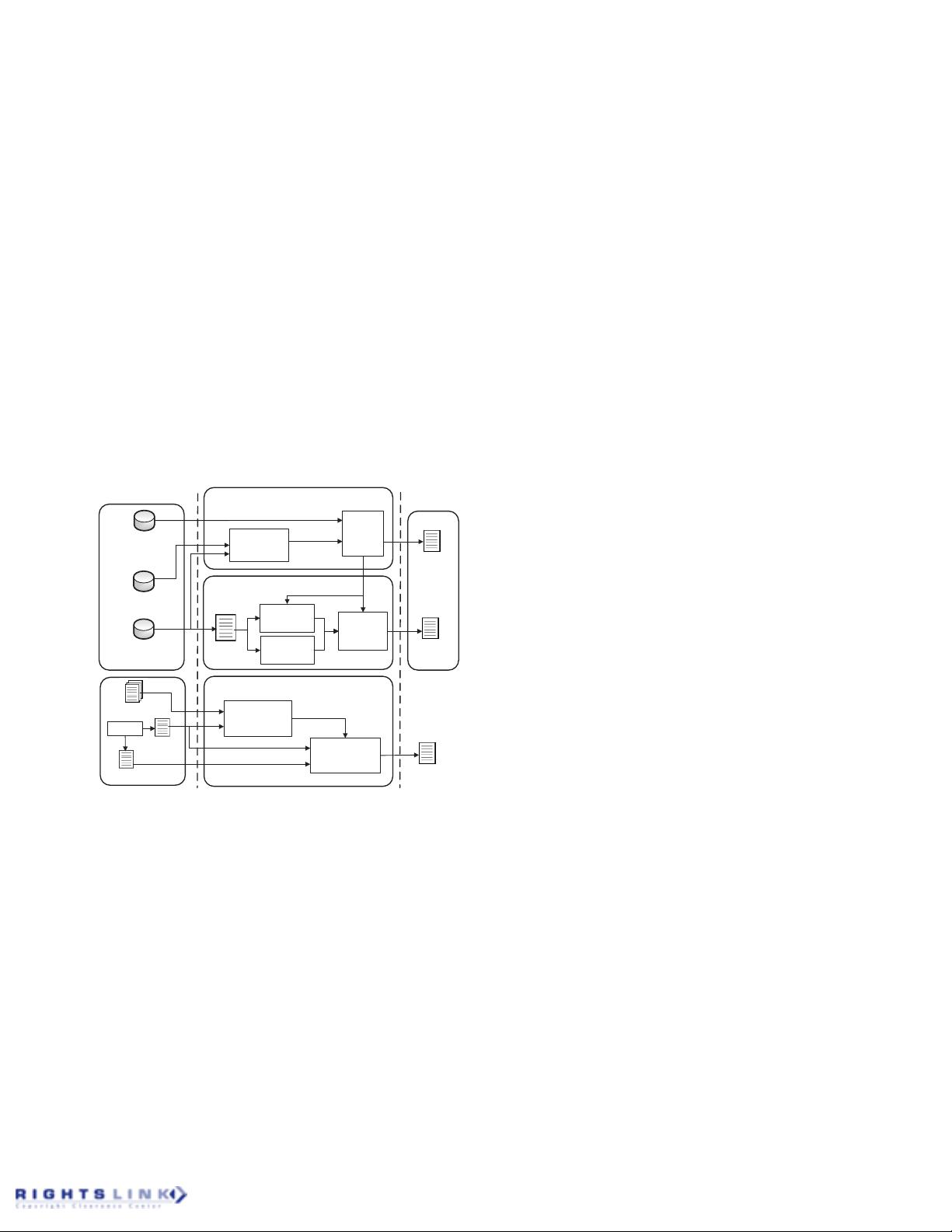

3. DESIGN

3.1 Overview

Vulnerability

Patch Database

(VPD)

Target programs

Vulnerability

diff hunk feature

generator

Code-similarity algorithm selection

Algorithm

selection

engine

Vulnerability signature generation

Patched/

unpatched diff

code extraction

Unpatched

code fragment

extraction

Diff

Vulnerability

signature

generator

Target program

signature generator

Vulnerability

detection engine

Vulnerability

locations

Vulnerability detection

Input

NVD

CVE-to-

algorithm

mapping

Vulnerability

signatures

CVE-IDs

Vulnerability

signatures

CVE-to-

algorithm

mapping

Output

Learning phase

Detection phase

Vulnerability Code

Instance Database

(VCID)

Figure 1: Overview of VulPecker: The learning

phase selects code-similarity algorithm(s) that is ef-

fective for a vulnerability. The select algorithms in

turn guide the generation of vulnerability signatures

and the detection of vulnerabilities.

Figure 1 gives an overview of VulPecker. It has two phases:

a learning phase and a detection phase. Before we elaborate

on the modules described in Figure 1, let us discuss two

high-level issues. First, which code-similarity algorithm(s) is

effective for detecting which vulnerability? In answering this

question, we analyze a set of candidate code-similarity algo-

rithms by taking advantage of features describing vulnerabil-

ities and patches. This analysis leads to a CVE-to-algorithm

mapping, which maps a CVE-ID to the select code-similarity

algorithm(s) that is effective for detecting the vulnerability.

Second, how should we generate and use vulnerability sig-

natures? Recall that a code-similarity algorithm can be

characterized by three attributes: code-fragment level, code

representation, and comparison method. We observe that

code-fragment level and code representation offer guidance

for generating signatures of vulnerabilities as well as signa-

tures of target programs. These signatures are then com-

pared with each other for determining whether or not a tar-

get program has a vulnerability. If a vulnerability is found,

the location of the vulnerable code fragment(s) is reported.

3.2 Defining vulnerability and code-reuse fea-

tures

Since our focus is to use code similarity analysis to detect

vulnerabilities, we need to define features to characterize

vulnerabilities and code reuses.

Features for describing vulnerability diff hunks.

Given a vulnerability and its patch, the vulnerability can be

characterized by the vulnerability diff,whichiscomposed

of one or multiple diff hunks.Eachdiffhunkcontainsa

sequence of lines of code, where each line is prefixed by a

“+” symbol (addition), “-” symbol (deletion), or nothing. In

order to define features to describe a vulnerability, it is suf-

ficient to define features that describe these diff hunks. For

adiffhunk,wedefinetwosetsoffeatures: basic features and

patch features. Table 1 summarizes these features. Basic fea-

tures are the Type 1 features described in Table 1, including

the unique CVE-ID, the Common Weakness Enumeration

Identifier (CWE-ID) that represents the vulnerability type,

product vendor, product affected, and vulnerability severity.

Patch features are the Type 2–Type 6 features described in

Table 1 and describe the code changes from the unpatched

piece of code to the patched one. The five types of patch

features are elaborated as follows.

• Non-substantive features: These features describe chan-

ges in whitespace, format or comment which have no

impact on useful code.

• Component features: These features describe the chan-

ges of components in statements such as varia bles, op-

erators, constants, and functions.

• Expression features: These features describe the chan-

ges of expressions in statements such as assignment

expression, if condition, and for condition.

• Statement features: These features describe the chan-

ges of statements involving addition, deletion, and mo-

vement.

• Function-level features: These features describe the

changes of functions or changes outside a function,

such as macros and global variable definitions.

Features for describing code reuses. The term “code

reuse”often means code cloning [5, 26], including exact clones,

renamed clones, near miss clones, and semantic clones. For

vulnerability detection, we are given a piece of code con-

taining a vulnerability and a target piece of code that may

or may not contain the same vulnerability, where the latter

may or may not be an exact clone of the former. Note that

we have already defined five types of patch features for de-

scribing vulnerabilities, namely Type 2–Type 6 described in

Table 1. Since these features can already describe the “trans-

formation” from an unpatched piece of vulnerable code to a

patched piece of code, which may be seen as a sort of code

reuse in a sense, we can naturally use these patch features

to describe code reuses.

3.3 Preparing the input

203