Image Quilting for Texture Synthesis and Transfer

Alexei A. Efros 1,2

William T. Freeman 2

1 University of California, Berkeley

2 Mitsubishi Electric Research Laboratories

Abstract

We present a simple image-based method of generating novel vi-

sual appearance in which a new image is synthesized by stitching

together small patches of existing images. We call this process im-

age quilting. First, we use quilting as a fast and very simple texture

synthesis algorithm which produces surprisingly good results for

a wide range of textures. Second, we extend the algorithm to per-

form texture transfer – rendering an object with a texture taken from

a different object. More generally, we demonstrate how an image

can be re-rendered in the style of a different image. The method

works directly on the images and does not require 3D information.

Keywords: Texture Synthesis, Texture Mapping, Image-based

Rendering

1 Introduction

In the past decade computer graphics experienced a wave of ac-

tivity in the area of image-based rendering as researchers explored

the idea of capturing samples of the real world as images and us-

ing them to synthesize novel views rather than recreating the entire

physical world from scratch. This, in turn, fueled interest in image-

based texture synthesis algorithms. Such an algorithm should be

able to take a sample of texture and generate an unlimited amount

of image data which, while not exactly like the original, will be per-

ceived by humans to be the same texture. Furthermore, it would be

useful to be able to transfer texture from one object to another (e.g.

the ability to cut and paste material properties on arbitrary objects).

In this paper we present an extremely simple algorithm to ad-

dress the texture synthesis problem. The main idea is to synthesize

new texture by taking patches of existing texture and stitching them

together in a consistent way. We then present a simple generaliza-

tion of the method that can be used for texture transfer.

1.1 Previous Work

Texture analysis and synthesis have had a long history in psychol-

ogy, statistics and computer vision. In 1950 Gibson pointed out

the importance of texture for visual perception [8], but it was the

pioneering work of Bela Julesz on texture discrimination [12] that

paved the way for the development of the field. Julesz suggested

1

Computer Science Division, UC Berkeley, Berkeley, CA 94720, USA.

2

MERL, 201 Broadway, Cambridge, MA 02139, USA.

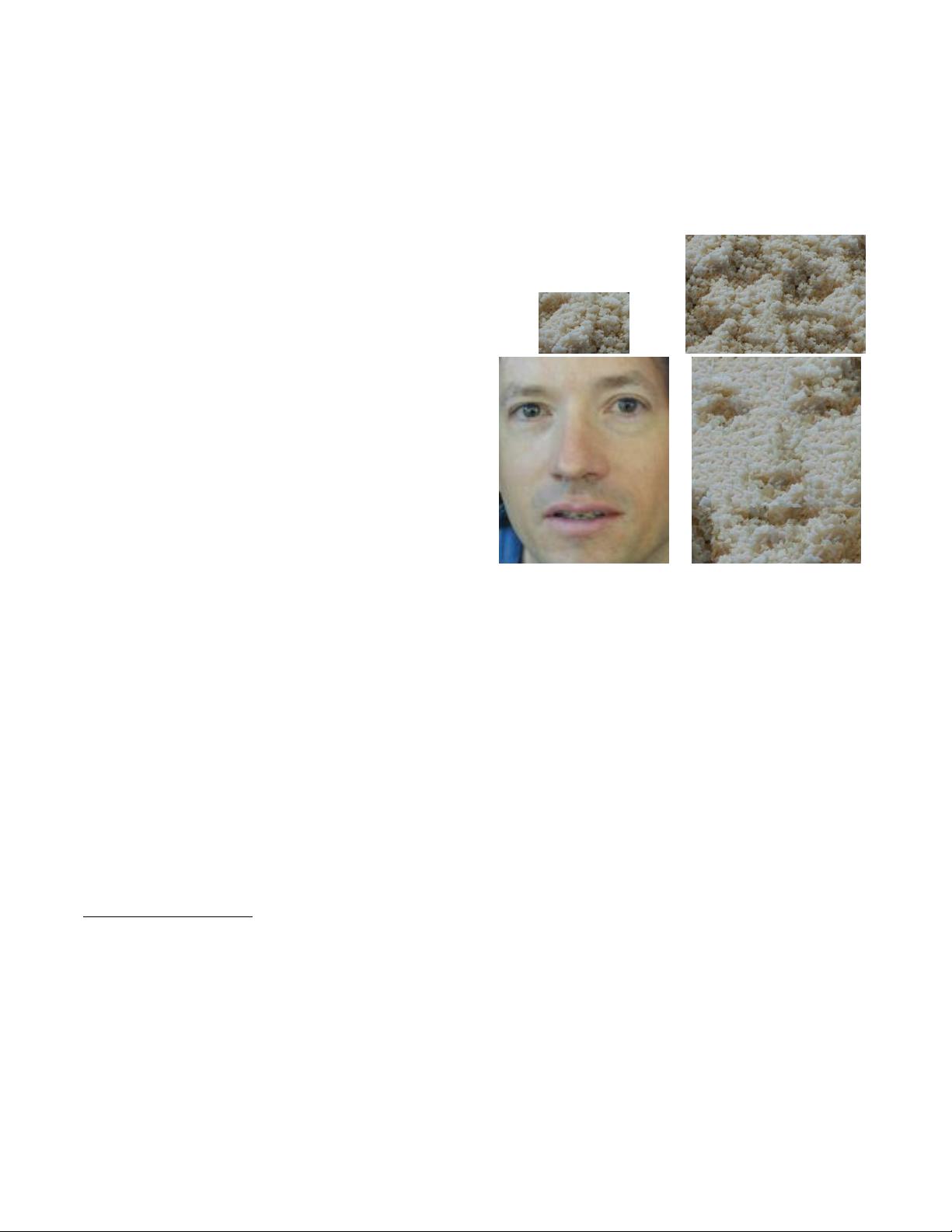

input images quilting results

Figure 1: Demonstration of quilting for texture synthesis and tex-

ture transfer. Using the rice texture image (upper left), we can syn-

thesize more such texture (upper right). We can also transfer the

rice texture onto another image (lower left) for a strikingly differ-

ent result.

that two texture images will be perceived by human observers to

be the same if some appropriate statistics of these images match.

This suggests that the two main tasks in statistical texture synthe-

sis are (1) picking the right set of statistics to match, (2) finding an

algorithm that matches them.

Motivated by psychophysical and computational models of hu-

man texture discrimination [2, 14], Heeger and Bergen [10] pro-

posed to analyze texture in terms of histograms of filter responses

at multiple scales and orientations. Matching these histograms it-

eratively was sufficient to produce impressive synthesis results for

stochastic textures (see [22] for a theoretical justification). How-

ever, since the histograms measure marginal, not joint, statistics

they do not capture important relationships across scales and ori-

entations, and thus the algorithm fails for more structured textures. By

also matching these pairwise statistics, Portilla and Simoncelli [17]

were able to substantially improve synthesis results for structured

textures at the cost of a more complicated optimization procedure.
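The histogram-matching idea described above can be illustrated with a small sketch. It is not the implementation of Heeger and Bergen [10]: it remaps a single flat list of values by rank so that its histogram equals that of a target list, whereas the method in [10] applies such matching to filter responses at multiple scales and orientations and iterates. The function name `match_histogram` and the sample values are hypothetical, and equal-length inputs are assumed.

```python
def match_histogram(source, target):
    """Remap source values so that their histogram equals target's.

    Assumption of this sketch: both lists have the same length, so ranks
    can be matched one to one.
    """
    if len(source) != len(target):
        raise ValueError("this sketch assumes equally sized inputs")
    # Indices of the source values, ordered from smallest to largest value.
    order = sorted(range(len(source)), key=lambda i: source[i])
    sorted_target = sorted(target)
    matched = [0] * len(source)
    # The k-th smallest source value receives the k-th smallest target value.
    for rank, idx in enumerate(order):
        matched[idx] = sorted_target[rank]
    return matched


noise = [0.9, 0.1, 0.5, 0.3]   # stands in for a random noise image
texture = [10, 20, 20, 40]     # stands in for the input texture
result = match_histogram(noise, texture)
```

After the call, `result` holds exactly the values of `texture`, arranged in the rank order of `noise`. Because only the marginal distribution of values is matched, nothing constrains how neighbouring values relate to each other, which is the limitation noted above for structured textures.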

In the above approaches, texture is synthesized by taking a ran-

dom noise image and coercing it to have the same relevant statistics

as in the input image. An opposite approach is to start with an in-

put image and randomize it in such a way that only the statistics

to be matched are preserved. De Bonet [3] scrambles the input in

a coarse-to-fine fashion, preserving the conditional distribution of

filter outputs over multiple scales (jets). Xu et al. [21], inspired by

the Clone Tool in Photoshop, propose a much simpler approach

yielding similar or better results. The idea is to take random square

blocks from the input texture and place them randomly onto the

synthesized texture (with alpha blending to avoid edge artifacts).
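The random-block idea just described can be sketched as follows. This is a simplified illustration rather than the procedure of Xu et al. [21]: blocks are laid out on a regular grid instead of at random positions, and the alpha blending is omitted, so block seams stay visible. The function name `random_block_texture`, its parameters, and the toy sample image are hypothetical.

```python
import random


def random_block_texture(sample, out_size, block, seed=0):
    """Fill an out_size x out_size image with random square blocks of sample.

    sample is a list of rows of pixel values. Blocks are butted together
    without blending (a simplification made for this sketch).
    """
    rng = random.Random(seed)
    h, w = len(sample), len(sample[0])
    if block > h or block > w:
        raise ValueError("block must fit inside the sample")
    out = [[None] * out_size for _ in range(out_size)]
    for top in range(0, out_size, block):
        for left in range(0, out_size, block):
            # Pick the top-left corner of a random block in the sample.
            sy = rng.randint(0, h - block)
            sx = rng.randint(0, w - block)
            # Copy the block, clipping it at the output border.
            for dy in range(min(block, out_size - top)):
                for dx in range(min(block, out_size - left)):
                    out[top + dy][left + dx] = sample[sy + dy][sx + dx]
    return out


# Toy 6x6 "texture" whose pixel value encodes its position (10 * row + col).
sample = [[10 * r + c for c in range(6)] for r in range(6)]
texture = random_block_texture(sample, out_size=8, block=3)
```

Within each copied block the pixel arrangement of the sample is preserved exactly; only the boundaries between blocks are unconstrained.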