4

Symbols Descriptions

D (A, or B) the uncertain database(s)

d the dimensionality of the data set

UR( o) the uncertainty region of object o

¯(o) the hypersphere that tightly bounds UR(o)

α the user-specified probability threshold

dist(·, ·) the Euclidean distance between two objects

Table 1 Notations and Descriptions

(NN) of each data object, and then check whether or

not this object is closer to query point than to its NN.

However this requires a sequential scan over the entire

data set and incurs high computation cost (I/O cost

as well). In order to reduce such cost, many pruning

techniques [18,36,41,33,38] have been proposed, which

assume that data objects are precise points.

Similarly, for our PRNN problem, it is also ineffi-

cient to apply the sequential scan method. Moreover,

the inherent uncertainty of data objects makes the ex-

isting RNN techniques inapplicable to the PRNN re-

trieval. For example, the pruning region defined in the

state-of-the-art TPL method [38] is invalid in the PRNN

case, since the positions of objects are uncertain. Fur-

thermore, in order to refine PRNN candidates, the cal-

culation of the complex Inequality (1) requires access-

ing all the objects in the database, which is very costly.

Therefore, in the sequel, we first design an effective

pruning method that is specific for our PRNN query

processing, and then reduce the number of objects that

are involved in the procedure of refining candidates. Ta-

ble 1 summarizes the commonly-used symbols in this

paper.

4 Probabilistic RNN Search

As mentioned above, it is not efficient to answer PRNN

queries via sequential scan, in terms of both compu-

tation and I/O costs. Thus, we use a multidimensional

index I (e.g. R-tree [12], M-tree [10], or SR-tree [14]) to

facilitate PRNN query processing over uncertain database

D. Since our proposed method is independent of the

underlying index, in this paper, we use one of popular

indexes, R-tree, to index uncertain objects (i.e. their

uncertainty regions), which has been extensively used

in the literature of RNN on precise data in Euclidean

space [18,33,36,38,39] or uncertain query processing

[20,21,23,24,28]. The framework for our PRNN search

is as follows. Given any query object q, we first tra-

verse the R-tree I with an effective pruning method to

reduce the PRNN search space, obtaining a candidate

set, and then refine the candidates by checking their ac-

tual probabilities P

P RN N

(q, o) in Inequality (1). Those

objects with probabilities greater than or equal to α are

returned as the query result.

4.1 Heuristics of GP Method

In this subsection, we show the heuristics of our pruning

method, namely geometric pruning (GP), that permits

the development of efficient PRNN search procedures.

Recall that, a PRNN query retrieves all the data ob-

jects that have query object q as their NNs with prob-

abilities greater than or equal to α ∈ (0, 1]. Thus, our

basic pruning idea is to discard those objects that have

probabilities never greater than β, for some β ∈ [0, α).

For brevity, we denote such a pruning method as GP

β

,

which, based on the PRNN definition, would not intro-

duce any false dismissals (actual answers to the query

that are however not in the retrieval result).

Note that, a special case of GP

β

, GP

0

when β = 0,

will filter out data objects that are definitely not RNNs

(i.e. with zero probability to be RNNs) of a query point

q. In fact, this case corresponds to applications where

we do not know the distributions of data objects within

their uncertainty regions. In order to guarantee no-

false-dismissal property of the query result, our GP

0

method has to conservatively prune data objects based

on the geometric property (e.g. shape) of their uncer-

tainty regions, assuming objects can locate anywhere in

regions and with any distributions. On the other hand,

in applications where object distributions are known in

advance, our GP

β

method (β ∈ [0, α)) can wisely uti-

lize this additional information to enhance the pruning

ability. Since GP

β

(β > 0) essentially applies techniques

similar to GP

0

, in the sequel, we will start with GP

0

,

and later generalize GP

0

to GP

β

(β ∈ [0, α)) in Section

4.5.

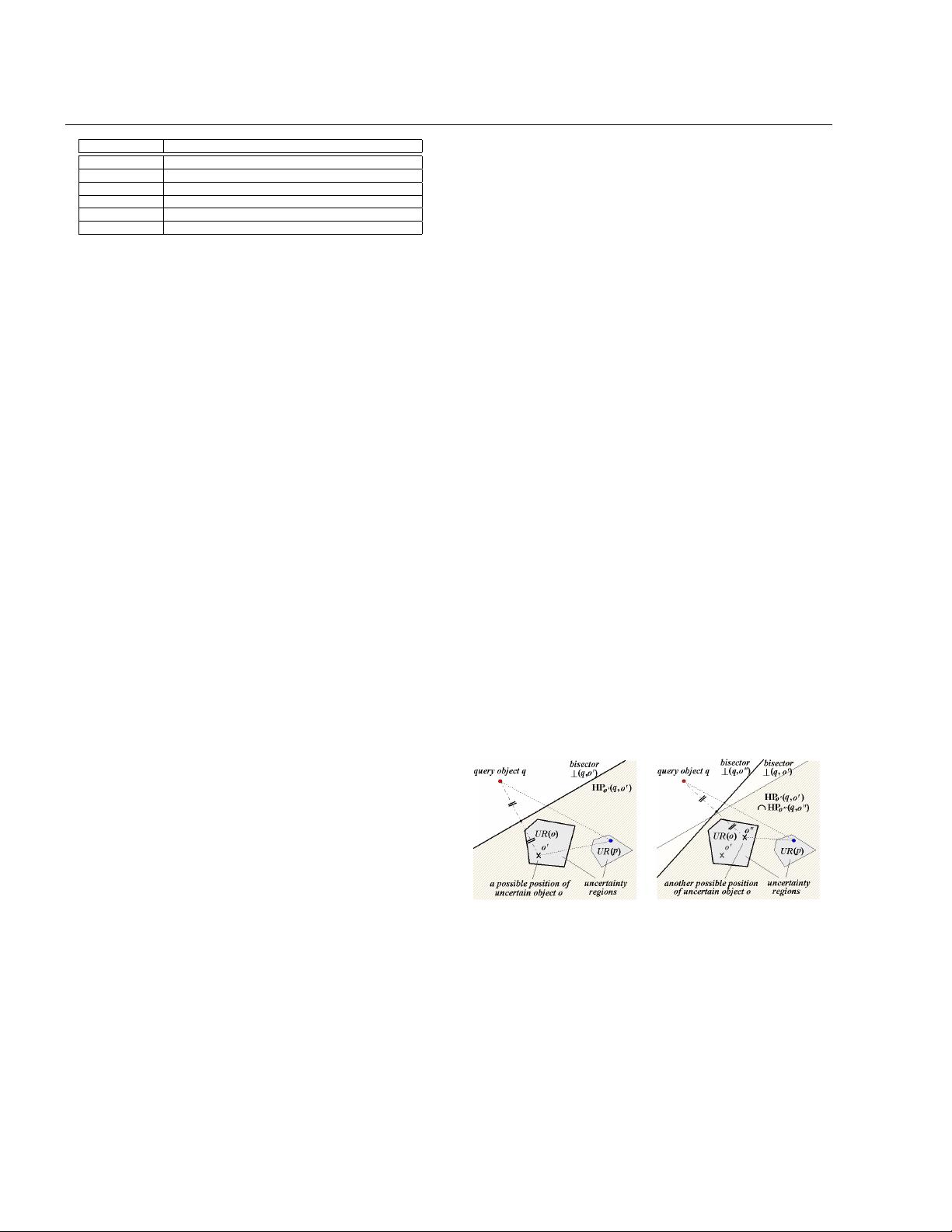

(a)

(b)

Fig. 2 2D Example of GP

0

Heuristics

Consider a query point q and any uncertain object o

in a 2D space as shown in Fig. 2(a), where o can locate

anywhere in its uncertainty region UR(o) with any dis-

tribution. Let o

0

be one possible position of uncertain

object o. The perpendicular bisector ⊥ (q, o

0

) between

points q and o

0

would partition the entire data space

into two halfplanes, HP

o

0

(q, o

0

) that contains point o

0

and HP

q

(q, o

0

) that contains point q. For any data ob-

ject p that completely falls into HP

o

0

(q, o

0

) (i.e. the

shaded area), we have dist(o

0

, p) < dist(q, p) (i.e. q is

not NN of p). Therefore, p cannot be the RNN of q [38].