is the same in the former. Surprisingly, chain-of-thought examples from a different domain (commonsense to arithmetic) but with the same answer format (multiple choice) provide a substantial performance gain over Zero-shot (on AQUA-RAT), measured relative to the possible improvement from Zero-shot-CoT or Few-shot-CoT. In contrast, the gain becomes much smaller when the examples use a different answer type (on MultiArith), confirming prior work [Min et al., 2022] suggesting that LLMs mostly leverage the few-shot examples to infer the repeated format rather than the task itself in-context. Nevertheless, in both cases the results are worse than Zero-shot-CoT, affirming the importance of task-specific sample engineering in Few-shot-CoT.
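To make the "repeated format" point concrete, here is a minimal sketch of how a Few-shot-CoT prompt might be assembled from exemplars of another task. It assumes a `Q:`/`A:` layout and exemplars ending in "The answer is ..."; the `Exemplar` class, `build_few_shot_cot_prompt`, `answer_format`, and both example questions are hypothetical and are not the prompts used in these experiments.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    """One chain-of-thought demonstration (contents are hypothetical)."""
    question: str
    rationale: str
    answer: str  # e.g. "(b)" for multiple choice, "8" for a numeric answer


def build_few_shot_cot_prompt(exemplars: list[Exemplar], target_question: str) -> str:
    # Every demonstration repeats the same surface format:
    # question, step-by-step rationale, then "The answer is <answer>."
    blocks = [
        f"Q: {ex.question}\nA: {ex.rationale} The answer is {ex.answer}."
        for ex in exemplars
    ]
    # The target question gets an open "A:" for the model to continue.
    blocks.append(f"Q: {target_question}\nA:")
    return "\n\n".join(blocks)


def answer_format(answer: str) -> str:
    """Crude, hypothetical check of which answer format an exemplar uses."""
    if len(answer) == 3 and answer.startswith("(") and answer.endswith(")"):
        return "multiple-choice"
    try:
        float(answer)
        return "numeric"
    except ValueError:
        return "other"


# A commonsense-style exemplar with a multiple-choice answer, reused for an
# arithmetic question that is also posed as multiple choice.
demo = Exemplar(
    question="Where do you put a plate after washing it? "
             "Answer Choices: (a) oven (b) cupboard (c) floor",
    rationale="Clean plates are stored until they are needed, "
              "and plates are usually stored in a cupboard.",
    answer="(b)",
)
prompt = build_few_shot_cot_prompt(
    [demo],
    "3 pens cost $2. How much do 12 pens cost? "
    "Answer Choices: (a) $6 (b) $8 (c) $9",
)
print(prompt)
print(answer_format(demo.answer))  # multiple-choice
```

Under this reading, a cross-domain exemplar still shows the model the "Answer Choices ... The answer is (x)." pattern it must reproduce, whereas an exemplar with a numeric answer would demonstrate a format that does not match a multiple-choice target.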

5 Discussion and Related Work

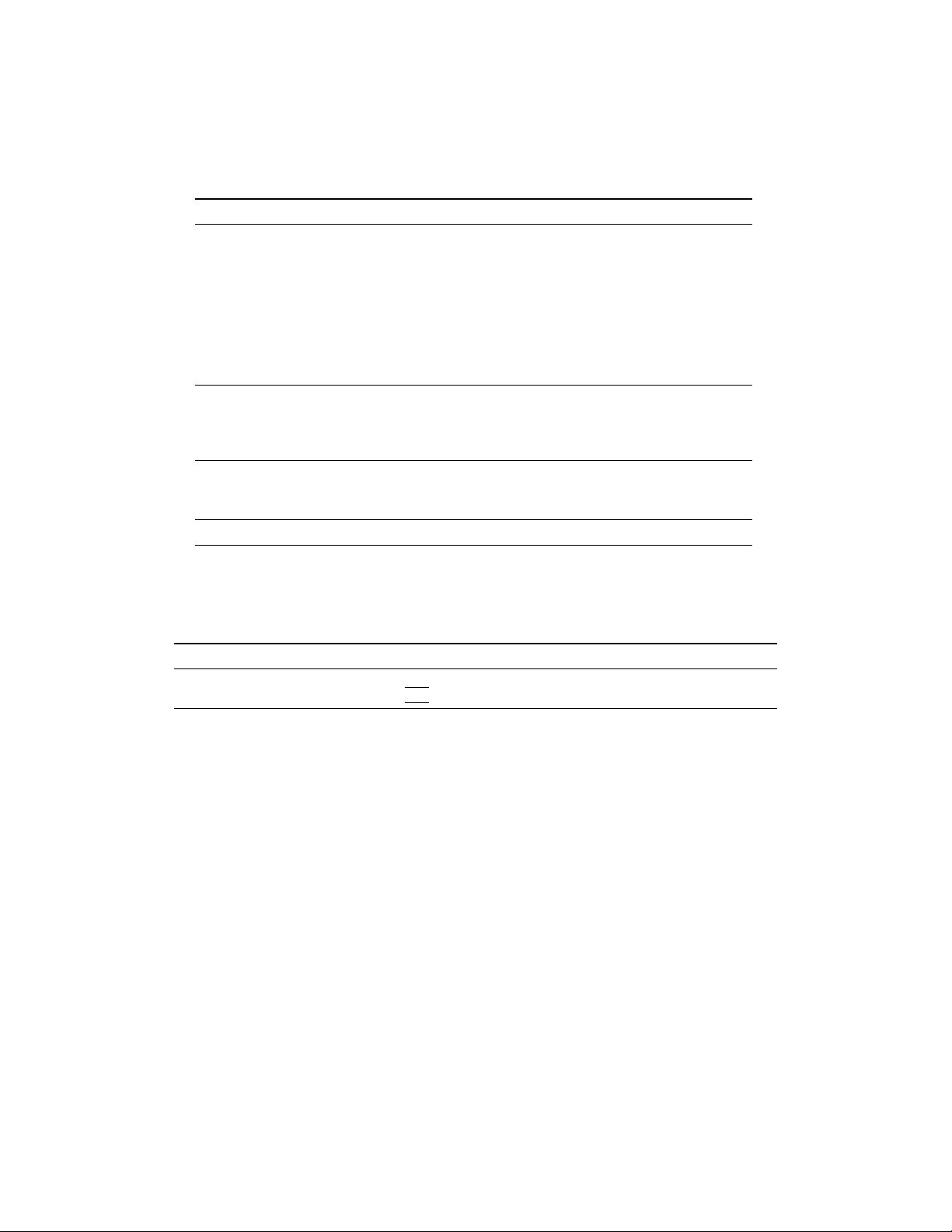

Table 6: Summary of related work on arithmetic/commonsense reasoning tasks. Category denotes the training strategy. CoT denotes whether the method outputs a chain of thought. Task lists the tasks evaluated in the corresponding paper. AR: Arithmetic Reasoning, CR: Commonsense Reasoning.

| Method | Category | CoT | Task | Model |
|---|---|---|---|---|
| Rajani et al. [2019] | Fine-Tuning | ✓ | CR | GPT |
| Cobbe et al. [2021] | Fine-Tuning | ✓ | AR | GPT-3 |
| Zelikman et al. [2022] | Fine-Tuning | ✓ | AR, CR | GPT-3, etc. |
| Nye et al. [2022] | Fine-Tuning⁵ | ✓ | AR | Transformer (Decoder) |
| Brown et al. [2020] | Few/Zero-Shot | | CR | GPT-3 |
| Smith et al. [2022] | Few/Zero-Shot | | AR, CR | MT-NLG |
| Rae et al. [2021] | Few-Shot | | AR, CR | Gopher |
| Wei et al. [2022] | Few-Shot | ✓ | AR, CR | PaLM, LaMDA, GPT-3 |
| Wang et al. [2022] | Few-Shot | ✓ | AR, CR | PaLM, etc. |
| Chowdhery et al. [2022] | Few-Shot | ✓ | AR, CR | PaLM |
| Shwartz et al. [2020] | Zero-Shot | ✓ | CR | GPT-2, etc. |
| Reynolds and McDonell [2021] | Zero-Shot | ✓ | AR | GPT-3 |
| Zero-shot-CoT (Ours) | Zero-Shot | ✓ | AR, CR | PaLM, Instruct-GPT3, GPT-3, etc. |

Reasoning Ability of LLMs

Several studies have shown that pre-trained models are usually not good at reasoning [Brown et al., 2020, Smith et al., 2022, Rae et al., 2021], but their ability can be substantially increased by making them produce step-by-step reasoning, either through fine-tuning [Rajani et al., 2019, Cobbe et al., 2021, Zelikman et al., 2022, Nye et al., 2022] or through few-shot prompting [Wei et al., 2022, Wang et al., 2022, Chowdhery et al., 2022] (see Table 6 for a summary of related work). Unlike most prior work, we focus on zero-shot prompting and show that a single fixed trigger prompt substantially increases the zero-shot reasoning ability of LLMs across a variety of tasks requiring complex multi-hop thinking (Table 1), especially when the model is scaled up (Figure 3). With this prompt, LLMs also generate reasonable and understandable chains of thought across diverse tasks (Appendix B), even when the final prediction is wrong (Appendix C). Similar to our work, Reynolds and McDonell [2021] demonstrate that a prompt, “Let’s solve this problem by splitting it into steps”, facilitates multi-step reasoning in a simple arithmetic problem. However, they treated it as a task-specific example and did not evaluate it quantitatively against baselines on diverse reasoning tasks. Shwartz et al. [2020] propose decomposing a commonsense question into a series of information-seeking questions, such as “what is the definition of [X]”. This approach does not require demonstrations but does require substantial manual prompt engineering for each reasoning task. Our results strongly suggest that LLMs are decent zero-shot reasoners, whereas prior work [Wei et al., 2022] often emphasizes only few-shot and task-specific in-context learning; for example, no zero-shot baselines were reported. Our method requires neither time-consuming fine-tuning nor expensive sample engineering, and it can be combined with any pre-trained LLM, serving as the strongest zero-shot baseline for all reasoning tasks.
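As a rough illustration of how "a single fixed trigger prompt" differs from per-task prompt engineering, the sketch below contrasts a plain zero-shot prompt with a two-stage zero-shot chain-of-thought call. It assumes the trigger "Let's think step by step.", a numeric answer trigger, and a reasoning-then-answer-extraction layout; these strings, the `Generate` interface, and the toy `fake_generate` model are illustrative assumptions, not code or outputs from this paper.

```python
import re
from typing import Callable, Optional

# Hypothetical interface: any text-completion LLM, prompt in, continuation out.
Generate = Callable[[str], str]

# Assumed trigger strings for a numeric-answer task.
REASONING_TRIGGER = "Let's think step by step."
ANSWER_TRIGGER = "Therefore, the answer (arabic numerals) is"


def zero_shot_prompt(question: str) -> str:
    # Plain zero-shot baseline: the model must answer without reasoning first.
    return f"Q: {question}\nA: The answer (arabic numerals) is"


def first_number(text: str) -> Optional[str]:
    # Answer cleansing: keep the first integer or decimal in the completion.
    match = re.search(r"-?\d+(?:\.\d+)?", text)
    return match.group(0) if match else None


def zero_shot_cot(question: str, generate: Generate) -> tuple[str, Optional[str]]:
    # Stage 1 (reasoning extraction): the fixed trigger elicits a chain of thought.
    reasoning_prompt = f"Q: {question}\nA: {REASONING_TRIGGER}"
    rationale = generate(reasoning_prompt)
    # Stage 2 (answer extraction): append the rationale plus an answer trigger.
    answer_prompt = f"{reasoning_prompt} {rationale}\n{ANSWER_TRIGGER}"
    return rationale, first_number(generate(answer_prompt))


def fake_generate(prompt: str) -> str:
    # Toy stand-in for an LLM so the sketch runs offline.
    if prompt.endswith(ANSWER_TRIGGER):
        return " 8."
    return "12 pens are 4 groups of 3 pens, and each group costs $2, so 4 * 2 = 8."


question = "3 pens cost $2. How much do 12 pens cost?"
print(zero_shot_prompt(question))
rationale, answer = zero_shot_cot(question, fake_generate)
print(rationale)
print(answer)  # 8
```

Nothing in `zero_shot_cot` depends on the task beyond the answer trigger and the cleansing rule, which is the contrast with approaches that need demonstrations or hand-written question templates per task.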

Zero-shot Abilities of LLMs

Radford et al. [2019] show that LLMs have excellent zero-shot abilities in many system-1 tasks, including reading comprehension, translation, and summarization.

⁵ Nye et al. [2022] also evaluate few-shot settings, but their few-shot performance on these domains is worse than their fine-tuning results.
