
block are narrower than the middle. Using 1 × 1 convolution kernels not only reduces the number of parameters in the network but also greatly improves the network's nonlinearity. Extensive experiments in [37] have shown that ResNet can mitigate the vanishing-gradient problem without degradation in deep neural networks, since the gradient can flow directly through shortcut connections.

Building on ResNet, many studies have improved the performance of the original architecture, such as pre-activation ResNet [38], wide ResNet [39], stochastic depth ResNets (SDR) [40], and ResNet in ResNet (RiR) [41].

F. DCGAN

Generative Adversarial Network (GAN) [42] is an

unsupervised model proposed by Goodfellow et al. in 2014.

GAN contains a generative model G and a discriminative model

D. The model G with random noise z generates a sample G(z)

that subjects to the data distribution P

data

learned by G. The

model D can determine whether the input sample is real data x

or generated data G(z). Both G and D can be nonlinear functions,

such as deep neural networks. The aim of G is to generate data

as real as possible; nevertheless, the aim of D is to distinguish

the fake data generated by G from the real data. There exists an

interestingly adversarial relationship between the generative

network and the discriminative network. This idea originates

from game theory, in which the two sides use their strategies to

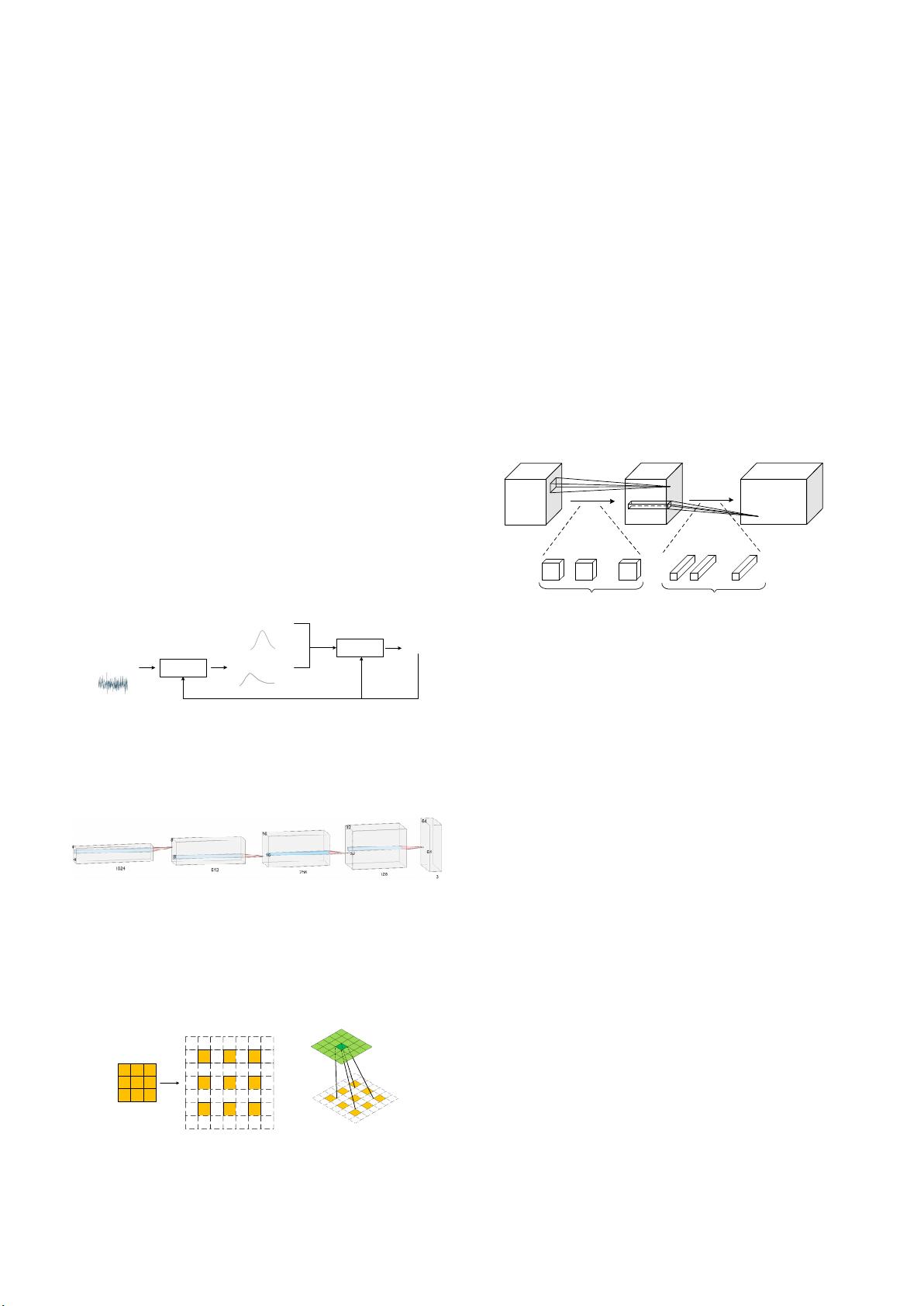

achieve the goal of winning. The procedure is shown in Fig. 10.

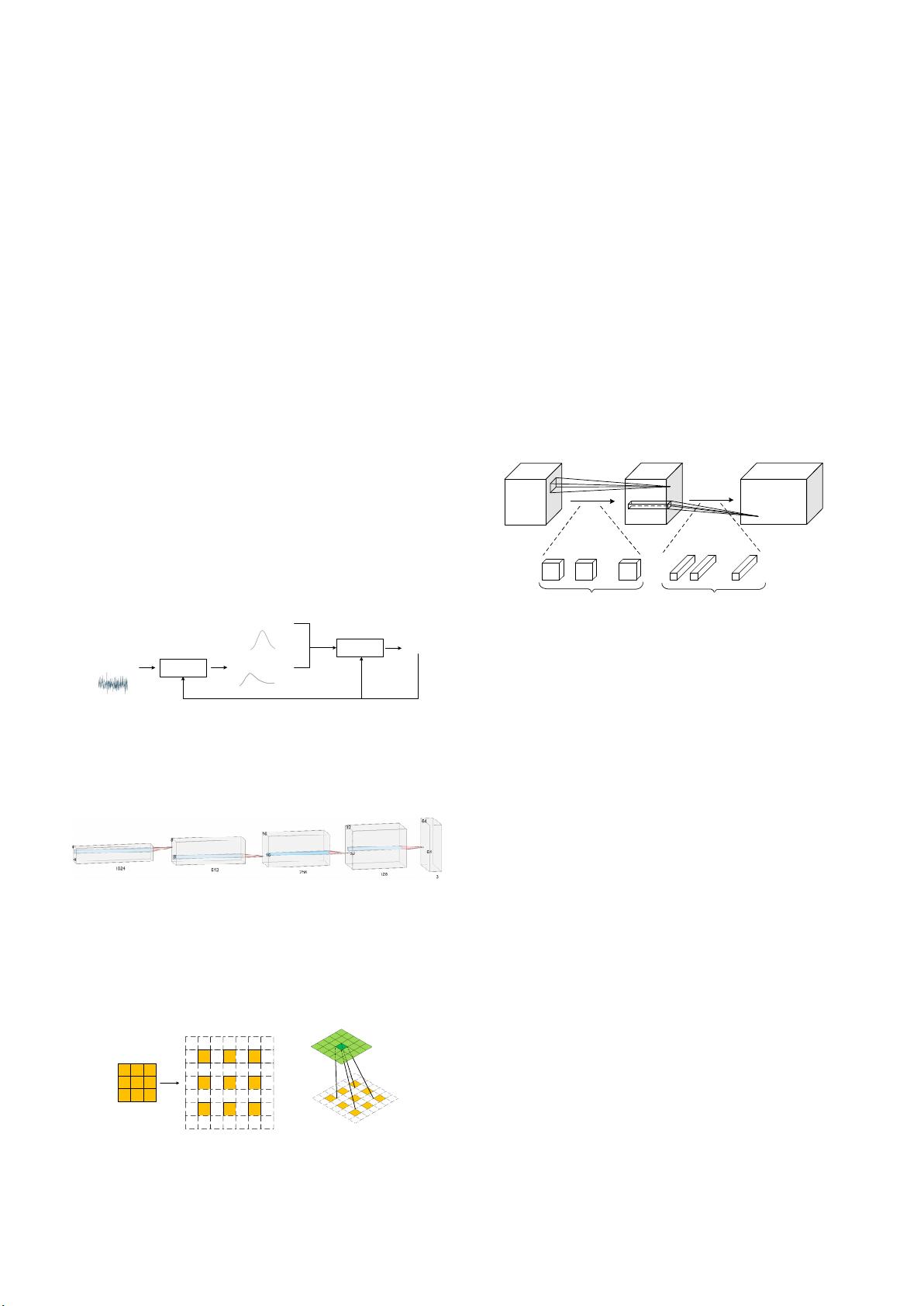
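For reference, the adversarial game between G and D is commonly written as the minimax objective of the original GAN formulation [42]. The value function name $V$ and the noise prior $p_z$ are notation introduced here for illustration and are not used elsewhere in this section.

```latex
\min_{G} \max_{D} V(D, G)
  = \mathbb{E}_{x \sim P_{data}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

D is trained to push $D(x)$ toward 1 and $D(G(z))$ toward 0, while G is trained to push $D(G(z))$ toward 1, which matches the "Result [0, 1]" output in Fig. 10.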

Radford et al. [43] proposed the Deep Convolutional Generative Adversarial Network (DCGAN) in 2015. The DCGAN generator for the Large-scale Scene Understanding (LSUN) dataset is implemented with deep convolutional neural networks, and its structure is shown in Fig. 11.

In Fig. 11, the generative model of DCGAN performs up-sampling by "fractionally-strided convolution". As shown in Fig. 12 (a), suppose that there is a 3 × 3 input and the output is expected to be larger than 3 × 3. The 3 × 3 input can then be expanded by inserting zeros between pixels. After the input has been expanded to 5 × 5, performing a convolution, as shown in Fig. 12 (b), yields an output larger than 3 × 3.
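The zero-insertion view of fractionally-strided convolution can be sketched in NumPy as follows. This is a minimal illustration rather than DCGAN's implementation: the helper names `insert_zeros` and `conv2d_valid`, the all-ones 3 × 3 kernel, and the one-pixel zero border (which would account for the 7 × 7 size mentioned in the Fig. 12 caption) are assumptions made for the example.

```python
import numpy as np

def insert_zeros(x, stride=2):
    """Place (stride - 1) zeros between neighbouring pixels of a 2-D array."""
    h, w = x.shape
    out = np.zeros(((h - 1) * stride + 1, (w - 1) * stride + 1), dtype=x.dtype)
    out[::stride, ::stride] = x
    return out

def conv2d_valid(x, k):
    """Unit-stride 2-D cross-correlation without padding ('valid' mode)."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((oh, ow), dtype=float)
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out

x = np.arange(1, 10, dtype=float).reshape(3, 3)  # 3 x 3 input
expanded = insert_zeros(x)                        # 5 x 5 after zero insertion
padded = np.pad(expanded, 1)                      # 7 x 7 (assumed zero border)
kernel = np.ones((3, 3))                          # illustrative kernel
y = conv2d_valid(padded, kernel)                  # 5 x 5 output

print(expanded.shape, padded.shape, y.shape)
```

Under these assumptions the 3 × 3 input becomes a 5 × 5 output, so the operation up-samples even though the convolution itself has unit stride.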

G. MobileNets

MobileNets are a series of lightweight models proposed by

Google for embedded devices such as mobile phones. They use

depth-wise separable convolutions and several advanced

techniques to build thin deep neural networks. There are three

versions of MobileNets to date, namely MobileNet v1 [44],

MobileNet v2 [45], and MobileNet v3 [46].

1) MobileNet v1

MobileNet v1 [44] utilizes the depth-wise separable convolutions proposed in Xception [26], which decompose the standard convolution into a depth-wise convolution and a pointwise convolution (1 × 1 convolution), as shown in Fig. 13. Specifically, standard convolution applies each convolution kernel to all the channels of the input. In contrast, depth-wise convolution applies each convolution kernel to only one channel of the input, and a 1 × 1 convolution is then used to combine the outputs of the depth-wise convolution. This decomposition can substantially reduce the number of parameters.
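The size of this reduction can be checked with a short parameter count. The sketch below uses the notation of Fig. 13 (kernel size d, M input channels, N output channels) and ignores bias terms; the function names and the example values d = 3, M = 64, N = 128 are chosen only for illustration.

```python
def standard_conv_params(d, m, n):
    """N kernels, each of size d x d x M."""
    return d * d * m * n

def separable_conv_params(d, m, n):
    """M depth-wise kernels (d x d x 1) plus N pointwise kernels (1 x 1 x M)."""
    depthwise = d * d * m
    pointwise = m * n
    return depthwise + pointwise

d, m, n = 3, 64, 128  # illustrative layer sizes
std = standard_conv_params(d, m, n)
sep = separable_conv_params(d, m, n)
ratio = sep / std     # equals 1/N + 1/d^2

print(std, sep, round(ratio, 4))
```

For these values the separable form needs 8,768 parameters instead of 73,728, roughly 12% of the standard convolution.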

MobileNet v1 also introduces the width multiplier to reduce

the number of channels of each layer and the resolution

multiplier to lower the resolution of the input image (feature

map).
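The effect of the two multipliers on computational cost can be sketched as follows. The function name `separable_mult_adds`, the symbols `alpha` (width multiplier) and `rho` (resolution multiplier), and the example layer sizes are assumptions for illustration; the cost counts one multiply-add per kernel weight per output position of a depth-wise separable layer.

```python
def separable_mult_adds(d, m, n, feature_size, alpha=1.0, rho=1.0):
    """Multiply-adds of one depth-wise separable layer with both multipliers."""
    m_thin = int(alpha * m)        # width multiplier thins input channels
    n_thin = int(alpha * n)        # ... and output channels
    f = int(rho * feature_size)    # resolution multiplier shrinks the feature map
    depthwise = d * d * m_thin * f * f
    pointwise = m_thin * n_thin * f * f
    return depthwise + pointwise

base = separable_mult_adds(3, 64, 128, 56)
thin = separable_mult_adds(3, 64, 128, 56, alpha=0.5)
small = separable_mult_adds(3, 64, 128, 56, rho=0.5)

print(base, thin, small)
```

Halving the resolution cuts the cost by a factor of four, while halving the width cuts the dominant pointwise term by roughly the same factor.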

2) MobileNet v2

Based upon MobileNet v1, MobileNet v2 [45] mainly

introduces two improvements: inverted residual blocks and

linear bottleneck modules.

In Section 3.5, we explained three-layer residual blocks, the purpose of which is to use 1 × 1 convolution to reduce the number of parameters involved in the 3 × 3 convolution. In short, the whole process of a residual block is channel compression, then standard convolution, then channel expansion. In MobileNet v2, an inverted residual block (see Fig. 14 (b)) is the opposite of a residual block (see Fig. 14 (a)). The input of an inverted residual block is first convolved with 1 × 1 kernels for channel expansion, then with a 3 × 3 depth-wise convolution, and finally with 1 × 1 kernels that compress the number of channels back. In brief, the whole process of an inverted residual block is channel expansion, then depth-wise convolution, then channel compression. Because depth-wise convolution cannot change the number of channels, the number of input channels limits feature extraction; inverted residual blocks are used to address this problem by expanding the channels first.
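The expand, filter, compress order of an inverted residual block can be sketched in NumPy as below. This is a minimal illustration, not the MobileNet v2 implementation: the helper names, the expansion factor of 6, the ReLU6 activations, the omission of batch normalization, and the identity shortcut (valid here because stride is 1 and input and output channel counts match) are assumptions made for the example.

```python
import numpy as np

def pointwise(x, w):
    """1 x 1 convolution: x is (C_in, H, W), w is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)

def depthwise3x3(x, k):
    """3 x 3 depth-wise convolution with zero padding 1; k is (C, 3, 3)."""
    c, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x)
    for i in range(3):
        for j in range(3):
            out += k[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out

def relu6(x):
    return np.minimum(np.maximum(x, 0.0), 6.0)

def inverted_residual(x, w_expand, k_dw, w_project):
    h = relu6(pointwise(x, w_expand))  # channel expansion
    h = relu6(depthwise3x3(h, k_dw))   # per-channel spatial filtering
    h = pointwise(h, w_project)        # channel compression, no activation
    return x + h                       # shortcut between the narrow ends

rng = np.random.default_rng(0)
c, t = 4, 6                                  # channels and assumed expansion factor
x = rng.standard_normal((c, 8, 8))
w_expand = 0.1 * rng.standard_normal((c * t, c))
k_dw = 0.1 * rng.standard_normal((c * t, 3, 3))
w_project = 0.1 * rng.standard_normal((c, c * t))
y = inverted_residual(x, w_expand, k_dw, w_project)

print(x.shape, pointwise(x, w_expand).shape, y.shape)
```

The depth-wise stage operates on 24 channels rather than 4, which is the point of expanding before filtering.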

Fig. 10. The flowchart of GAN (diagram labels: random noise z, Generator, generated data G(z), real data x, Discriminator, result [0, 1], update)

Fig. 11. DCGAN generator used for LSUN scene modeling

Fig. 12. An example of fractionally-strided convolution. (a) Inserting zeros between 3 × 3 kernel points. (b) Convolving the 7 × 7 graph

Fig. 13. Depth-wise separable convolutions in MobileNet v1. #M and #N represent the number of kernels of depth-wise convolution (each of size d × d × 1) and pointwise convolution (each of size 1 × 1 × M), respectively