only the maximum value from the two maps. Note that

C

1

responses are not computed at every possible locations

and that C

1

units only overlap by an amount

S

. This makes

the computations at the next stage more efficient. Again,

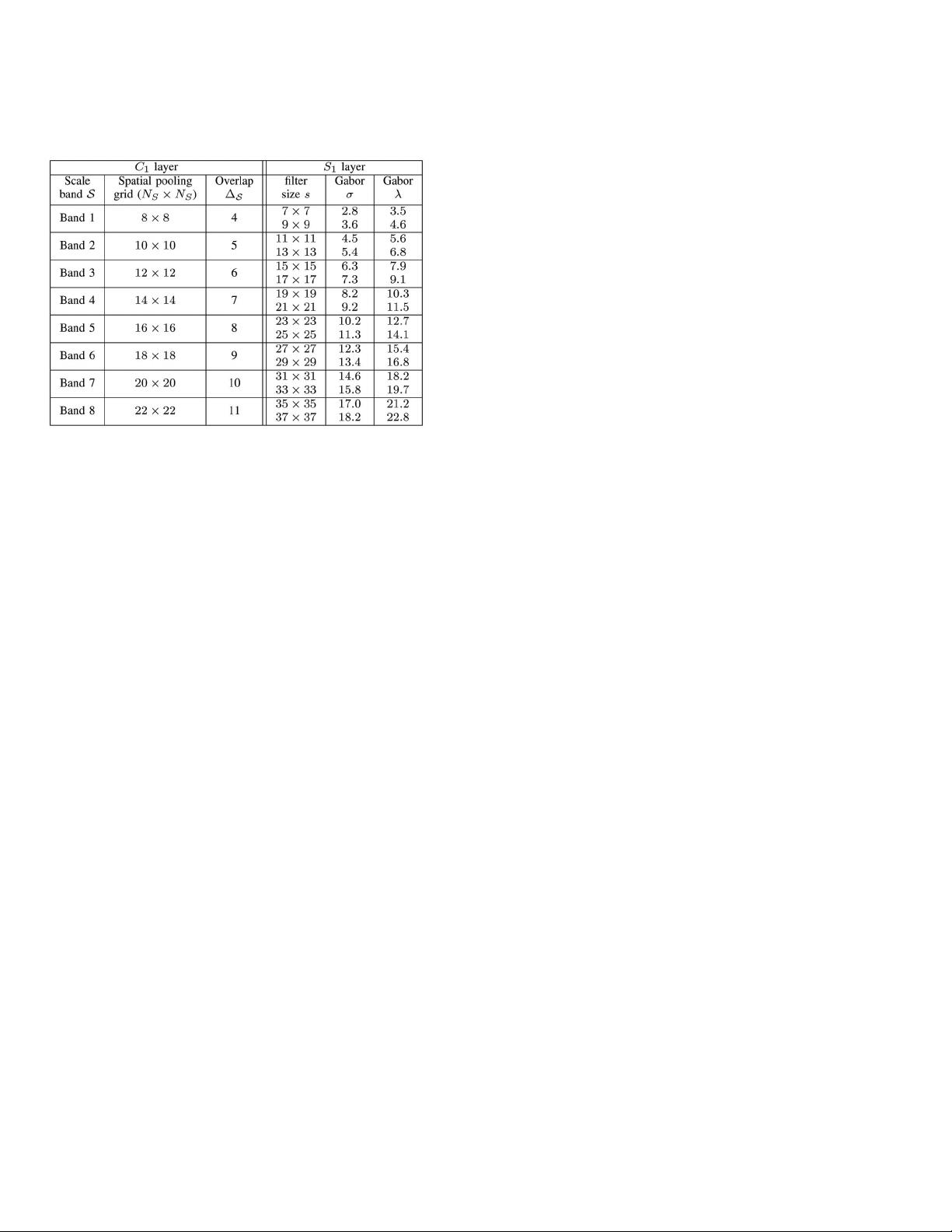

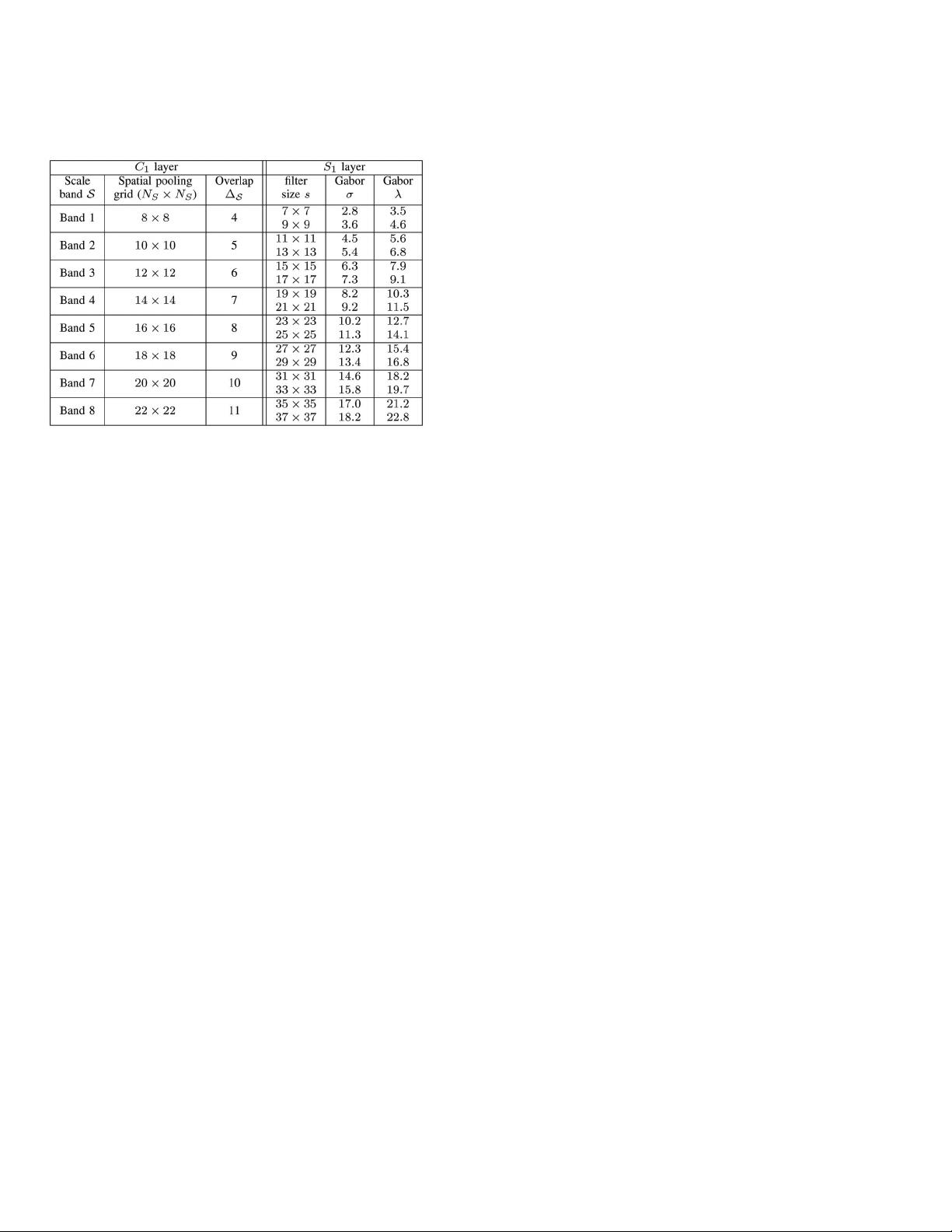

parameters (see Table 1) governing this pooling operation

were adjusted such that the tuning of the C

1

units match the

tuning of complex cells as measured experimentally (see [41]

for details).
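The pooling step described above (a local maximum, a maximum over the two maps, and units placed on a coarse grid with limited overlap) can be sketched in Python as follows. This is only an illustration under stated assumptions: the function name `c1_pool`, the nested-list representation, the default `grid` and `overlap` values, and the reading of the overlap as `stride = grid - overlap` are hypothetical and not taken from the text.

```python
def c1_pool(s1_a, s1_b, grid=8, overlap=4):
    """Max-pool two equally sized S1 maps (rows of numbers) into one C1 map.

    Each C1 unit keeps the maximum over a grid x grid neighborhood in both
    maps. Neighboring units overlap by `overlap` samples, so units are placed
    every `grid - overlap` samples (an assumption about how overlap is used).
    """
    stride = grid - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than grid")
    height, width = len(s1_a), len(s1_a[0])
    c1 = []
    for top in range(0, height - grid + 1, stride):
        row = []
        for left in range(0, width - grid + 1, stride):
            # Maximum over the local neighborhood and over the two maps.
            row.append(max(
                max(s1_a[y][x], s1_b[y][x])
                for y in range(top, top + grid)
                for x in range(left, left + grid)
            ))
        c1.append(row)
    return c1
```

Because units are computed only every `stride` samples, the resulting map is much smaller than its inputs, which is what makes the next stage cheaper.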

$S_2$ units: In the $S_2$ layer, units pool over afferent $C_1$ units from a local spatial neighborhood across all four orientations. $S_2$ units behave as radial basis function (RBF) units (see footnote 2). Each $S_2$ unit response depends in a Gaussian-like way on the Euclidean distance between a new input and a stored prototype. That is, for an image patch $X$ from the previous $C_1$ layer at a particular scale $S$, the response $r$ of the corresponding $S_2$ unit is given by:

$$r = \exp\left(-\beta \, \lVert X - P_i \rVert^2\right), \tag{4}$$

where $\beta$ defines the sharpness of the tuning and $P_i$ is one of the $N$ features (centers of the RBF units) learned during training (see below). At runtime, $S_2$ maps are computed across all positions for each of the eight scale bands. One such multiple-scale map is computed for each one of the ($N \sim 1{,}000$) prototypes $P_i$.
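As a minimal sketch of the Gaussian-like response in (4), assuming the form r = exp(-beta * ||X - P_i||^2), a single unit can be written in Python as below. The function name `s2_response`, the flat-list representation of patches, and the default `beta` are illustrative assumptions, not values from the paper.

```python
import math


def s2_response(patch, prototype, beta=1.0):
    """Return exp(-beta * squared Euclidean distance) between two patches.

    `patch` and `prototype` are flat sequences of C1 values of equal length.
    `beta` controls the sharpness of the tuning; 1.0 is a placeholder.
    """
    if len(patch) != len(prototype):
        raise ValueError("patch and prototype must have the same length")
    sq_dist = sum((x - p) ** 2 for x, p in zip(patch, prototype))
    return math.exp(-beta * sq_dist)
```

A patch identical to the prototype gives the maximum response of 1, and the response decays toward 0 as the patch moves away from the prototype; a larger `beta` makes that decay steeper.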

$C_2$ units: Our final set of shift- and scale-invariant $C_2$ responses is computed by taking a global maximum (see (3)) over all scales and positions for each $S_2$ type over the entire $S_2$ lattice, i.e., the $S_2$ layer measures the match between a stored prototype $P_i$ and the input image at every position and scale; we only keep the value of the best match and discard the rest. The result is a vector of $N$ $C_2$ values, where $N$ corresponds to the number of prototypes extracted during the learning stage.
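The global maximum described above can be sketched in a few lines of Python. The data layout is an assumption made for illustration: `s2_maps[i][b]` is taken to be a 2D list of responses for prototype `i` at scale band `b`, and `c2_features` is a hypothetical name.

```python
def c2_features(s2_maps):
    """Return one value per prototype: the best S2 match over all
    scale bands and positions (all other responses are discarded)."""
    return [
        max(r for band in maps_for_prototype for row in band for r in row)
        for maps_for_prototype in s2_maps
    ]


# Two prototypes, two scale bands each; bands may differ in size.
example = [
    [[[0.1, 0.5], [0.2, 0.3]], [[0.9]]],
    [[[0.4]], [[0.2, 0.7]]],
]
print(c2_features(example))  # [0.9, 0.7]
```

The output length equals the number of prototypes regardless of how large each map is, which is why the feature vector does not depend on the image size.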

The learning stage: The learning process corresponds to selecting a set of $N$ prototypes $P_i$ (or features) for the $S_2$ units. This is done using a simple sampling process such that, during training, a large pool of prototypes of various sizes and at random positions is extracted from a target set of images. These prototypes are extracted at the level of the $C_1$ layer across all four orientations, i.e., a patch $P_o$ of size $n \times n$ contains $n \times n \times 4$ elements. In the following, we extracted patches of four different sizes ($n = 4, 8, 12, 16$). An important question for both neuroscience and computer vision regards the choice of the unlabeled target set from which to learn, in an unsupervised way, this vocabulary of visual features. In the following, features are learned from the positive training set for each object independently, but, in Section 3.1.2, we show how a universal dictionary of features can be learned from a random set of natural images and shared between multiple object classes.
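The sampling process described in this paragraph might look like the following Python sketch. It assumes each C1 map is a nested list indexed `[row][col][orientation]` with four orientations, and that the map, patch size, and position are each drawn uniformly at random; the uniform choice, the function name `sample_prototypes`, and the `seed` parameter are assumptions for illustration.

```python
import random


def sample_prototypes(c1_maps, num_prototypes, sizes=(4, 8, 12, 16), seed=None):
    """Extract random n x n x 4 patches from a list of C1 maps."""
    rng = random.Random(seed)
    prototypes = []
    for _ in range(num_prototypes):
        c1 = rng.choice(c1_maps)
        height, width = len(c1), len(c1[0])
        # Only patch sizes that fit inside this map are eligible.
        fitting = [n for n in sizes if n <= min(height, width)]
        if not fitting:
            raise ValueError("C1 map is smaller than every patch size")
        n = rng.choice(fitting)
        top = rng.randrange(height - n + 1)
        left = rng.randrange(width - n + 1)
        # Copy all four orientation values at each position of the patch.
        patch = [
            [list(c1[top + dy][left + dx]) for dx in range(n)]
            for dy in range(n)
        ]
        prototypes.append(patch)
    return prototypes
```

No labels are used at any point, which matches the unsupervised nature of the learning stage; only the choice of the image set from which the maps come changes between the object-specific and the universal dictionary.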

The Classification Stage: At runtime, each image is propagated through the architecture described in Fig. 1. The $C_1$ and $C_2$ standard model features (SMFs) are then extracted and further passed to a simple linear classifier (we experimented with both SVM and boosting).

3 EMPIRICAL EVALUATION

We evaluate the performance of the SMFs in several object detection tasks. In Section 3.1, we show results for detection in clutter (sometimes referred to as weakly supervised), for which the target object in both the training and test sets appears at variable scales and positions within an unsegmented image, such as in the CalTech101 object database [21]. For such applications, because 1) the size of the image to be classified may vary and 2) the variations in appearance are large, we use the scale- and position-invariant $C_2$ SMFs (the number $N$ of which is independent of the image size and only depends on the number of prototypes learned during training), which we pass to a linear classifier trained to perform a simple object present/absent recognition task.

In Section 3.2, we evaluate the performance of the SMFs in conjunction with a windowing approach. That is, we extract a large number of fixed-size image windows from an input image at various scales and positions, each of which has to be classified as containing the target object or not. In this task, the target object in both the training and test images exhibits a limited variability in scale and position (lighting and within-class appearance variability remain), which is accounted for by the scanning process. For this task, the presence of clutter within each image window to be classified is also limited. Because the size of the image windows is fixed, both $C_1$ and $C_2$ SMFs can be used for classification. We show that, for such an application, due to the limited variability of the target object in position and scale and the absence of clutter, $C_1$ SMFs appear quite competitive.
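The windowing approach mentioned in this paragraph can be illustrated with a short Python generator that enumerates fixed-size windows over rescaled versions of an image. All parameter names and default values (`window`, `scales`, `stride`) are hypothetical choices for this sketch, not settings reported in the paper.

```python
def sliding_windows(width, height, window=32, scales=(1.0, 0.5), stride=16):
    """Yield (scale, left, top) for every fixed-size window that fits
    inside the image after it has been rescaled by `scale`."""
    for scale in scales:
        scaled_w, scaled_h = int(width * scale), int(height * scale)
        for top in range(0, scaled_h - window + 1, stride):
            for left in range(0, scaled_w - window + 1, stride):
                yield scale, left, top


# A 64 x 64 image: nine windows at full scale, one at half scale.
windows = list(sliding_windows(64, 64))
print(len(windows))  # 10
```

Each window has the same pixel size, so a feature vector whose length depends on the input size can still be compared across windows.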

In Section 3.3, we show results using the SMFs for the recognition of texture-based objects like trees and roads. For this application, the performance of the SMFs is evaluated at every pixel location in images containing the target object, which is appropriate for detecting amorphous objects in a scene, where drawing a closely cropped bounding box is often impossible. For this task, the $C_2$ SMFs outperform the $C_1$ SMFs.

3.1 Object Recognition in Clutter

Because of their invariance to scale and position, the $C_2$ SMFs can be used for weakly supervised learning tasks for which a labeled training set is available but for which the training set is not normalized or segmented. That is, the target object is presented in clutter and may undergo large

414 IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, VOL. 29, NO. 3, MARCH 2007

TABLE 1

Summary of the $S_1$ and $C_1$ SMFs Parameters

2. This is consistent with well-known response properties of neurons in primate inferotemporal cortex and seems to be the key property for learning to generalize in the visual and motor systems [42].