Robust Speech Recognition via Large-Scale Weak Supervision 6

[Figure 2 plot. x-axis: WER on LibriSpeech dev-clean (%), 0 to 8. y-axis: average WER on Common Voice, CHiME-6, and TED-LIUM (%), 0 to 50. Legend: supervised LibriSpeech models; zero-shot Whisper models; zero-shot human (Alec); ideal robustness (y = x).]

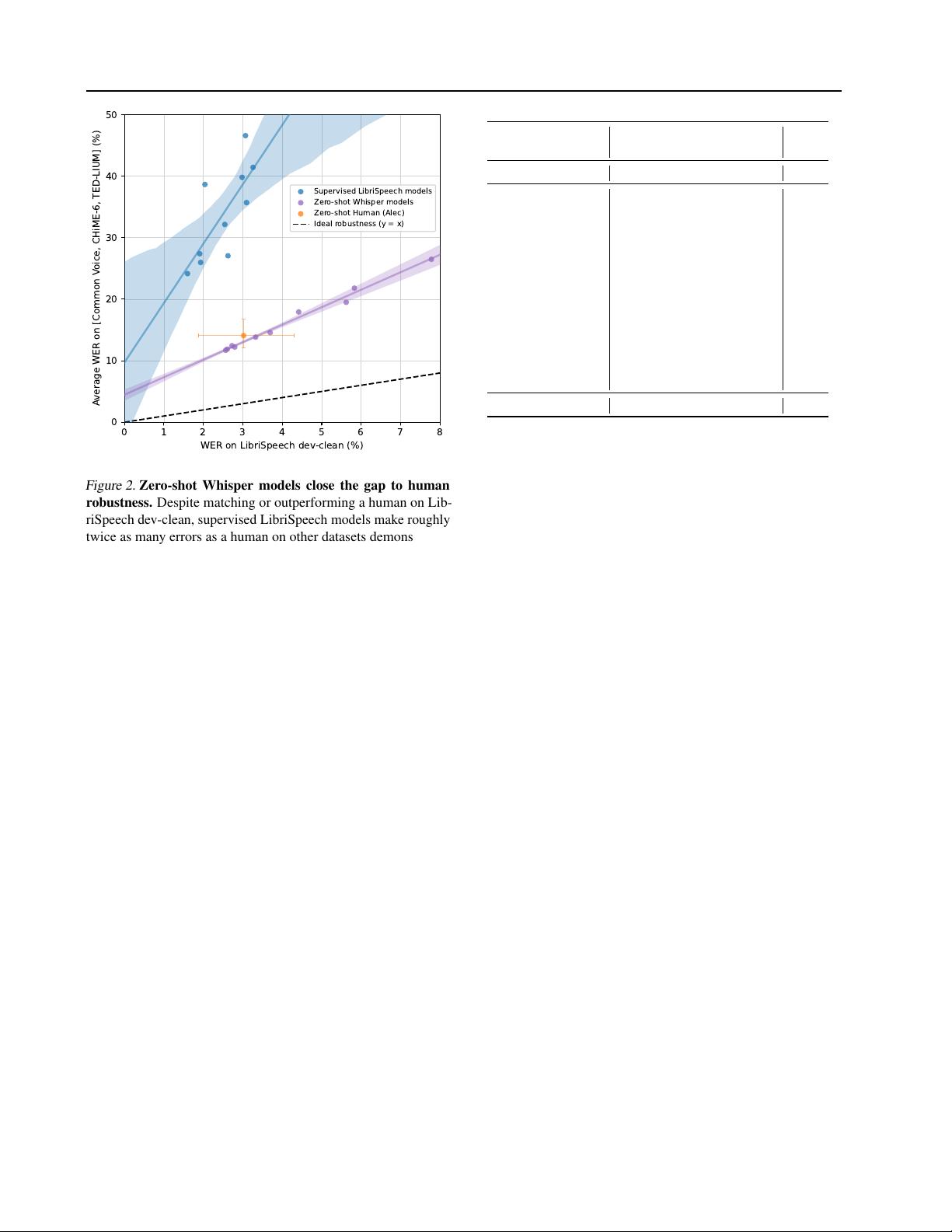

Figure 2. Zero-shot Whisper models close the gap to human robustness. Despite matching or outperforming a human on LibriSpeech dev-clean, supervised LibriSpeech models make roughly twice as many errors as a human on other datasets, demonstrating their brittleness and lack of robustness. The estimated robustness frontier of zero-shot Whisper models, however, includes the 95% confidence interval for this particular human.

To quantify this difference, we examine both overall robustness, that is, average performance across many distributions/datasets, and effective robustness, introduced by Taori et al. (2020), which measures the difference in expected performance between a reference dataset, which is usually in-distribution, and one or more out-of-distribution datasets. A model with high effective robustness does better than expected on out-of-distribution datasets as a function of its performance on the reference dataset and approaches the ideal of equal performance on all datasets. For our analysis, we use LibriSpeech as the reference dataset due to its central role in modern speech recognition research and the availability of many released models trained on it, which allows for characterizing robustness behaviors. We use a suite of 12 other academic speech recognition datasets to study out-of-distribution behaviors. Full details about these datasets can be found in Appendix A.
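The two robustness notions above can be made concrete with a minimal sketch. It assumes a straight-line trend fitted to a set of baseline models in raw WER space, which is a simplification chosen for illustration and not necessarily the fit used in this paper or by Taori et al. (2020). The function names and the sign convention (positive means fewer errors than the trend predicts) are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def overall_robustness(wers):
    """Average WER across all evaluated datasets (lower is better)."""
    return sum(wers) / len(wers)


def effective_robustness(ref_wer, ood_wer, baseline_ref_wers, baseline_ood_wers):
    """Expected out-of-distribution WER minus the observed one.

    The expectation comes from a linear trend fitted to baseline models
    (an assumption of this sketch). Positive values mean the model makes
    fewer out-of-distribution errors than its reference WER predicts.
    """
    slope, intercept = fit_line(baseline_ref_wers, baseline_ood_wers)
    expected_ood_wer = slope * ref_wer + intercept
    return expected_ood_wer - ood_wer
```

Under this convention, a model that sits exactly on the baseline trend scores 0, and a model on the ideal y = x line has an out-of-distribution WER equal to its reference WER.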

Our main findings are summarized in Figure 2 and Table 2. Although the best zero-shot Whisper model has a relatively unremarkable LibriSpeech test-clean WER of 2.5, which is roughly the performance of a modern supervised baseline or the mid-2019 state of the art, zero-shot Whisper models have very different robustness properties than supervised LibriSpeech models and outperform all benchmarked LibriSpeech models by large amounts on other datasets. Even the smallest zero-shot Whisper model, which has only 39 million parameters and a 6.7 WER on LibriSpeech test-clean, is roughly competitive with the best supervised LibriSpeech model when evaluated on other datasets. When compared to a human in Figure 2, the best zero-shot Whisper models roughly match the human's accuracy and robustness. For a detailed breakdown of this large improvement in robustness, Table 2 compares the performance of the best zero-shot Whisper model with a supervised LibriSpeech model that has the closest performance to it on LibriSpeech test-clean. Despite their very close performance on the reference distribution, the zero-shot Whisper model achieves an average relative error reduction of 55.2% when evaluated on other speech recognition datasets.

| Dataset | wav2vec 2.0 Large (no LM) WER (%) | Whisper Large V2 WER (%) | RER (%) |
|---|---|---|---|
| LibriSpeech Clean | 2.7 | 2.7 | 0.0 |
| Artie | 24.5 | 6.2 | 74.7 |
| Common Voice | 29.9 | 9.0 | 69.9 |
| Fleurs En | 14.6 | 4.4 | 69.9 |
| Tedlium | 10.5 | 4.0 | 61.9 |
| CHiME6 | 65.8 | 25.5 | 61.2 |
| VoxPopuli En | 17.9 | 7.3 | 59.2 |
| CORAAL | 35.6 | 16.2 | 54.5 |
| AMI IHM | 37.0 | 16.9 | 54.3 |
| Switchboard | 28.3 | 13.8 | 51.2 |
| CallHome | 34.8 | 17.6 | 49.4 |
| WSJ | 7.7 | 3.9 | 49.4 |
| AMI SDM1 | 67.6 | 36.4 | 46.2 |
| LibriSpeech Other | 6.2 | 5.2 | 16.1 |
| Average | 29.3 | 12.8 | 55.2 |

Table 2. Detailed comparison of effective robustness across various datasets. Although both models perform within 0.1% of each other on LibriSpeech, a zero-shot Whisper model performs much better on other datasets than expected for its LibriSpeech performance and makes 55.2% fewer errors on average. Results are reported in word error rate (WER) for both models after applying our text normalizer.
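The relative error reduction (RER) figures in Table 2 follow from the two WER columns. The sketch below recomputes them from the table's values, assuming RER is the baseline WER minus the Whisper WER, divided by the baseline WER. It also assumes that the Average row covers the 13 datasets other than the LibriSpeech Clean reference, which is consistent with the reported numbers. The variable and function names are illustrative only.

```python
# WER (%) values copied from Table 2:
# (wav2vec 2.0 Large (no LM), Whisper Large V2)
TABLE_2_WER = {
    "LibriSpeech Clean": (2.7, 2.7),
    "Artie": (24.5, 6.2),
    "Common Voice": (29.9, 9.0),
    "Fleurs En": (14.6, 4.4),
    "Tedlium": (10.5, 4.0),
    "CHiME6": (65.8, 25.5),
    "VoxPopuli En": (17.9, 7.3),
    "CORAAL": (35.6, 16.2),
    "AMI IHM": (37.0, 16.9),
    "Switchboard": (28.3, 13.8),
    "CallHome": (34.8, 17.6),
    "WSJ": (7.7, 3.9),
    "AMI SDM1": (67.6, 36.4),
    "LibriSpeech Other": (6.2, 5.2),
}

REFERENCE_DATASET = "LibriSpeech Clean"


def relative_error_reduction(baseline_wer, model_wer):
    """Percentage of the baseline's errors that the model removes."""
    return 100.0 * (baseline_wer - model_wer) / baseline_wer


rer_by_dataset = {
    name: relative_error_reduction(baseline, whisper)
    for name, (baseline, whisper) in TABLE_2_WER.items()
}

# Assumption: the Average row excludes the reference dataset.
ood_rers = [
    rer for name, rer in rer_by_dataset.items() if name != REFERENCE_DATASET
]
average_rer = sum(ood_rers) / len(ood_rers)

for name, rer in rer_by_dataset.items():
    print(f"{name:<18} {rer:5.1f}")
print(f"{'Average':<18} {average_rer:5.1f}")
```

Note that the average here is the mean of the per-dataset RER values, not the RER of the two average WERs (29.3 and 12.8), which would give a slightly different number.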

This finding suggests emphasizing zero-shot and out-of-distribution evaluations of models, particularly when attempting to compare to human performance, to avoid overstating the capabilities of machine learning systems due to misleading comparisons.

3.4. Multi-lingual Speech Recognition

In order to compare to prior work on multilingual speech recognition, we report results on two low-data benchmarks: Multilingual LibriSpeech (MLS) (Pratap et al., 2020b) and VoxPopuli (Wang et al., 2021) in Table 3.

Whisper performs well on Multilingual LibriSpeech, outperforming XLS-R (Babu et al., 2021), mSLAM (Bapna