Oversubscribed Command Queues in GPUs

GPGPU’18, Vosendorf, Austria

3

(HSA queues) to launch kernels. However, it is challenging for the

GPU to locate all outstanding works on these queues when it can

only monitor a fixed number of queues at a time. Therefore, the

GPU periodically swaps different queues into the available

hardware queue slots (hereafter referred as hardware queues) and

processes ready tasks on the monitored queues. To ensure all

queues are eventually serviced, the hardware will swap in and out

queues at regular intervals, called the scheduling quantum.

When the number of allocated HSA queues is greater than the

number of hardware queues, the GPU wastes significant time

rotating between all allocated queues in search of ready tasks.

Furthermore, barrier packets that handle task dependencies can

block queues while waiting for prior tasks to complete. In this

situation, it is important for the GPU to choose a scheduling policy

that prioritizes queues having ready tasks. In this work, combining

round-robin and priority-based scheduling with various queue

mapping and unmapping criteria, we evaluate five different

scheduling policies (three round-robin and two priority-based).

3. GPU Command Queue Organization

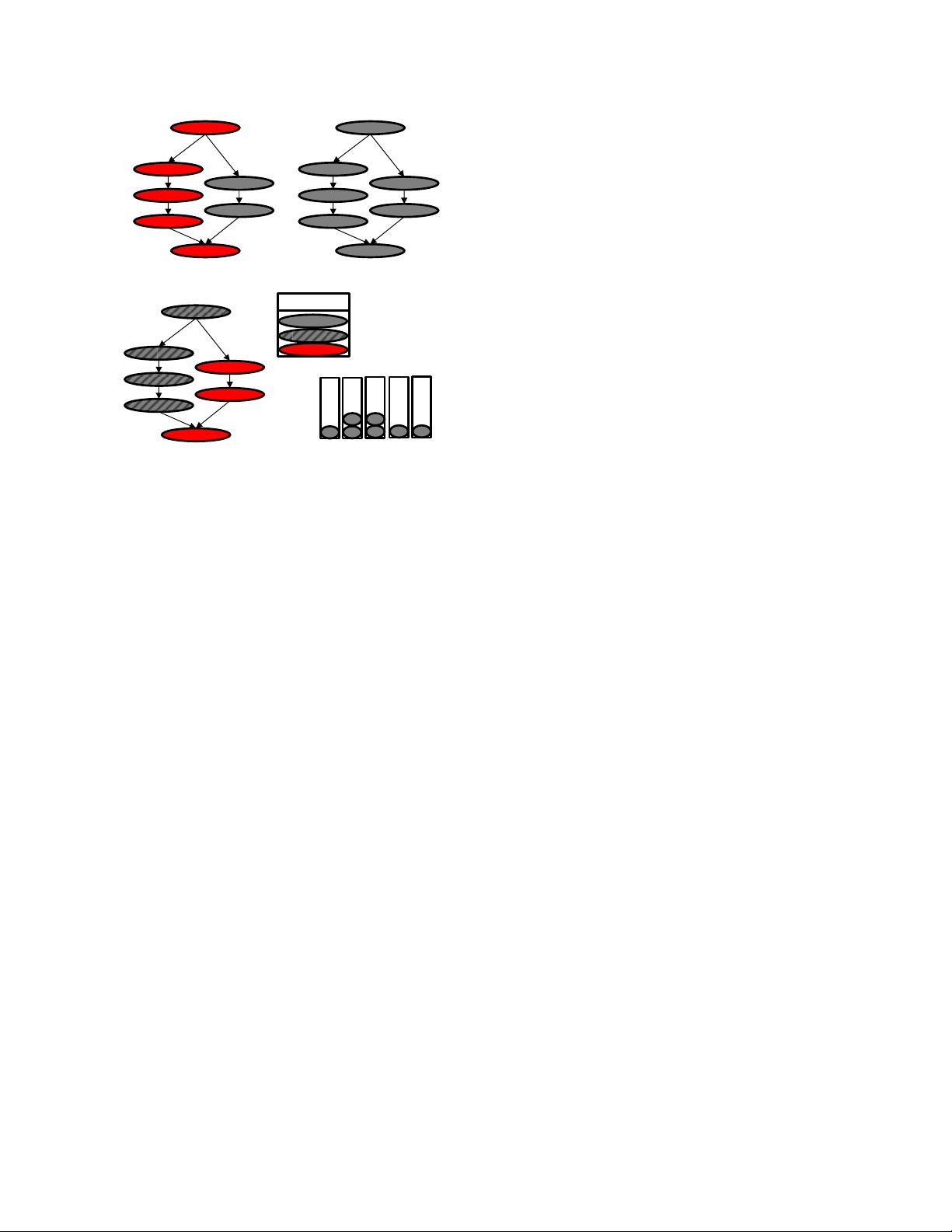

Figure 2 shows the GPU queue scheduling and task dispatching

mechanism used in this paper. The GPU device driver is

responsible for creating queues in the system memory. The driver

also creates a queue list that contains the queue descriptors of all

the queues created by the application. Queue descriptors have all

the necessary information to access HSA queues, such as the

pointer to the base address of a queue. This queue list is passed to

the GPU hardware scheduler during initialization phase. Although

the queue list is read by the hardware scheduler, it is modified only

by the device driver. The hardware scheduler maps and unmaps

queues from the queue list to the hardware list stored inside the

packet processor at each scheduling quantum. The number of

entries in the hardware list is limited and each entry in the hardware

list corresponds to the HSA queue mapped to a hardware queue.

The packet processor monitors these hardware queues, processes

both kernel dispatch and barrier HSA packets, resolves

dependencies expressed by barrier packets and forwards ready

tasks to the dispatcher. The dispatcher then launches these tasks on

the shaders in work-group granularity.

When the HSA queues are oversubscribed, the hardware

scheduler unmaps a queue from the hardware list and maps a new

queue from the queue list. Our baseline hardware scheduler selects

a new queue to be mapped at each scheduling quantum based on a

round-robin policy. However, the newly selected queue may not

have ready tasks, resulting in idling GPU resources. Priority-based

queue scheduling techniques tries to reduce idling by intelligently

mapping a queue with ready tasks. The next section discusses this

priority-based queue scheduling in detail.

4. Priority-Based Queue Scheduling

While HSA can expose more task level concurrency to the GPU, it

shifts the burden of task scheduling to the hardware. In a complex

task graph with hundreds of interdependent tasks, many task queues

can become unblocked at any given time. From a data dependency

standpoint, the task scheduler is free to launch any ready task.

However, a naïve policy may not be able to achieve efficient use of

resources and may leave tasks blocked for long periods of time.

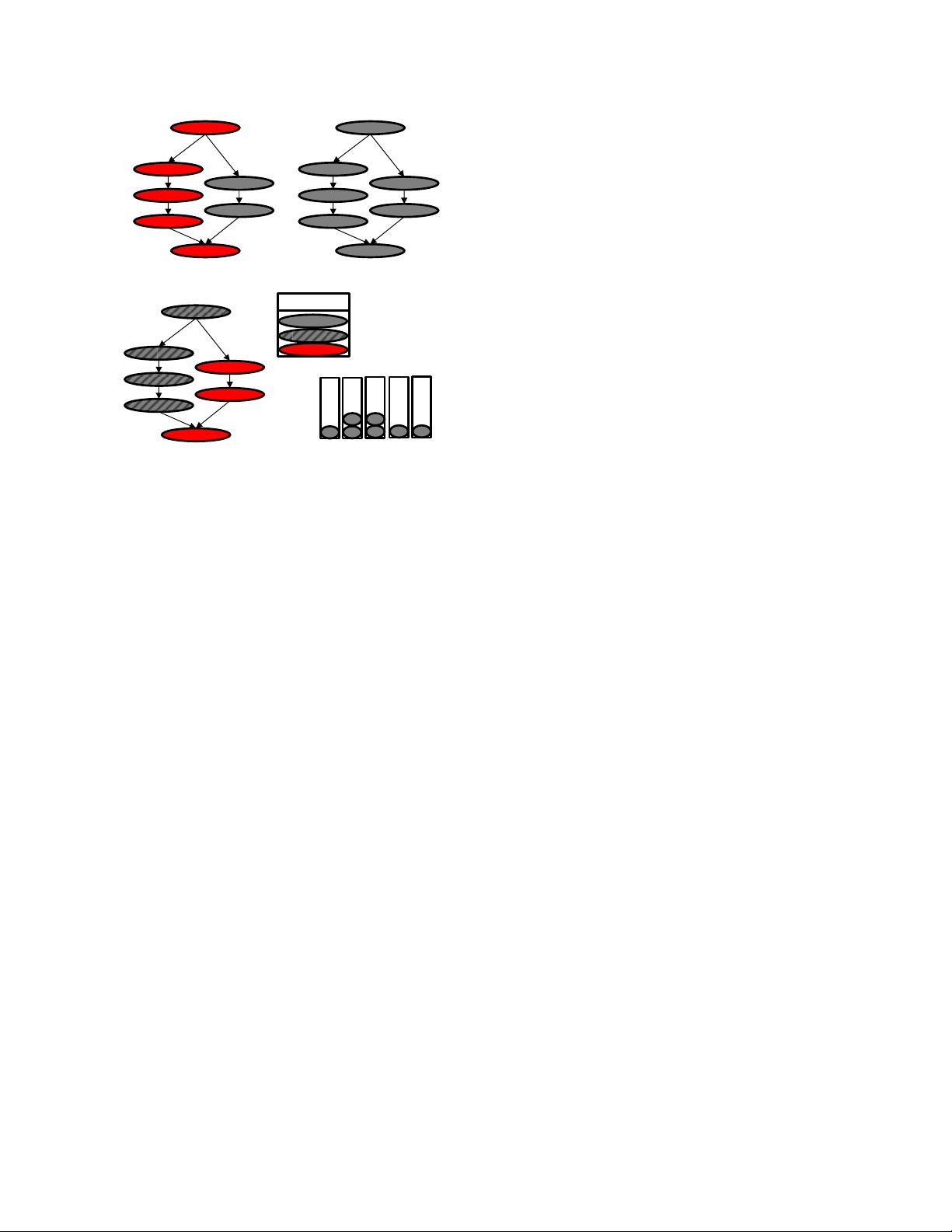

Figure 3 explains the deficiency of the naïve scheduler. Tasks

on the initial critical path of execution are marked in red in Figure

3 (a). After task A is executed, both tasks B and C are ready to

execute. A naïve scheduler could pick either of these two tasks, but

executing task B first is the better scheduling decision because task

B is on the critical path. Task C can be delayed, but Figure 3 (b)

shows delaying the execution of task C until tasks B, D, and F

complete, puts task C on the critical path. A more informed

scheduler would have executed task C before task F.

To make better decisions, a task priority-aware scheduler is

needed. Many techniques have been proposed in literature to

prioritize tasks [15][54]. One such technique is the Heterogeneous

Earliest-Finish-Time (HEFT) algorithm proposed by Topçuoğlu et

al. [54] that computes upward ranks of the tasks and selects the task

with highest upward rank for scheduling. A task’s upward rank is

its distance from an exit node. Figure 3 (c) shows the same task

graph from Figure 3 (a) with each task annotated with their upward

rank. The ranking uses HEFT algorithm but assumes equal

computation time for all tasks. Since the tasks are now annotated

with priorities, a scheduler that is aware of the priorities can make

informed scheduling decisions. For example, after task A is

completed, priority-based scheduler will choose task B to be

scheduled because the rank of task B (rank 4) is higher than task C

(rank 3). In this paper, we use this HEFT algorithm to determine

the priorities of each task.

4.1 Priority-based Hardware Scheduling in HSA

There are three challenges to implement a priority-based task

scheduler, (a) the tasks need to be annotated by a ranking algorithm,

(b) these annotations should be exposed to the scheduler, and (c)

the scheduler should be able to use these rankings when making

scheduling decisions.

Since HSA relies on queues to expose concurrency and schedule

tasks, we modified the HSA queue creation API [Figure 4], to

include a priority field. A queue priority (or rank) is a positive

integer value that can be specified at the time of queue creation and

Figure 3. Task graph execution and priorities.

Barrie

r

C

A

B

D

F

G

E

C (3)

A (5)

B (4)

D (3)

F (2)

G (1)

E (2)

C

A

B

D

F

G

E

(a)

(b)

(c)

completed

to be run

Legend

critical path

G

E

C

B

A

F

D

(d)

Q1

Q2

Q3 Q4

Q5

hsa_queue_create(gpu_agent, queue_size,

HSA_QUEUE_TYPE_SINGLE, NULL, NULL,

UINT32_MAX, UINT32_MAX, q_ptr, priority);

Figure 4. HSA API modifications. priority is added to HSA

queue create API.