Optimizing for TCP

TCP is an adaptive protocol designed to be fair to all network peers and to make the most efficient use of the underlying network. Thus, the best way to optimize TCP is to tune how TCP senses the current network conditions and adapts its behavior based on the type and the requirements of the layers below and above it: wireless networks may need different congestion algorithms, and some applications may need custom quality of service (QoS) semantics to deliver the best experience.

The close interplay of the varying application requirements, and the many knobs in every TCP algorithm makes TCP tuning

and optimization an inexhaustible area of academic and commercial research. In this chapter we have only scratched the

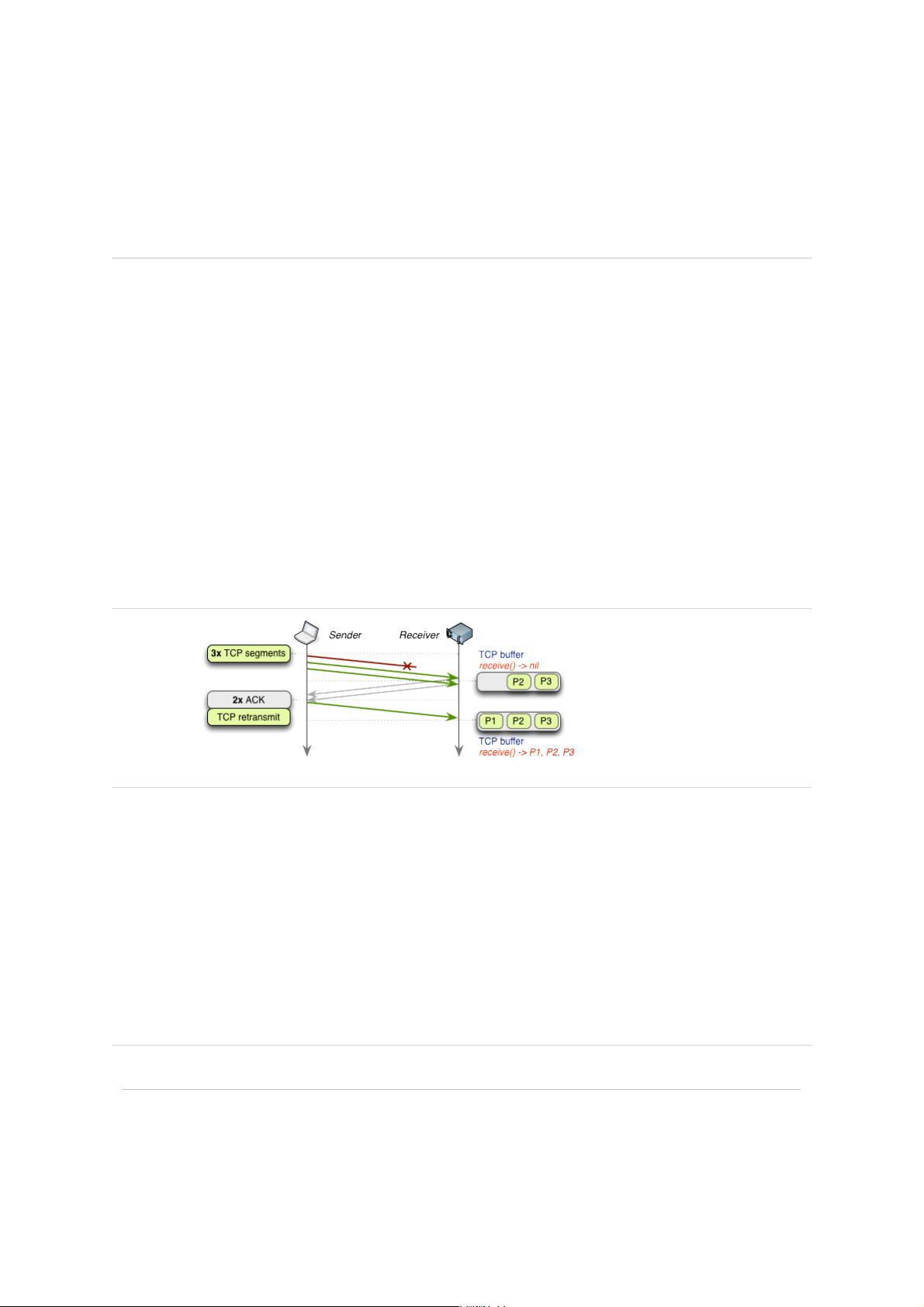

surface of the many factors that govern TCP performance. Additional mechanisms such as selective acknowledgments

(SACK), delayed acknowledgments, and fast retransmit amongst many others make each TCP session much more

complicated (or interesting, depending on your perspective) to understand, analyze, and tune.

Having said that, while the specific details of each algorithm and feedback mechanism will continue to evolve, the core

principles and their implications remain unchanged:

- The TCP three-way handshake introduces a full roundtrip of latency
- TCP slow-start is applied to every new connection
- TCP flow and congestion control regulate the throughput of all connections
- TCP throughput is regulated by the current congestion window size
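To make the cost of the handshake and slow-start concrete, the following is a minimal sketch under idealized assumptions: no packet loss, no receive-window limit, and a congestion window that doubles on every roundtrip. The function names, the 1,460-byte segment size, and the default initial window of 10 segments are illustrative choices, not values taken from this chapter.

```python
import math


def roundtrips_to_send(total_bytes, initial_cwnd_segments=10, mss_bytes=1460):
    """Count roundtrips needed to deliver total_bytes under idealized slow-start.

    Assumes no loss and a congestion window that doubles every roundtrip,
    which is a simplification of real TCP behavior.
    """
    segments_left = math.ceil(total_bytes / mss_bytes)
    cwnd = initial_cwnd_segments
    roundtrips = 0
    while segments_left > 0:
        segments_left -= cwnd  # one full window is delivered per roundtrip
        cwnd *= 2              # slow-start doubles the window each roundtrip
        roundtrips += 1
    return roundtrips


def fetch_time_ms(total_bytes, rtt_ms, initial_cwnd_segments=10):
    """Handshake (one roundtrip) plus the data roundtrips, in milliseconds."""
    return (1 + roundtrips_to_send(total_bytes, initial_cwnd_segments)) * rtt_ms


if __name__ == "__main__":
    for iw in (4, 10):
        print(iw, roundtrips_to_send(65536, iw), fetch_time_ms(65536, 56, iw))
```

Under these assumptions, a 64 KB transfer needs one fewer roundtrip when the initial window is 10 segments instead of 4, and every roundtrip saved is a full RTT removed from the transfer, regardless of the available bandwidth.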

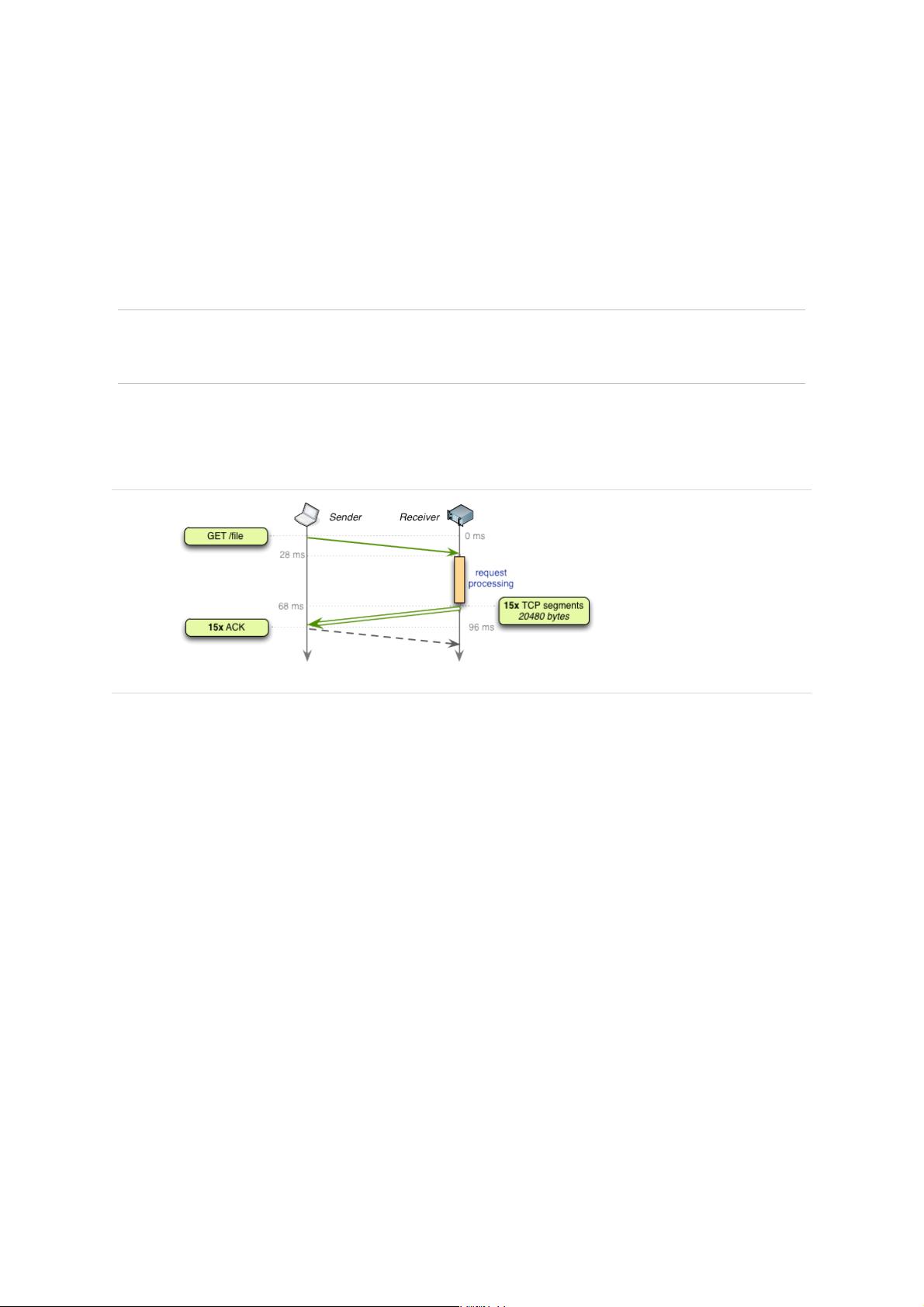

As a result, the rate at which a TCP connection can transfer data in modern high-speed networks is often limited by the roundtrip time between the receiver and sender. Further, while bandwidth continues to increase, latency is bounded by the speed of light and is already within a small constant factor of its theoretical minimum. In most cases, latency, not bandwidth, is the bottleneck for TCP (see Figure 2-5).
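The relationship between window size, roundtrip time, and throughput can be sketched with a little arithmetic: a sender can have at most one window of data in flight per roundtrip, so throughput is bounded by the window size divided by the RTT. The helper names and the sample numbers below are illustrative assumptions, not measurements.

```python
def max_throughput_mbps(window_bytes, rtt_ms):
    """Upper bound on throughput: at most one window of data per roundtrip."""
    bits_per_second = window_bytes * 8 * 1000 / rtt_ms
    return bits_per_second / 1_000_000


def required_window_bytes(bandwidth_mbps, rtt_ms):
    """Window size needed to keep a link of the given bandwidth full."""
    return bandwidth_mbps * 1_000_000 * rtt_ms / 1000 / 8


if __name__ == "__main__":
    # A 65,535-byte window over a 100 ms roundtrip
    print(max_throughput_mbps(65535, 100))
    # Window needed to saturate a 10 Mbps link at 100 ms
    print(required_window_bytes(10, 100))
```

Note that the bound depends only on the window and the RTT: adding bandwidth does not raise it, while halving the roundtrip time doubles it.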

Tuning server configuration

As a starting point, before tuning any of the dozens of buffer and timeout variables in TCP, you are much better off simply upgrading your hosts to their latest system versions. TCP best practices and the underlying algorithms that govern its performance continue to evolve, and most of these changes are available only in the latest kernels. In short, keep your servers up-to-date to ensure the optimal interaction between the sender and receiver’s TCP stacks.
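Before changing anything, it helps to know what a host is currently running. The following is a minimal sketch that reports the kernel release and the congestion control settings, assuming a Linux host that exposes TCP settings under `/proc/sys/net/ipv4`. The helper names and the `base` parameter are hypothetical; on other platforms the settings are simply reported as `None`.

```python
import platform
from pathlib import Path


def read_tcp_setting(name, base="/proc/sys/net/ipv4"):
    """Return the named setting as a string, or None if it is not available."""
    try:
        return (Path(base) / name).read_text().strip()
    except OSError:
        # Not Linux, or the kernel does not expose this setting
        return None


def tcp_report(base="/proc/sys/net/ipv4"):
    """Collect the kernel release and the congestion control configuration."""
    return {
        "kernel": platform.release(),
        "congestion_control": read_tcp_setting("tcp_congestion_control", base),
        "available_congestion_control": read_tcp_setting(
            "tcp_available_congestion_control", base
        ),
    }


if __name__ == "__main__":
    for key, value in tcp_report().items():
        print(f"{key}: {value}")
```

Running the script before and after an upgrade gives a quick record of which kernel and which congestion control algorithm each server actually uses.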

With the latest kernel in place, ensure that your server is configured to use the following best practices:

“Increasing TCP’s Initial Congestion Window”

A larger starting congestion window allows TCP to transfer more data in the first roundtrip, and significantly accelerates the [...]

[...] order delivery. Incidentally, this is also why WebRTC uses UDP as its base transport.

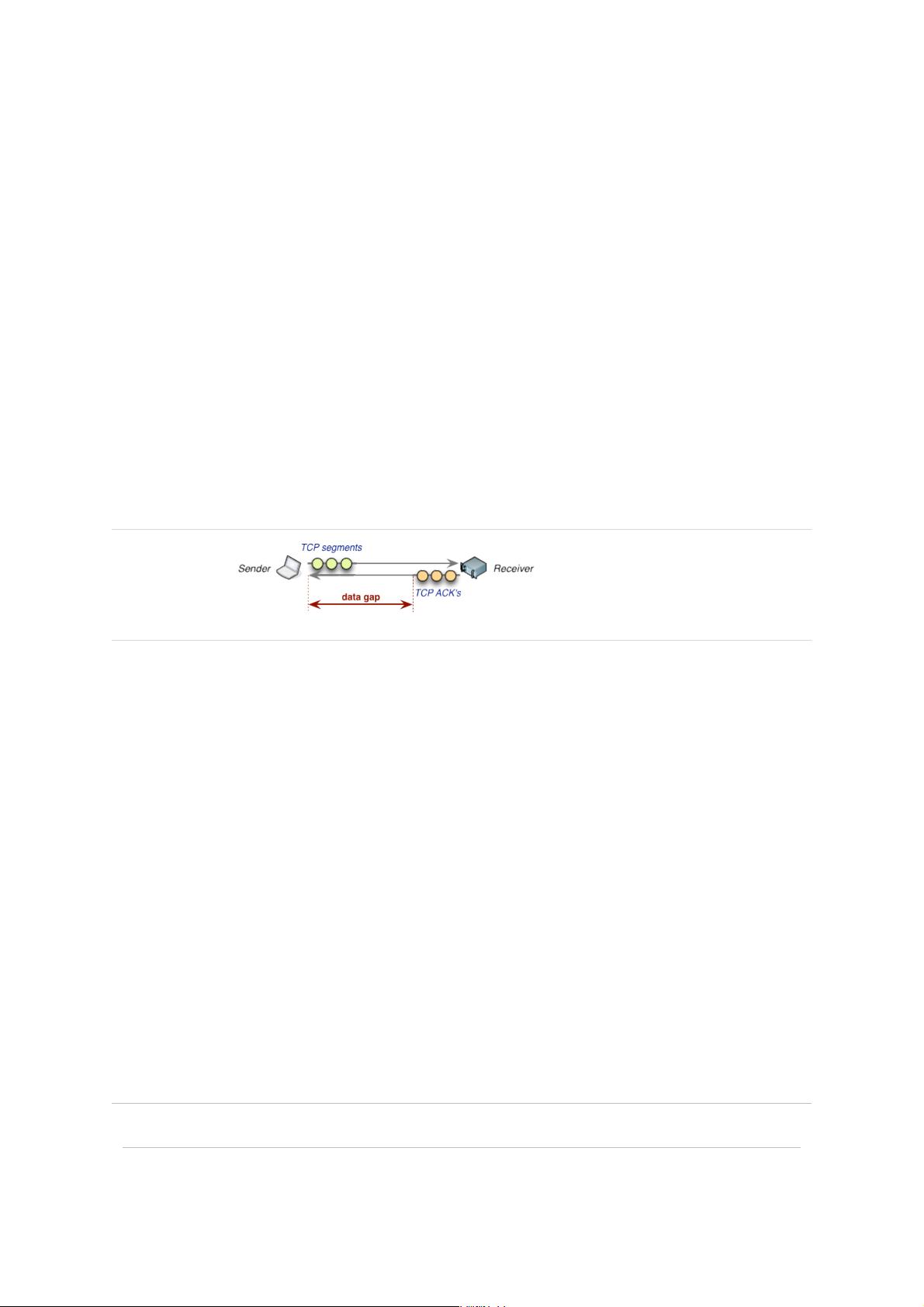

If a packet is lost, then the audio codec can simply insert a minor break in the audio and continue processing the incoming packets. If the gap is small, the user may not even notice, and waiting for the lost packet runs the risk of introducing variable pauses in audio output, which would result in a much worse experience for the user.
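The idea of inserting a short break instead of waiting can be sketched as follows. This is a simplified illustration, not how any particular codec works: frames are assumed to carry a sequence number and a fixed size, and the `playout` function is a hypothetical helper that substitutes zero-filled silence for any frame that never arrived.

```python
def playout(received, first_seq, frame_count, frame_size):
    """Build the playout stream, substituting silence for missing frames.

    received maps a sequence number to its frame payload (bytes of
    length frame_size). Missing sequence numbers are treated as lost.
    """
    silence = bytes(frame_size)  # zero-filled frame stands in for a lost packet
    out = bytearray()
    for seq in range(first_seq, first_seq + frame_count):
        out += received.get(seq, silence)
    return bytes(out)


if __name__ == "__main__":
    # Frame 1 was lost in transit; playback continues without stalling.
    frames = {0: b"\x01\x01", 2: b"\x03\x03"}
    print(playout(frames, first_seq=0, frame_count=3, frame_size=2))
```

The output keeps its timing: the lost frame costs one frame of silence rather than a pause of unpredictable length.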

Similarly, if we are delivering game state updates for a character in a 3D world, then waiting for a packet describing their state at time T-1, when we already have the packet for time T, is often simply unnecessary. Ideally, we would receive each and every update, but to avoid gameplay delays, we can accept intermittent loss in favor of lower latency.
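A receiver that prefers fresh state over complete state can be sketched in a few lines. The class below is a hypothetical illustration that assumes each update carries a monotonically increasing sequence number: newer updates replace the current state, while late or duplicate updates are dropped instead of being waited for.

```python
class LatestStateReceiver:
    """Keep only the newest state update; ignore stale or duplicate ones."""

    def __init__(self):
        self.latest_seq = -1  # no update seen yet
        self.state = None

    def on_packet(self, seq, state):
        """Apply the update if it is newer; return True if it was applied."""
        if seq <= self.latest_seq:
            return False  # older than what we already have: drop it
        self.latest_seq = seq
        self.state = state
        return True


if __name__ == "__main__":
    receiver = LatestStateReceiver()
    receiver.on_packet(1, {"x": 0, "y": 0})
    receiver.on_packet(3, {"x": 2, "y": 1})
    # The update for time 2 arrives late and is ignored.
    print(receiver.on_packet(2, {"x": 1, "y": 0}), receiver.state)
```

The trade-off is explicit: the receiver never blocks on a missing update, at the cost of occasionally skipping an intermediate state.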

On the surface, upgrading server kernel versions seems like trivial advice. However, in practice, it is often met with significant resistance: many existing servers are tuned for specific kernel versions, and system administrators are reluctant to perform the upgrade.

To be fair, every upgrade brings its risks, but to get the best TCP performance, it is also likely the single best investment you can make.