Real-Time Grasp Detection Using Convolutional Neural Networks

Joseph Redmon¹, Anelia Angelova²

Abstract: We present an accurate, real-time approach to robotic grasp detection based on convolutional neural networks. Our network performs single-stage regression to graspable bounding boxes without using standard sliding window or region proposal techniques. The model outperforms state-of-the-art approaches by 14 percentage points and runs at 13 frames per second on a GPU. Our network can simultaneously perform classification so that in a single step it recognizes the object and finds a good grasp rectangle. A modification to this model predicts multiple grasps per object by using a locally constrained prediction mechanism. The locally constrained model performs significantly better, especially on objects that can be grasped in a variety of ways.
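The abstract does not describe how the locally constrained prediction mechanism works internally. As a purely hypothetical sketch, assume the image is divided into a grid, each cell outputs a confidence that it contains a good grasp together with one grasp rectangle, and the most confident cells are kept. The names `CellPrediction` and `select_grasps`, the grid layout, and the confidence threshold are illustrative assumptions, not the authors' design.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CellPrediction:
    """Hypothetical output of one grid cell: a confidence plus one grasp rectangle."""
    confidence: float  # assumed probability that this cell contains a good grasp
    x: float           # rectangle centre, x (pixels)
    y: float           # rectangle centre, y (pixels)
    theta: float       # rectangle orientation (radians)
    width: float
    height: float


def select_grasps(grid: List[List[CellPrediction]], k: int = 1,
                  threshold: float = 0.0) -> List[CellPrediction]:
    """Return up to k cell predictions with confidence >= threshold, most confident first."""
    candidates = [cell for row in grid for cell in row if cell.confidence >= threshold]
    candidates.sort(key=lambda cell: cell.confidence, reverse=True)
    return candidates[:k]


if __name__ == "__main__":
    # A toy 2 x 2 grid; every number here is made up for illustration.
    grid = [
        [CellPrediction(0.10, 30, 30, 0.0, 20, 10), CellPrediction(0.90, 90, 30, 0.5, 25, 12)],
        [CellPrediction(0.40, 30, 90, 1.2, 18, 9), CellPrediction(0.75, 90, 90, -0.3, 22, 11)],
    ]
    for grasp in select_grasps(grid, k=2, threshold=0.5):
        print(grasp)
```

Keeping several high-confidence cells, rather than a single global answer, is one way a model could report multiple grasps for objects that can be held in more than one place.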

I. INTRODUCTION

Perception, using the senses (or sensors if you are a robot) to understand your environment, is hard. Visual perception involves mapping pixel values and light information onto a model of the universe to infer your surroundings. General scene understanding requires complex visual tasks such as segmenting a scene into component parts, recognizing what those parts are, and disambiguating between visually similar objects. Due to these complexities, visual perception is a large bottleneck in real robotic systems.

General-purpose robots need the ability to interact with and manipulate objects in the physical world. Humans see novel objects and know immediately, almost instinctively, how they would grab them to pick them up. Robotic grasp detection lags far behind human performance. We focus on the problem of finding a good grasp given an RGB-D view of the object.

We evaluate on the Cornell Grasp Detection Dataset, an extensive dataset with numerous objects and ground-truth labelled grasps (see Figure 1). Recent work on this dataset runs at 13.5 seconds per frame with an accuracy of 75 percent [1] [2]. This translates to a 13.5-second delay between a robot viewing a scene and finding where to move its grasper.

The most common approach to grasp detection is a sliding window detection framework. The sliding window approach uses a classifier to determine whether small patches of an image constitute good grasps for an object in that image. This type of system requires applying the classifier at numerous locations in the image. Patches that score highly are considered good potential grasps.
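A minimal sketch of the sliding window scheme described above, using plain Python lists for a single-channel image. `score_patch` stands in for a trained grasp classifier, and `mean_intensity` is a toy scorer included only so the example runs; both are hypothetical. Square patches, a fixed stride, and a fixed threshold are simplifying assumptions; a practical system would also vary patch orientation and scale.

```python
from typing import Callable, List, Sequence, Tuple

Image = Sequence[Sequence[float]]


def sliding_window_grasps(
    image: Image,
    patch_size: int,
    stride: int,
    score_patch: Callable[[List[List[float]]], float],
    threshold: float,
) -> List[Tuple[int, int, float]]:
    """Score every square patch; return (row, col, score) for patches at or above threshold."""
    height, width = len(image), len(image[0])
    hits = []
    for row in range(0, height - patch_size + 1, stride):
        for col in range(0, width - patch_size + 1, stride):
            patch = [list(r[col:col + patch_size]) for r in image[row:row + patch_size]]
            score = score_patch(patch)  # one classifier call per window position
            if score >= threshold:
                hits.append((row, col, score))
    # The highest-scoring patches are treated as the best grasp candidates.
    hits.sort(key=lambda hit: hit[2], reverse=True)
    return hits


def mean_intensity(patch: List[List[float]]) -> float:
    """Toy stand-in scorer: the average pixel value of the patch."""
    values = [v for r in patch for v in r]
    return sum(values) / len(values)


if __name__ == "__main__":
    image = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
    print(sliding_window_grasps(image, patch_size=2, stride=2,
                                score_patch=mean_intensity, threshold=0.5))
```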

We take a different approach: we apply a single network once to an image and predict grasp coordinates directly. Our network is comparatively large, but because we apply it only once per image rather than at many patch locations, we get a massive performance boost.
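To make the cost difference concrete, the helper below counts classifier evaluations for a single-scale sliding window. The image size, patch size, stride, and number of orientations in the usage lines are hypothetical values chosen for illustration, not settings from this paper or from [1] [2].

```python
def sliding_window_evaluations(height: int, width: int, patch: int, stride: int,
                               orientations: int = 1) -> int:
    """Count classifier calls for one scale of a square sliding window."""
    if patch > height or patch > width:
        return 0  # the patch does not fit anywhere in the image
    rows = (height - patch) // stride + 1  # window positions down the image
    cols = (width - patch) // stride + 1   # window positions across the image
    return rows * cols * orientations


if __name__ == "__main__":
    per_orientation = sliding_window_evaluations(224, 224, 24, 8)
    with_rotations = sliding_window_evaluations(224, 224, 24, 8, orientations=8)
    print(per_orientation, with_rotations, "classifier calls vs. 1 network pass")
```

For a 224 × 224 image with 24-pixel patches and a stride of 8, this gives 26 × 26 = 676 windows per orientation, or 5,408 with 8 orientations, compared with a single forward pass. Each windowed call is usually cheaper than one pass of a large network, so the count alone does not fix the runtime ratio.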

¹University of Washington
²Google Research

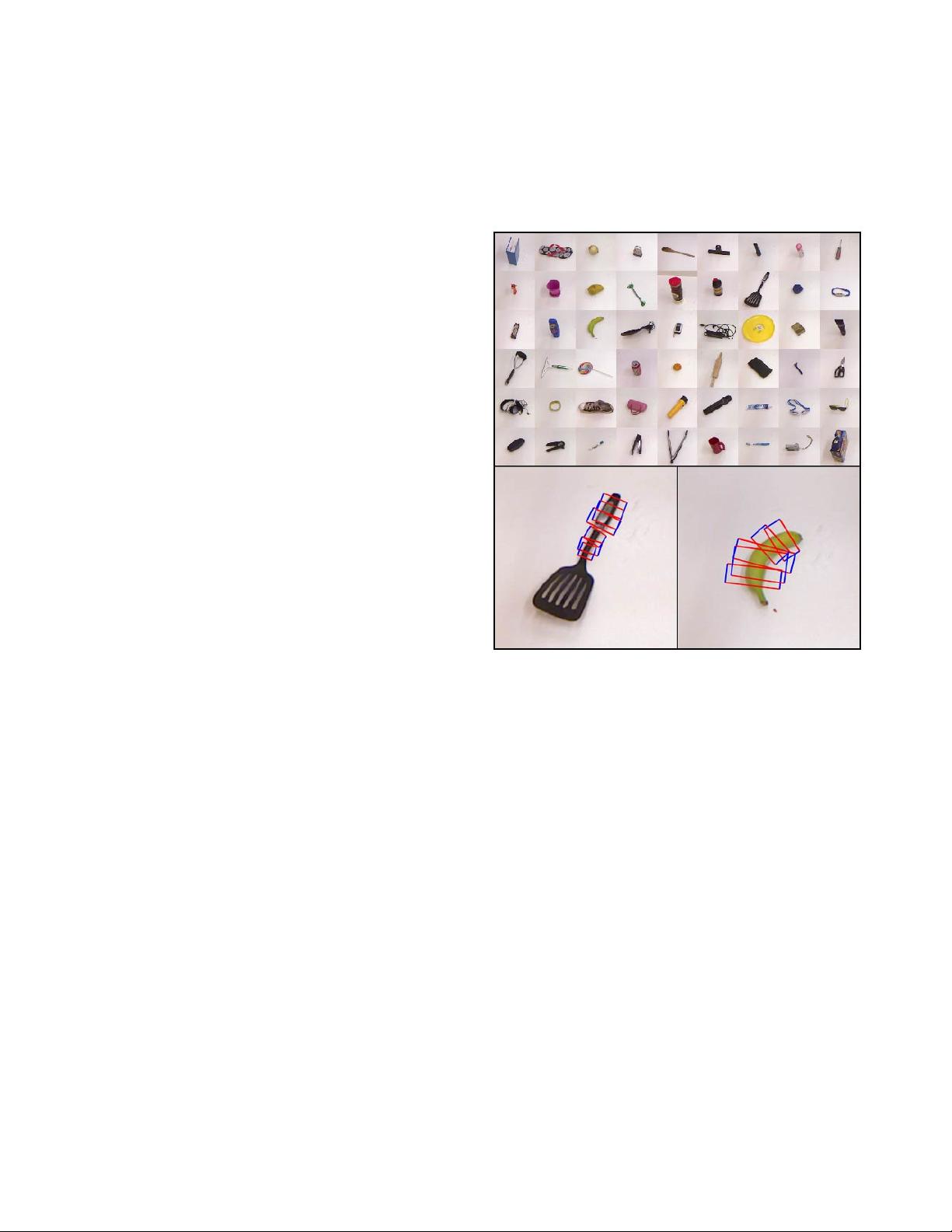

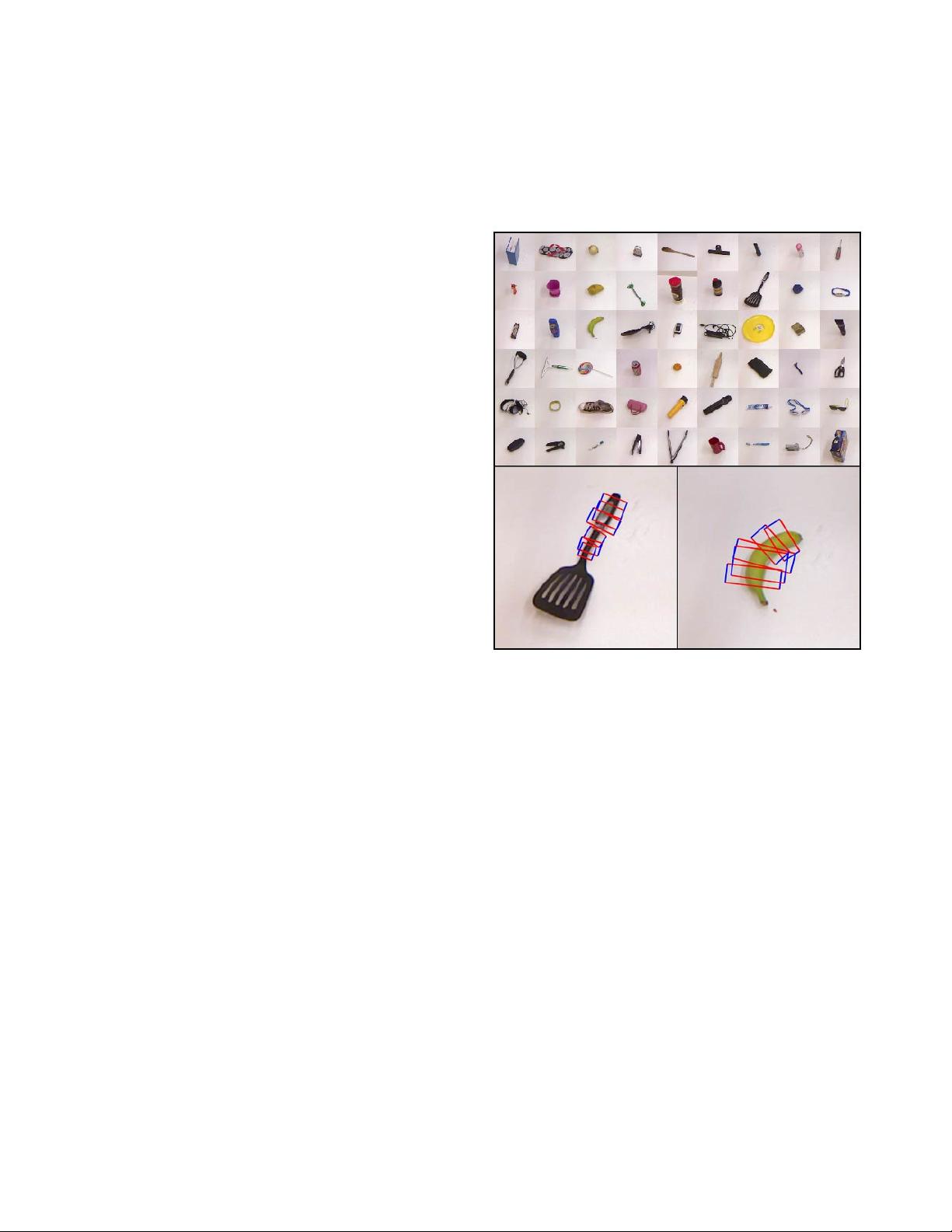

Fig. 1. The Cornell Grasping Dataset contains a variety of objects, each with multiple labelled grasps. Grasps are given as oriented rectangles in 2-D.
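Fig. 1 describes grasps as oriented rectangles in 2-D. One common way to represent such a rectangle is by its centre, width, height, and rotation angle; the sketch below assumes that parameterisation (the encoding used by the network is not given in this section) and recovers the four corner points. The function name is hypothetical, angles are in radians, and the rotation is applied in whatever image coordinate frame the caller uses.

```python
import math
from typing import List, Tuple


def grasp_rectangle_corners(cx: float, cy: float, width: float, height: float,
                            theta: float) -> List[Tuple[float, float]]:
    """Corners of a width x height rectangle centred at (cx, cy), rotated by theta radians."""
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half_w, half_h = width / 2.0, height / 2.0
    # Corners in the rectangle's own frame, listed in order around the perimeter.
    local = [(-half_w, -half_h), (half_w, -half_h), (half_w, half_h), (-half_w, half_h)]
    # Rotate each corner by theta, then translate it to the rectangle centre.
    return [(cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t) for dx, dy in local]


if __name__ == "__main__":
    for corner in grasp_rectangle_corners(50.0, 40.0, 30.0, 10.0, math.pi / 6):
        print(corner)
```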

Instead of looking only at local patches, our network uses global information in the image to inform its grasp predictions, making it significantly more accurate. Our network achieves 88 percent accuracy and runs at real-time speeds (13 frames per second). This redefines the state of the art for RGB-D grasp detection.
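The speed comparison reduces to simple arithmetic: 13 frames per second is 1/13 ≈ 0.077 seconds per frame, and 13.5 s ÷ (1/13 s) = 13.5 × 13 = 175.5, so the new system is roughly 175 times faster per frame. The sketch below only reproduces that calculation (the function name is hypothetical); it does not account for differences in the hardware behind the two measurements, which this section does not specify for [1] [2].

```python
def compare_latency(previous_seconds_per_frame: float,
                    new_frames_per_second: float) -> tuple:
    """Return (new seconds per frame, speedup factor) for two throughput figures."""
    new_seconds_per_frame = 1.0 / new_frames_per_second
    # speedup = old time per frame / new time per frame = old seconds * new fps
    speedup = previous_seconds_per_frame * new_frames_per_second
    return new_seconds_per_frame, speedup


if __name__ == "__main__":
    seconds, speedup = compare_latency(13.5, 13.0)
    print(f"{seconds:.3f} s per frame, {speedup:.1f}x faster")
```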

II. RELATED WORK

Significant past work uses 3-D simulations to find good grasps [3] [4] [5] [6] [7]. These approaches are powerful but rely on a full 3-D model and other physical information about an object to find an appropriate grasp. Full object models are often not known a priori. General-purpose robots may need to grasp novel objects without first building complex 3-D models of the object.

Robotic systems increasingly leverage RGB-D sensors and data for tasks like object recognition [8], detection [9] [10], and mapping [11] [12]. RGB-D sensors like the Kinect are cheap, and the extra depth information is invaluable for robots that interact with a 3-D environment.

Recent work on grasp detection focusses on the problem

2015 IEEE International Conference on Robotics and Automation (ICRA)

Washington State Convention Center

Seattle, Washington, May 26-30, 2015

978-1-4799-6923-4/15/$31.00 ©2015 IEEE 1316