conv5_3:

-0.0 199.9

1

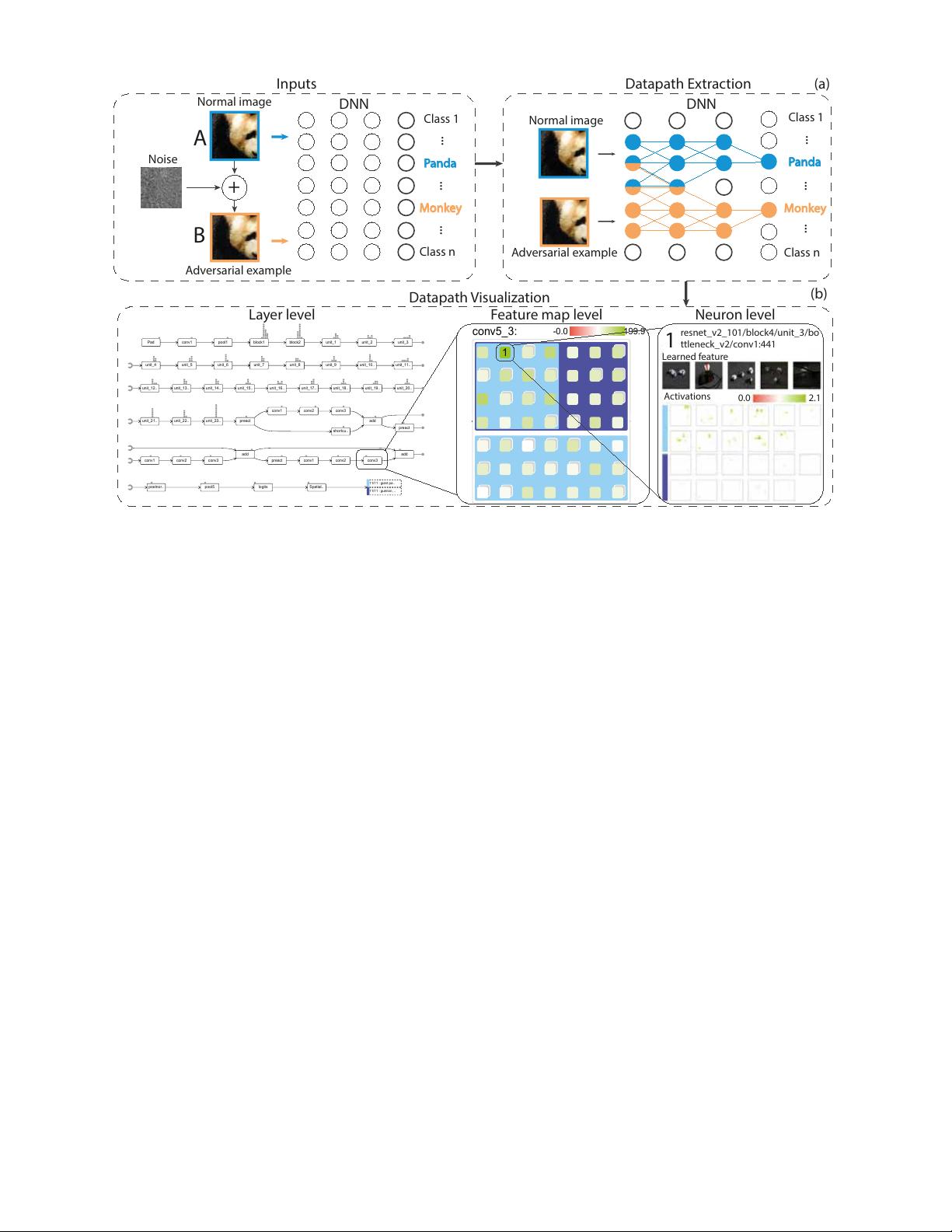

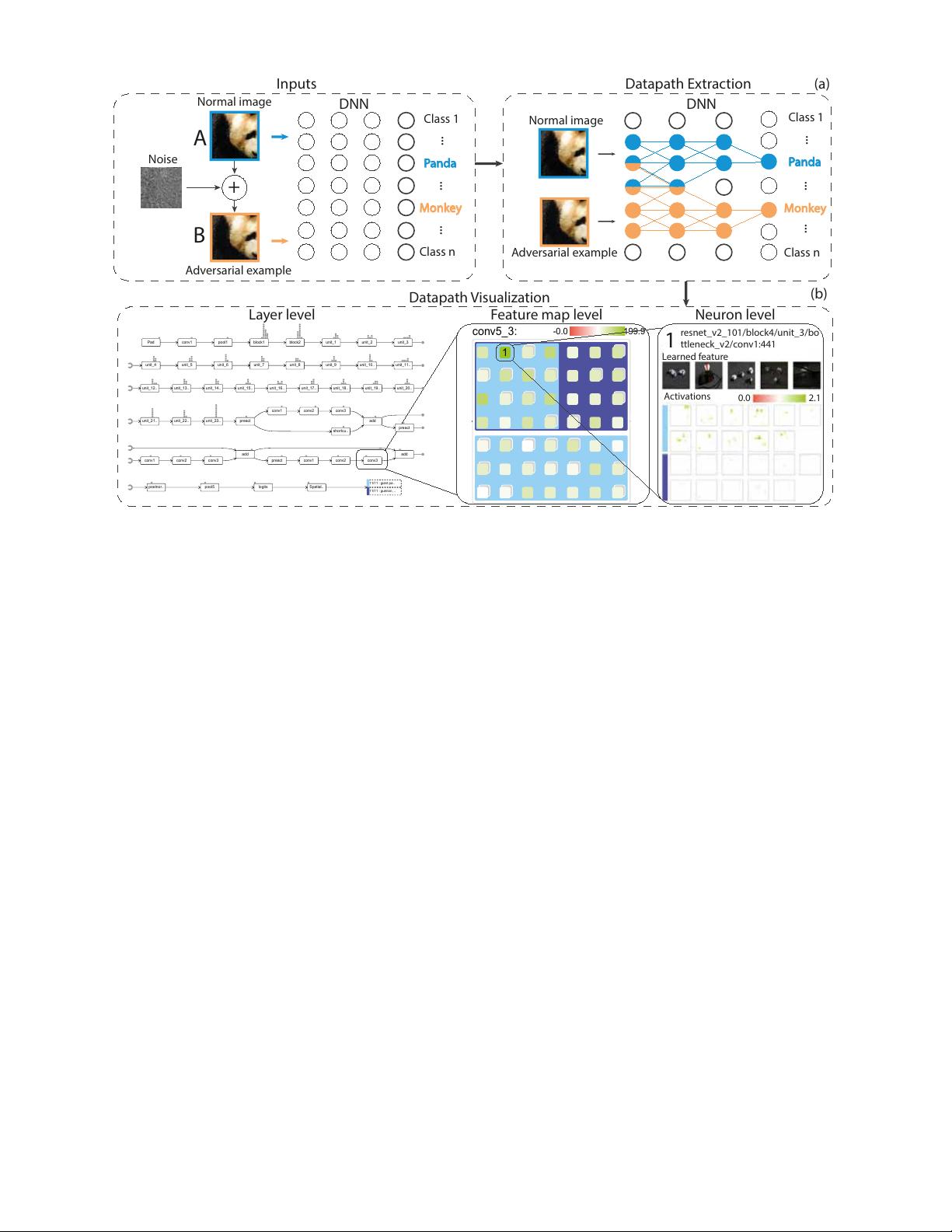

Inputs

Datapath Extraction

Datapath Visualization

+

Noise

Normal image

Adversarial example

DNN

Panda

Monkey

Normal image

Adversarial example

Feature map levelLayer level Neuron level

Class 1

Class n

...

...

Panda

Monkey

Class 1

Class n

...

...

...

...

DNN

(a)

(b)

A

B

Pad

11/11 : giant pa..

11/11 : guenon, ..

resnet_v2_101/block4/unit_3/bo

ttleneck_v2/conv1:441

Activations

Learned feature

1

0.0 2.1

Figure 2: AEVis contains two modules: (a) a datapath extraction module and (b) a datapath visualization module that illustrates datapaths in multiple levels: layer level, feature map level, and neuron level.

3 THE DESIGN OF AEVIS

3.1 Motivation

AEVis was developed in collaboration with the machine learning team that won first place in the NIPS 2017 non-targeted adversarial attack and targeted adversarial attack competitions, which aim at attacking CNNs [15, 51]. Despite their promising results, the experts found that their research process was inefficient and inconvenient, especially when explaining the model outputs. A central step in this process is explaining the misclassification introduced by adversarial examples. Understanding why an error has been made helps the experts detect the weaknesses of the model and design more effective attacking/defending approaches. To this end, they want to understand the roles of the neurons and their connections in prediction. Because there are millions of neurons in a CNN, examining all neurons and their connections is prohibitive. When analyzing the prediction of a set of examples, the experts usually extract and examine the critical neurons and their connections, which are referred to as datapaths in their field.

To extract datapaths, the experts often treat the most activated neurons as the critical neurons [62]. However, they are not satisfied with this activation-based approach because it may produce misleading results. For instance, consider an image in which highly recognizable secondary objects are mixed with the main object. The activations of the neurons that detect the secondary objects are also large; however, the experts are not interested in them because these neurons are often irrelevant to the prediction of the main object. Currently, the experts have to rely on their knowledge to manually ignore these neurons in the analysis process.
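
The weakness of activation-based selection can be seen in a small sketch. This is only an illustration under stated assumptions, not the experts' actual code: the helper `top_k_activated`, the neuron names, and the activation values are all hypothetical, chosen to show how a neuron that responds strongly to a secondary object ends up among the "critical" neurons.

```python
from typing import Dict, List


def top_k_activated(activations: Dict[str, float], k: int) -> List[str]:
    """Return the names of the k most activated neurons (hypothetical helper)."""
    ranked = sorted(activations, key=lambda name: activations[name], reverse=True)
    return ranked[:k]


# Made-up activations for an image that mixes a main object with a
# highly recognizable secondary object.
activations = {
    "main_object_face": 2.1,
    "main_object_body": 1.8,
    "secondary_object": 1.9,  # large activation, yet irrelevant to the main prediction
    "background": 0.2,
}

critical = top_k_activated(activations, k=3)
print(critical)
```

In this toy setting the neuron for the secondary object is ranked among the top three, so an analyst would still have to discard it by hand, which is the manual step the experts describe.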

After extracting datapaths, the experts examine them to understand their roles in prediction. Currently, they utilize discrepancy maps [64], heat maps [62], and weight visualization [18] for this purpose. Although these methods can help the experts at the neuron level, they commented that an effective exploration mechanism was lacking, one that would enable them to investigate the extracted datapaths from high-level layers down to individual neurons.

3.2 Requirement Analysis

To collect the requirements of our tool, we follow the human-centered design process [9, 32], which involves two experts (E1 and E2) from the winning team of the NIPS 2017 competition. The design process consists of several iterations. In each iteration, we present the developed prototype to the experts, probe further requirements, and modify our tool accordingly. We have identified the following high-level requirements in this process. Among these requirements, R2 and R3 are the two initial requirements, while R1 and R4 were gradually identified during development.

R1 - Extracting the datapath for a set of examples of interest. Both experts expressed the need for extracting the datapath of an example, which serves as the basis for analyzing why an adversarial example is misclassified. In a CNN, different neurons learn to detect different features [62]. Thus, the neurons play different roles in the prediction of an example. E1 said that analyzing the datapath can greatly save experts’ effort because they are able to focus on the critical neurons instead of examining all neurons. Besides the datapath for individual examples, E1 emphasized the need for extracting the common datapath for a set of examples of the same class. He commented that the datapath of one example is sometimes not representative of the image class. For example, given an image of a panda’s face, the extracted datapath will probably not include the neuron detecting the body of a panda, which is also a very important feature for classifying a panda.
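
E1's point about representativeness can be illustrated with a toy sketch. It assumes, purely for illustration, that each example's datapath is available as a set of neuron names and that a "common" datapath keeps every neuron that is critical for at least a chosen fraction of the examples. This voting rule, the function name `common_datapath`, and the neuron names are hypothetical; they are not the extraction method used by AEVis.

```python
from collections import Counter
from typing import Iterable, Set


def common_datapath(datapaths: Iterable[Set[str]], min_fraction: float) -> Set[str]:
    """Keep neurons that are critical in at least min_fraction of the examples.

    Hypothetical voting rule, used only to illustrate the requirement.
    """
    paths = list(datapaths)
    counts = Counter(neuron for path in paths for neuron in path)
    threshold = min_fraction * len(paths)
    return {neuron for neuron, count in counts.items() if count >= threshold}


# Made-up per-example datapaths for three images of the same class.
face_only = {"panda_face", "panda_ear"}   # close-up of a panda's face
full_body = {"panda_face", "panda_body"}
side_view = {"panda_body", "panda_ear"}

common = common_datapath([face_only, full_body, side_view], min_fraction=0.5)
print(sorted(common))
```

The face-only example misses the body neuron on its own, while the datapath aggregated over the set includes it, which is the gap a class-level datapath is meant to close.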

R2 - Providing an overview of the datapath. In a large CNN, a datapath often contains millions of neurons and connections. Directly presenting all neurons in a datapath will induce severe visual clutter. Thus, it is necessary to provide experts with an overview of a datapath. E1 commented, “I cannot examine all the neurons in a datapath because there are too many of them. In the examining process, I often start by selecting an important layer based on my knowledge, and examine the neurons in that layer to analyze the learned features and the activations of these neurons. The problem of this method is when dealing with a new architecture, I may not know which layer to