Supervised Descent Method and its Applications to Face Alignment

Xuehan Xiong Fernando De la Torre

The Robotics Institute, Carnegie Mellon University, Pittsburgh PA, 15213

xxiong@andrew.cmu.edu ftorre@cs.cmu.edu

Abstract

Many computer vision problems (e.g., camera calibration, image alignment, structure from motion) are solved through nonlinear optimization methods. It is generally accepted that 2nd order descent methods are the most robust, fast and reliable approaches for nonlinear optimization of a general smooth function. However, in the context of computer vision, 2nd order descent methods have two main drawbacks: (1) The function might not be analytically differentiable and numerical approximations are impractical. (2) The Hessian might be large and not positive definite. To address these issues, this paper proposes a Supervised Descent Method (SDM) for minimizing a Non-linear Least Squares (NLS) function. During training, the SDM learns a sequence of descent directions that minimizes the mean of NLS functions sampled at different points. In testing, SDM minimizes the NLS objective using the learned descent directions without computing the Jacobian or the Hessian. We illustrate the benefits of our approach in synthetic and real examples, and show how SDM achieves state-of-the-art performance in the problem of facial feature detection. The code is available at www.humansensing.cs.cmu.edu/intraface.

1. Introduction

Mathematical optimization has a fundamental impact in solving many problems in computer vision. This is apparent from a quick look at any major computer vision conference, where a significant number of papers use optimization techniques. Many important problems in computer vision, such as structure from motion, image alignment, optical flow, or camera calibration, can be posed as solving a nonlinear optimization problem. There are a large number of different approaches to solve these continuous nonlinear optimization problems based on first and second order methods, such as gradient descent [1] for dimensionality reduction, Gauss-Newton for image alignment [22, 5, 14] or Levenberg-Marquardt for structure from motion [8].

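[Figure 1 embedded text: the quote “I am hungry. Where is the apple? Gotta do Gradient descent”; the objective f(x), a squared difference between h(x) and y; in panel (b), three objectives f(x, y), one per image (indexed 1 to 3), each with its own minimum x∗ and an update ∆x equal to R_k times an image-specific component.]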

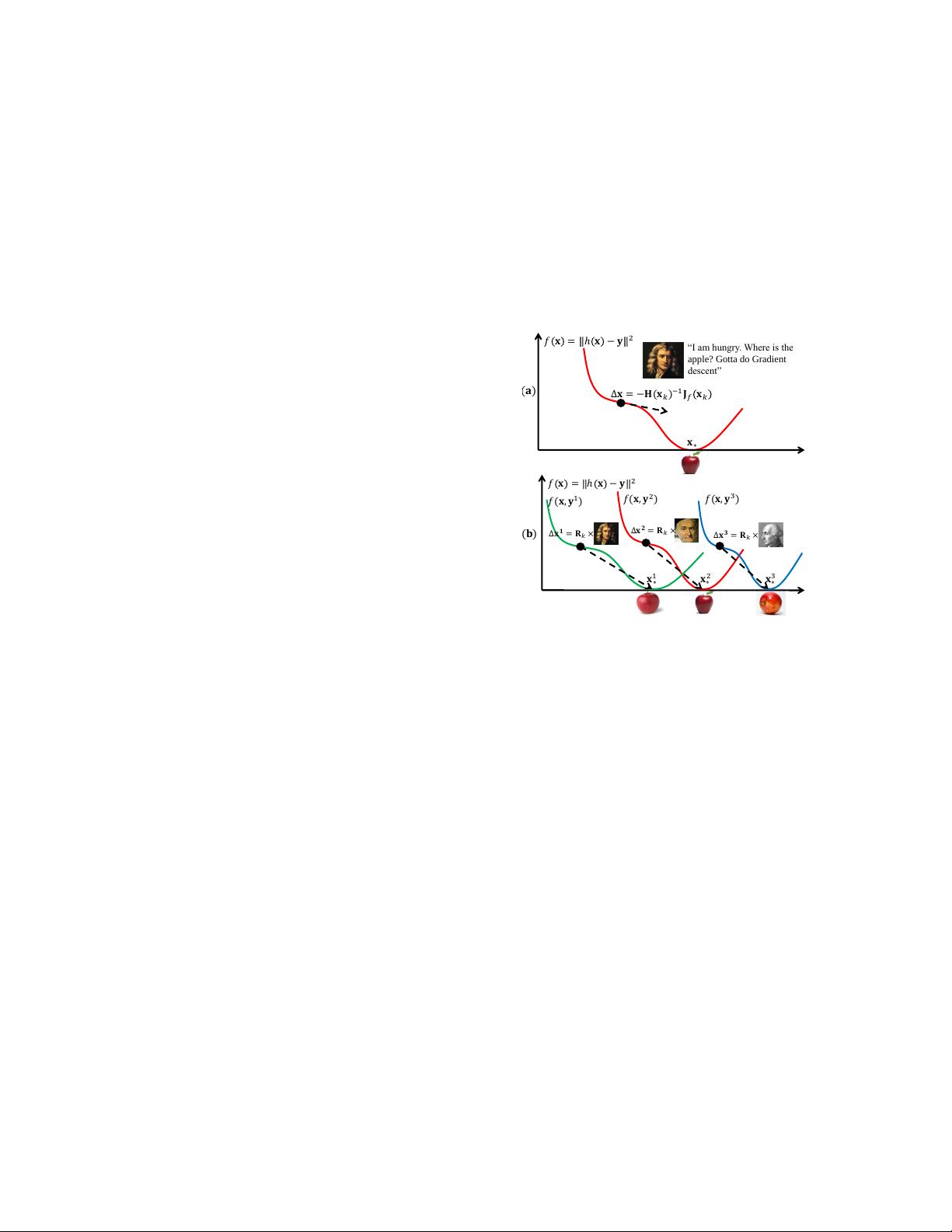

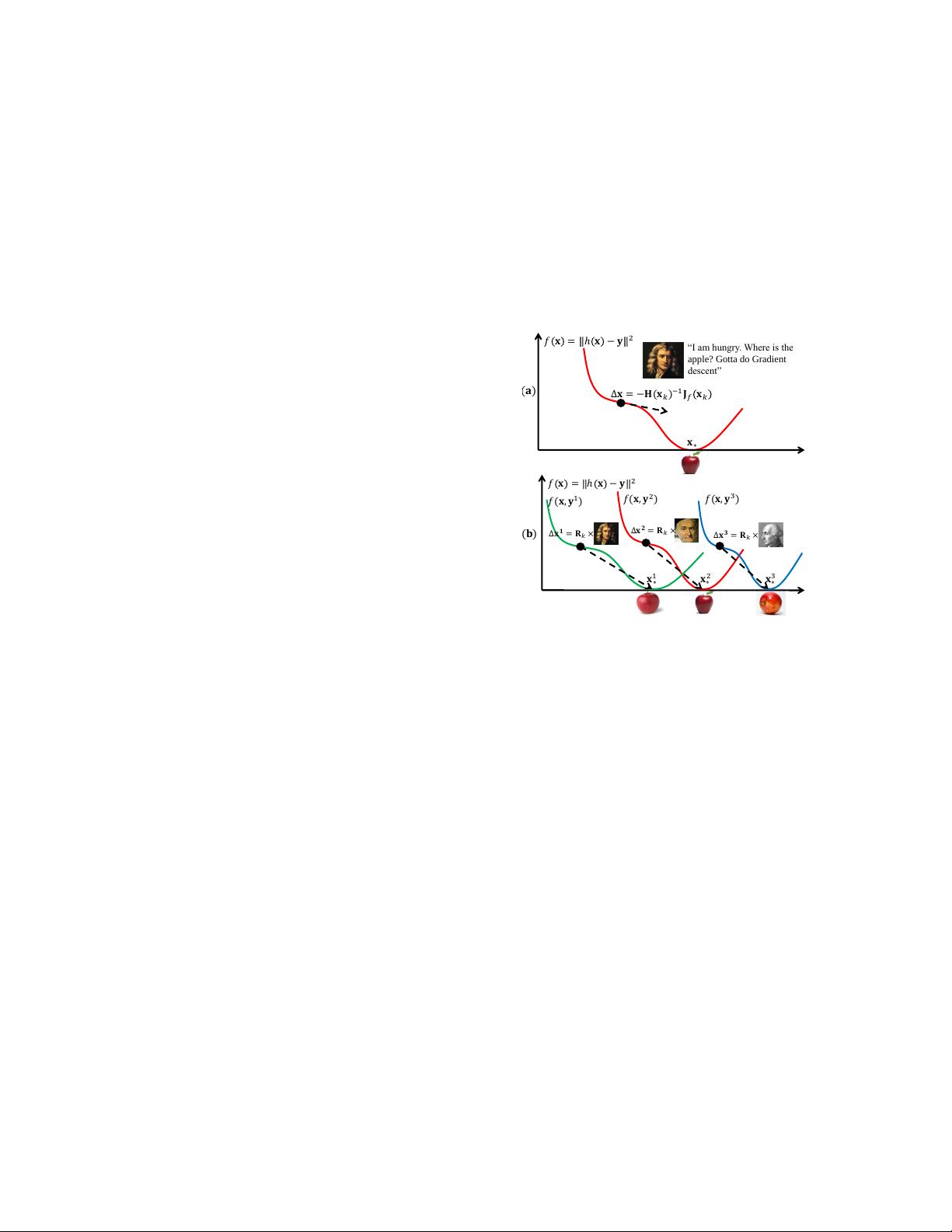

Figure 1: a) Using Newton’s method to minimize $f(\mathbf{x})$. b) SDM learns from training data a set of generic descent directions $\{\mathbf{R}_k\}$. Each parameter update ($\Delta\mathbf{x}^i$) is the product of $\mathbf{R}_k$ and an image-specific component ($\mathbf{y}^i$), illustrated by the 3 great mathematicians. Observe that no Jacobian or Hessian approximation is needed at test time. We dedicate this figure to I. Newton, C. F. Gauss, and J. L. Lagrange for their everlasting impact on today’s sciences.

Despite its many centuries of history, Newton’s method (and its variants) is regarded as a major optimization tool for smooth functions when second derivatives are available. Newton’s method makes the assumption that a smooth function $f(\mathbf{x})$ can be well approximated by a quadratic function in a neighborhood of the minimum. If the Hessian is positive definite, the minimum can be found by solving a system of linear equations. Given an initial estimate $\mathbf{x}_0 \in \Re^{p \times 1}$, Newton’s method creates a sequence of updates as

$$\mathbf{x}_{k+1} = \mathbf{x}_k - \mathbf{H}^{-1}(\mathbf{x}_k)\,\mathbf{J}_f(\mathbf{x}_k), \tag{1}$$

where $\mathbf{H}(\mathbf{x}_k) \in \Re^{p \times p}$ and $\mathbf{J}_f(\mathbf{x}_k) \in \Re^{p \times 1}$ are the Hessian matrix and Jacobian matrix evaluated at $\mathbf{x}_k$. Newton-type methods have two main advantages over competitors. First, when they converge, the convergence rate is quadratic. Second, they are guaranteed to converge provided that the initial estimate is sufficiently close to the minimum.
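As an illustration of the update in Eq. (1), the following is a minimal sketch in Python with NumPy. The test function, the names `newton_minimize`, `grad`, and `hess`, and the stopping tolerance are assumptions made for this example and do not come from the paper. The function is chosen so that its Hessian is positive definite everywhere and its minimum is at the origin.

```python
import numpy as np

def newton_minimize(grad, hess, x0, tol=1e-10, max_iter=50):
    """Iterate x_{k+1} = x_k - H(x_k)^{-1} J_f(x_k) until the step is tiny."""
    x = np.asarray(x0, dtype=float)
    iterates = [x.copy()]
    for _ in range(max_iter):
        # Solve H(x_k) * step = J_f(x_k) instead of forming the inverse.
        step = np.linalg.solve(hess(x), grad(x))
        x = x - step
        iterates.append(x.copy())
        if np.linalg.norm(step) < tol:
            break
    return x, iterates

# Hypothetical test function (not from the paper):
#   f(x) = sum_i (exp(x_i) - x_i) + 0.5 * (x_0 - x_1)^2, minimized at x = 0.
def grad(x):
    coupling = np.array([x[0] - x[1], x[1] - x[0]])
    return np.exp(x) - 1.0 + coupling

def hess(x):
    # diag(exp(x)) is positive definite; the coupling term is positive semidefinite.
    return np.diag(np.exp(x)) + np.array([[1.0, -1.0], [-1.0, 1.0]])

x_star, iterates = newton_minimize(grad, hess, x0=[1.0, -0.5])
errors = [np.linalg.norm(x) for x in iterates]  # distance to the minimum at 0
```

Inspecting `errors` should show the distance to the minimum shrinking slowly at first and then very rapidly once the iterate is close, which is the quadratic convergence behavior described above. Note that every iteration needs both the gradient and the Hessian of the objective.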
